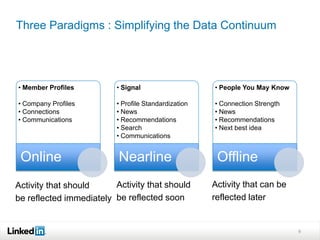

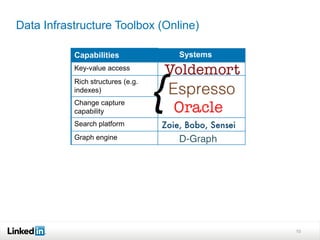

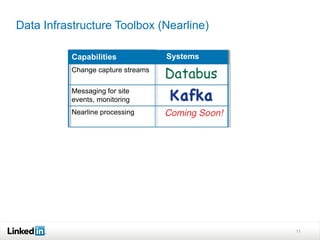

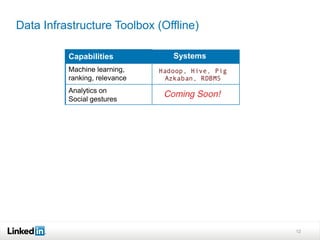

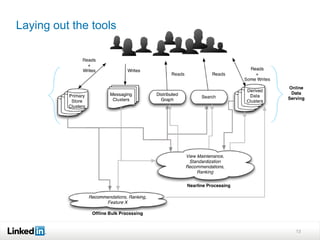

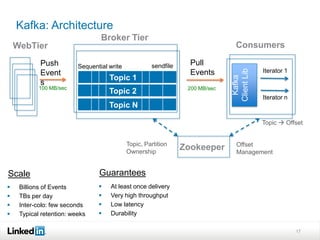

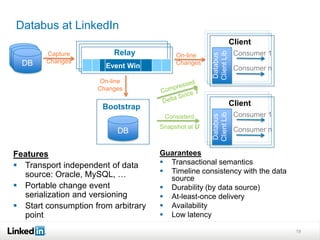

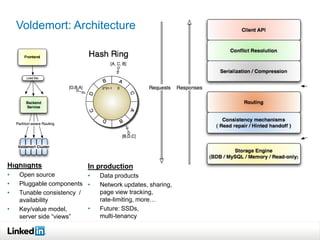

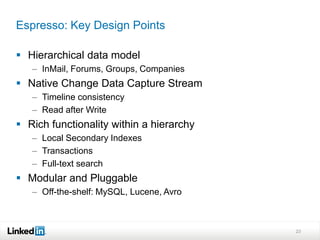

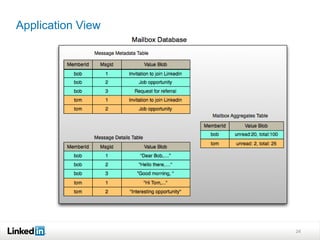

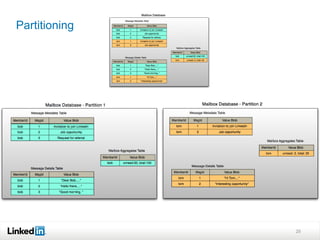

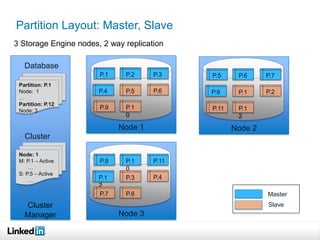

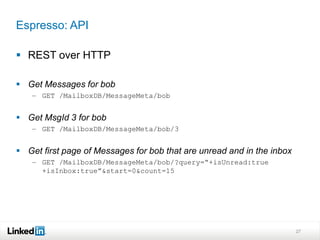

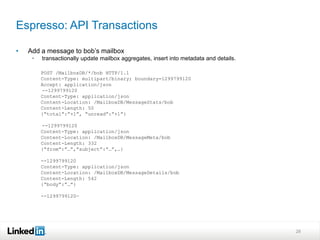

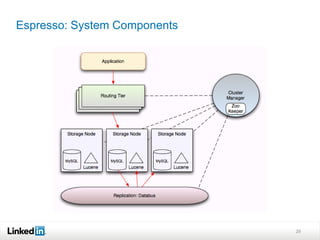

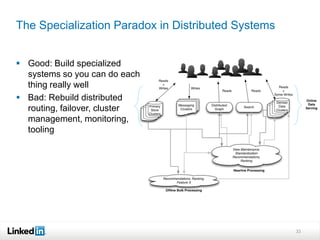

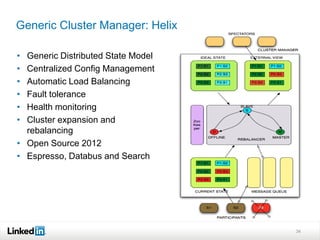

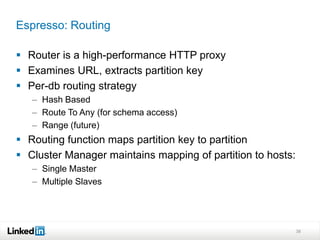

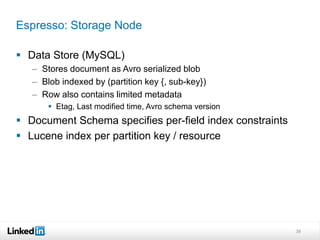

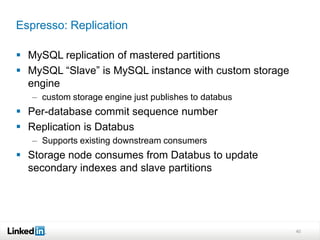

This document describes LinkedIn's data infrastructure and how it handles large volumes of user data across online, nearline, and offline systems. The key systems covered are Kafka for messaging, Databus for change data capture, Voldemort for online key-value storage, and Espresso for indexed, timeline-consistent distributed storage. Espresso also provides a rich data model and APIs through which applications access user data.
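
As a rough illustration of what change data capture with timeline consistency means, the sketch below uses a toy in-memory store. It is not Databus or Espresso code: `PrimaryStore`, `Replica`, `ChangeEvent`, and the `scn` sequence number are hypothetical names chosen for this example. The only idea it is meant to show is that a downstream consumer applies changes in the same order the source committed them, so it passes through the same sequence of states.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    scn: int               # hypothetical commit sequence number
    key: str
    value: Optional[str]   # None marks a delete


@dataclass
class PrimaryStore:
    """Toy source of truth that records every write in a commit-ordered log."""
    rows: Dict[str, str] = field(default_factory=dict)
    log: List[ChangeEvent] = field(default_factory=list)

    def put(self, key: str, value: str) -> None:
        self.rows[key] = value
        self.log.append(ChangeEvent(len(self.log) + 1, key, value))

    def delete(self, key: str) -> None:
        self.rows.pop(key, None)
        self.log.append(ChangeEvent(len(self.log) + 1, key, None))

    def changes_since(self, scn: int) -> List[ChangeEvent]:
        # Events are returned in commit order, which is what keeps
        # consumers on the same timeline as the source.
        return [event for event in self.log if event.scn > scn]


@dataclass
class Replica:
    """Toy downstream consumer that remembers the last change it applied."""
    rows: Dict[str, str] = field(default_factory=dict)
    last_scn: int = 0

    def catch_up(self, source: PrimaryStore) -> None:
        for event in source.changes_since(self.last_scn):
            if event.value is None:
                self.rows.pop(event.key, None)
            else:
                self.rows[event.key] = event.value
            self.last_scn = event.scn


primary = PrimaryStore()
primary.put("member:1", "Alice")
primary.put("member:2", "Bob")

replica = Replica()
replica.catch_up(primary)       # replica has now seen changes 1 and 2

primary.delete("member:2")
primary.put("member:1", "Alice B.")
replica.catch_up(primary)       # resumes after change 2, applies 3 and 4
```

Because the replica tracks the last sequence number it applied, it can disconnect and later resume from that point without replaying or skipping changes. A real system would also need durable logs, partitioning, and failure handling, none of which this sketch attempts.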