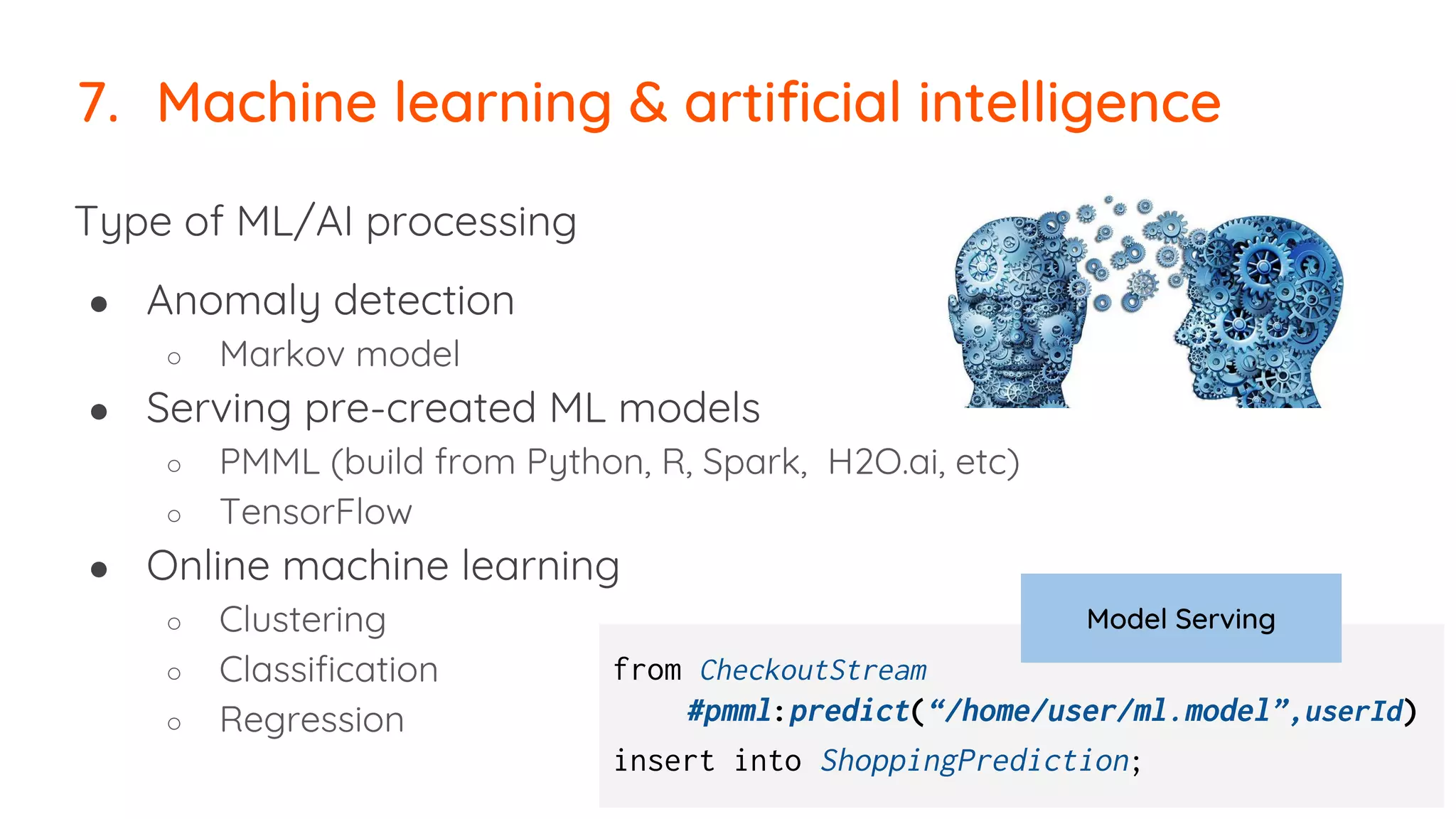

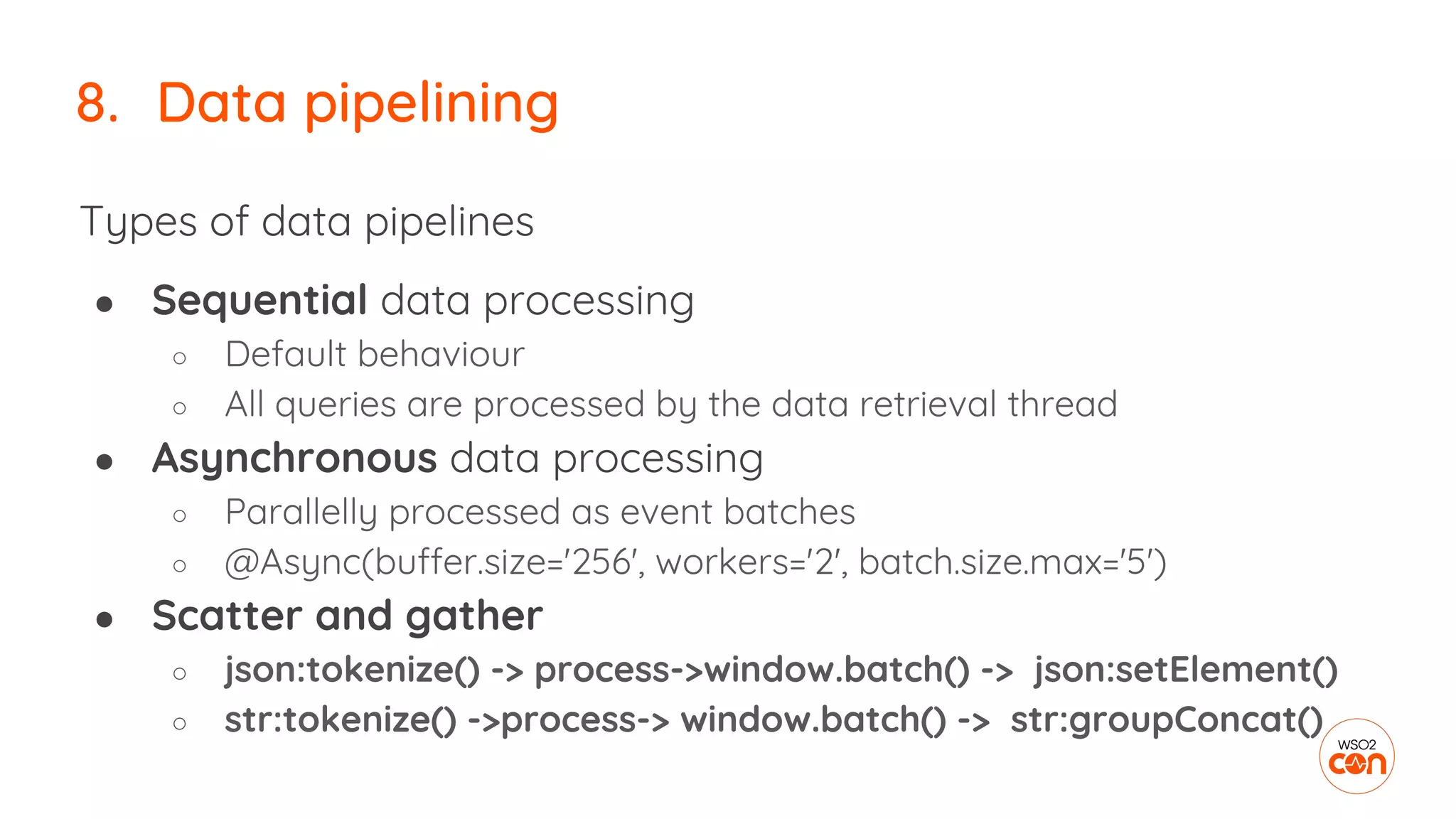

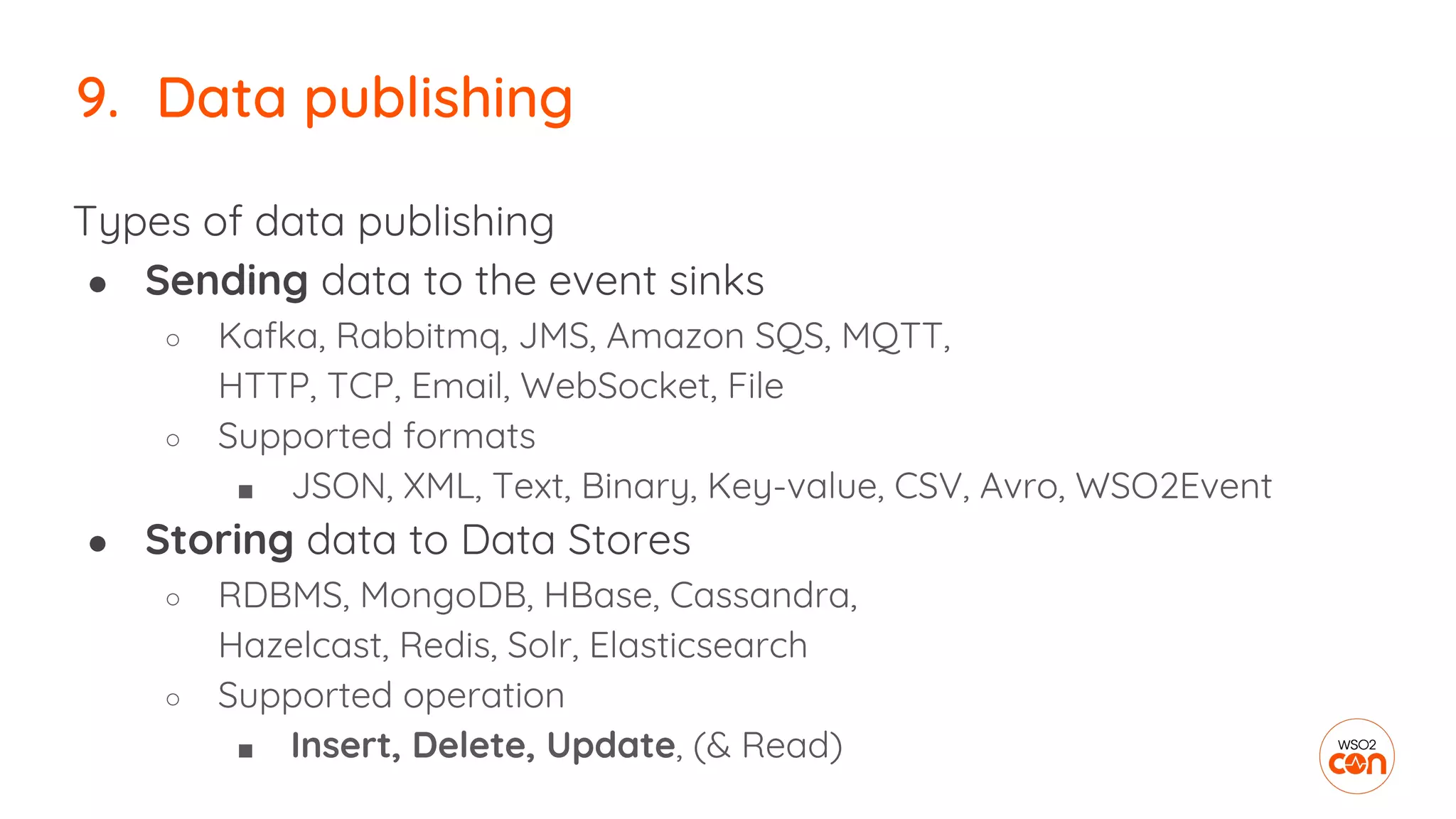

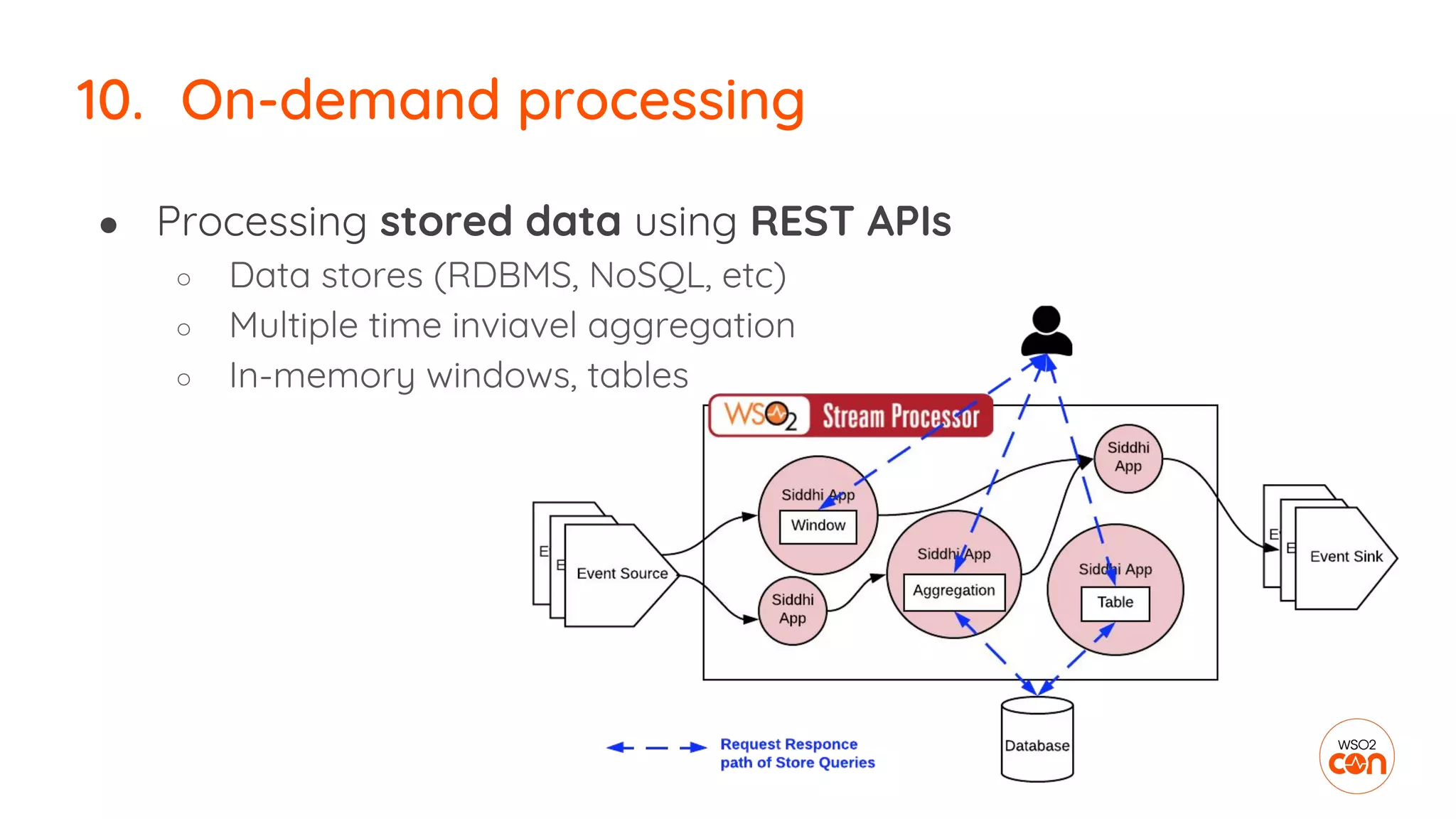

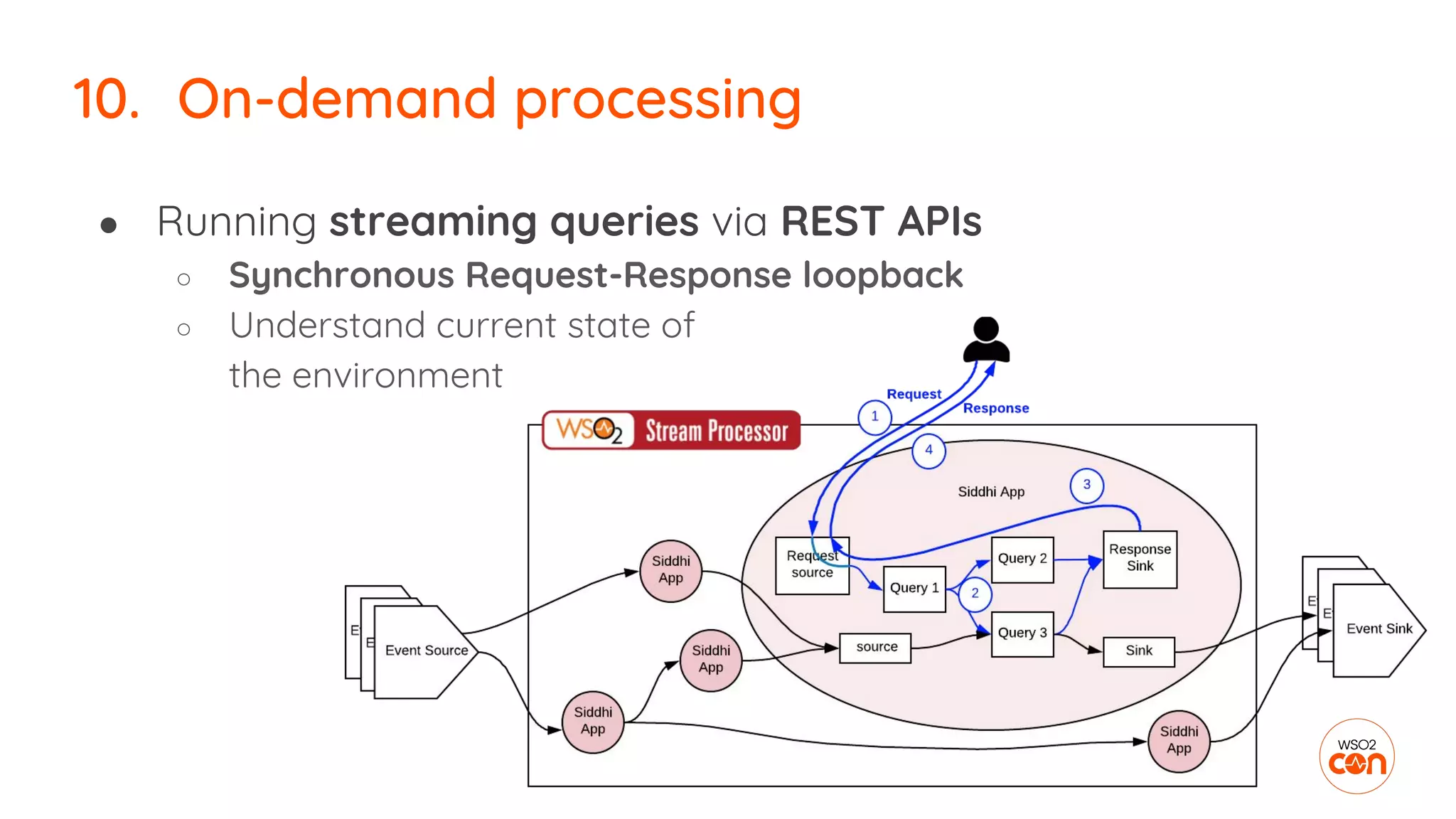

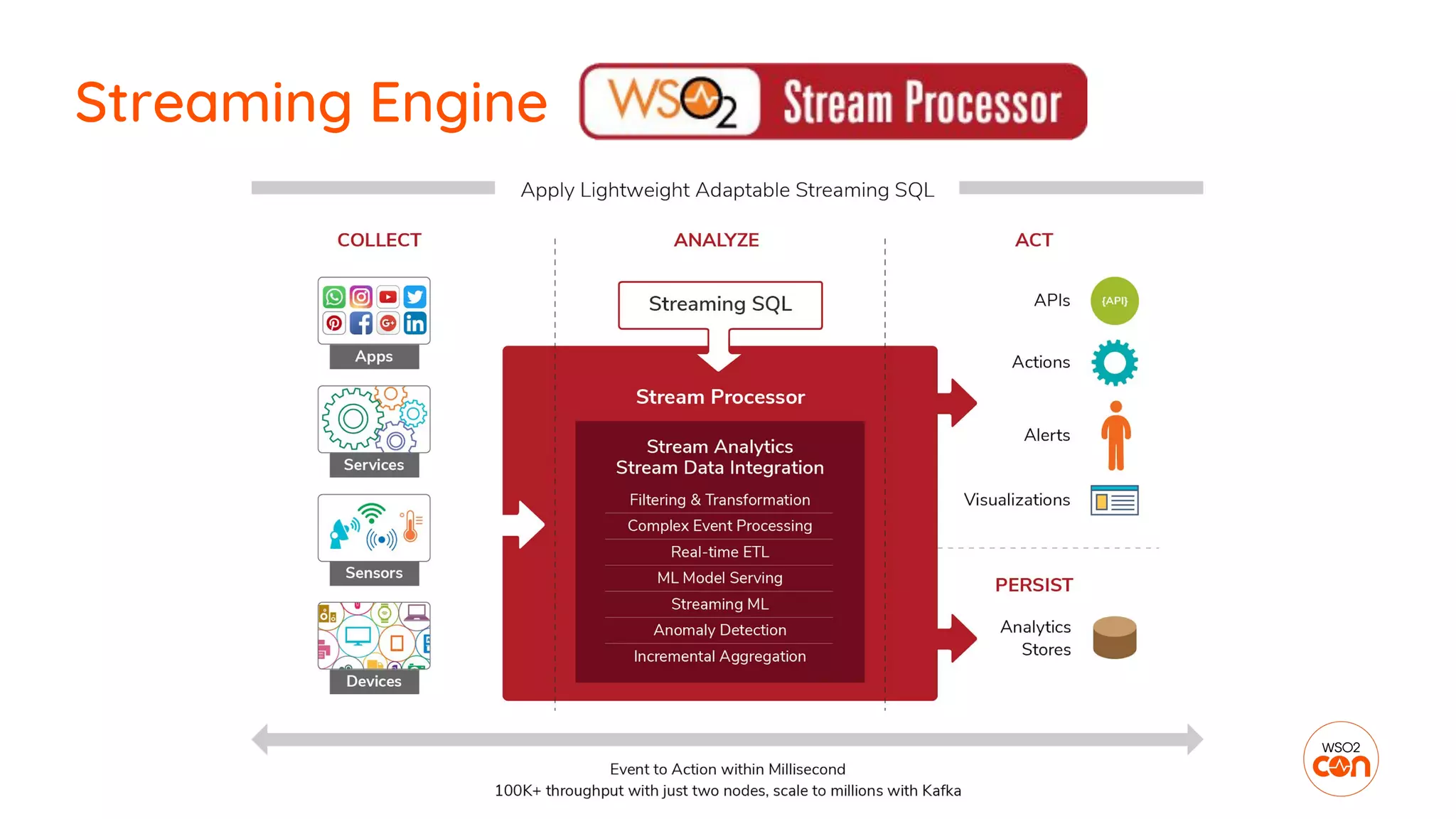

The document outlines strategies for building streaming applications with WSO2 and explains why streaming patterns matter for real-time data processing across a range of business scenarios. It walks through eleven streaming patterns, including data collection, cleansing, transformation, enrichment, summarization, rule processing, and machine learning integration. It also covers deployment and monitoring, and shows how WSO2 Stream Processor supports developing and managing these applications.

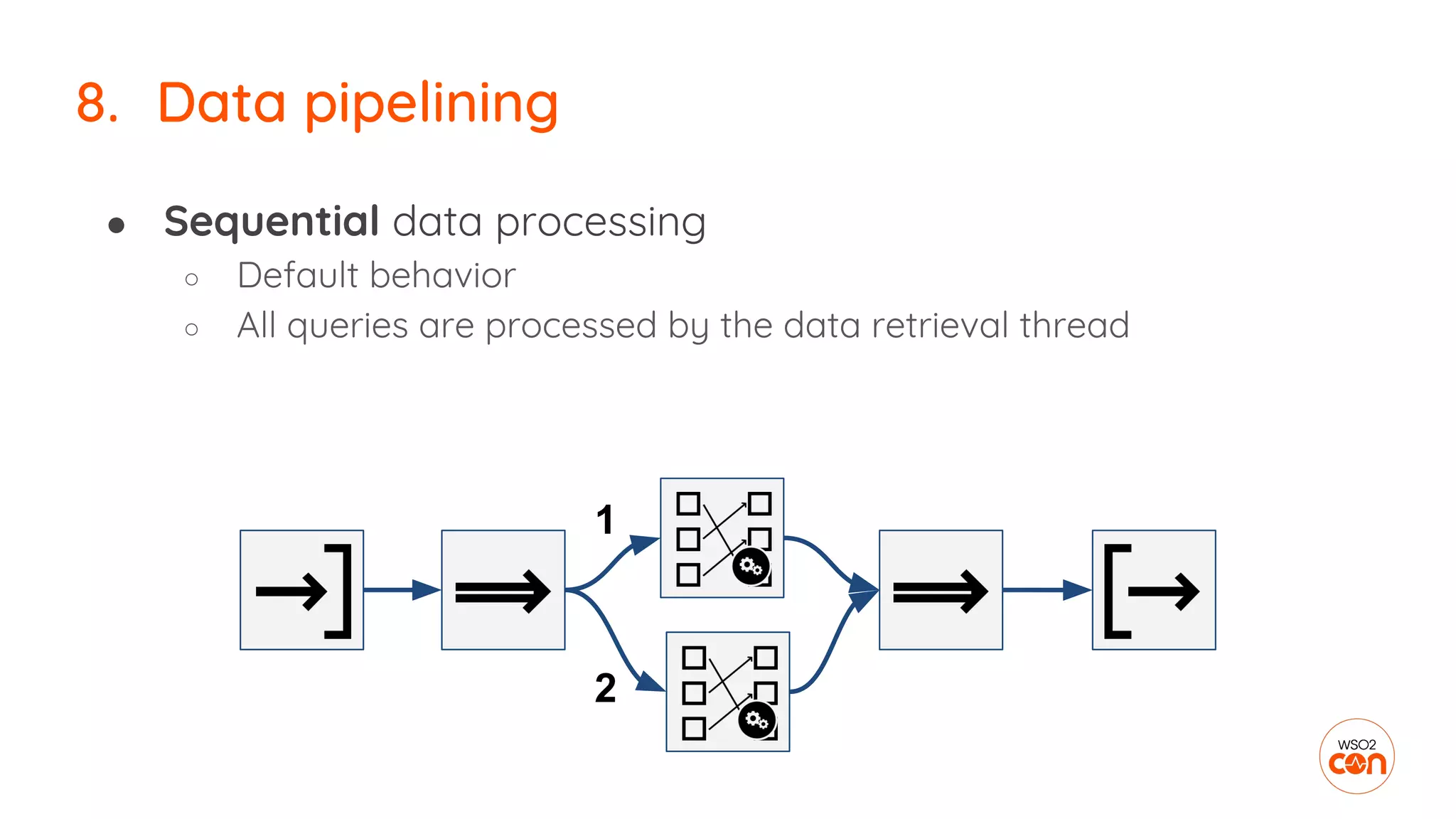

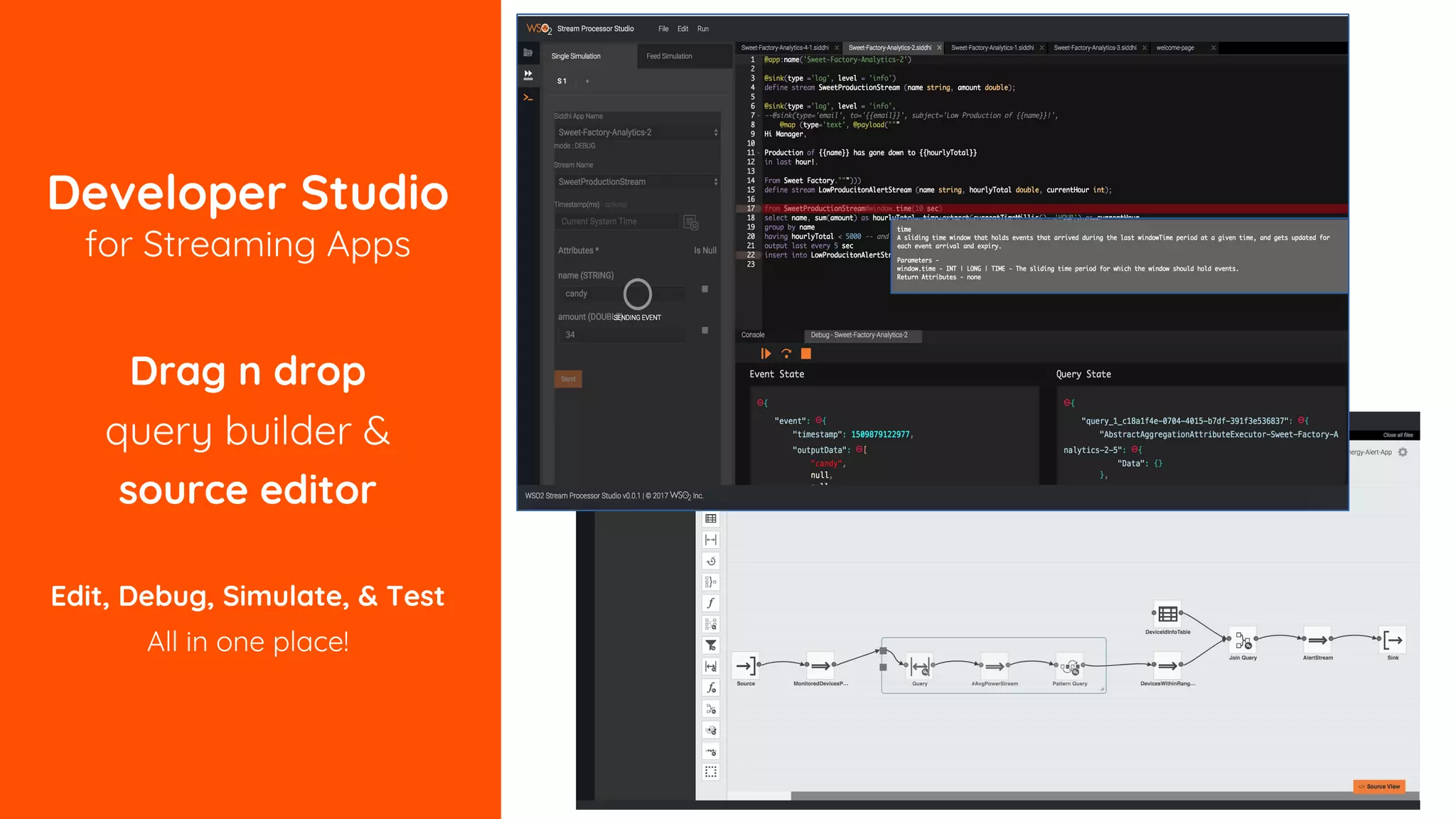

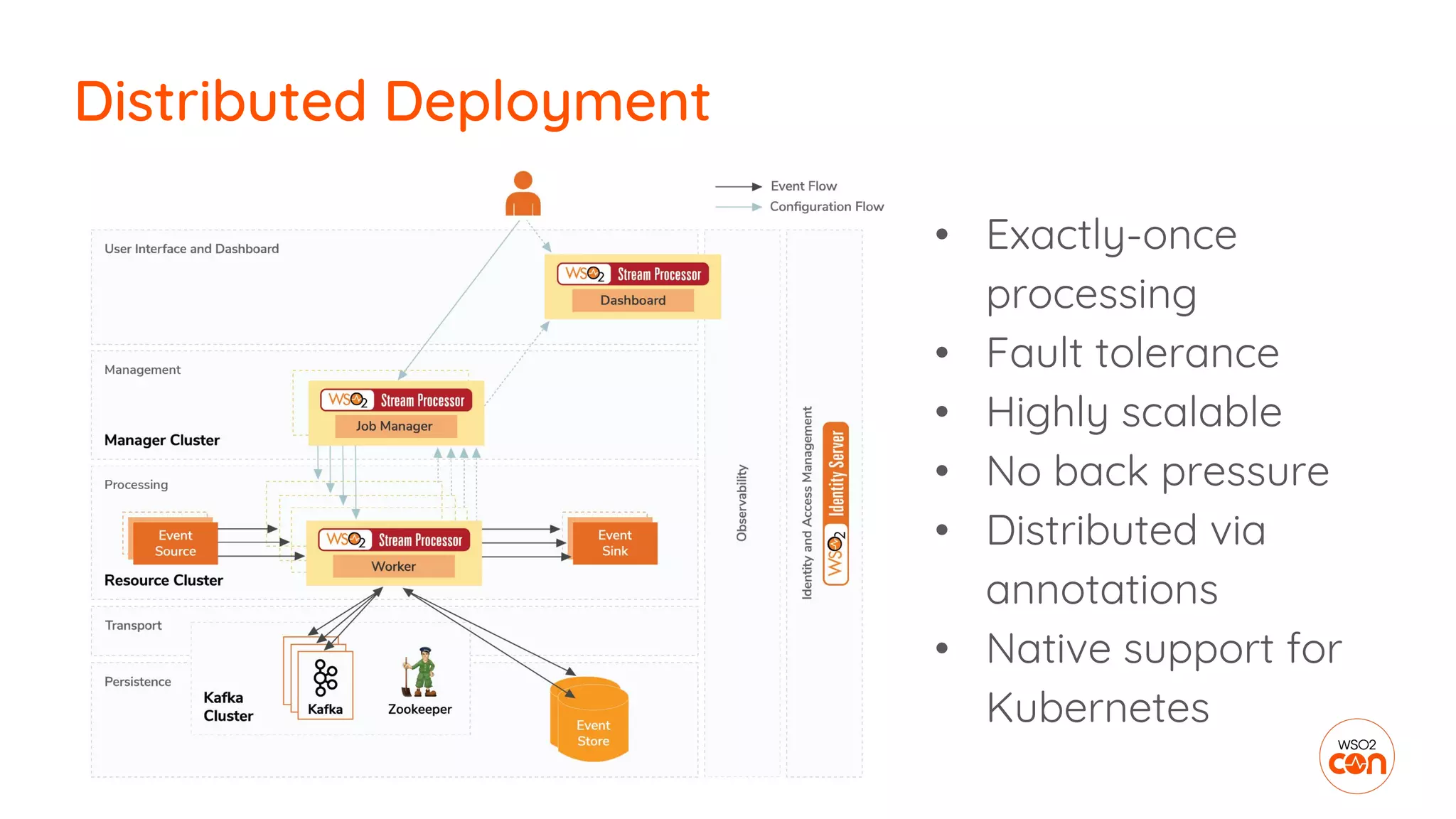

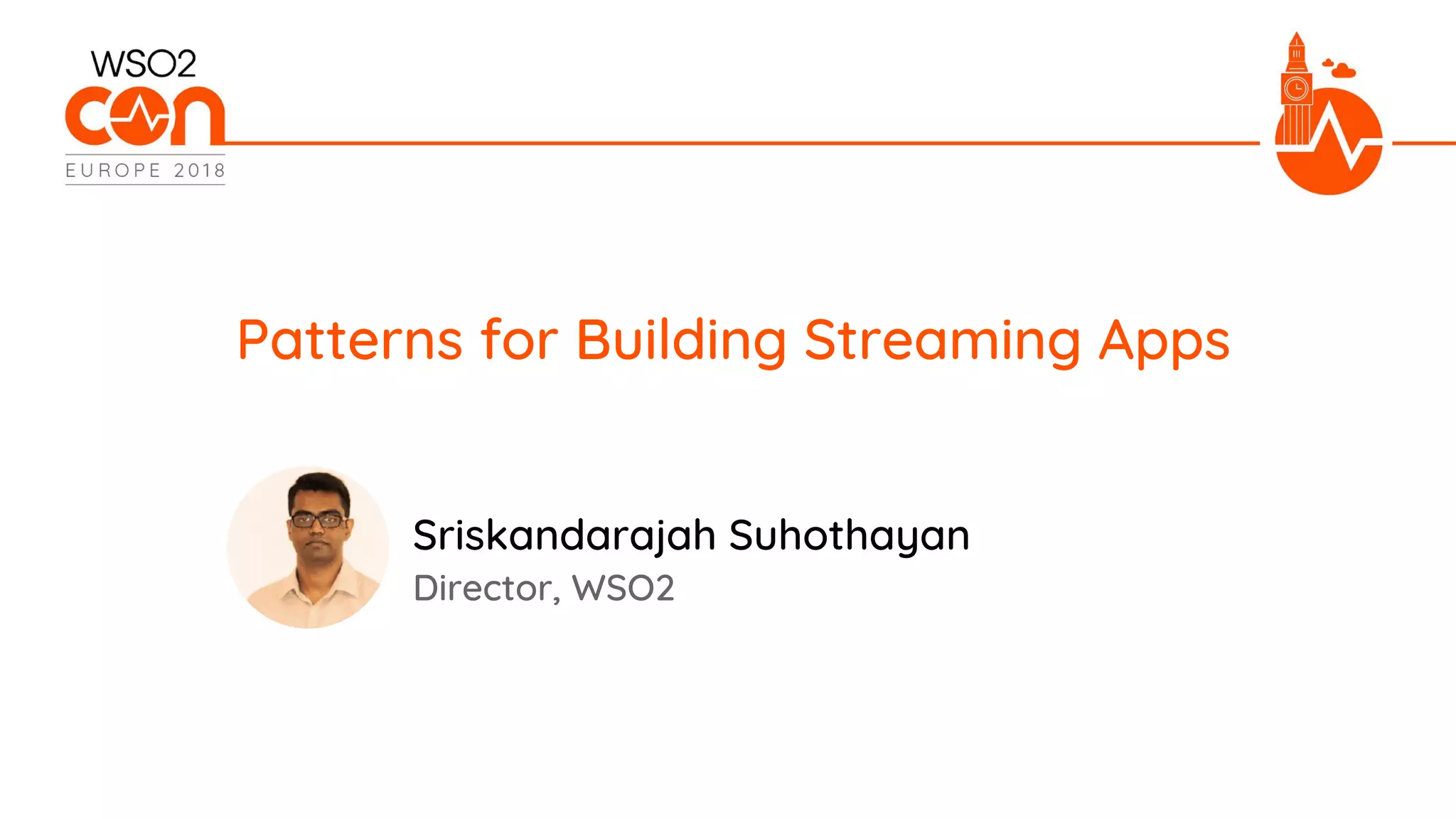

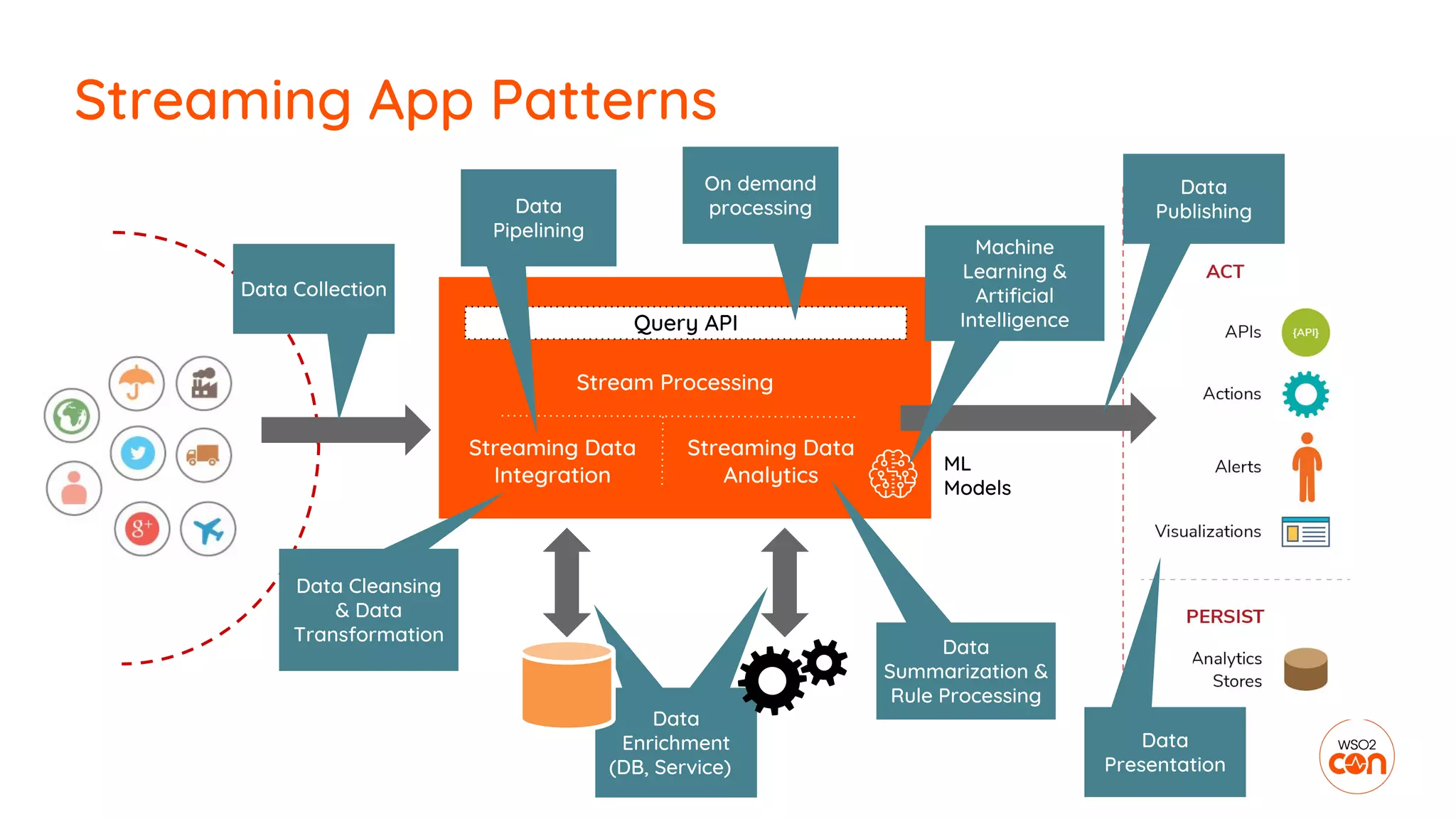

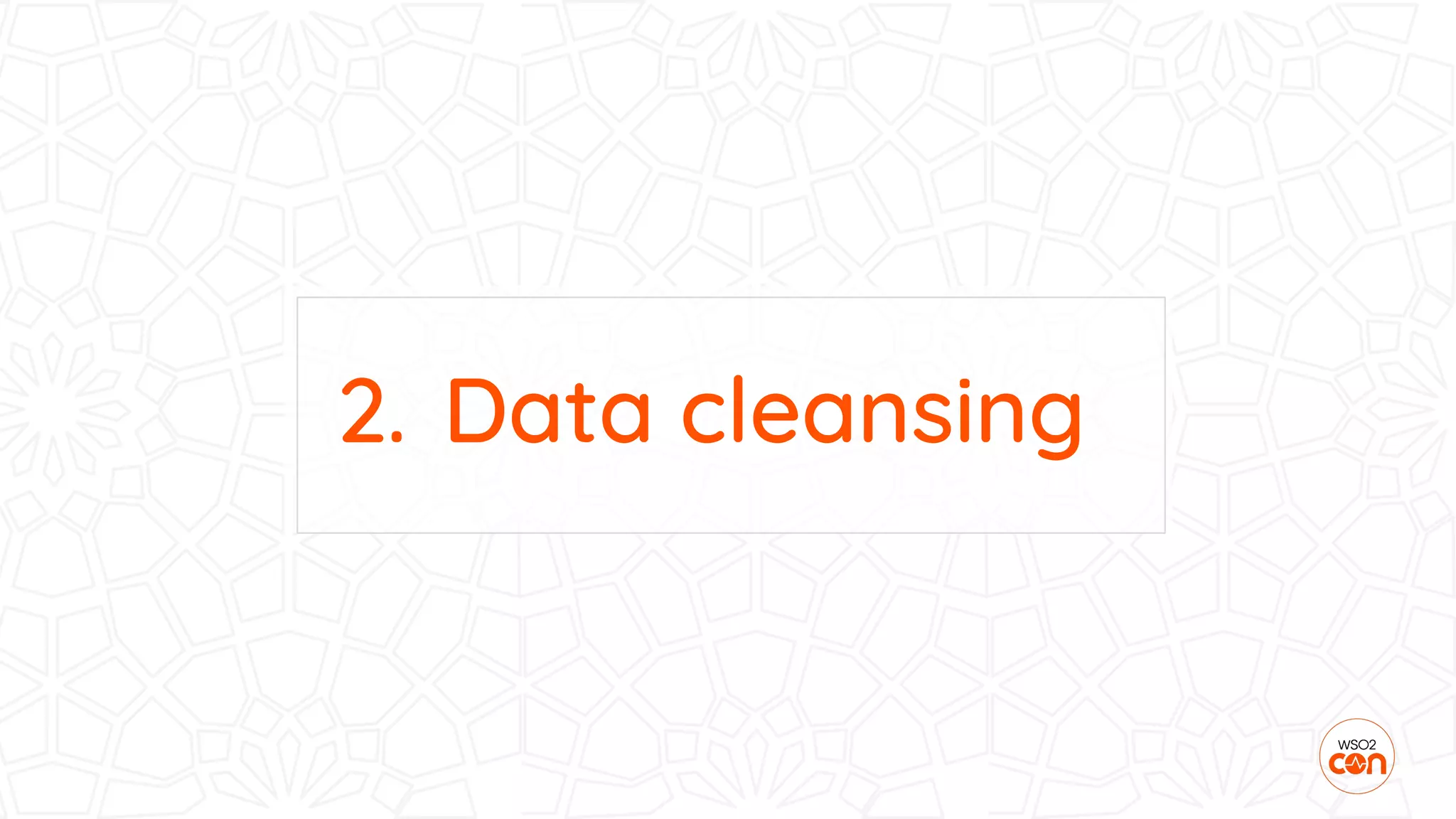

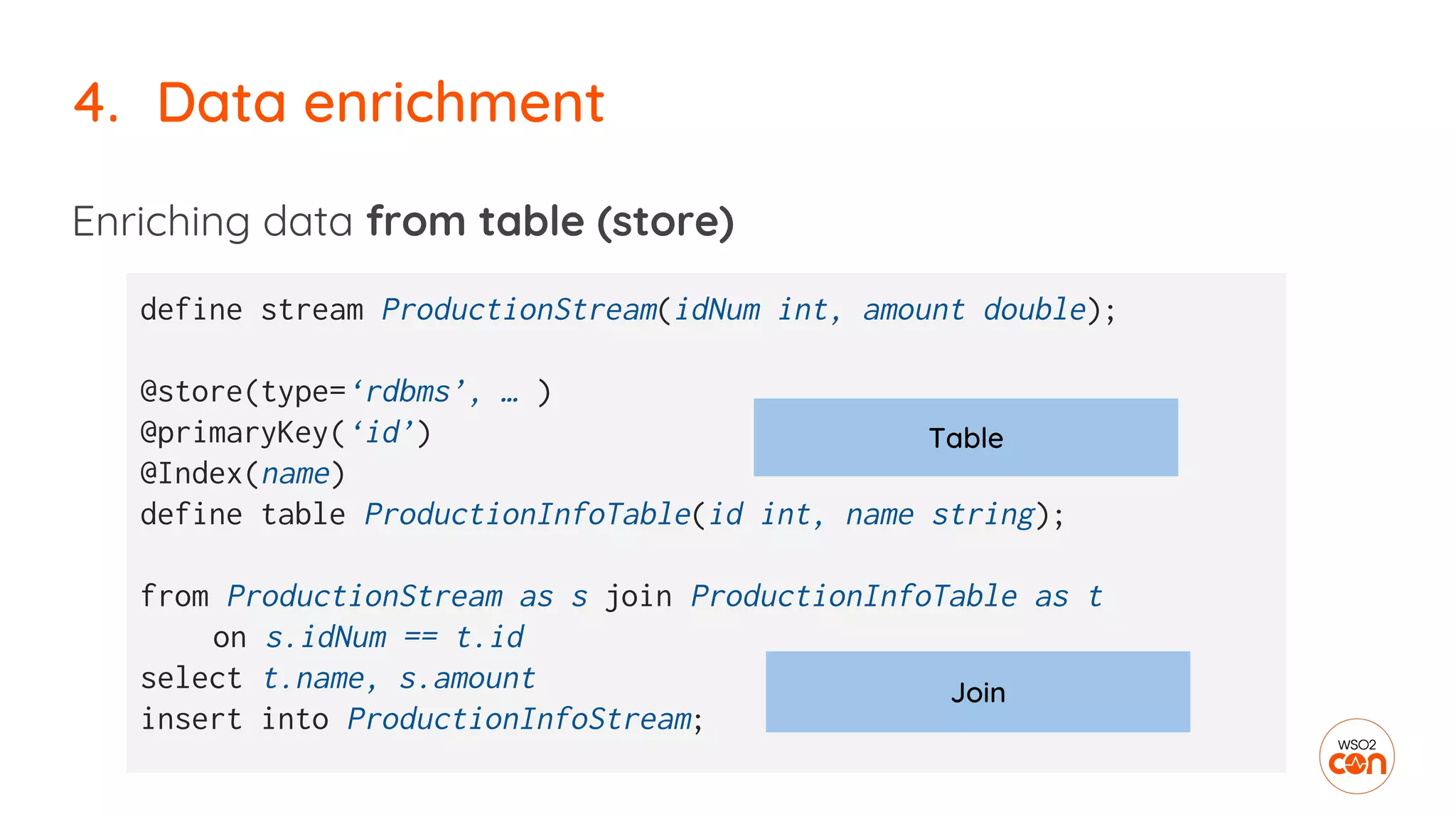

![2. Data cleansing](https://image.slidesharecdn.com/3-181113092919/75/WSO2Con-EU-2018-Patterns-for-Building-Streaming-Apps-15-2048.jpg)

**2. Data cleansing**

Types of data cleansing:

- Filtering
  - value ranges
  - string matching
  - regex
- Setting defaults
  - null checks
  - if-then-else clauses

```
define stream ProductionStream (name string, amount double);

-- keep only "cake" events and replace negative amounts with 0.0
from ProductionStream[name == "cake"]
select name, ifThenElse(amount < 0, 0.0, amount) as amount
insert into CleansedProductionStream;
```
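To make the semantics of the cleansing query on this slide concrete, here is a plain-Python sketch of the same filter-then-default logic. It is not WSO2 Stream Processor code: the `Production` record and the `cleanse` function are hypothetical names, and the `None` check is an assumed stand-in for the "null checks" default listed on the slide.

```python
from typing import Iterable, Iterator, NamedTuple, Optional


class Production(NamedTuple):
    """One event on the hypothetical ProductionStream: (name, amount)."""
    name: str
    amount: Optional[float]


def cleanse(events: Iterable[Production], product: str = "cake") -> Iterator[Production]:
    """Keep only events for `product`, then default missing or negative amounts to 0.0."""
    for event in events:
        # Filtering: string match on the name attribute, like [name == "cake"].
        if event.name != product:
            continue
        # Setting defaults: a null check plus ifThenElse(amount < 0, 0.0, amount).
        if event.amount is None or event.amount < 0:
            amount = 0.0
        else:
            amount = event.amount
        yield Production(event.name, amount)


stream = [
    Production("cake", 12.5),
    Production("bread", 3.0),
    Production("cake", -4.0),
    Production("cake", None),
]
for cleaned in cleanse(stream):
    print(cleaned)
```

Because `cleanse` is a generator, it processes one event at a time and never buffers the stream, which mirrors how a per-event filter query behaves.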

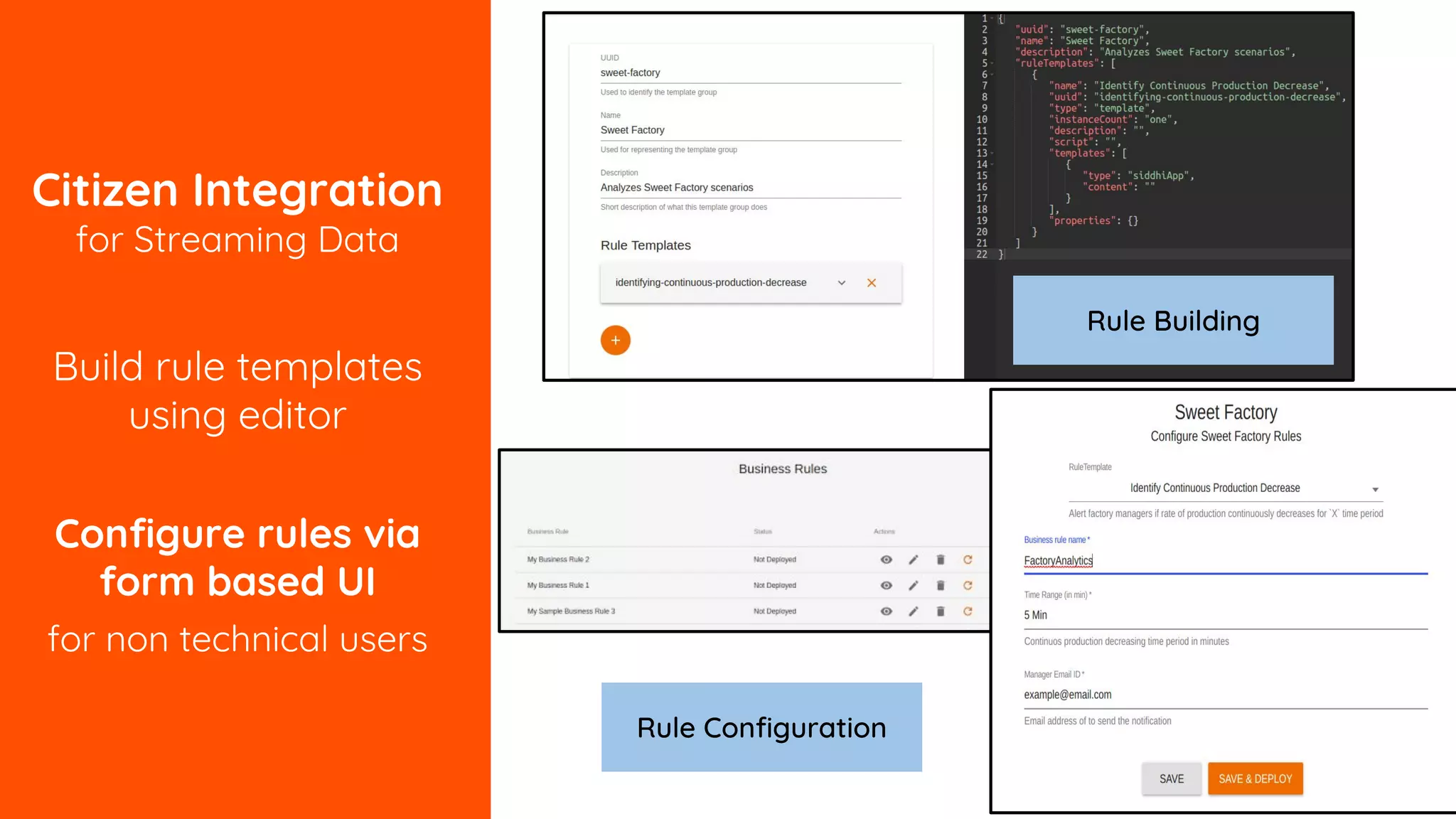

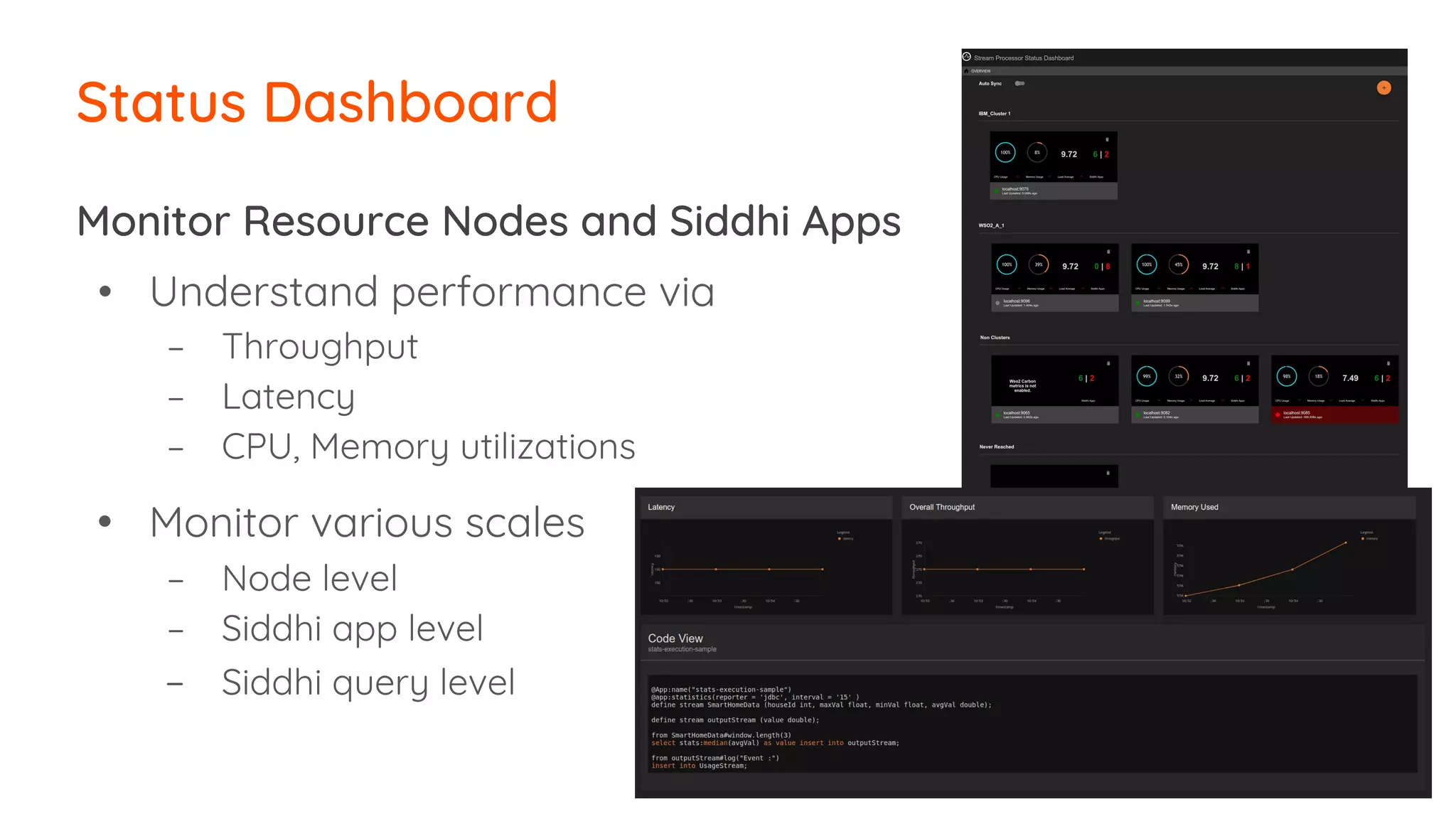

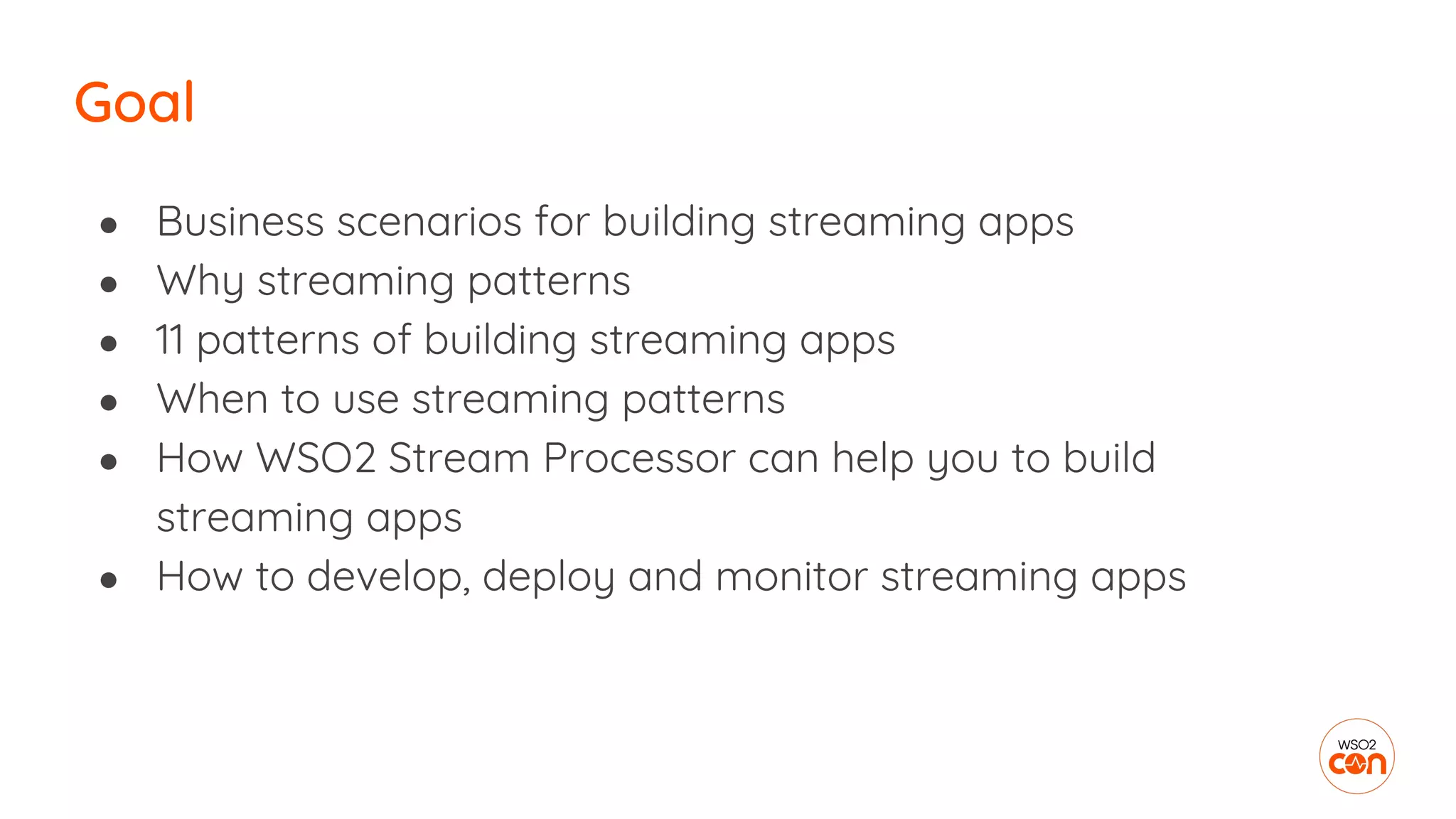

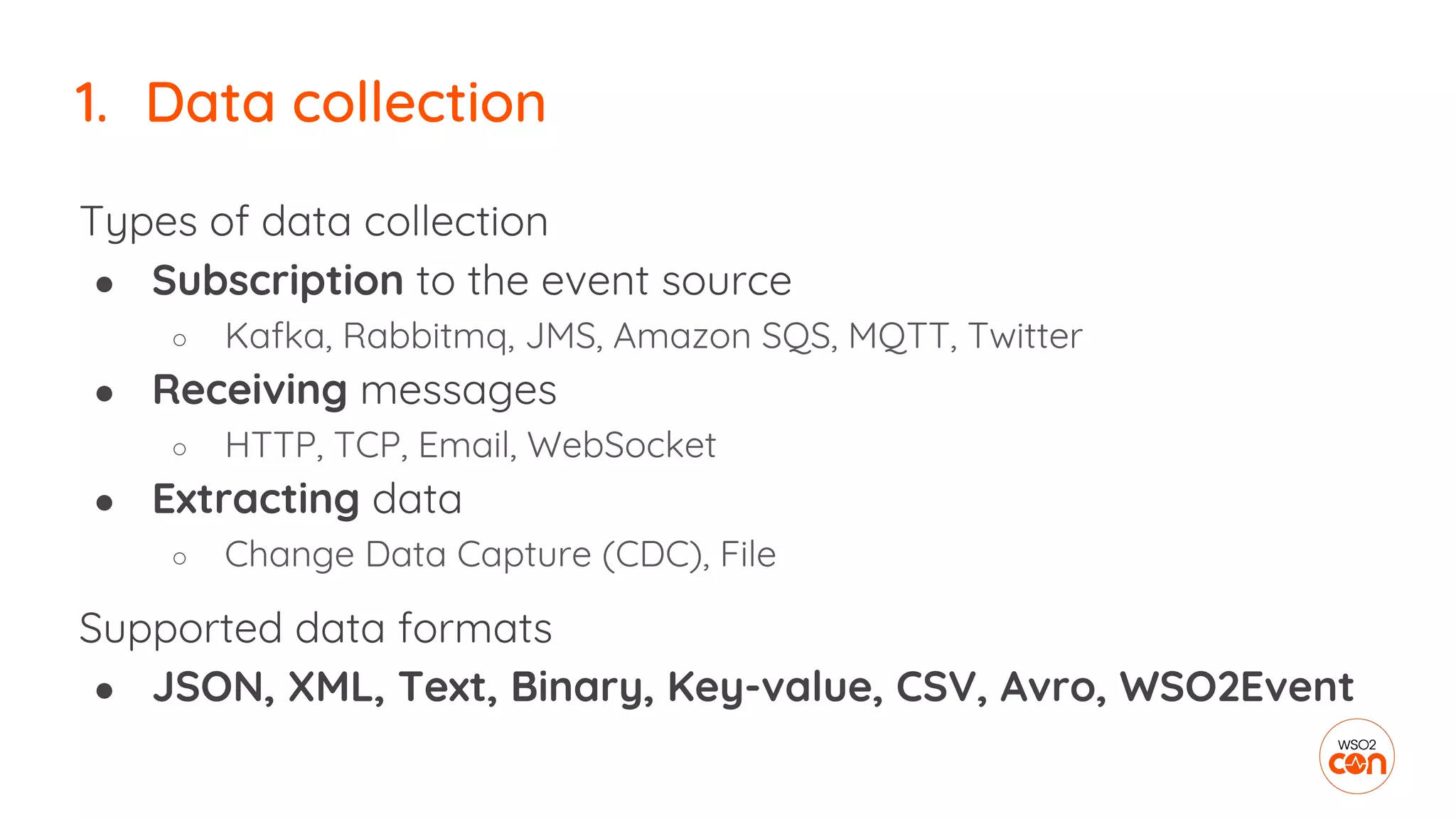

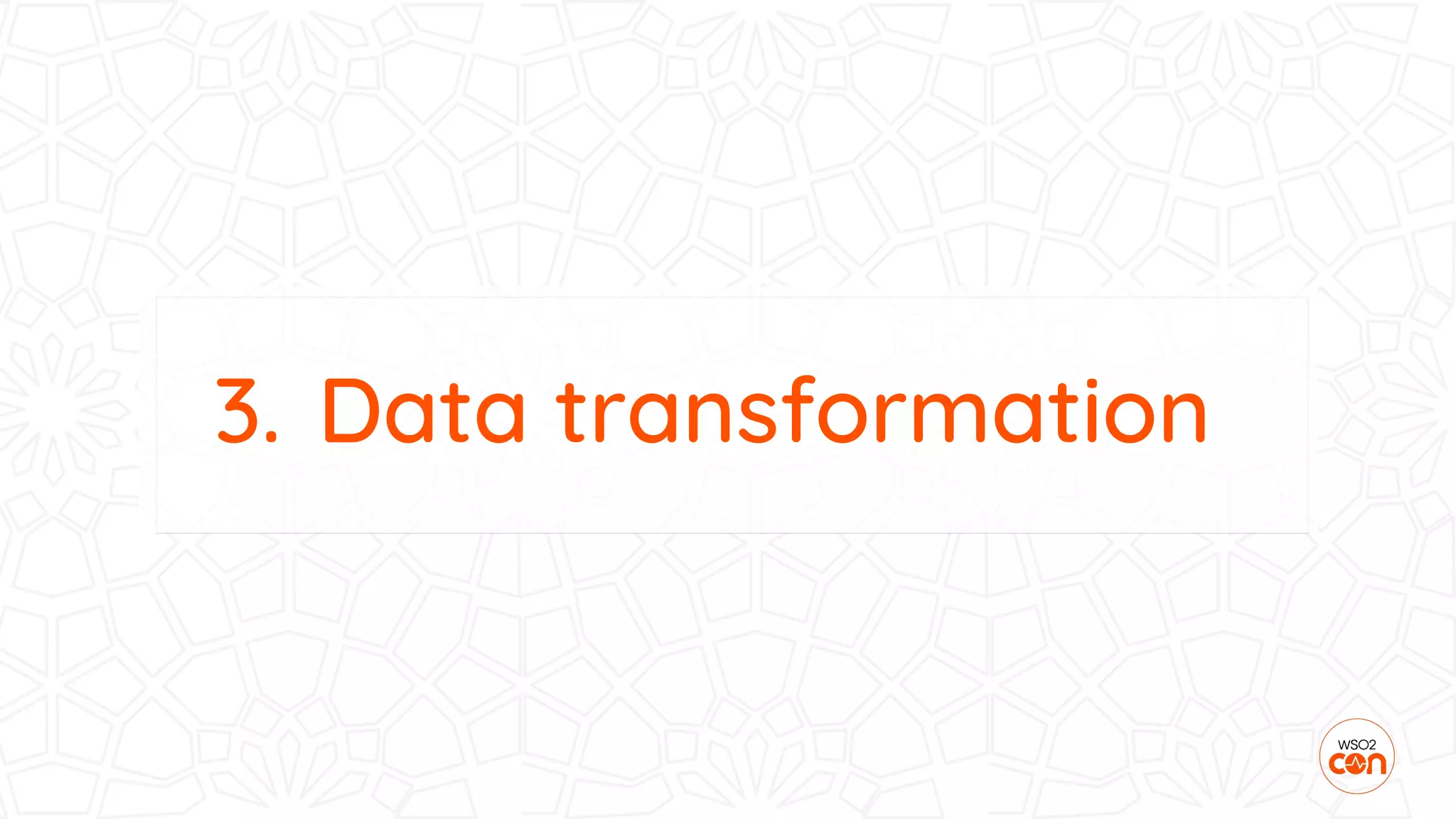

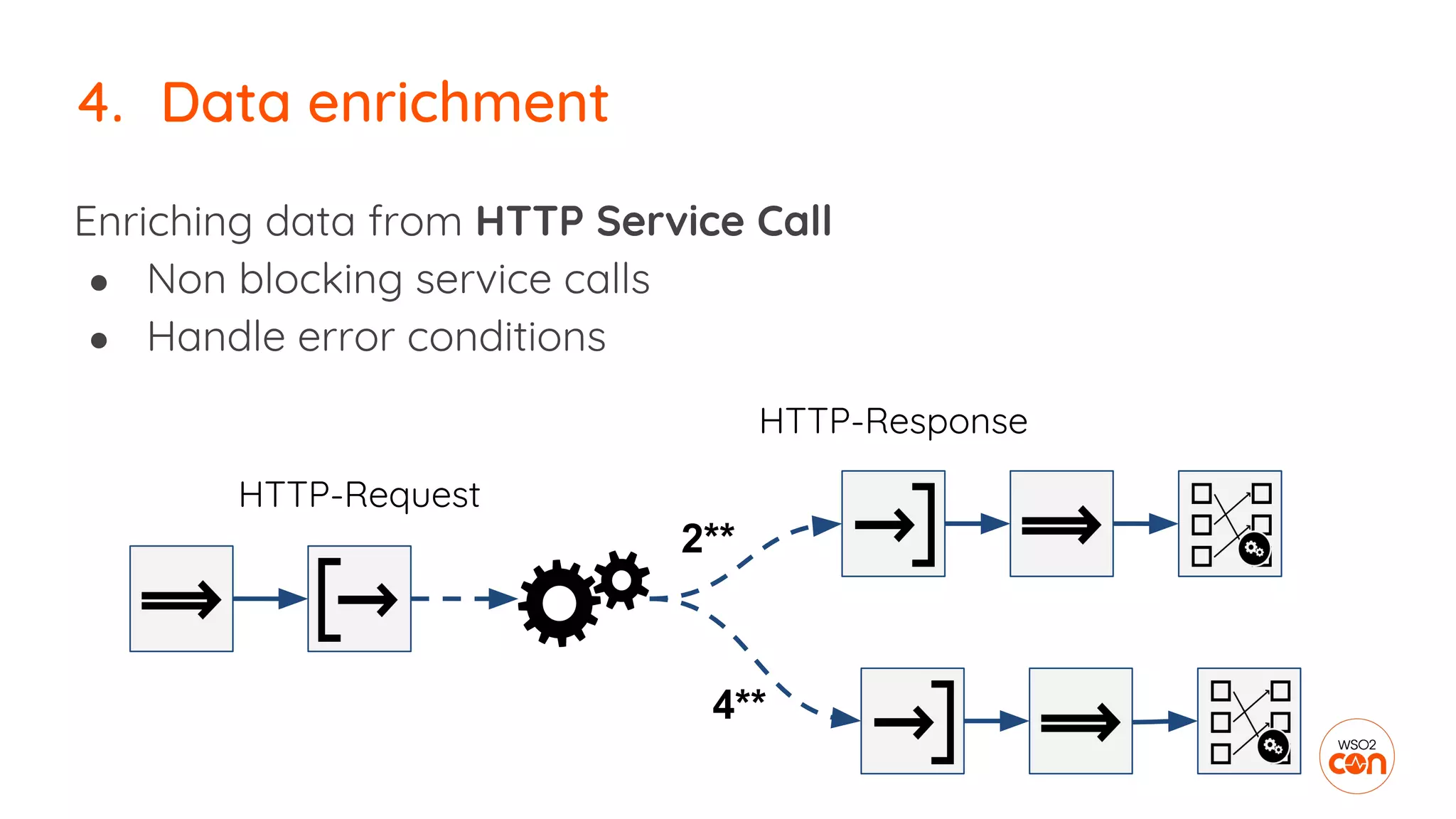

![3. Data transformation](https://image.slidesharecdn.com/3-181113092919/75/WSO2Con-EU-2018-Patterns-for-Building-Streaming-Apps-17-2048.jpg)

**3. Data transformation**

The Stream Processor's internal data type is the tuple: an `Array[]` containing values of type `string`, `int`, `float`, `long`, `double`, `bool`, or `object`.

Incoming messages in JSON, XML, Text, Binary, Key-value, CSV, Avro, or WSO2Event format are mapped to tuples for processing, and outgoing tuples are mapped back to any of the same formats.
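The slide describes events as tuples that are mapped in from, and back out to, formats such as JSON and CSV. A minimal plain-Python sketch of that round trip follows, assuming a hypothetical two-attribute schema (`name`, `amount`) borrowed from the `ProductionStream` example: a JSON message is read into a tuple in attribute order, and the tuple is written out as one CSV line. The function names are illustrative only; the Stream Processor performs these conversions through its own mapping configuration, which is not modeled here.

```python
import csv
import io
import json
from typing import Sequence, Tuple

# Hypothetical schema, in definition order, mirroring
# `define stream ProductionStream (name string, amount double);`
SCHEMA: Sequence[str] = ("name", "amount")


def json_to_tuple(message: str, schema: Sequence[str] = SCHEMA) -> Tuple:
    """Input side: parse a JSON object and order its fields by the schema."""
    payload = json.loads(message)
    return tuple(payload[attribute] for attribute in schema)


def tuple_to_csv(event: Tuple) -> str:
    """Output side: write one tuple as a single CSV line (no line terminator)."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="").writerow(event)
    return buffer.getvalue()


event = json_to_tuple('{"amount": 12.5, "name": "cake"}')
print(event)                # ('cake', 12.5)
print(tuple_to_csv(event))  # cake,12.5
```

Ordering by the schema, rather than by the JSON key order, is what makes a tuple usable: downstream queries address attributes by position in the stream definition, so every input format has to land in the same layout.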

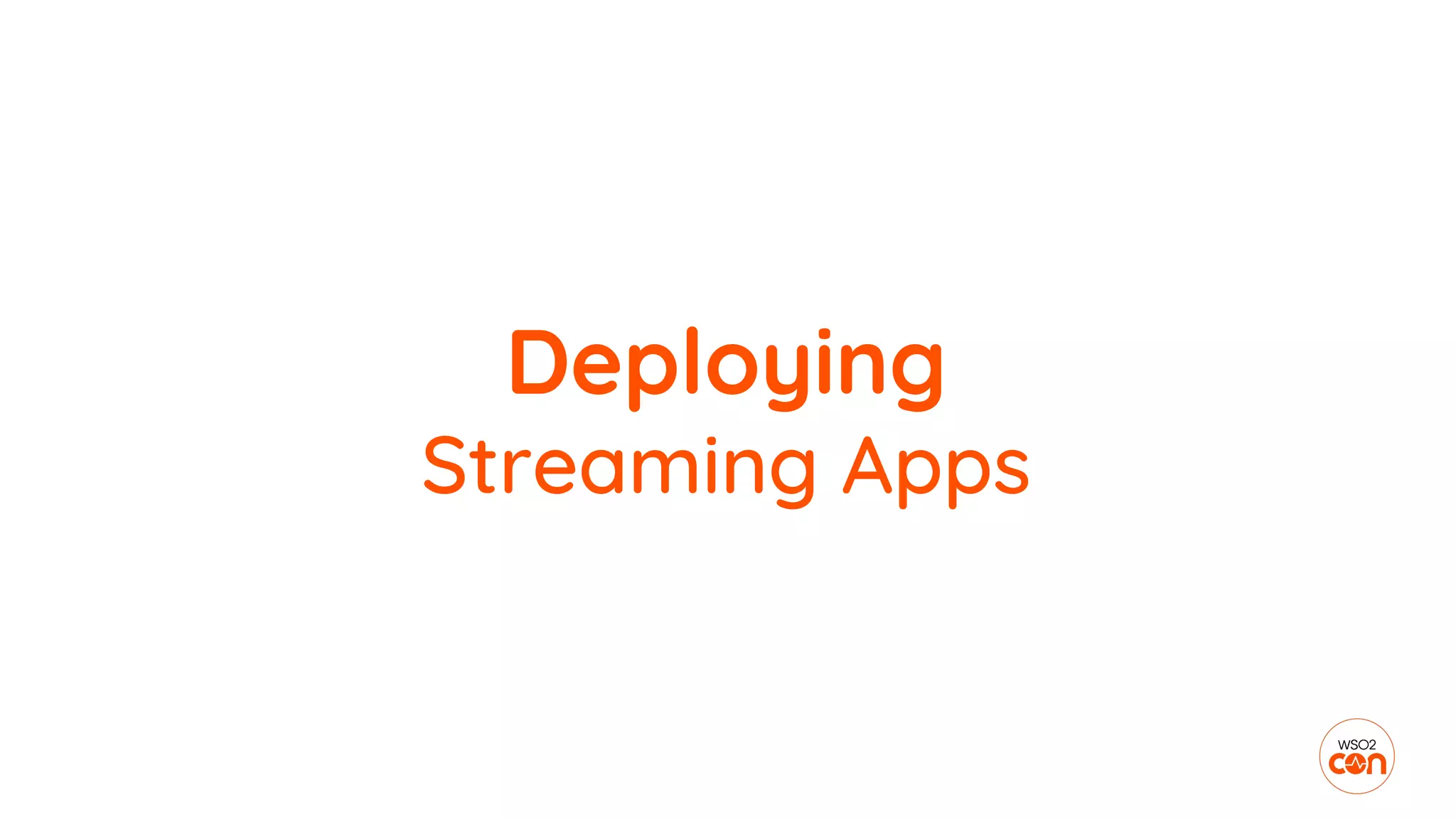

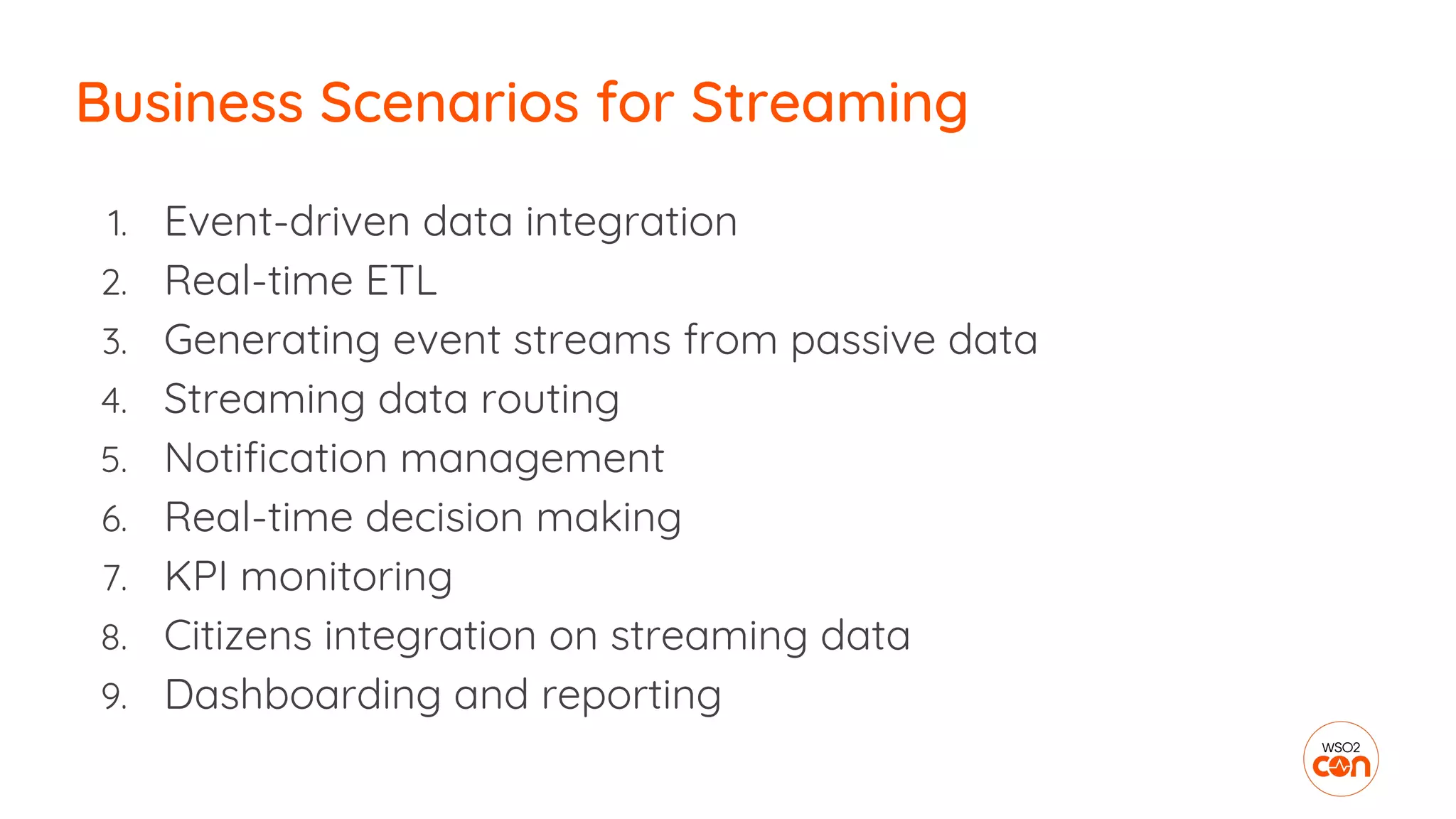

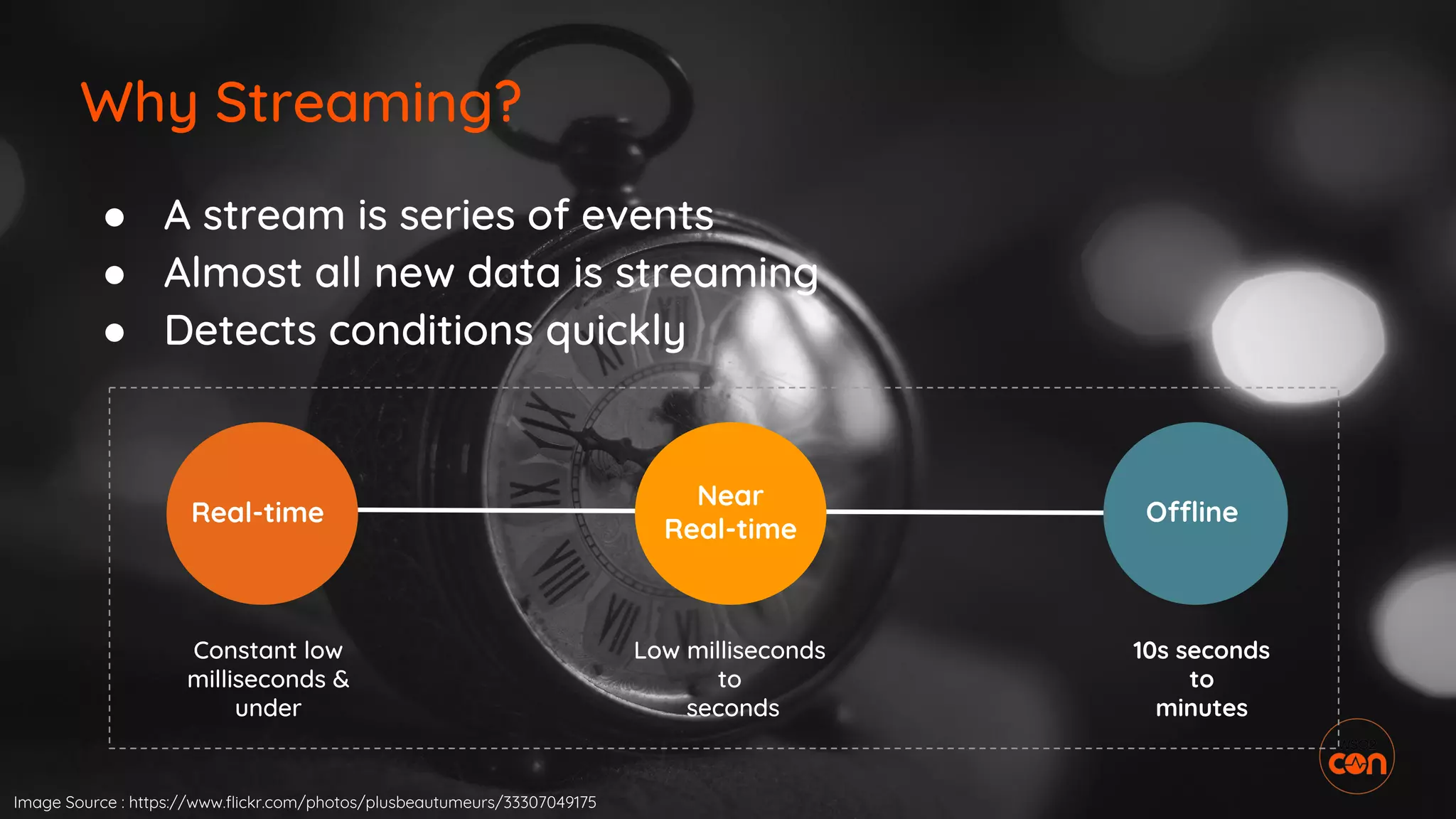

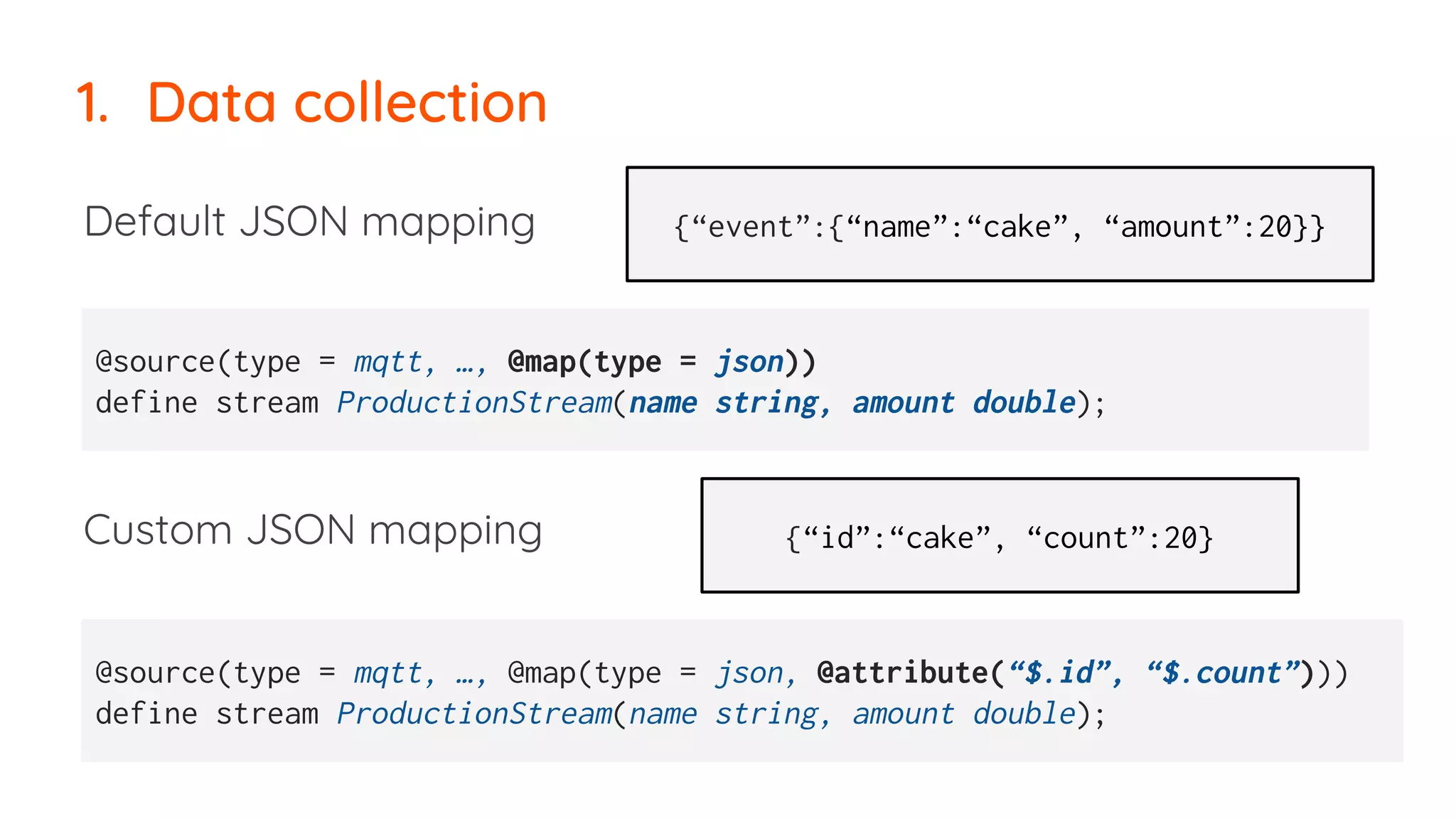

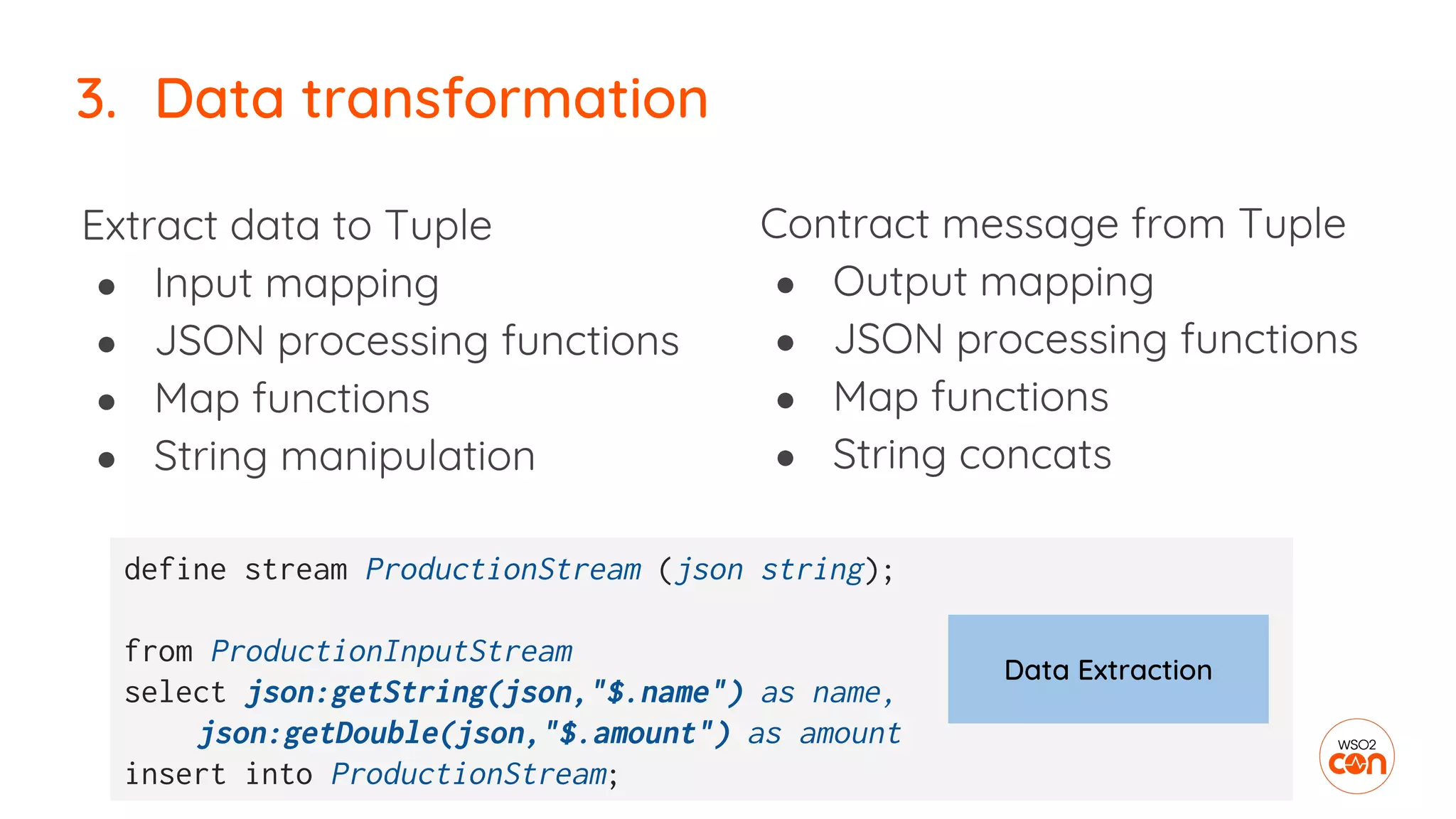

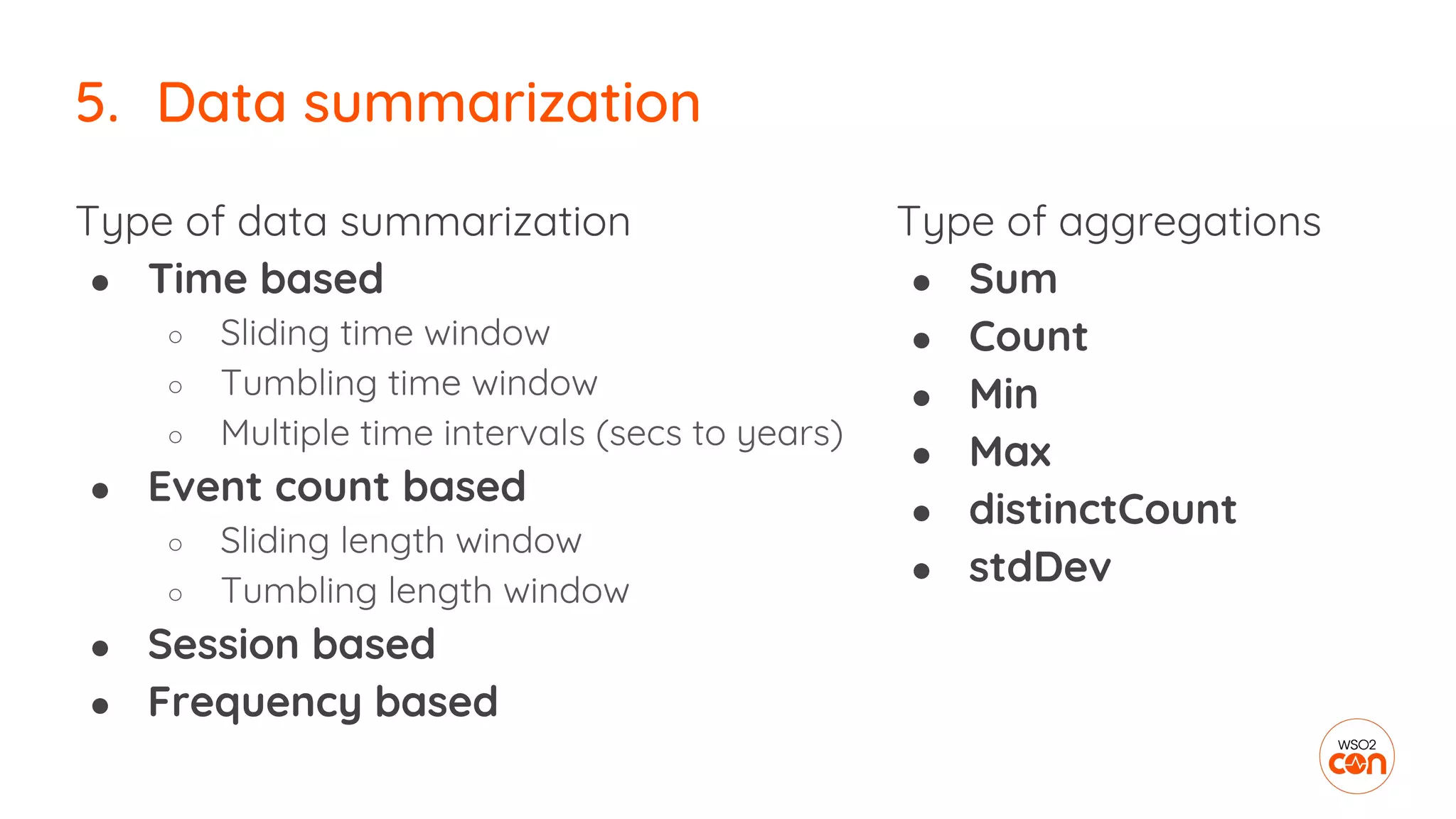

![3. Data transformation](https://image.slidesharecdn.com/3-181113092919/75/WSO2Con-EU-2018-Patterns-for-Building-Streaming-Apps-19-2048.jpg)

**3. Data transformation**

Transform data by:

- Inline operations: math and logical operations, e.g. `amount * price as cost`
- Inbuilt function calls: 60+ extensions, e.g. `str:upper(ItemID) as ItemCode`
- Custom function calls: Java, JS, R, e.g. `myFunction(item, price) as discount`

A custom function is defined as:

```
define function myFunction[lang_name] return return_type {
    function_body
};
```
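The three kinds of transformation can be sketched in plain Python as a single per-event function. This is an illustration, not Stream Processor code: `transform` and `my_function` are hypothetical names, the input field names are assumed from the slide's snippets, and the 10% cake discount inside `my_function` is an invented placeholder because the slide leaves the body of `myFunction` unspecified.

```python
def my_function(item: str, price: float) -> float:
    """Stand-in for the slide's custom myFunction(item, price).
    HYPOTHETICAL rule: 10% off cakes, nothing off anything else."""
    return round(price * 0.10, 2) if item == "cake" else 0.0


def transform(event: dict) -> dict:
    """Derive the three output attributes shown on the slide from one input event."""
    return {
        # Inbuilt function call, like str:upper(ItemID) as ItemCode
        "ItemCode": event["ItemID"].upper(),
        # Inline math, like amount * price as cost
        "cost": event["amount"] * event["price"],
        # Custom function call, like myFunction(item, price) as discount
        "discount": my_function(event["item"], event["price"]),
    }


print(transform({"ItemID": "ck-01", "item": "cake", "amount": 3, "price": 4.0}))
# {'ItemCode': 'CK-01', 'cost': 12.0, 'discount': 0.4}
```

From the query's point of view, all three kinds are just expressions in the `select` clause. They differ only in where the logic lives: inline, in a shipped extension, or in user code.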

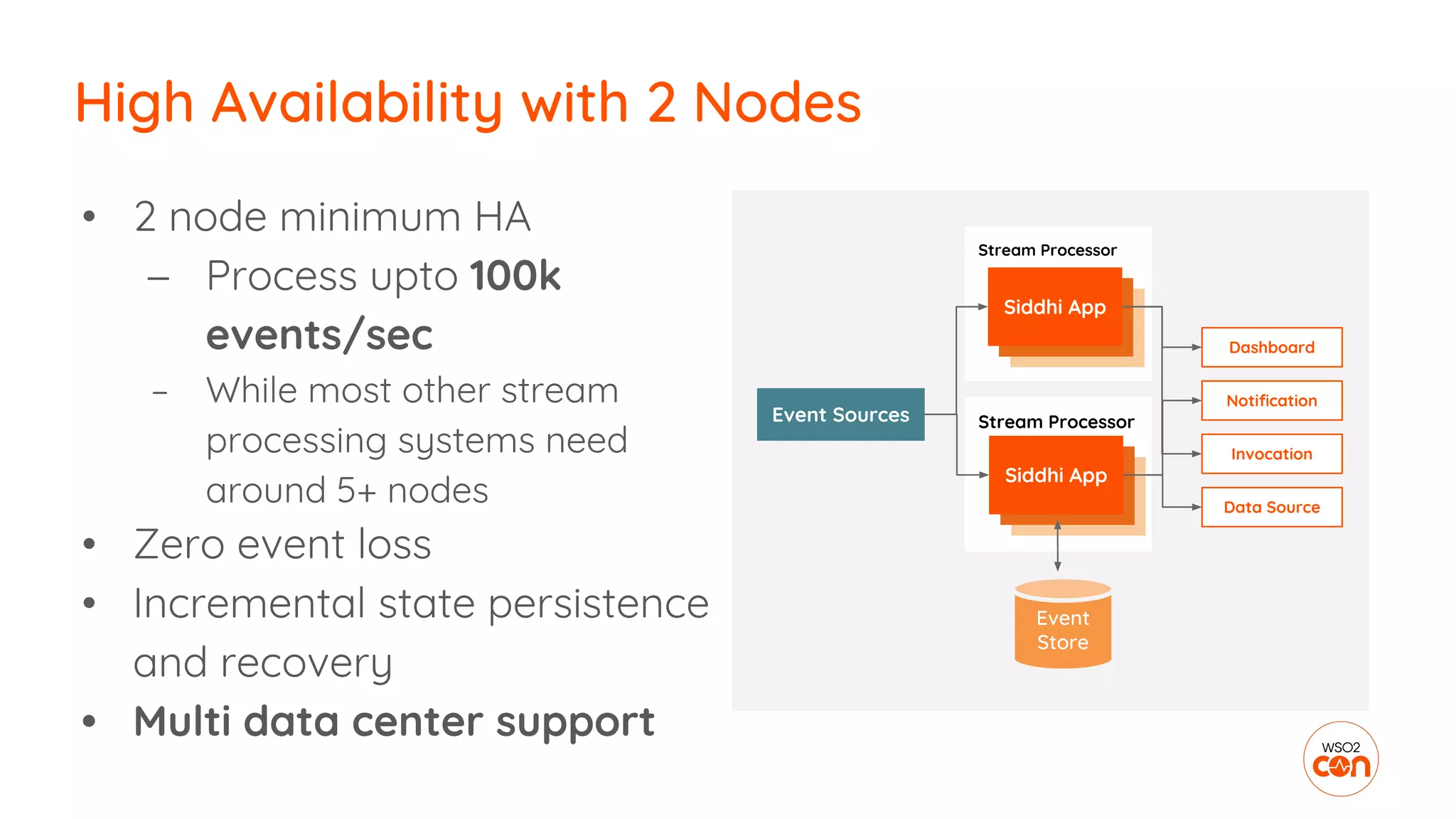

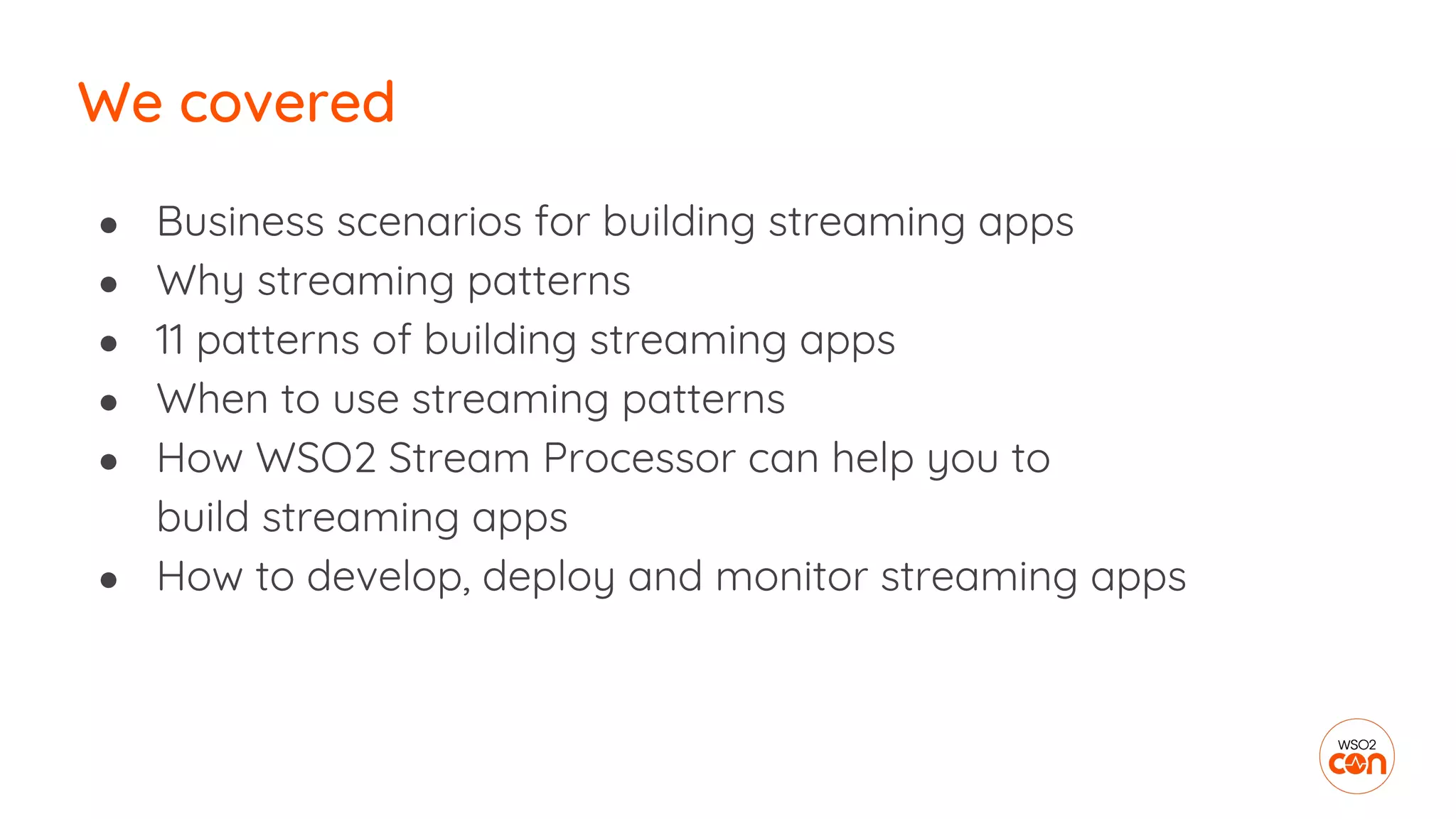

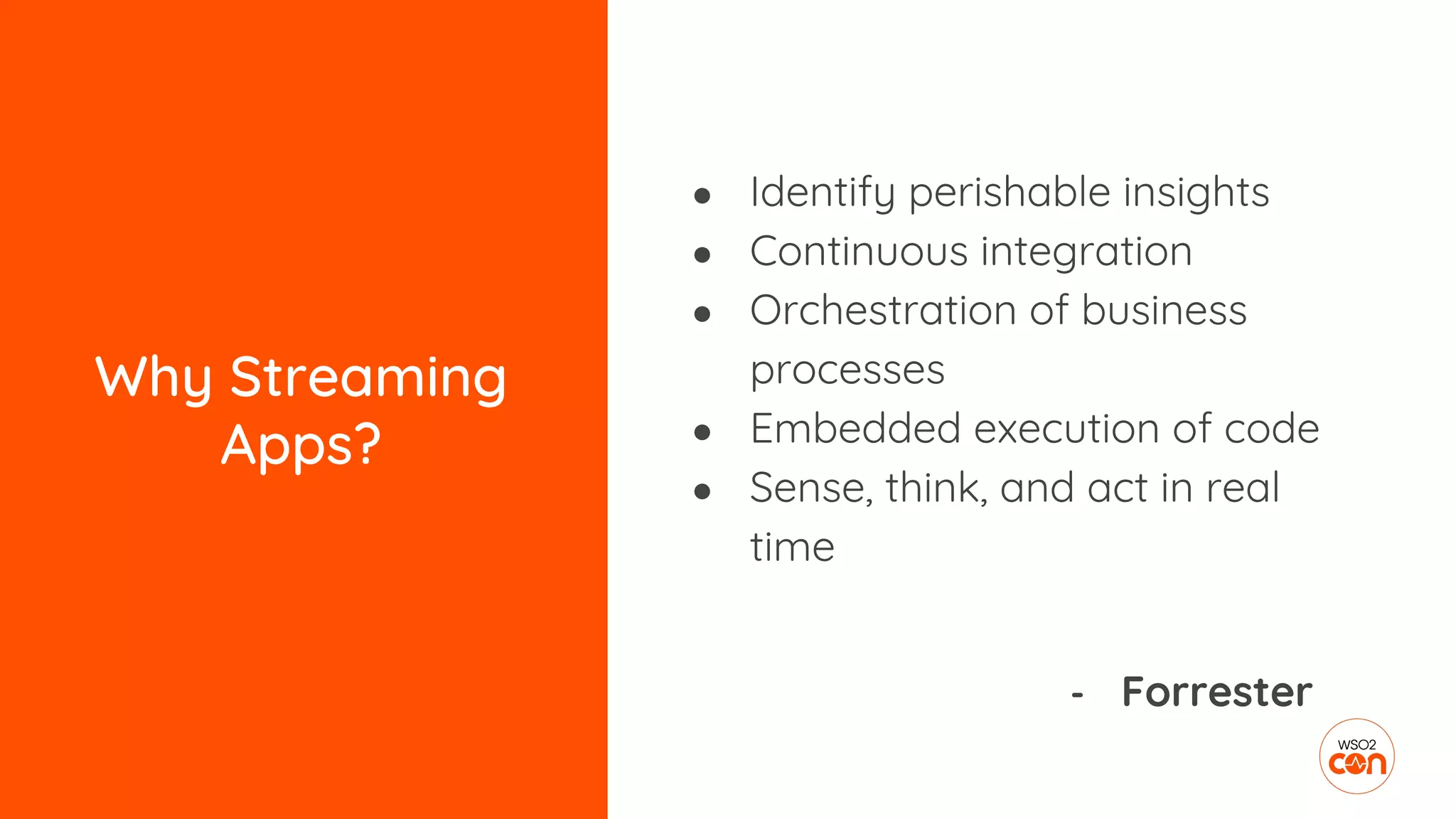

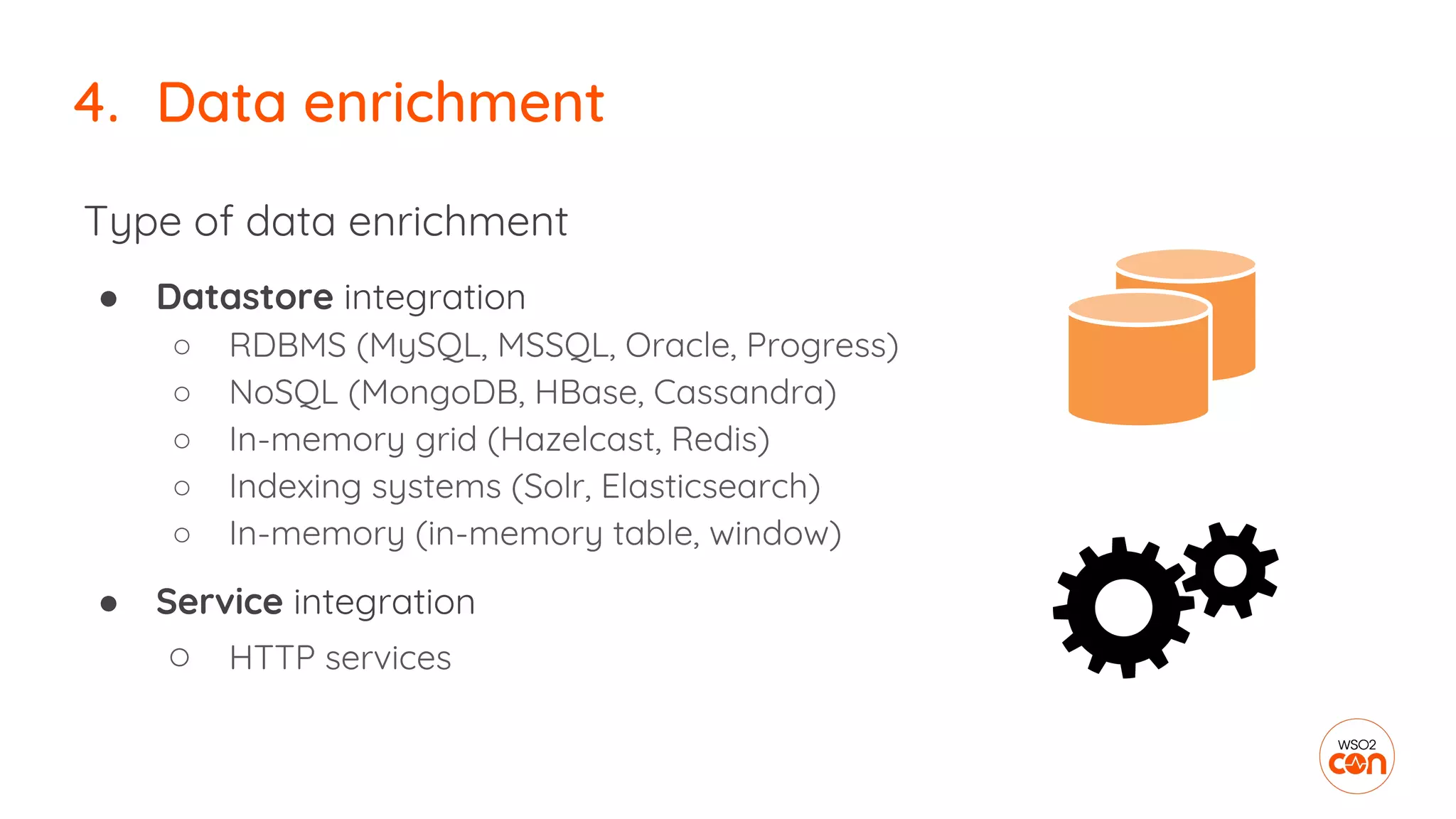

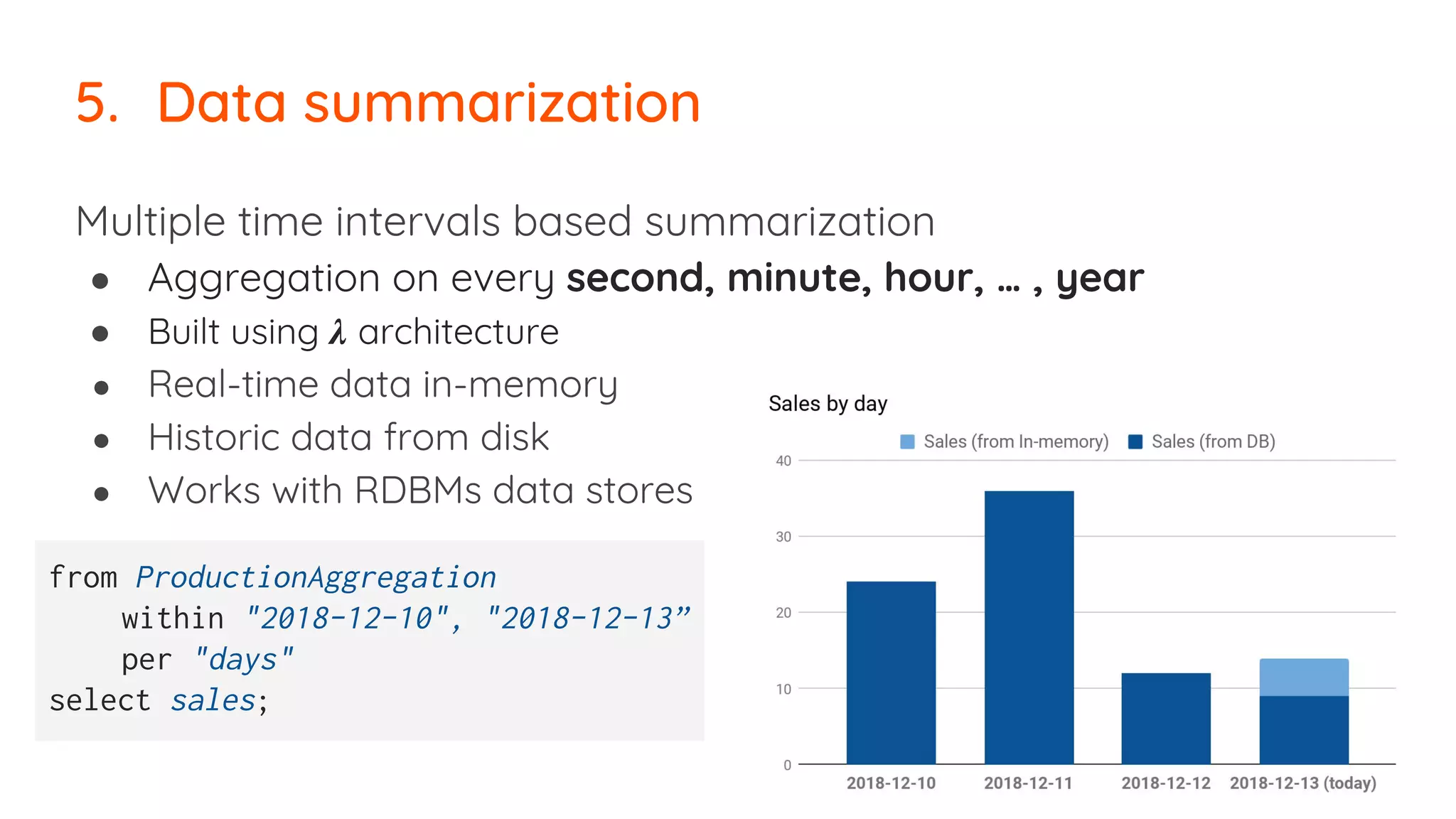

![6. Rule processing](https://image.slidesharecdn.com/3-181113092919/75/WSO2Con-EU-2018-Patterns-for-Building-Streaming-Apps-29-2048.jpg)

**6. Rule processing**

No-occurrence event pattern detection:

```
define stream DeliveryStream (orderId string, amount double);
define stream PaymentStream (orderId string, amount double);

-- alert when no matching payment arrives within 15 minutes of a delivery
from every (e1 = DeliveryStream)
    -> not PaymentStream[orderId == e1.orderId] for 15 min
select e1.orderId, e1.amount
insert into PaymentDelayedStream;
```
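The absence pattern can be approximated in plain Python to show what the engine has to track. Every delivery opens its own 15-minute wait (the effect of `every`), a matching payment cancels the waits for that `orderId`, and any wait that reaches its deadline produces an alert. This sketch assumes that event timestamps in seconds drive the clock and that a payment arriving exactly at the deadline is too late; the Stream Processor's actual timing and boundary behavior may differ. `Event` and `payment_delayed` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

TIMEOUT_SECONDS = 15 * 60  # "for 15 min"


@dataclass
class Event:
    ts: int        # ASSUMED event time in seconds, non-decreasing across the list
    stream: str    # "DeliveryStream" or "PaymentStream"
    order_id: str
    amount: float


def payment_delayed(events: List[Event], now: int) -> List[Tuple[str, float]]:
    """Return (orderId, amount) for each delivery with no matching payment
    within TIMEOUT_SECONDS. `now` is the clock time when we stop looking."""
    pending: List[Tuple[int, str, float]] = []  # (deadline, order_id, amount)
    alerts: List[Tuple[str, float]] = []

    def expire(clock: int) -> None:
        # Any wait whose deadline has been reached becomes an alert.
        nonlocal pending
        still_waiting = []
        for deadline, order_id, amount in pending:
            if deadline <= clock:
                alerts.append((order_id, amount))
            else:
                still_waiting.append((deadline, order_id, amount))
        pending = still_waiting

    for event in events:
        expire(event.ts)
        if event.stream == "DeliveryStream":
            # `every`: each delivery starts its own independent wait.
            pending.append((event.ts + TIMEOUT_SECONDS, event.order_id, event.amount))
        elif event.stream == "PaymentStream":
            # A matching payment cancels all waits for that orderId.
            pending = [p for p in pending if p[1] != event.order_id]
    expire(now)
    return alerts


events = [
    Event(0, "DeliveryStream", "o1", 20.0),
    Event(60, "DeliveryStream", "o2", 35.0),
    Event(300, "PaymentStream", "o1", 20.0),
]
print(payment_delayed(events, now=3600))  # [('o2', 35.0)]
```

The key point is that an absence can only be reported once time moves past the deadline. A real engine therefore needs a timer or clock signal, not just incoming events, which is why the sketch takes an explicit `now`.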