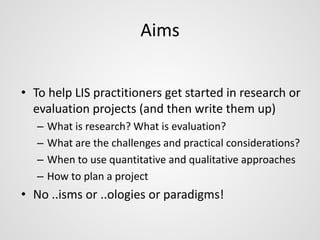

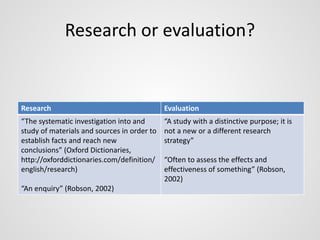

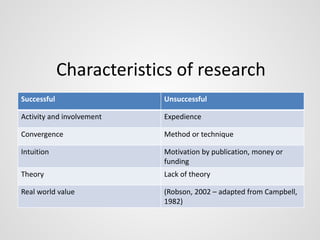

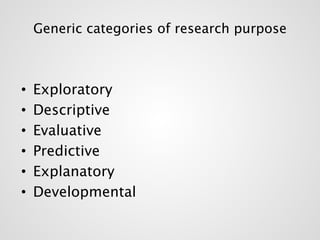

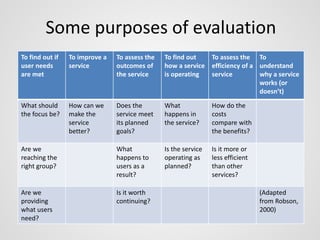

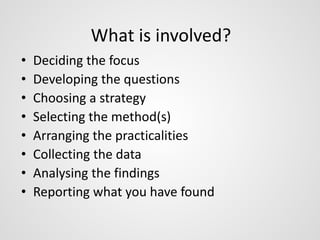

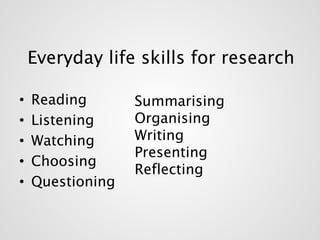

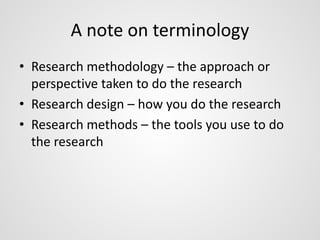

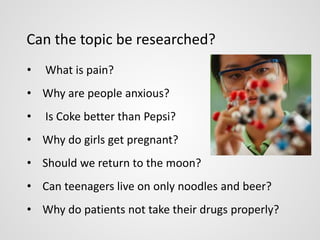

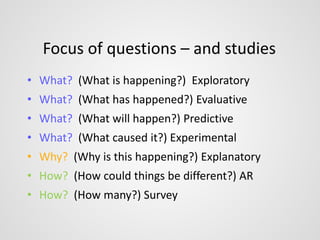

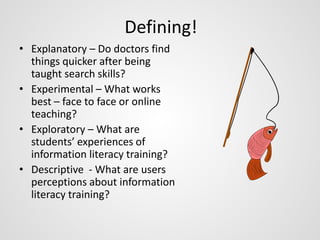

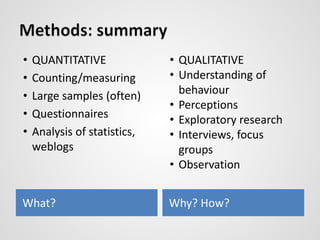

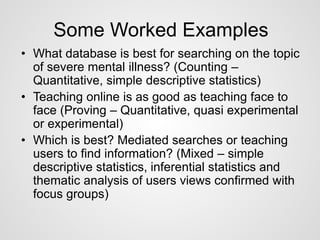

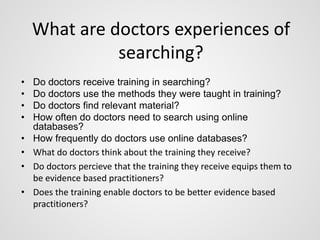

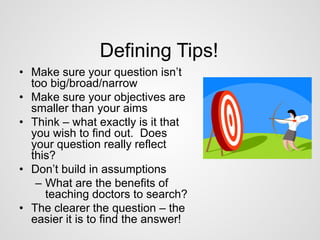

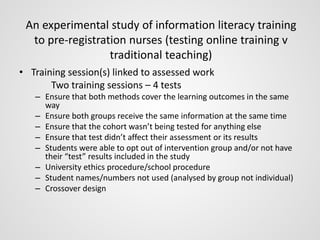

This document is a guide for library and information science practitioners on starting and conducting research or evaluation projects. It covers defining research questions, designing studies, using quantitative and qualitative methods, and writing up findings. The guide stresses the importance of understanding the research context, reviewing the literature, addressing ethical considerations, and communicating results effectively to different audiences.