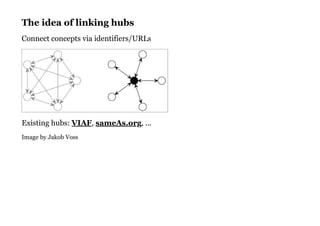

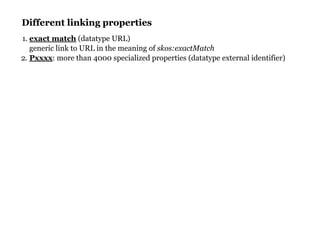

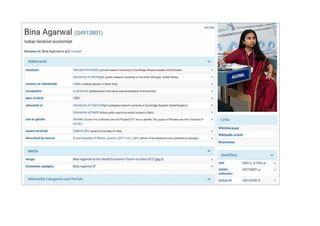

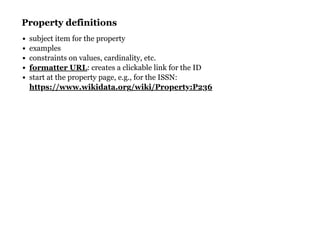

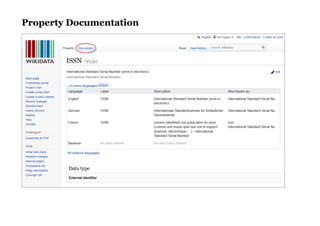

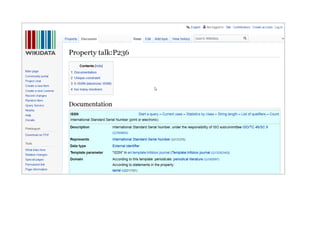

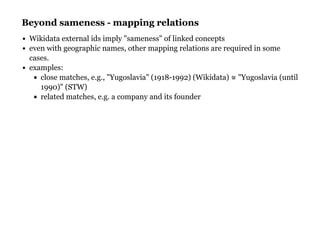

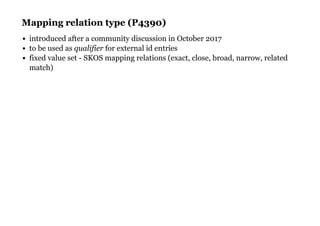

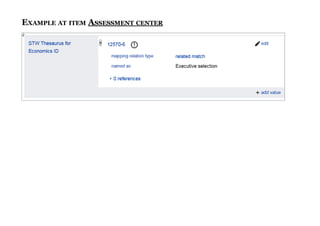

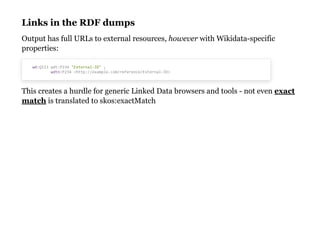

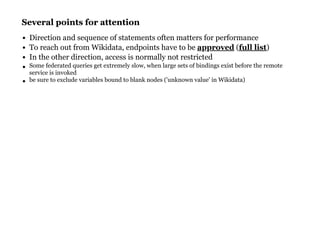

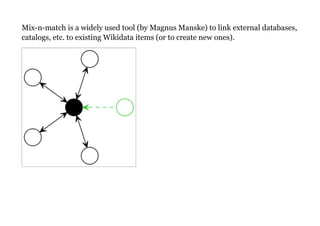

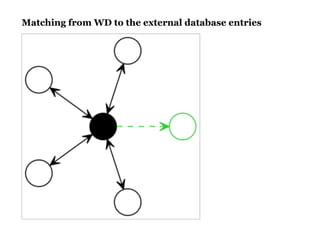

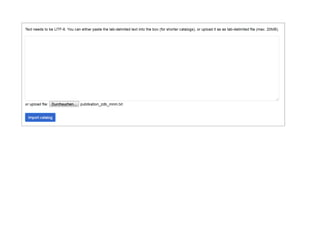

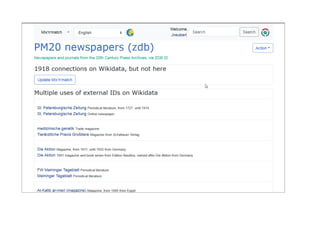

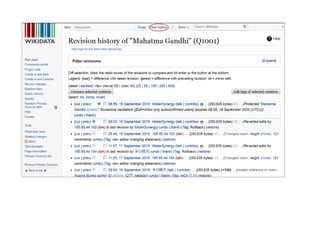

This document summarizes a tutorial presented at the DCMI Conference in Seoul on using and querying Wikidata as a linking hub in the linked data cloud. It covers external identifiers, mapping relations, and the process of linking external data to Wikidata with tools such as Mix-n-Match, along with quality control and community involvement in Wikidata. It also outlines performance challenges with federated SPARQL queries and stresses that decision-making in Wikidata rests on community consensus.
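
To make the linking-hub idea concrete, here is a minimal sketch of how an external identifier can be used to look up the matching Wikidata item. It only builds the SPARQL text and does not contact the query service. The function name `build_external_id_query` and the sample identifier value are illustrative assumptions, not taken from the tutorial; `P214` is the Wikidata property for VIAF ID.

```python
from string import Template

# Public Wikidata SPARQL endpoint, shown for reference only.
# This sketch builds the query text and never sends a request.
WDQS_ENDPOINT = "https://query.wikidata.org/sparql"

# The wdt:, wikibase: and bd: prefixes are predefined by the Wikidata Query Service.
_QUERY = Template("""\
SELECT ?item ?itemLabel WHERE {
  ?item wdt:$prop "$value" .
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en" . }
}
LIMIT 10""")


def build_external_id_query(prop: str, value: str) -> str:
    """Return a SPARQL query finding items whose external-ID property equals value.

    Hypothetical helper for illustration: prop is a Wikidata property ID such
    as "P214" (VIAF ID), value is the identifier string in the external source.
    """
    if not (prop.startswith("P") and prop[1:].isdigit()):
        raise ValueError(f"not a Wikidata property ID: {prop!r}")
    # Escape backslashes and double quotes so the value stays a valid string literal.
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return _QUERY.substitute(prop=prop, value=escaped)


if __name__ == "__main__":
    # "12345" is a placeholder identifier, not a real VIAF record.
    print(build_external_id_query("P214", "12345"))
```

The resulting text can be pasted into the Wikidata Query Service or sent to `WDQS_ENDPOINT` with any HTTP client. Swapping the property ID is enough to pivot to a different external source, which is what lets Wikidata act as a hub between datasets.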