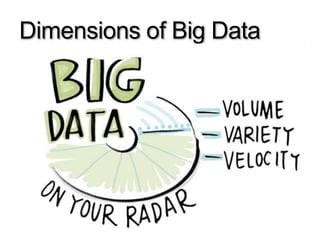

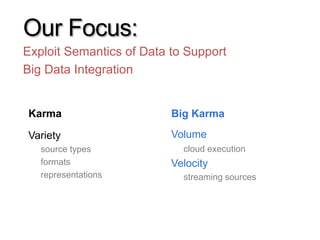

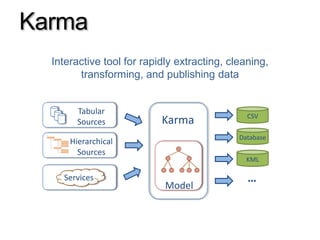

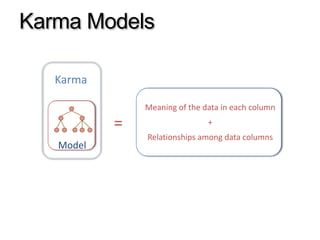

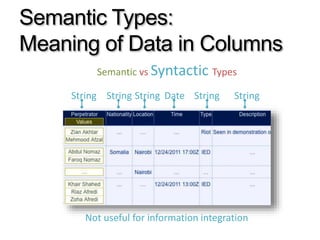

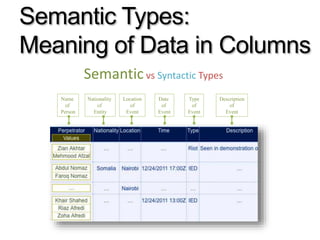

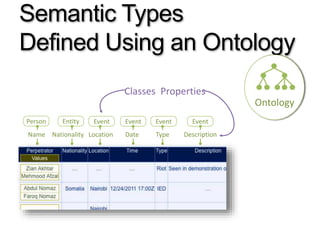

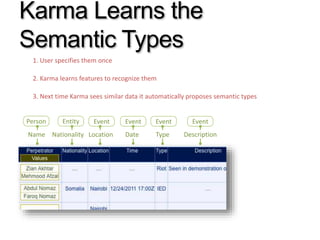

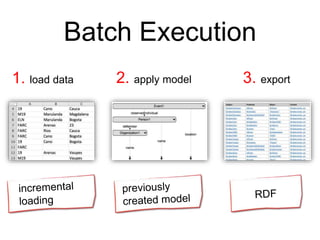

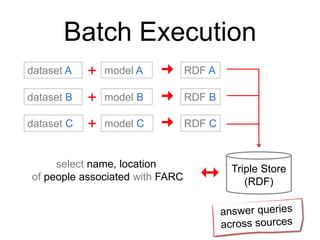

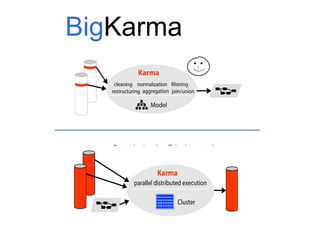

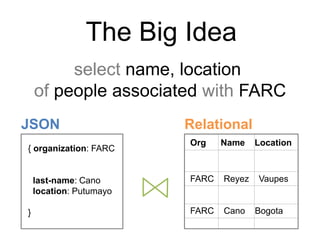

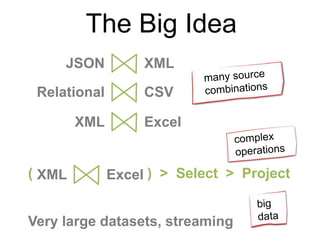

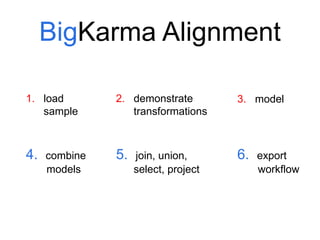

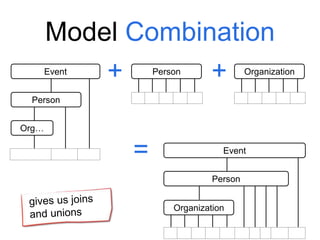

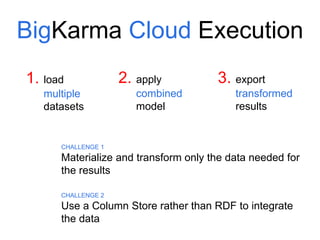

The document discusses Karma, a tool for big data integration and analysis that uses semantics to support data extraction, cleaning, transformation, and publishing. It focuses on recognizing the semantic types of data columns and the relationships between them, which lets users efficiently model data sources and execute data workflows across various formats. The document also outlines challenges, and corresponding solutions, for handling streaming data and executing workflows in the cloud on large datasets.