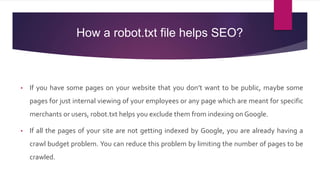

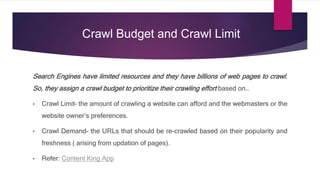

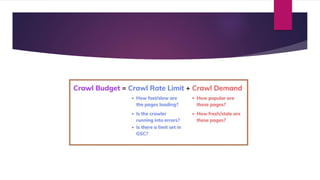

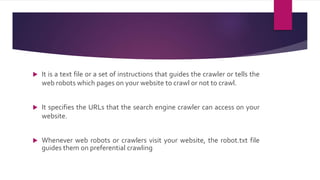

The robots.txt file is a plain-text file that tells search engine crawlers which pages of a website they may crawl. It lists the URLs that crawlers can and cannot access, follows the Robots Exclusion Protocol, and is placed in the website's root directory. The robots.txt file helps with search engine optimization by letting webmasters keep crawlers away from internal or private pages and by limiting crawling so that crawl budget is spent on the pages that matter.
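Because the file sits in the root directory, its address can be derived from any page URL on the same site. The following is a minimal sketch of that idea; the helper name `robots_txt_url` and the `example.com` addresses are hypothetical and used only for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the root-level robots.txt URL for the site hosting page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port), replace the path,
    # and drop the query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical page URL, used only for illustration.
print(robots_txt_url("https://www.example.com/blog/post.html?id=7"))
# prints https://www.example.com/robots.txt
```

A crawler would typically request this address before fetching anything else from the site.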

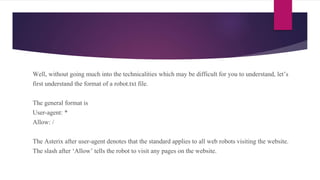

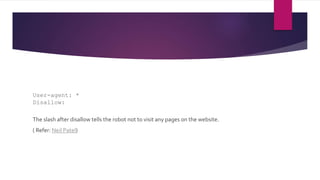

![What is a Robot.txt file, slide 7](https://image.slidesharecdn.com/robottxtfile-211015172702/85/What-is-a-Robot-txt-file-7-320.jpg)

User-agent: [user-agent name]

Disallow: [URL string that should not be crawled]

In this case, the Disallow line specifies the URL that should not be crawled.

To block a folder for all crawlers:

User-agent: *

Disallow: /folder/

To block a single file for all crawlers:

User-agent: *

Disallow: /file.html
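One way to check how rules like these behave is Python's standard-library `urllib.robotparser`. The sketch below is illustrative only: it assumes the folder and file rules are merged into a single `User-agent: *` group, and the crawler name `ExampleBot`, the helper `build_parser`, and the `example.com` host are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Assumption: both Disallow rules are placed in one group for all crawlers.
RULES = """\
User-agent: *
Disallow: /folder/
Disallow: /file.html
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content supplied as a string (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(RULES)

# Hypothetical host and crawler name, used only for illustration.
for path in ("/folder/page.html", "/file.html", "/index.html"):
    allowed = parser.can_fetch("ExampleBot", "https://www.example.com" + path)
    print(path, "allowed" if allowed else "blocked")
```

With these rules, the two disallowed paths are reported as blocked and `/index.html` as allowed. Rules are matched as path prefixes by this parser, so `/folder/` covers everything beneath that folder.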