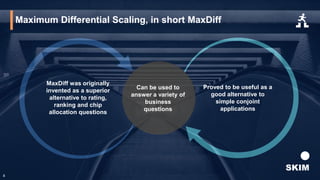

The document discusses MaxDiff (maximum difference scaling) methodology and its advantage over traditional rating scales: because respondents must pick the best and worst items in each set, MaxDiff captures consumer preferences through trade-offs. It addresses practical challenges such as the maximum number of items that can be screened effectively, and it introduces Sparse and Express MaxDiff as solutions for large item sets. It also explores MaxDiff's relativity issue, namely that its scores measure preference relative to the other items tested rather than in absolute terms, and suggests anchoring methods to improve the accuracy of preference estimates.
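To make the trade-off idea and the relativity issue concrete, here is a minimal counting sketch. It assumes each task is recorded as a tuple of (items shown, item chosen as best, item chosen as worst). The function name `best_worst_scores` and the `example_tasks` data are hypothetical illustrations, not the document's method. MaxDiff data are often estimated with logit-based models instead of simple counts.

```python
from collections import Counter

def best_worst_scores(tasks):
    """Hypothetical helper: best-minus-worst counts for each item.

    tasks: iterable of (shown_items, best, worst) tuples, one per choice task.
    """
    best, worst, shown = Counter(), Counter(), Counter()
    for items, b, w in tasks:
        # Best and worst must be two different items from the same task.
        if b not in items or w not in items or b == w:
            raise ValueError("best and worst must be distinct items shown in the task")
        shown.update(items)
        best[b] += 1
        worst[w] += 1
    # Every shown item gets a score, including items never picked as best or worst.
    return {item: best[item] - worst[item] for item in shown}

# Hypothetical example: three tasks, each showing four of five items.
example_tasks = [
    (("A", "B", "C", "D"), "A", "D"),
    (("A", "B", "C", "E"), "B", "E"),
    (("B", "C", "D", "E"), "B", "D"),
]

if __name__ == "__main__":
    scores = best_worst_scores(example_tasks)
    print(scores)                # {'A': 1, 'B': 2, 'C': 0, 'D': -2, 'E': -1}
    print(sum(scores.values()))  # 0
```

The scores always sum to zero because each task adds one "best" and one "worst". They therefore rank items only against each other. They cannot show whether any item is appealing in absolute terms, which is the gap that anchoring methods aim to close.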