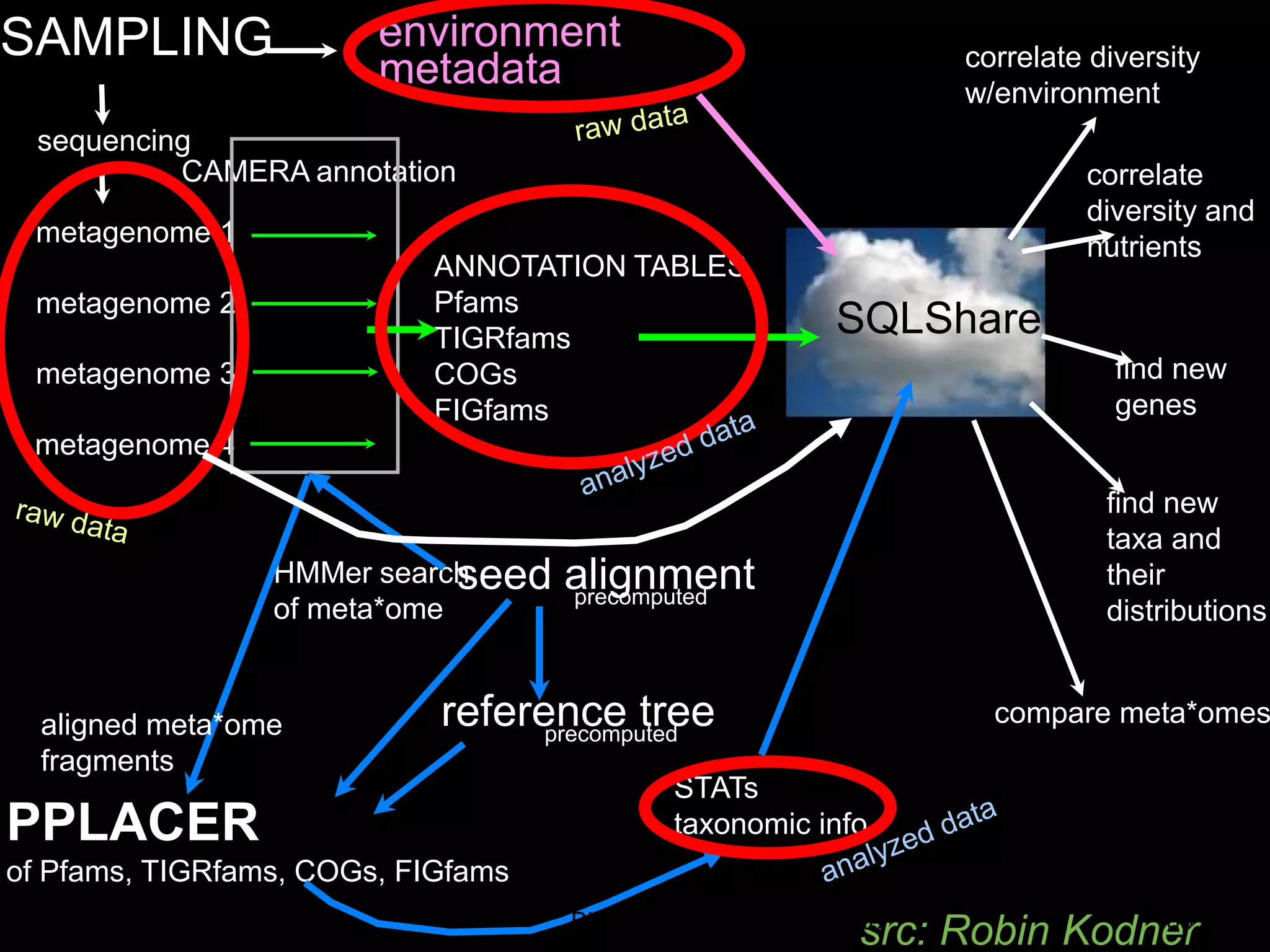

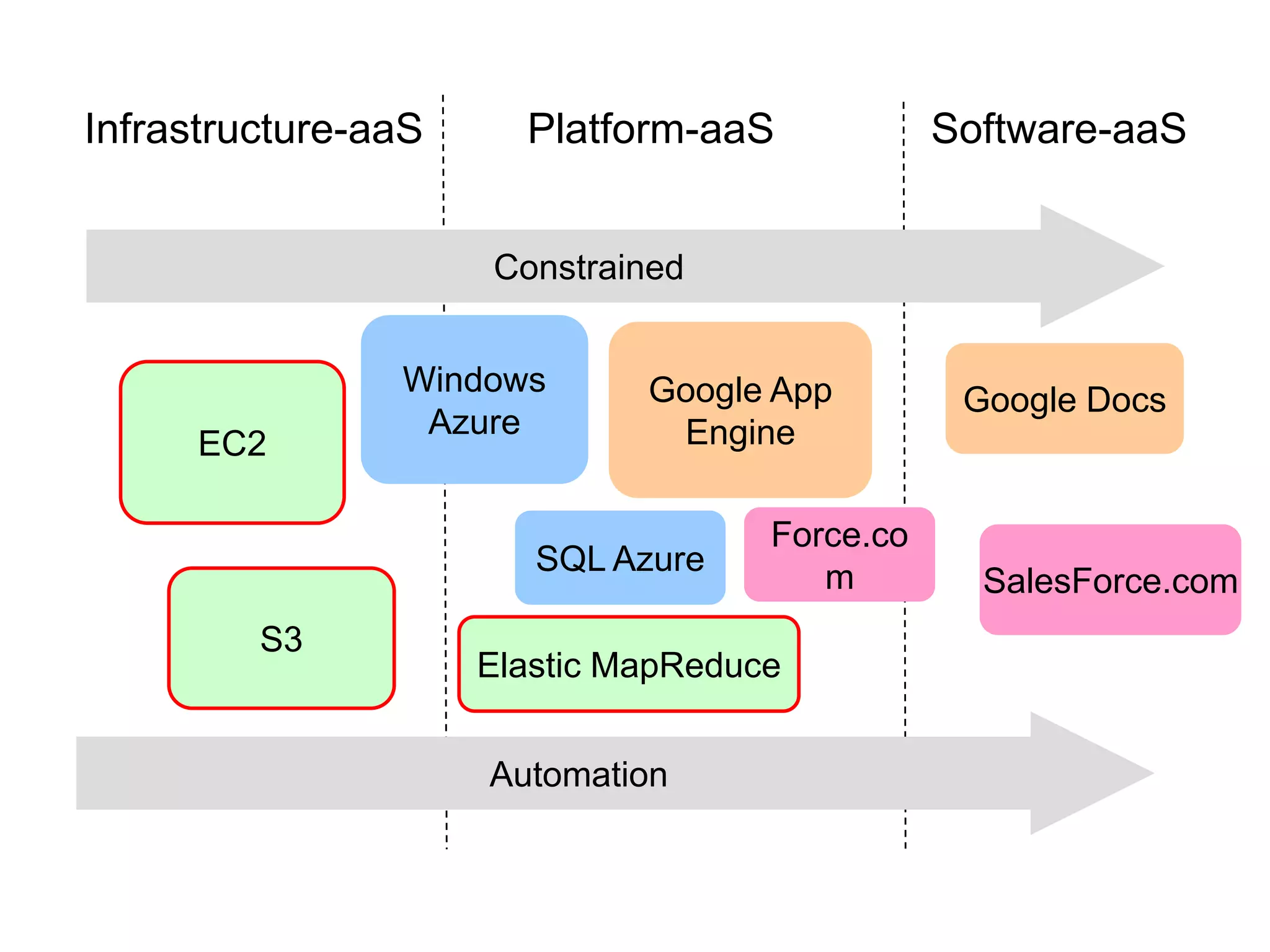

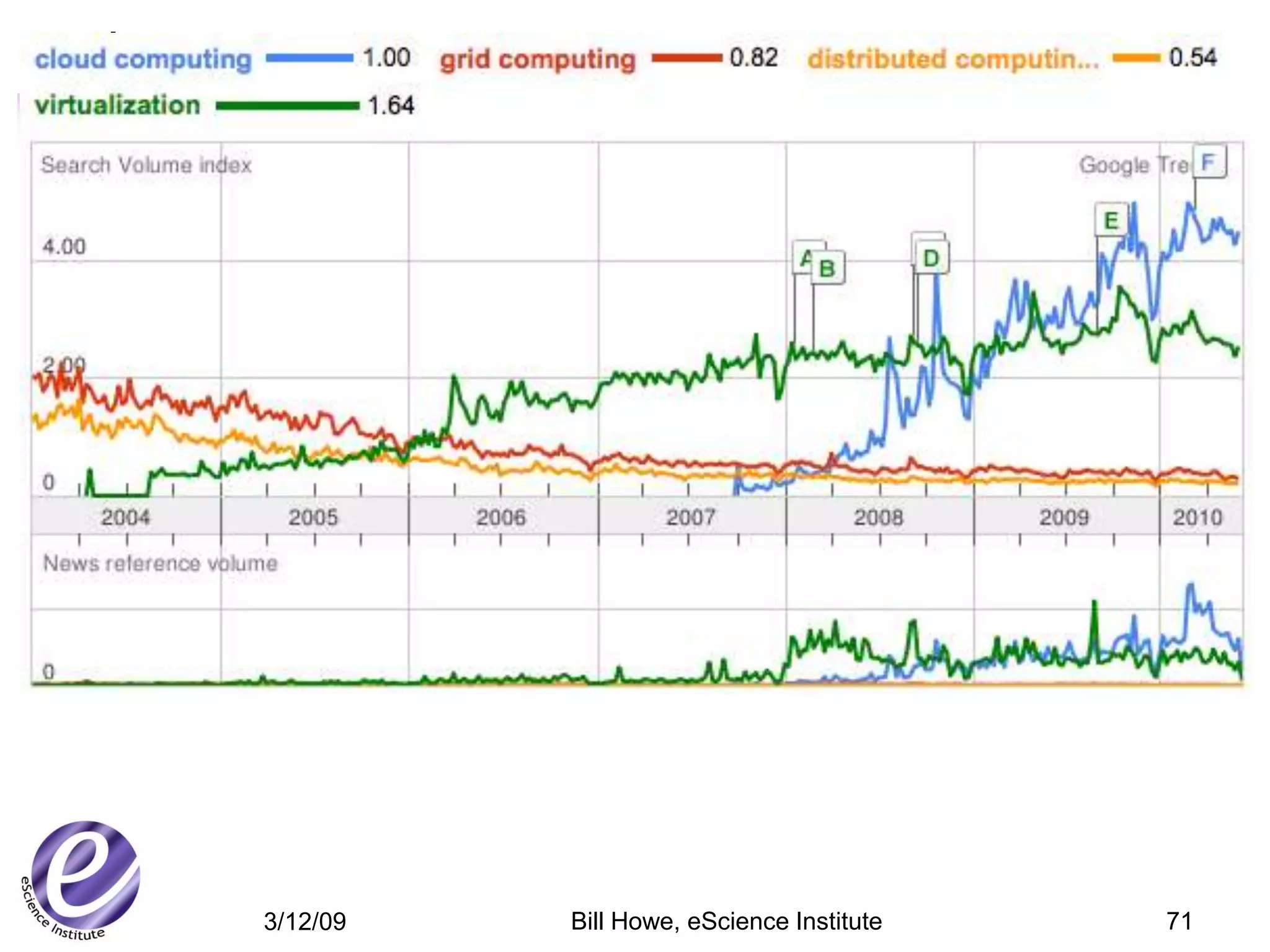

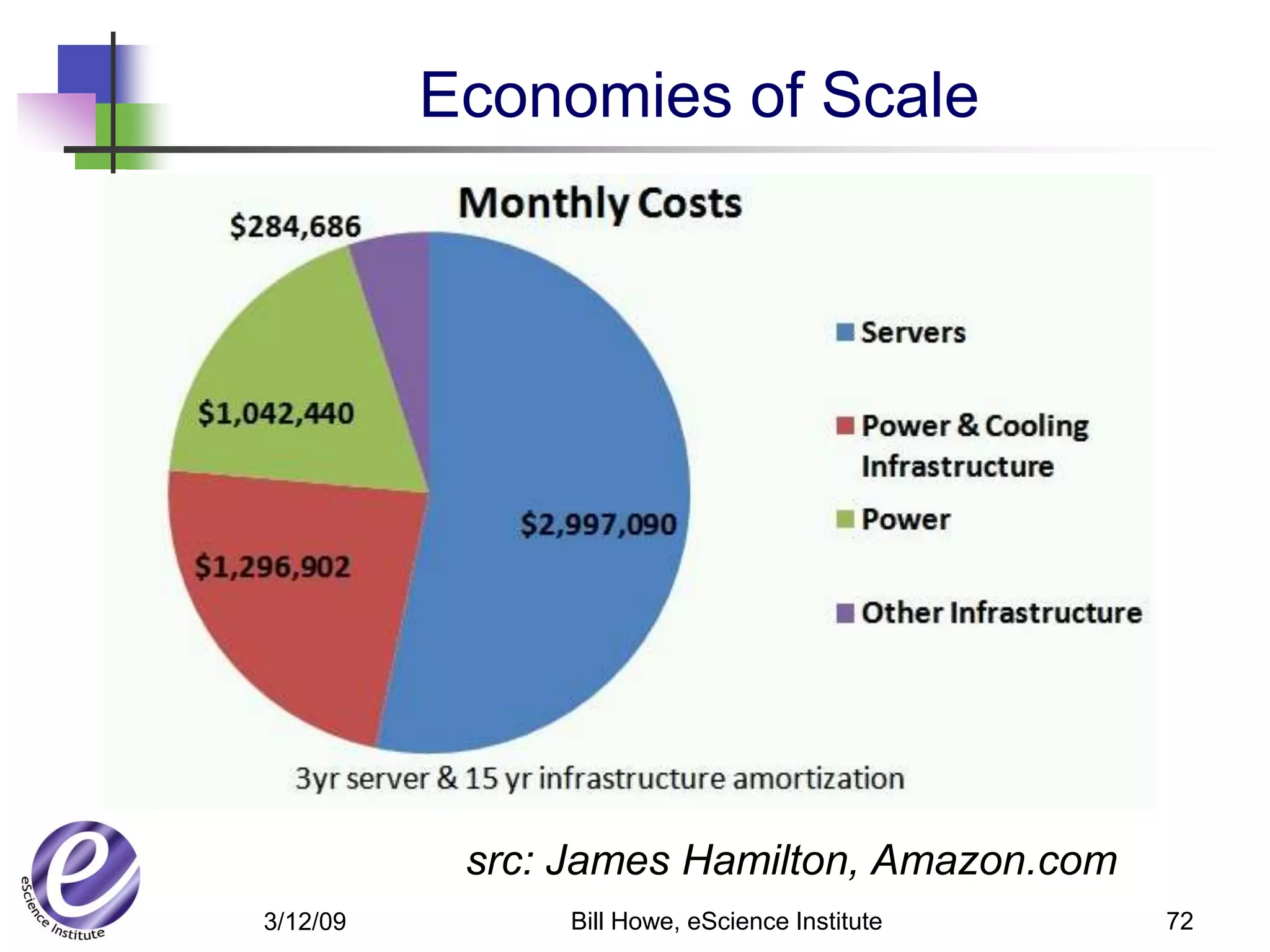

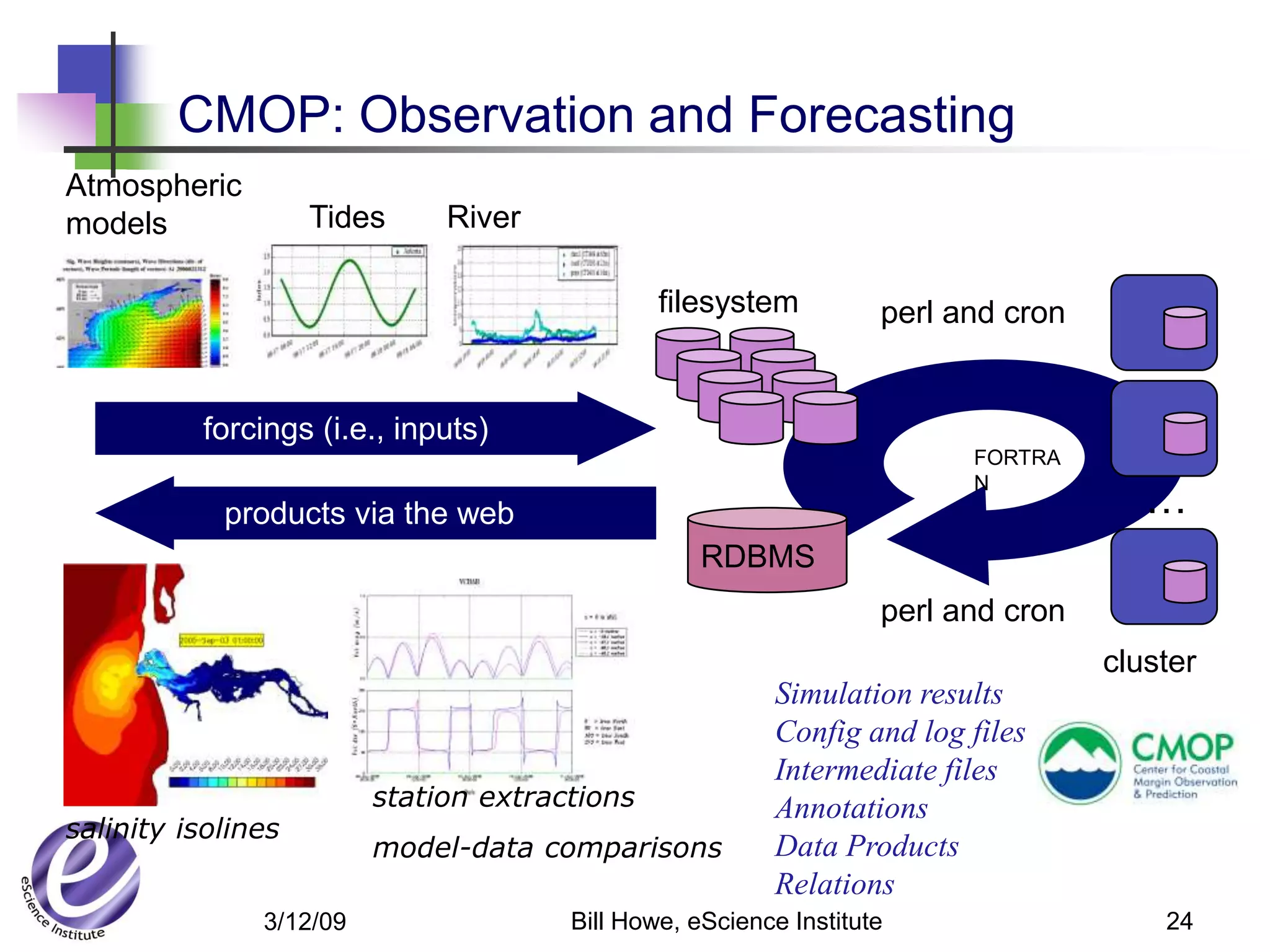

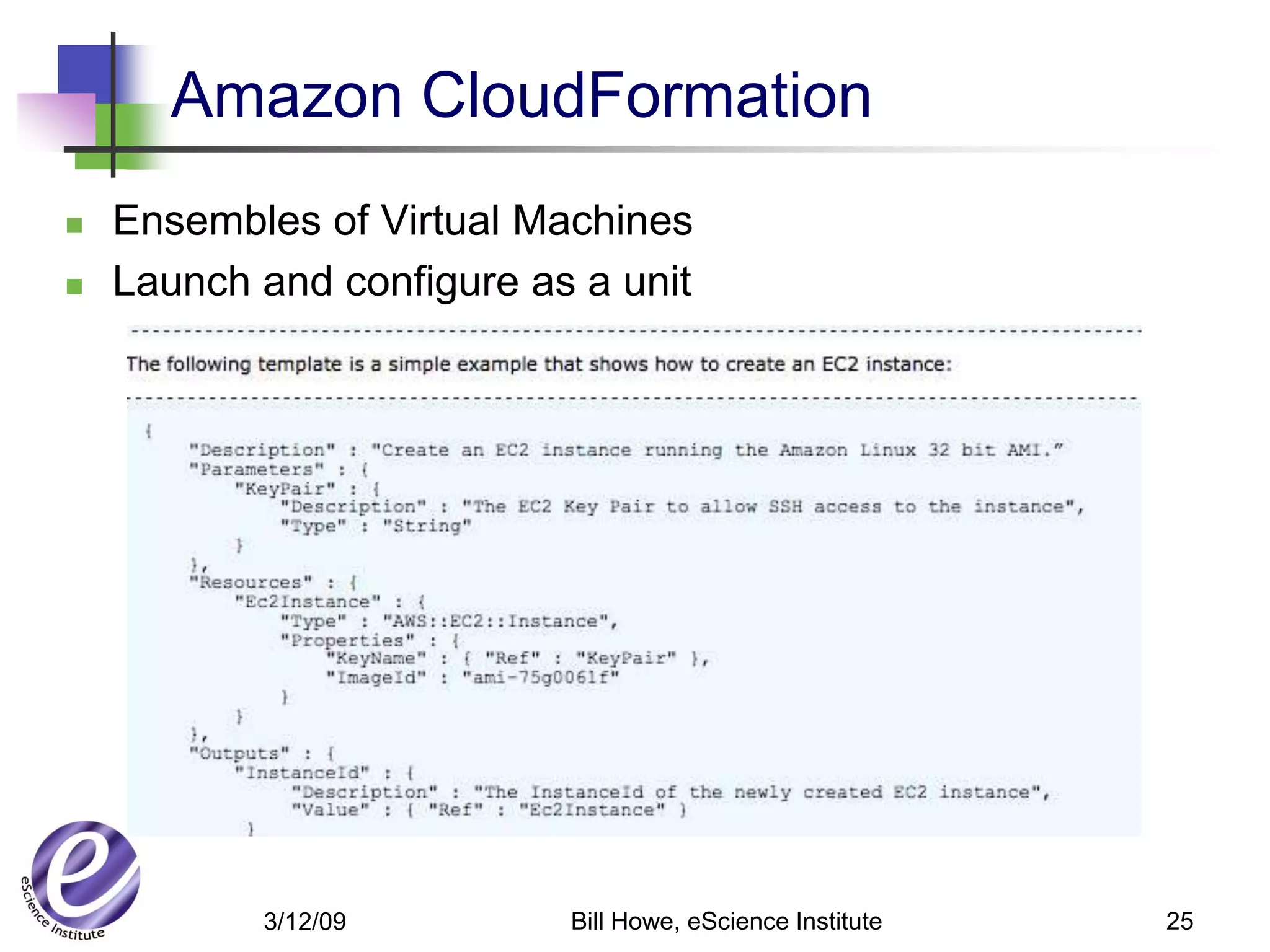

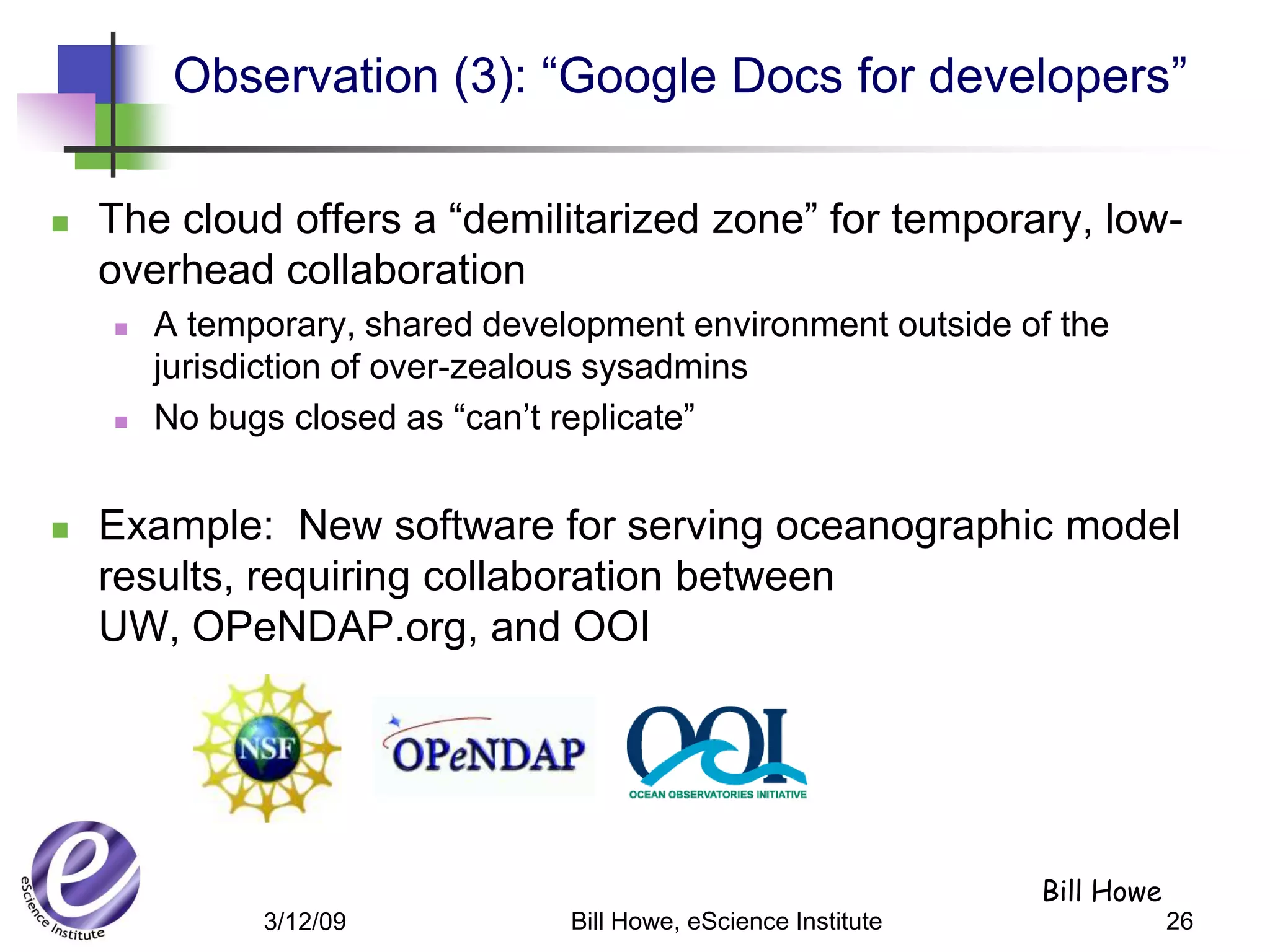

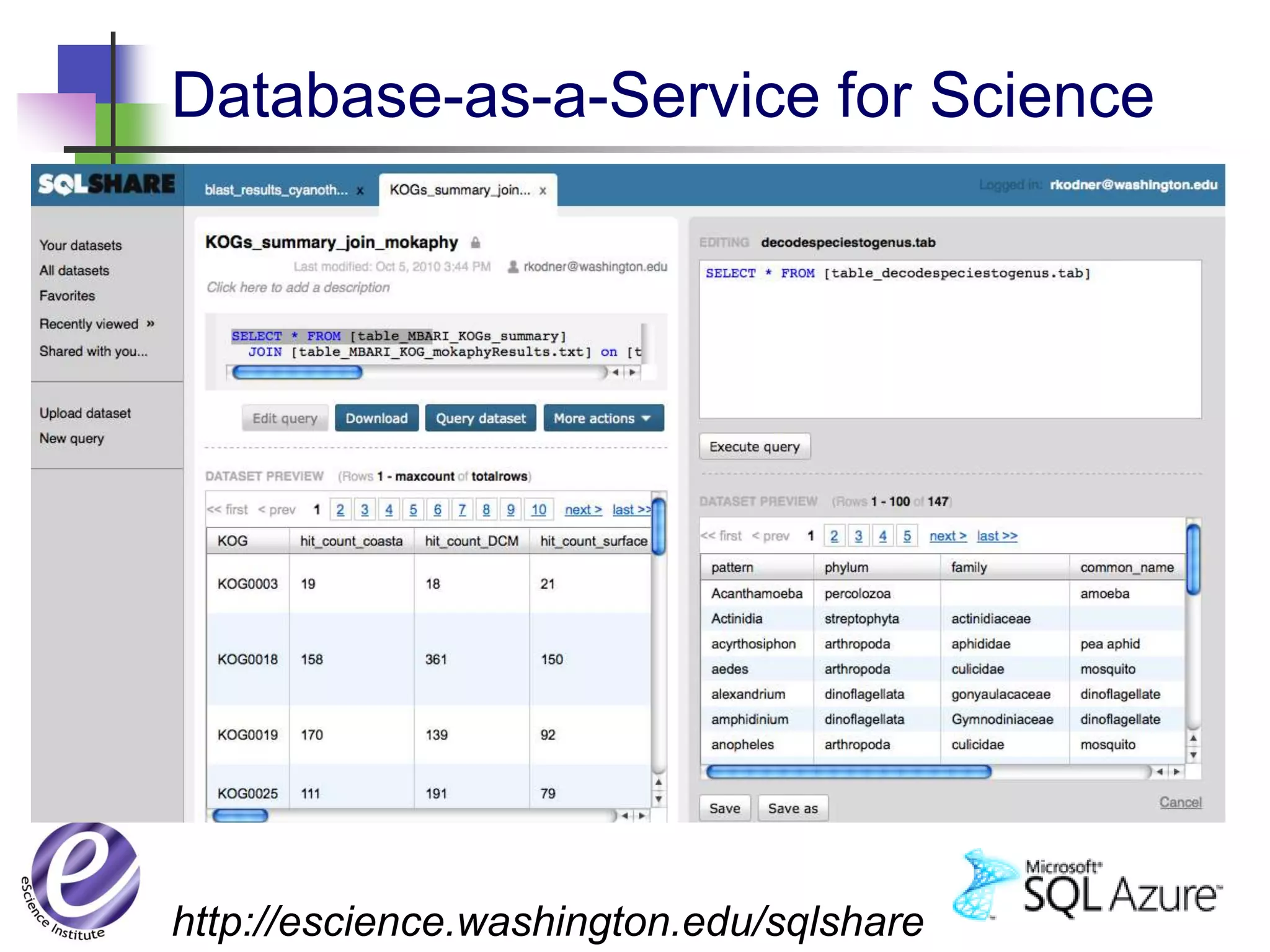

This document discusses the roles that cloud computing and virtualization can play in reproducible research. Virtualization makes it possible to capture the full computational environment of an experiment. The cloud builds on this by providing scalable resources and services for storage, computation, and virtual machine management. Challenges include cost, the handling of large datasets, and cultural barriers to adoption. Databases in the cloud may help support exploratory analysis of large datasets. Overall, the cloud shows promise for improving reproducibility because it lets researchers share full experimental environments along with the resources needed for computationally intensive analysis.

![Generator [Slide source: Werner Vogels] 3/12/09, Bill Howe, eScience Institute](https://image.slidesharecdn.com/reproducibilityinthecloud-120428231214-phpapp02/75/Virtual-Appliances-Cloud-Computing-and-Reproducible-Research-10-2048.jpg)

![Animoto [Werner Vogels, Amazon.com]](https://image.slidesharecdn.com/reproducibilityinthecloud-120428231214-phpapp02/75/Virtual-Appliances-Cloud-Computing-and-Reproducible-Research-34-2048.jpg)

![Periodic [Deepak Singh, Amazon.com]](https://image.slidesharecdn.com/reproducibilityinthecloud-120428231214-phpapp02/75/Virtual-Appliances-Cloud-Computing-and-Reproducible-Research-35-2048.jpg)

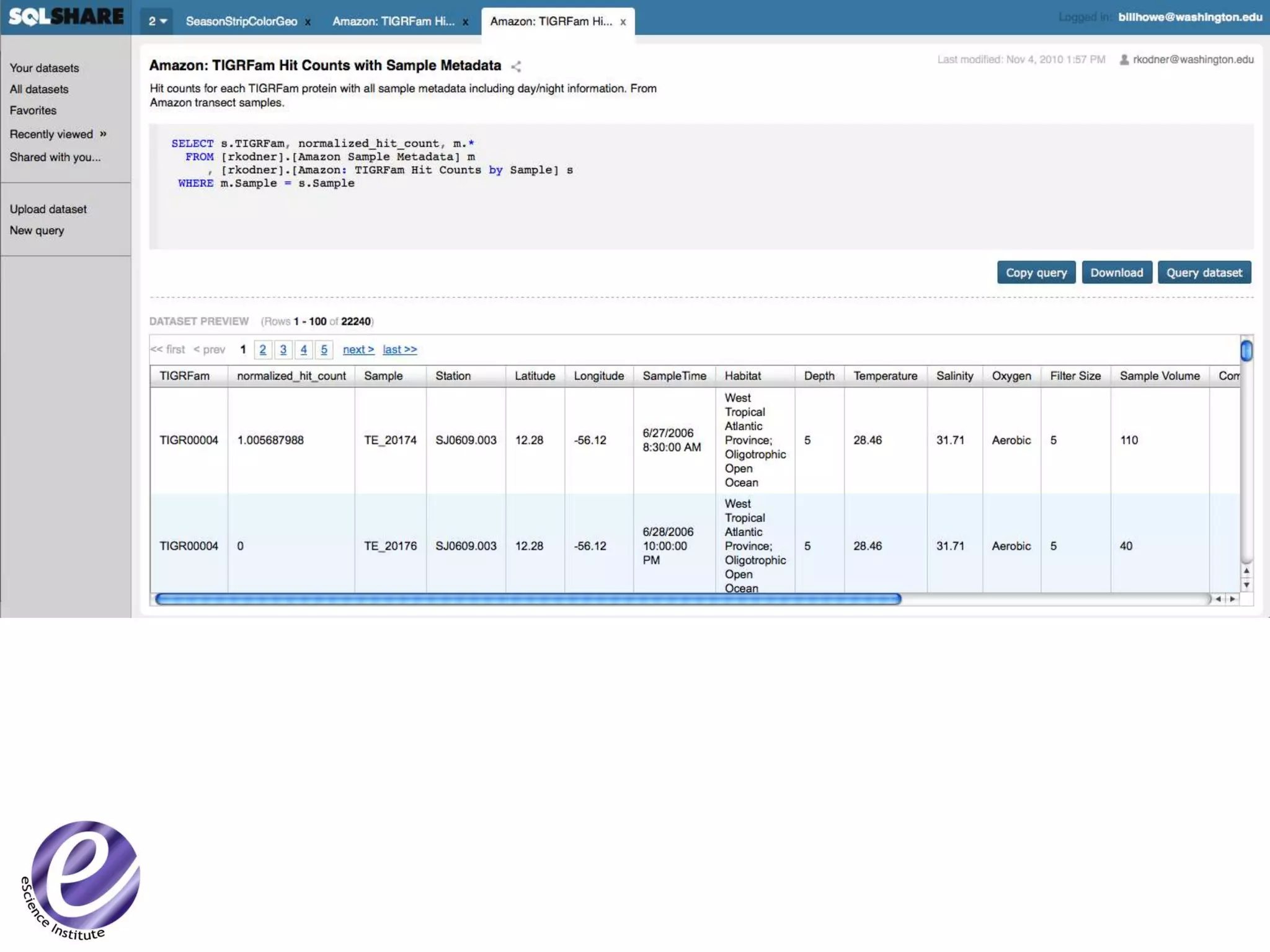

![Why SQL? Find all TIGRFam ids (proteins) that are missing from at least one of three samples (relations): SELECT col0 FROM [refseq_hma_fasta_TGIRfam_refs] UNION SELECT col0 FROM [est_hma_fasta_TGIRfam_refs] UNION SELECT col0 FROM [combo_hma_fasta_TGIRfam_refs] EXCEPT SELECT col0 FROM [refseq_hma_fasta_TGIRfam_refs] INTERSECT SELECT col0 FROM [est_hma_fasta_TGIRfam_refs] INTERSECT SELECT col0 FROM [combo_hma_fasta_TGIRfam_refs]](https://image.slidesharecdn.com/reproducibilityinthecloud-120428231214-phpapp02/75/Virtual-Appliances-Cloud-Computing-and-Reproducible-Research-48-2048.jpg)
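The "Why SQL?" query computes a set difference: the union of the three samples minus their intersection. As written, it relies on `INTERSECT` binding more tightly than `UNION` and `EXCEPT`, which is the standard SQL rule. The following is a minimal sketch of the same idea in Python with the standard-library `sqlite3` module, not the setup used in the talk. SQLite groups compound operators strictly left to right, so the sketch wraps each side in a subquery. The table names `sample0`, `sample1`, `sample2`, the function name, and the ids are all hypothetical.

```python
import sqlite3


def missing_from_some_sample(samples):
    """Return ids that appear in at least one sample but not in all of them."""
    conn = sqlite3.connect(":memory:")
    names = []
    for i, ids in enumerate(samples):
        name = f"sample{i}"  # hypothetical table name, one table per sample
        conn.execute(f"CREATE TABLE {name} (col0 TEXT)")
        conn.executemany(f"INSERT INTO {name} VALUES (?)", [(x,) for x in ids])
        names.append(name)

    union = " UNION ".join(f"SELECT col0 FROM {n}" for n in names)
    inter = " INTERSECT ".join(f"SELECT col0 FROM {n}" for n in names)
    # Subqueries make the grouping explicit: (A ∪ B ∪ C) minus (A ∩ B ∩ C).
    query = f"SELECT col0 FROM ({union}) EXCEPT SELECT col0 FROM ({inter})"
    rows = conn.execute(query).fetchall()
    conn.close()
    return sorted(r[0] for r in rows)


# Made-up ids for illustration only.
example = missing_from_some_sample([
    ["TIGR00001", "TIGR00002", "TIGR00003"],
    ["TIGR00001", "TIGR00002"],
    ["TIGR00001", "TIGR00004"],
])
```

Here only `TIGR00001` occurs in all three samples, so every other id is reported as missing from at least one of them.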

![Amazon [Werner Vogels, Amazon.com]](https://image.slidesharecdn.com/reproducibilityinthecloud-120428231214-phpapp02/75/Virtual-Appliances-Cloud-Computing-and-Reproducible-Research-59-2048.jpg)

![[Werner Vogels, Amazon.com]](https://image.slidesharecdn.com/reproducibilityinthecloud-120428231214-phpapp02/75/Virtual-Appliances-Cloud-Computing-and-Reproducible-Research-60-2048.jpg)

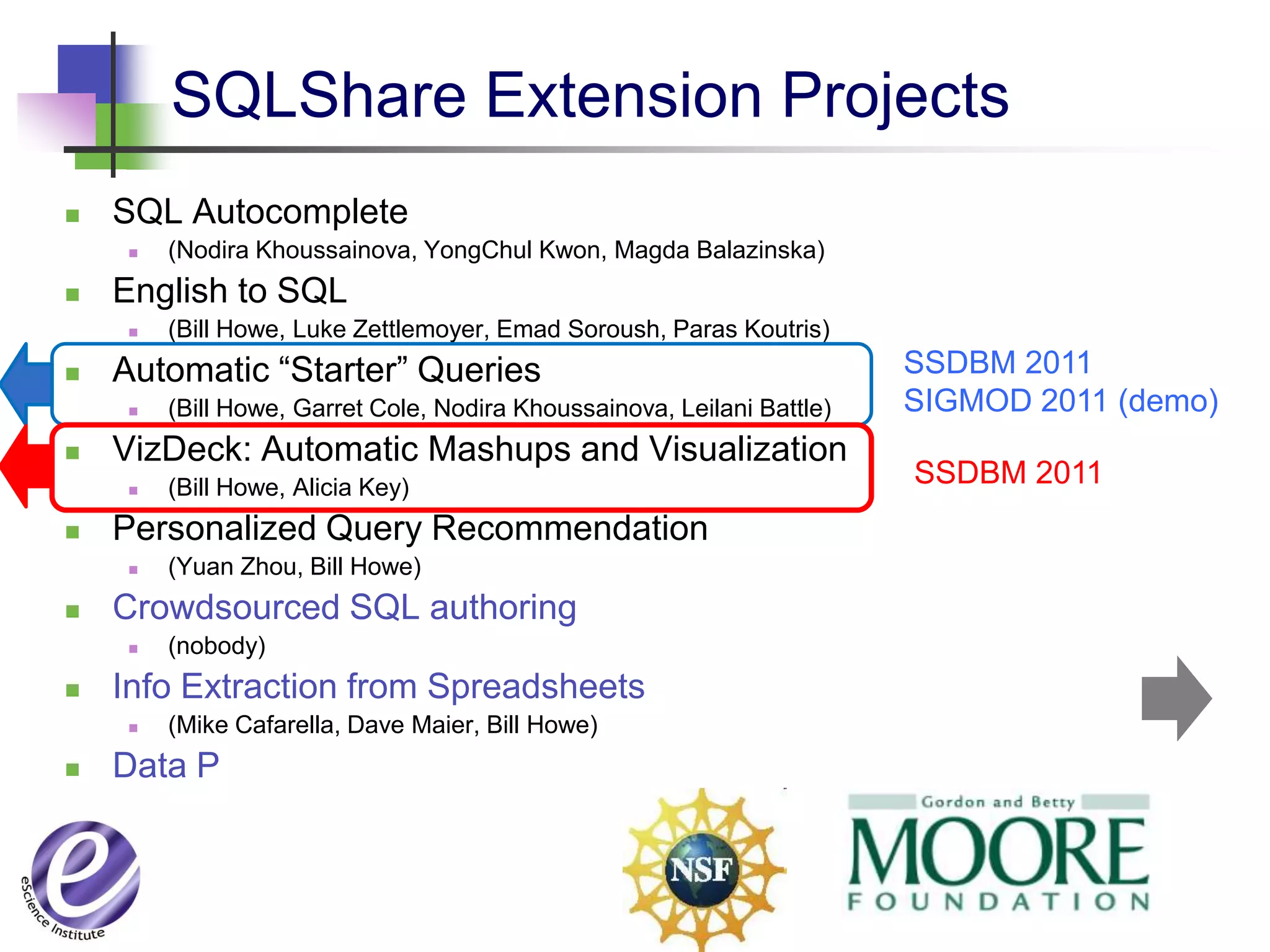

![Integrative Analysis [Howe 2010, 2011]. Pipeline: Files → Tables → Views → Visualizations, with the steps parse/extract, relational analysis, and visual analysis. File sources: SAS, Excel, XML, CSV. Citations: [Howe 2010], [Key 2011]. Platform: SQL Azure. Sites: sqlshare.escience.washington.edu, vizdeck.com](https://image.slidesharecdn.com/reproducibilityinthecloud-120428231214-phpapp02/75/Virtual-Appliances-Cloud-Computing-and-Reproducible-Research-64-2048.jpg)
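The Files → Tables → Views → Visualizations flow on the "Integrative Analysis" slide can be illustrated with a small sketch. It assumes a CSV file is loaded unchanged into a table and the analysis is then saved as a named view. It uses Python's standard `csv` and `sqlite3` modules in place of SQL Azure, and the file contents, the table and view names, and the `load_table` helper are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical CSV "file" contents; a real workflow would read an uploaded file.
CSV_TEXT = """sample,protein_id,abundance
s1,P1,10
s1,P2,5
s2,P1,7
"""


def load_table(conn, name, text):
    """Files -> Tables: parse CSV text and load it unchanged into a table."""
    rows = list(csv.reader(io.StringIO(text)))
    header, data = rows[0], rows[1:]
    cols = ", ".join(f'"{c}"' for c in header)
    marks = ", ".join("?" for _ in header)
    conn.execute(f'CREATE TABLE "{name}" ({cols})')
    conn.executemany(f'INSERT INTO "{name}" VALUES ({marks})', data)


conn = sqlite3.connect(":memory:")
load_table(conn, "measurements", CSV_TEXT)

# Tables -> Views: the relational analysis is stored as a named view.
conn.execute("""
    CREATE VIEW abundance_by_protein AS
    SELECT protein_id, SUM(CAST(abundance AS INTEGER)) AS total
    FROM measurements
    GROUP BY protein_id
""")

# Views -> Visualizations: these rows would be handed to a plotting tool.
result = conn.execute(
    "SELECT protein_id, total FROM abundance_by_protein ORDER BY protein_id"
).fetchall()
```

Because the analysis lives in a view rather than in a script that rewrites files, anyone with access to the same tables can rerun or extend it, which is the reproducibility point the deck makes about databases in the cloud.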