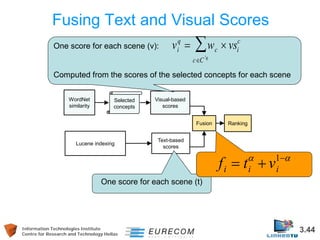

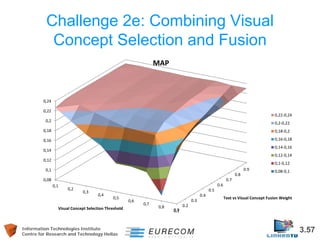

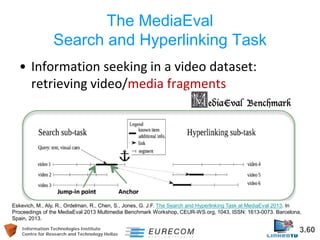

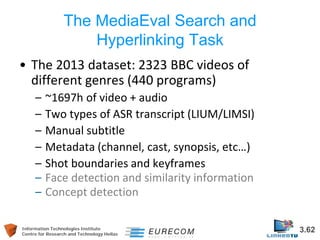

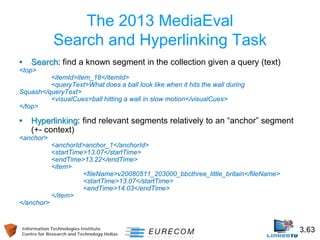

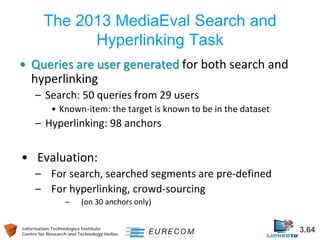

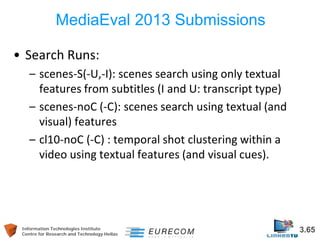

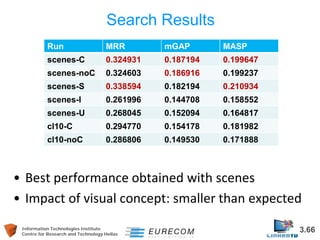

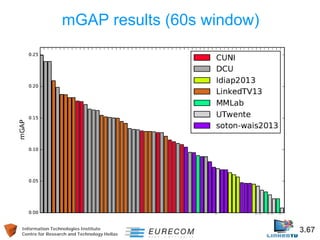

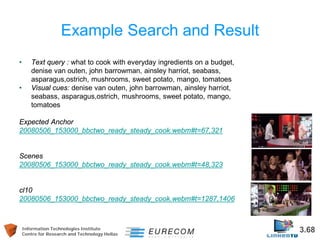

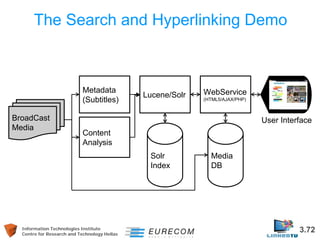

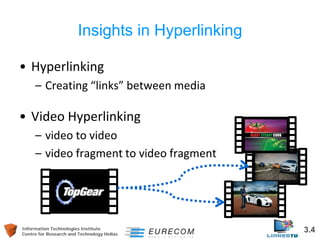

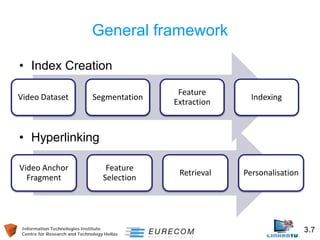

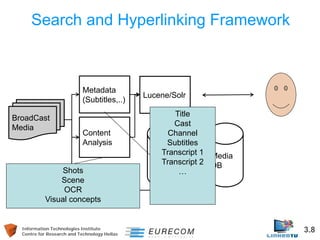

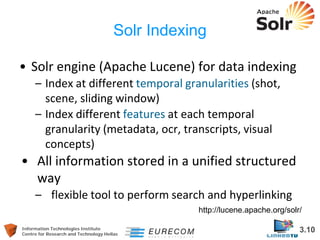

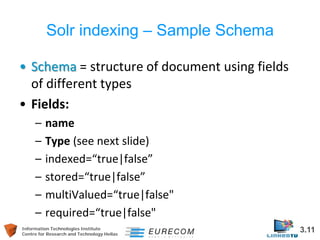

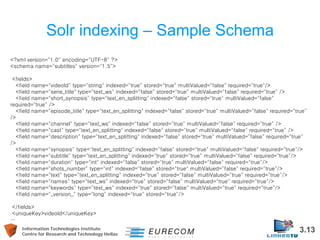

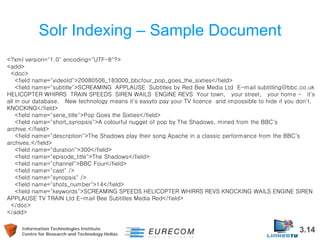

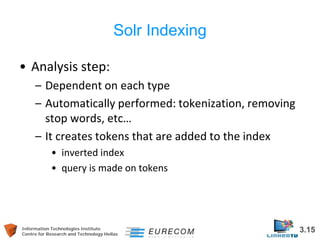

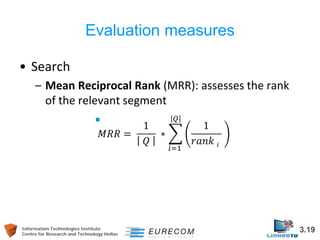

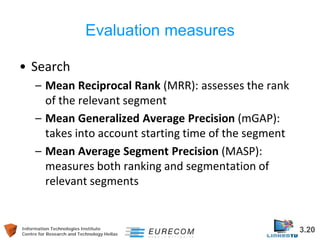

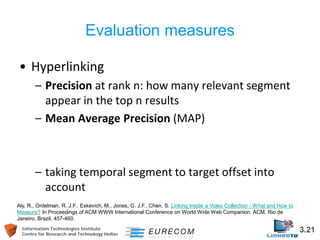

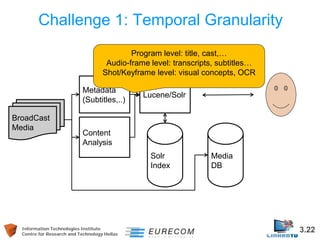

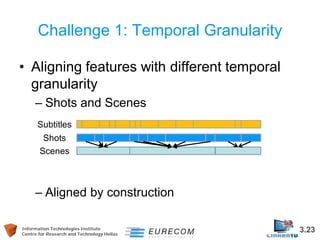

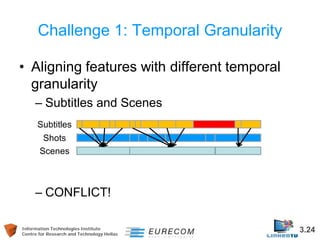

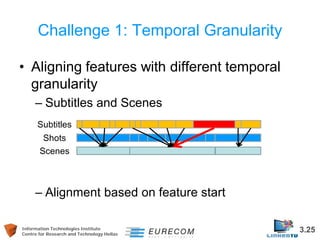

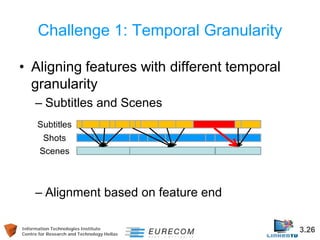

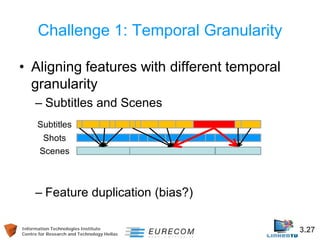

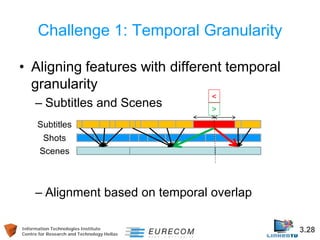

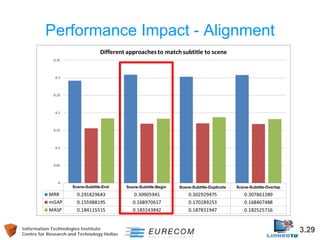

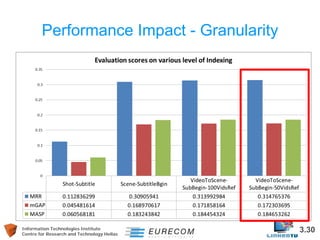

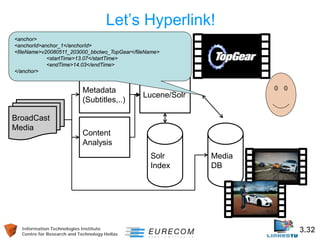

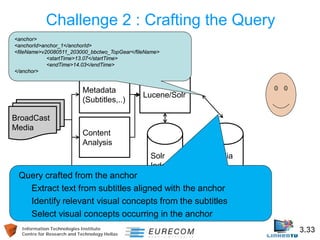

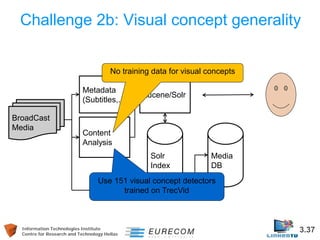

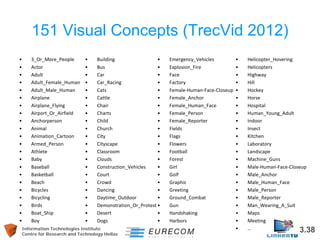

The document provides an overview of video hyperlinking: its motivation, its methods, and the challenges of automatically linking multimedia content. It covers indexing video data with systems such as Apache Solr and the metrics used to evaluate video search and hyperlinking accuracy. It also addresses specific technical challenges such as temporal granularity and formulating queries for effective video content retrieval.

![Information Technologies Institute 3.39

Centre for Research and Technology Hellas

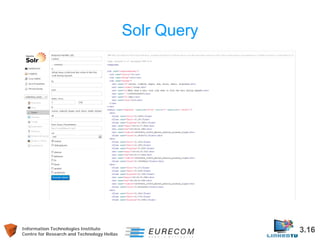

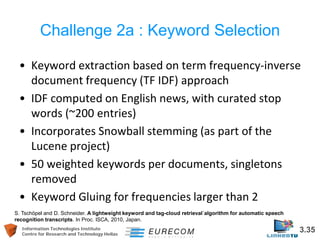

Solr Query

•

How to include the visual concepts in Solr?

–

Using float typed fields

–

<field name=“Animal" type=“float" indexed="true" stored=“true" multiValued=“false" required="true"/>

–

<field name=“Animal">0.74</field>

–

<field name=“Building">0.12</field>

•

Query can be made through http request

–

http://localhost:8983/solr/collection_mediaEval/select?q=text:(cow+in+a+farm)+Animal:[0.5+TO+1] +Building:[0.2+TO+1]](https://image.slidesharecdn.com/videohyperlinkingtutorialpartc-141104101318-conversion-gate02/85/Video-Hyperlinking-Tutorial-Part-C-39-320.jpg)
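To make the indexing step concrete, here is a minimal Python sketch that builds a Solr XML update message for one video segment, storing each visual concept in its own float field as on the slide. The field names `id` and `text`, the segment ID and the transcript string are hypothetical; the concept names and scores are the slide's. Because the schema line declares the concept field with `required="true"`, Solr would reject a document that omits it, so every document needs a score for every concept declared that way.

```python
import xml.etree.ElementTree as ET


def build_add_xml(doc_id: str, text: str, concepts: dict[str, float]) -> str:
    """Return a Solr XML update message (<add><doc>...</doc></add>) for one segment.

    "id" and "text" are assumed field names; adjust them to the actual schema.
    Each visual concept becomes its own float field, as in
    <field name="Animal">0.74</field>.
    """
    add = ET.Element("add")
    doc = ET.SubElement(add, "doc")
    ET.SubElement(doc, "field", name="id").text = doc_id
    ET.SubElement(doc, "field", name="text").text = text
    for concept, score in concepts.items():
        # str(float(...)) writes a plain decimal such as "0.74".
        ET.SubElement(doc, "field", name=concept).text = str(float(score))
    # encoding="unicode" returns a str without an XML declaration.
    return ET.tostring(add, encoding="unicode")


if __name__ == "__main__":
    print(build_add_xml("seg_001", "a cow grazing in a farm",
                        {"Animal": 0.74, "Building": 0.12}))
```

The resulting string could be POSTed to the collection's update handler (for example `http://localhost:8983/solr/collection_mediaEval/update?commit=true` with `Content-Type: text/xml`). Building it with ElementTree rather than string concatenation also escapes characters such as `&` and `<` that may appear in transcript text.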
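Rather than typing the query URL by hand, it can be generated. In the slide's URL the `+` characters are URL-encoded spaces, so Solr receives `text:(cow in a farm) Animal:[0.5 TO 1] Building:[0.2 TO 1]`. Assuming the default configuration, the standard query parser combines these clauses with `OR`: a document matching any one clause is returned, and documents matching more clauses generally rank higher. The sketch below builds the same request with `urllib.parse.urlencode`; the `require_all` flag is a hypothetical addition that prefixes each clause with Lucene's mandatory `+` operator, which has to be sent percent-encoded as `%2B`.

```python
from urllib.parse import urlencode


def build_select_url(base: str, text_query: str,
                     concept_ranges: dict[str, tuple[float, float]],
                     require_all: bool = False) -> str:
    """Build a Solr /select URL combining a text query with concept-score ranges.

    "text" is the field name used on the slide; require_all is a hypothetical
    option that marks every clause as mandatory with Lucene's "+" operator.
    """
    prefix = "+" if require_all else ""
    clauses = [f"{prefix}text:({text_query})"]
    for concept, (low, high) in concept_ranges.items():
        # Inclusive range query, e.g. Animal:[0.5 TO 1]
        clauses.append(f"{prefix}{concept}:[{low} TO {high}]")
    # urlencode percent-encodes ':', '(', '[' and '+', and turns spaces into '+'.
    return f"{base}/select?{urlencode({'q': ' '.join(clauses)})}"


if __name__ == "__main__":
    print(build_select_url("http://localhost:8983/solr/collection_mediaEval",
                           "cow in a farm",
                           {"Animal": (0.5, 1), "Building": (0.2, 1)}))
```

The generated URL is equivalent to the slide's, only more strictly encoded, and could be fetched with `urllib.request.urlopen(url)`. When the concept ranges should act as hard constraints without affecting scoring, Solr's `fq` (filter query) parameter is an alternative to mandatory clauses in `q`.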