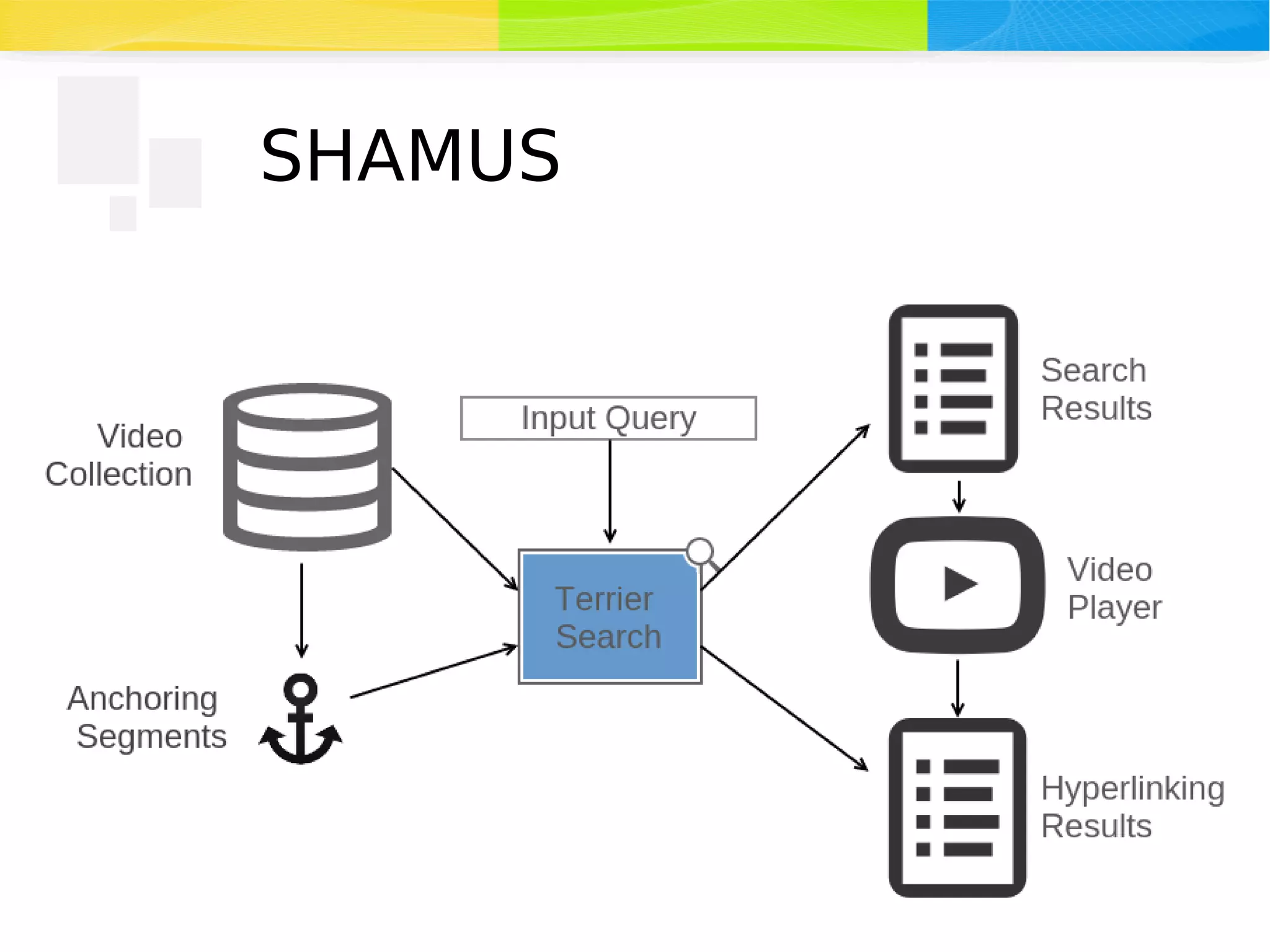

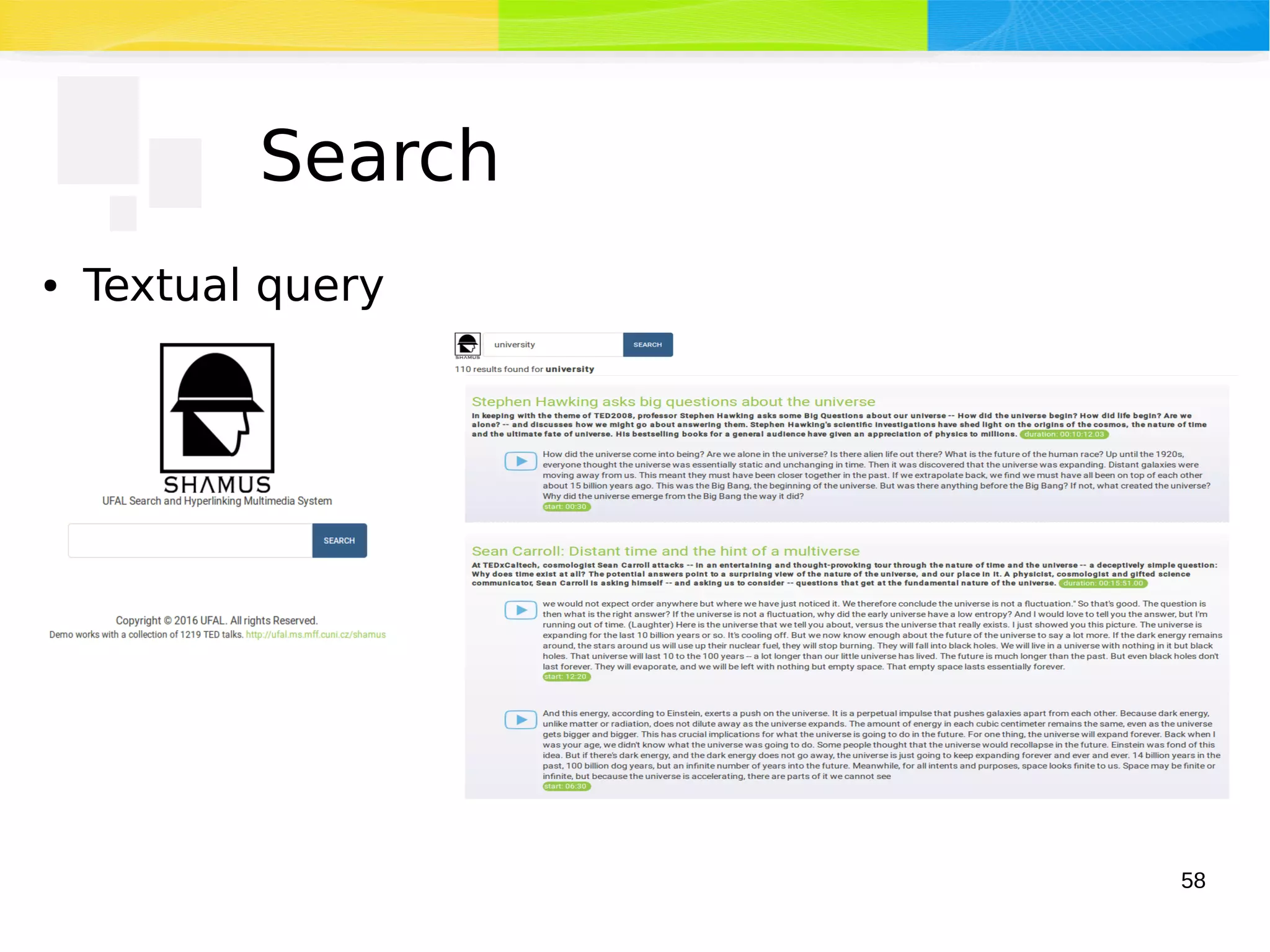

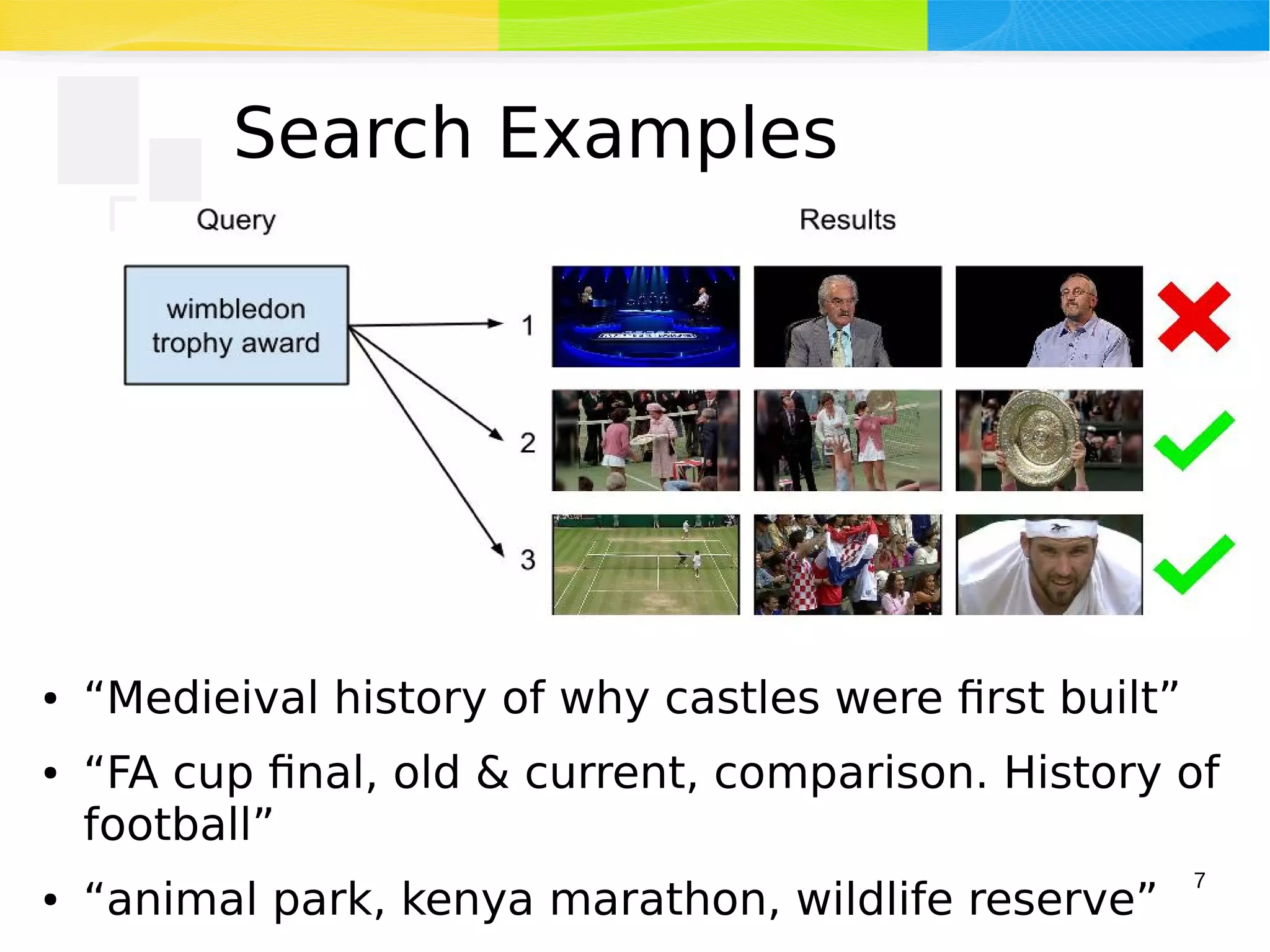

The document discusses advancements in multimedia retrieval, focusing on effective methods for linking and retrieving video content from vast collections of audio-visual documents. It emphasizes the integration of speech, acoustic, and visual information to enhance the search and hyperlinking capabilities in multimedia environments, and outlines techniques for content-based retrieval. The system described, Shamus, facilitates text-based search and hyperlinking in video content by processing metadata and user queries.

![Slide 11: Recommender Systems](https://image.slidesharecdn.com/aplikace-galuscakova-170512102918/75/Multimodal-Features-for-Linking-Television-Content-11-2048.jpg)

Recommender Systems

● Focused on entertainment

● YouTube

● Generated by using a user’s personal activity (watched, favourited, liked videos) [*]

● TED Talks

● Related talks manually selected by the editors

[*] James Davidson, Benjamin Liebald, Junning Liu, Palash Nandy, Taylor Van Vleet, Ullas Gargi, Sujoy Gupta, Yu He, Mike Lambert, Blake Livingston, and Dasarathi Sampath. 2010. The YouTube video recommendation system. In Proc. of RecSys '10. ACM, New York, NY, USA, 293-296.
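
The slide says that YouTube recommendations are generated from a user's personal activity (watched, favourited, liked videos). The following is a minimal sketch of one way activity logs can drive recommendations: it counts how often two videos occur in the same user's activity (co-visitation) and ranks unseen videos by that count. This is an illustrative assumption, not YouTube's production system; the function names `build_covisitation` and `recommend`, the video ids, and the sample logs are all hypothetical.

```python
from collections import Counter
from itertools import combinations


def build_covisitation(sessions):
    """Count how often each ordered pair of videos shares a user's activity log."""
    counts = Counter()
    for videos in sessions:
        # Deduplicate so a video repeated in one log is counted once.
        for a, b in combinations(sorted(set(videos)), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts


def recommend(seed_videos, counts, top_n=3):
    """Rank videos outside the seed set by total co-visitation with the seeds."""
    seeds = set(seed_videos)
    scores = Counter()
    for (a, b), c in counts.items():
        if a in seeds and b not in seeds:
            scores[b] += c
    # Sort by score (descending), then by id so the order is deterministic.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [video for video, _ in ranked[:top_n]]


# Hypothetical logs: each list holds one user's watched/liked/favourited videos.
sessions = [
    ["v1", "v2", "v3"],
    ["v1", "v2"],
    ["v2", "v4"],
]
counts = build_covisitation(sessions)
print(recommend(["v1"], counts))  # ['v2', 'v3']
```

By contrast, the TED Talks approach on the slide needs no such computation, since related talks are selected manually by editors.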