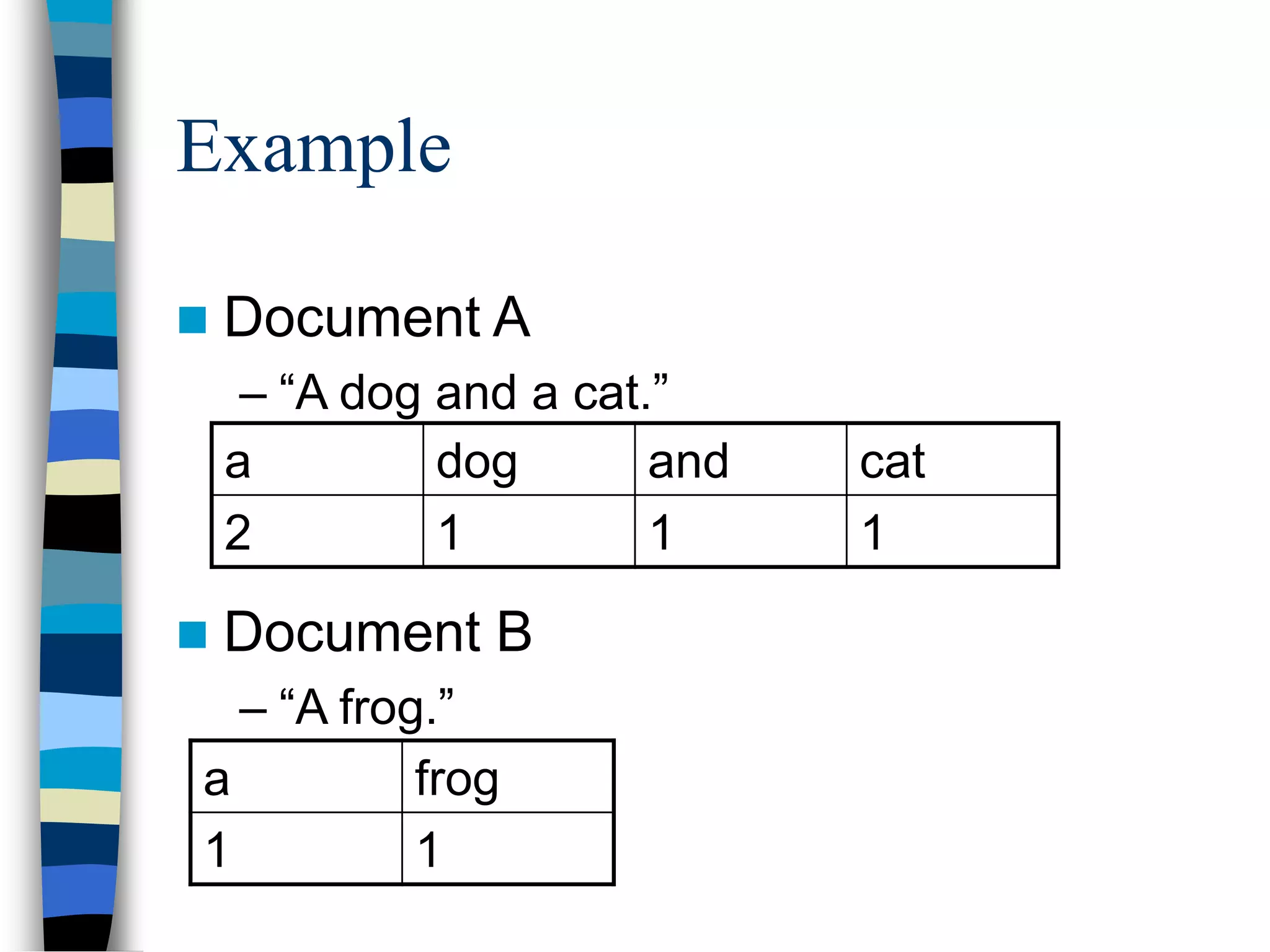

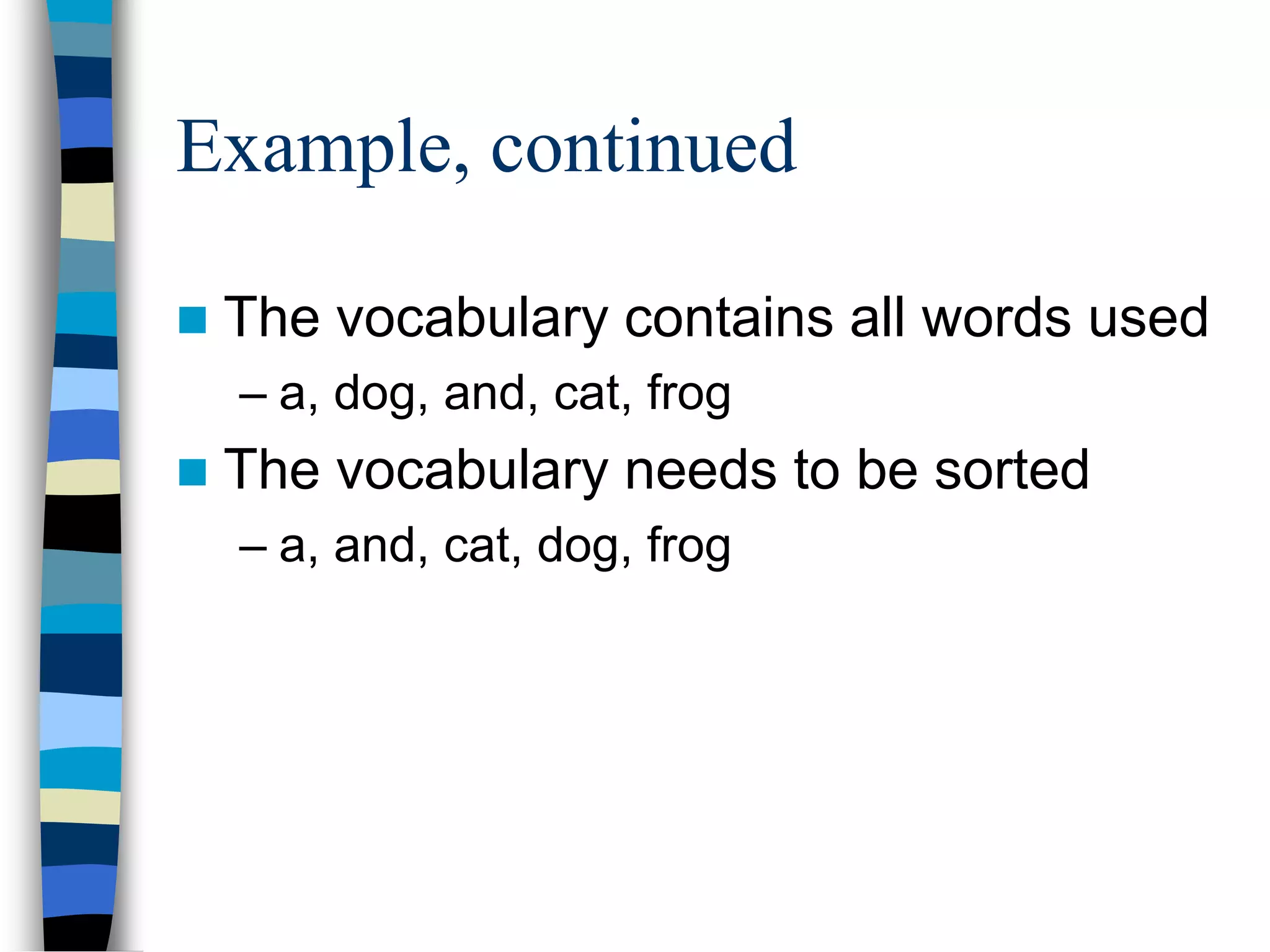

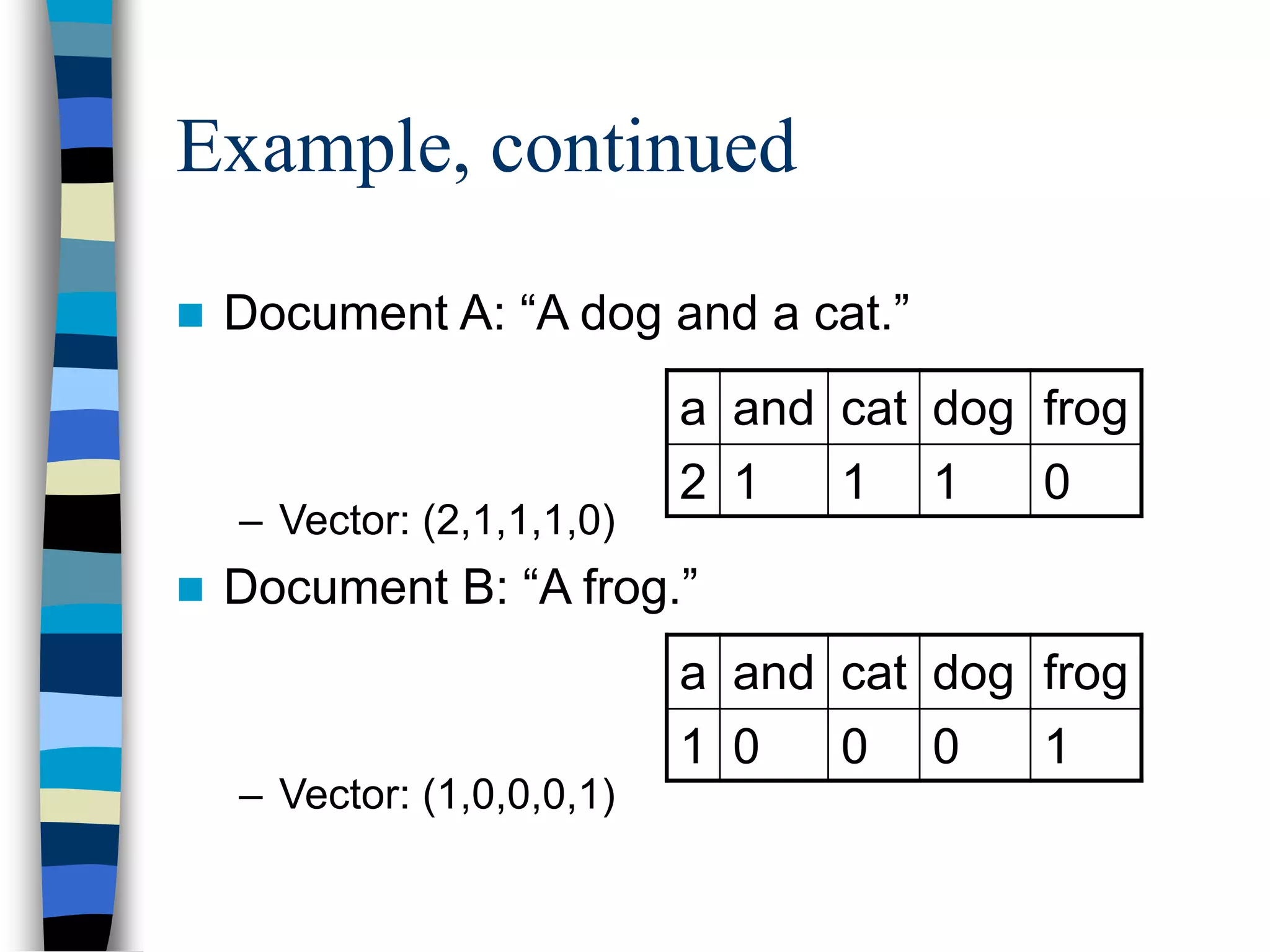

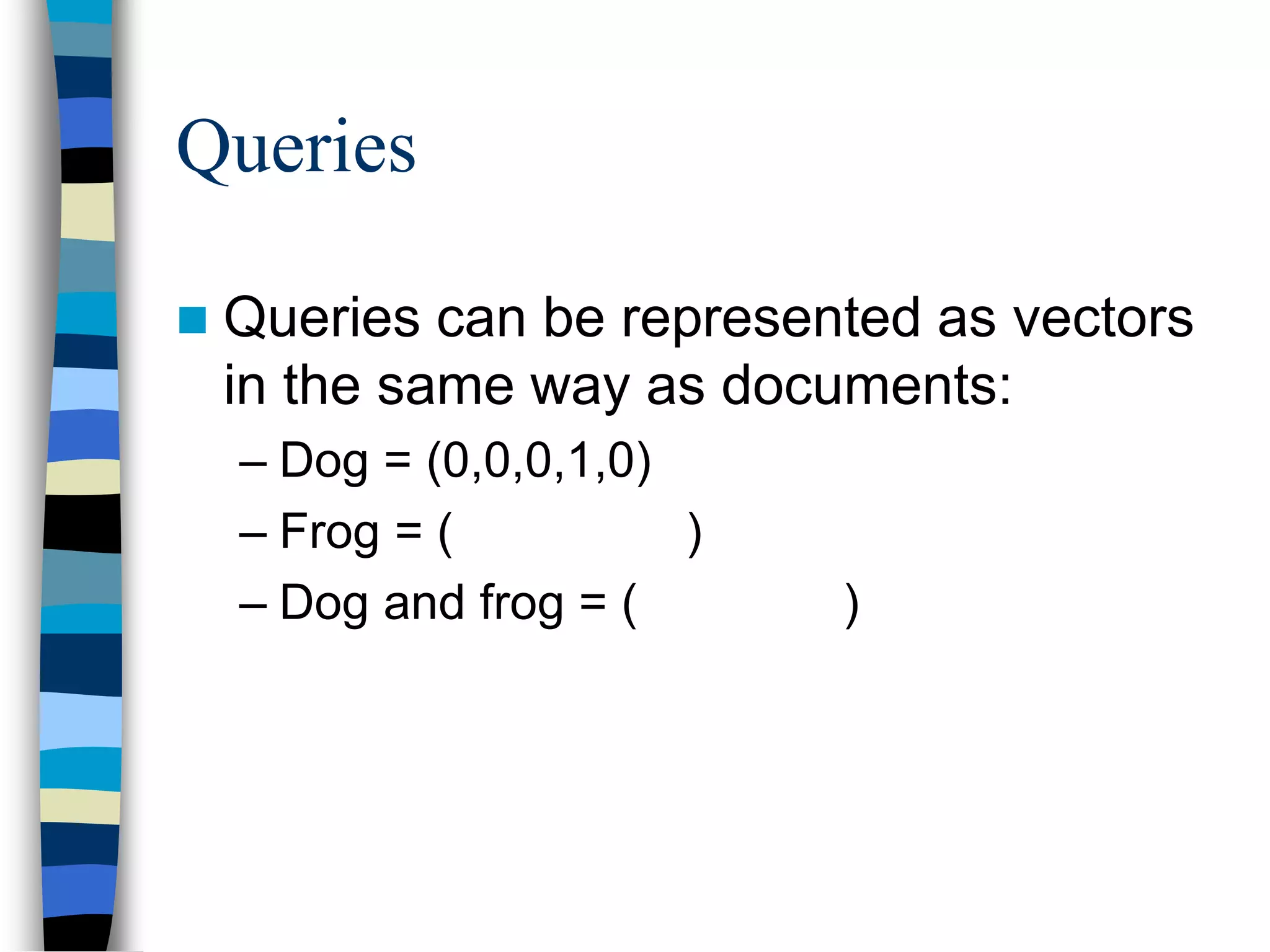

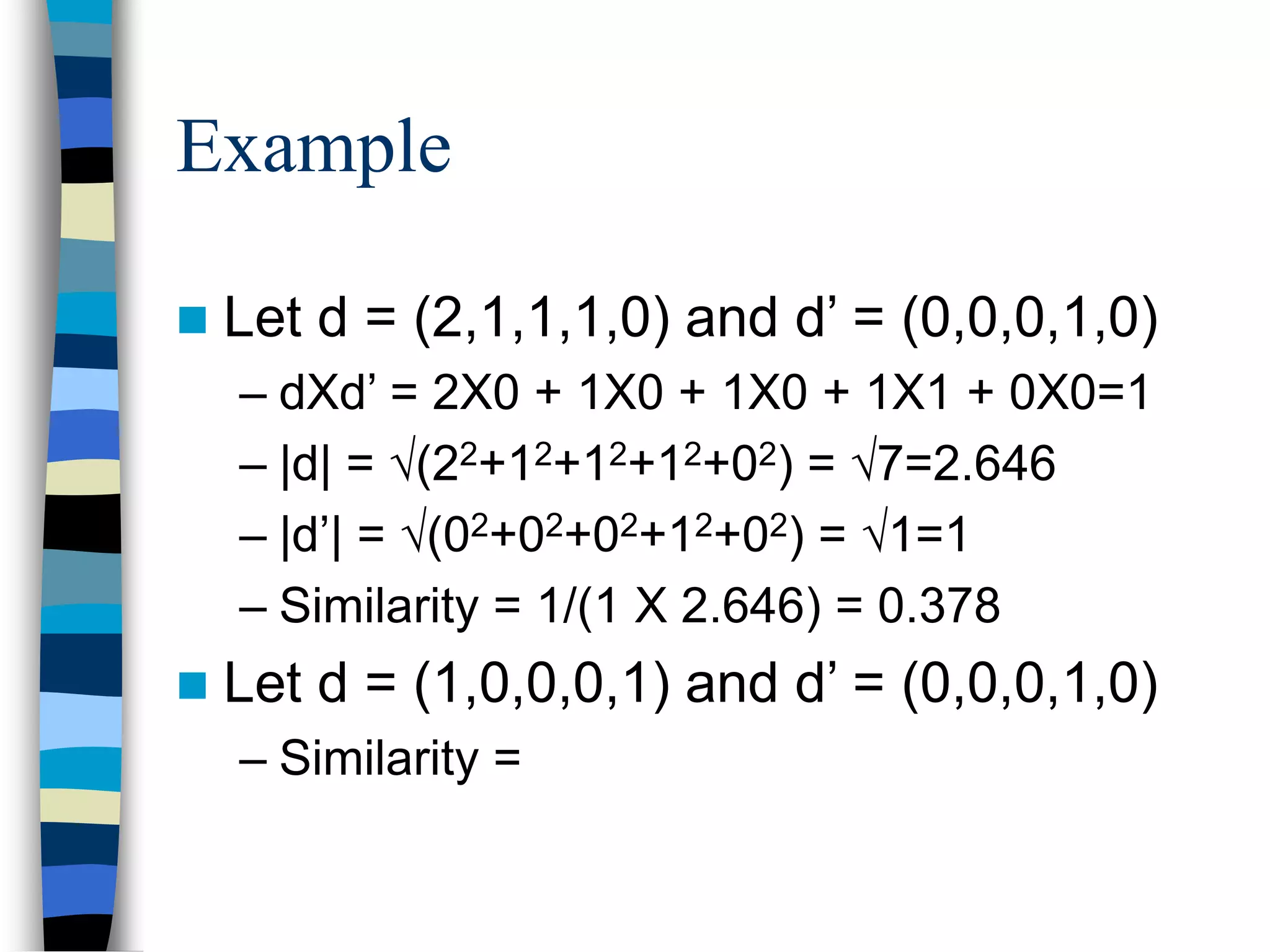

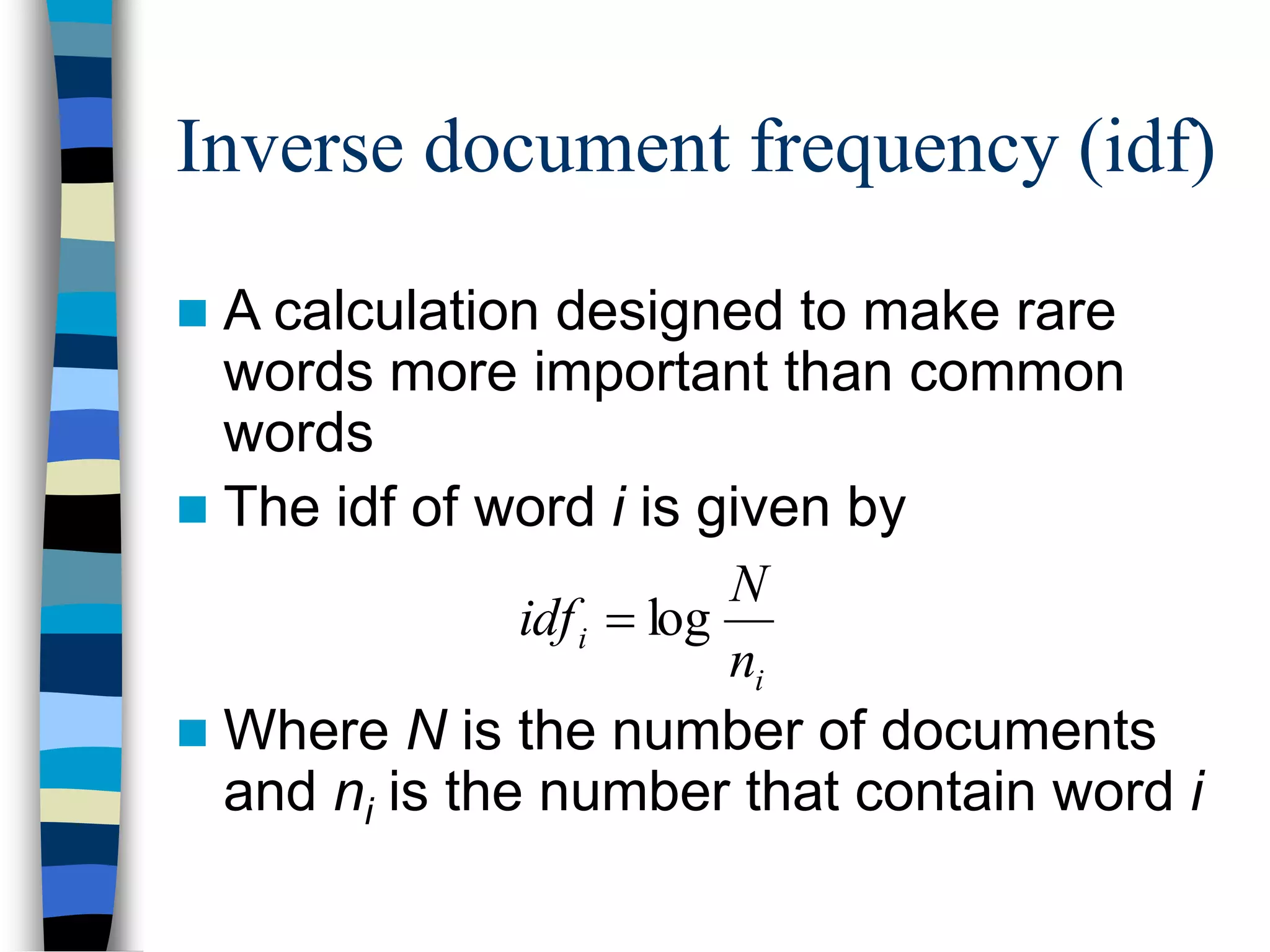

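The vector space model (VSM) represents documents as vectors of term identifiers such as words. Each unique term in the vocabulary corresponds to one dimension, and a document's value along that dimension reflects how often the term occurs in it. Queries are represented as vectors in the same space. A similarity measure such as cosine similarity (the cosine of the angle between two vectors) compares the query vector with each document vector, so the most similar documents can be ranked highest and retrieved. Variations of the basic VSM include removing common words (stop words) that carry little meaning, and weighting terms by both their frequency within a document and their distribution across the collection. The most widely used such weighting is tf-idf (term frequency–inverse document frequency), which emphasizes words that are frequent in a document but rare in the collection as a whole.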
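The following is a minimal sketch of the retrieval pipeline described above, using only the Python standard library. It makes several assumptions. Tokenization is plain lowercase whitespace splitting. `STOP_WORDS` is a small hypothetical list. The weighting is one common tf-idf variant (raw term count × log(N / df)). Real systems differ in tokenization, weighting, and normalization, and all function names here are illustrative.

```python
import math
from collections import Counter

# Hypothetical toy stop-word list (assumption); real systems use larger, curated lists.
STOP_WORDS = {"the", "a", "an", "of", "and", "is", "to", "in"}


def tokenize(text, remove_stop_words=True):
    """Lowercase, split on whitespace, and optionally drop stop words."""
    tokens = text.lower().split()
    if remove_stop_words:
        tokens = [t for t in tokens if t not in STOP_WORDS]
    return tokens


def build_idf(docs_tokens):
    """Map each term to idf = log(N / df), where df counts documents containing it."""
    n_docs = len(docs_tokens)
    df = Counter()
    for tokens in docs_tokens:
        df.update(set(tokens))  # count each term at most once per document
    return {term: math.log(n_docs / count) for term, count in df.items()}


def tfidf_vector(tokens, idf):
    """Sparse vector {term: raw count * idf}; terms absent from the collection get 0."""
    tf = Counter(tokens)
    return {term: count * idf.get(term, 0.0) for term, count in tf.items()}


def cosine(u, v):
    """Cosine of the angle between two sparse vectors; 0.0 if either is all zeros."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def rank(query, docs):
    """Return (doc_index, score) pairs sorted from most to least similar."""
    docs_tokens = [tokenize(d) for d in docs]
    idf = build_idf(docs_tokens)
    doc_vectors = [tfidf_vector(tokens, idf) for tokens in docs_tokens]
    query_vector = tfidf_vector(tokenize(query), idf)
    scores = [(i, cosine(query_vector, dv)) for i, dv in enumerate(doc_vectors)]
    # sorted() is stable, so tied documents keep their original order
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


docs = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "stock markets fell sharply today",
]
for index, score in rank("cat on mat", docs):
    print(index, round(score, 3))
```

With these toy documents, the first document ranks highest. The third shares no terms with the query and scores 0. Under this idf variant, a term that occurs in every document gets a weight of log(1) = 0, so it is effectively ignored, much like a stop word.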