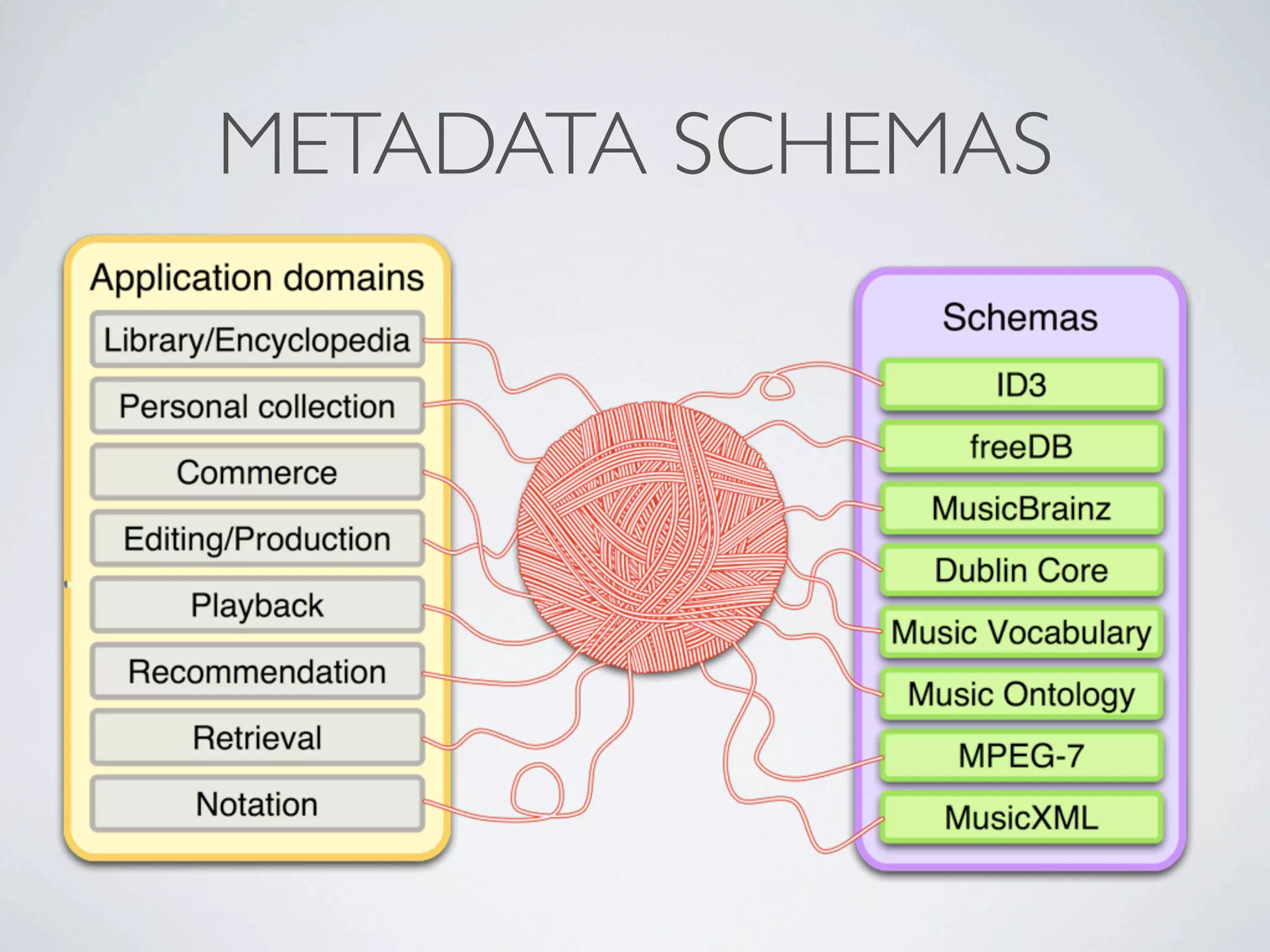

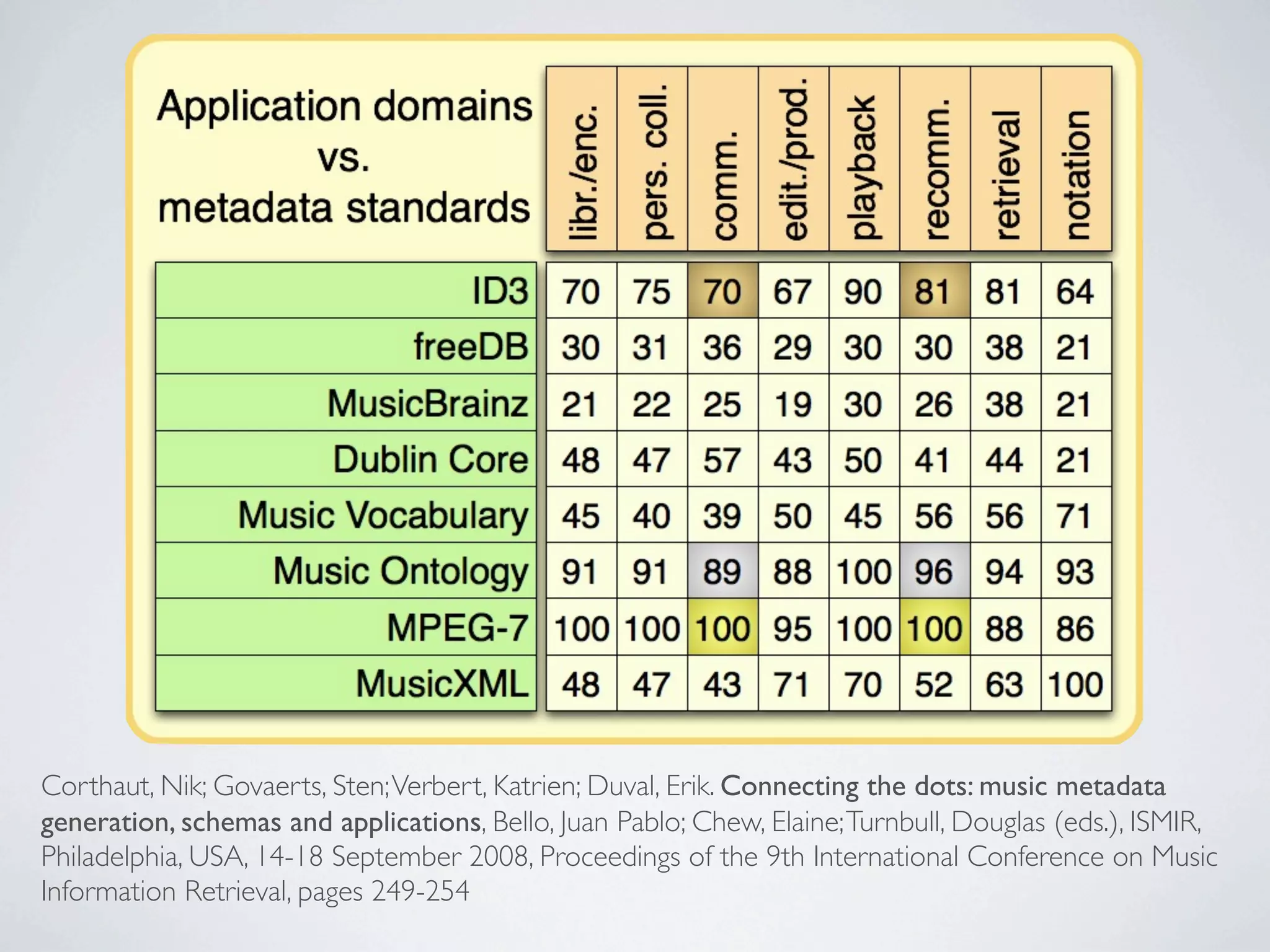

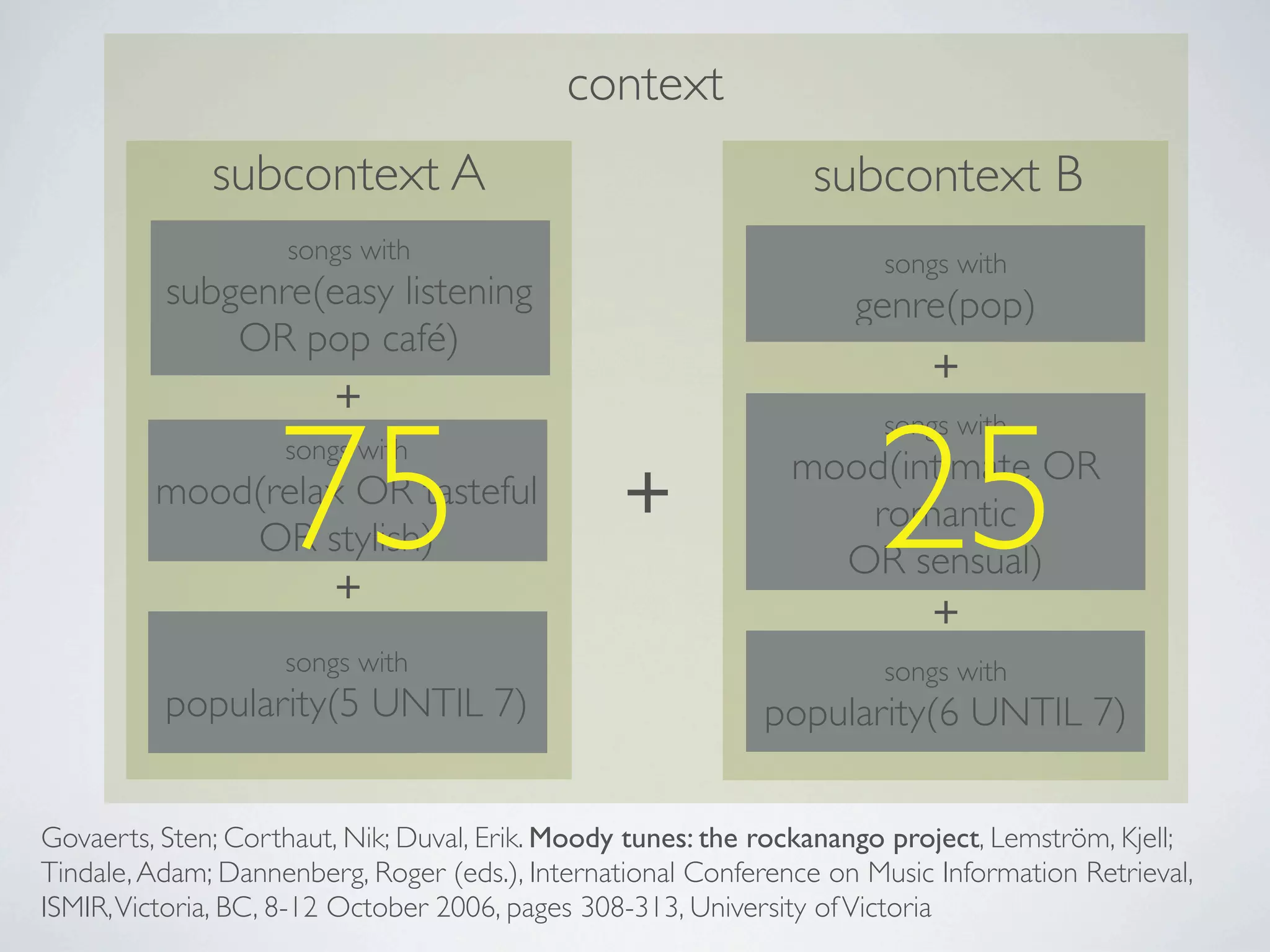

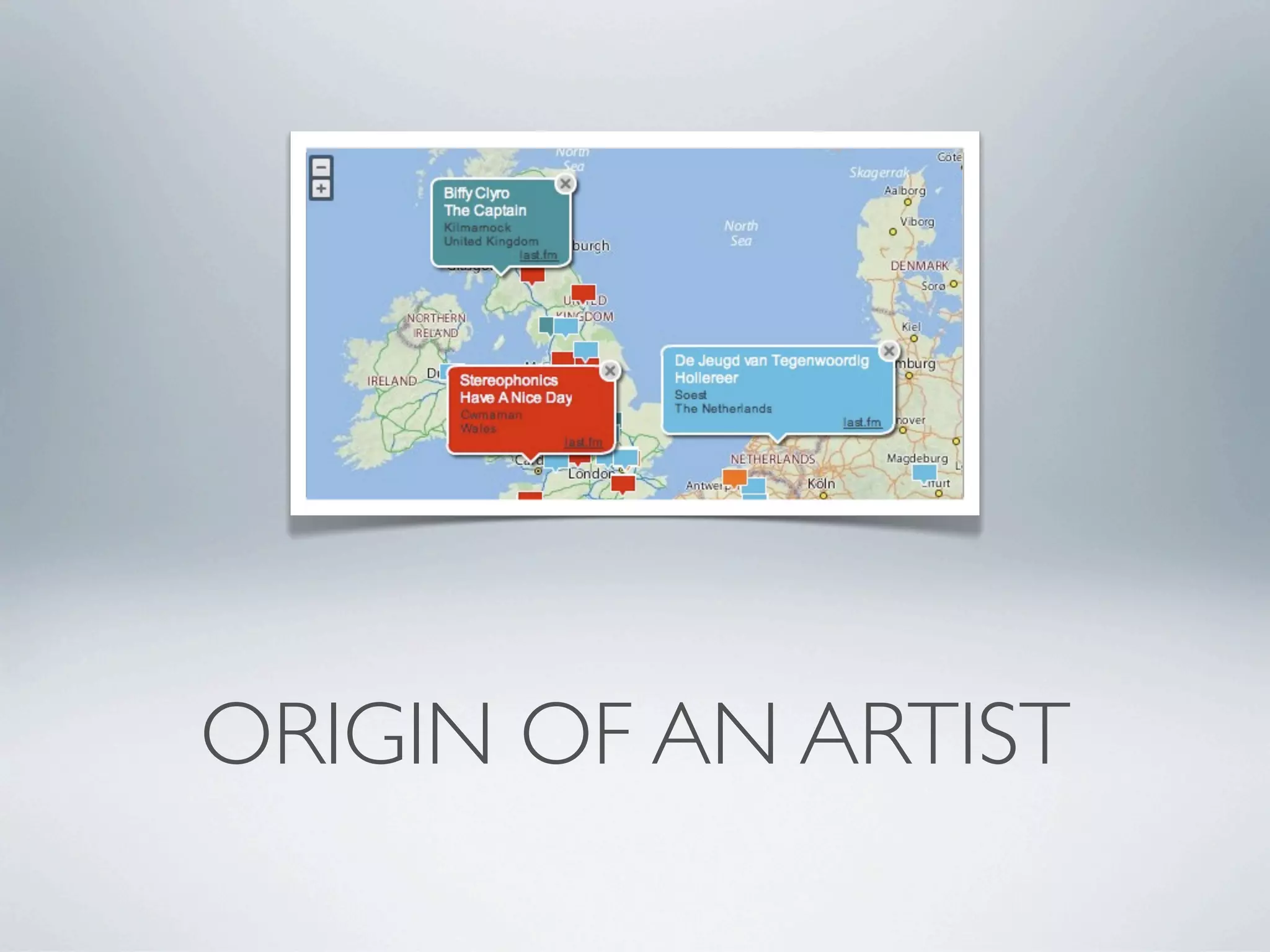

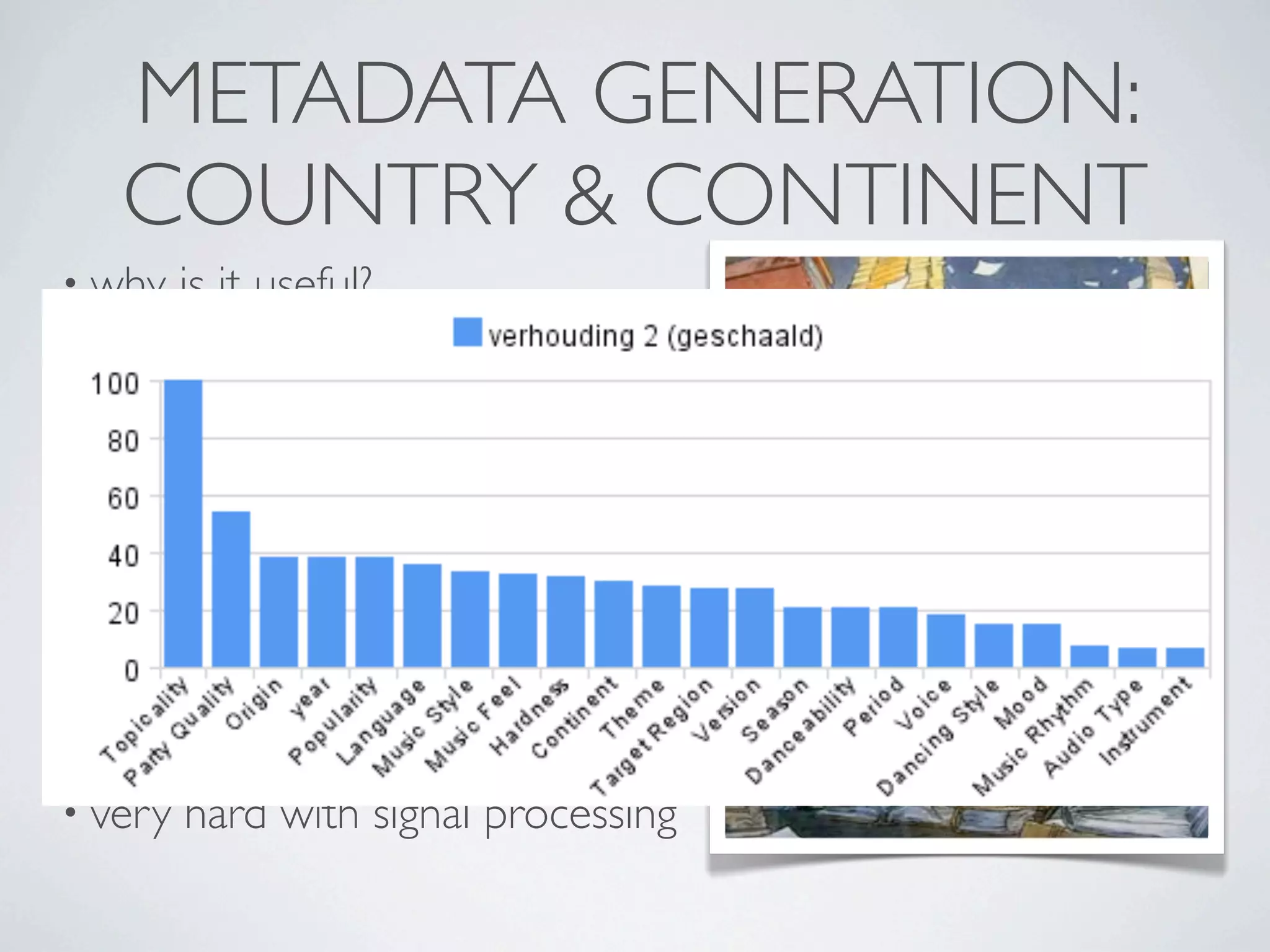

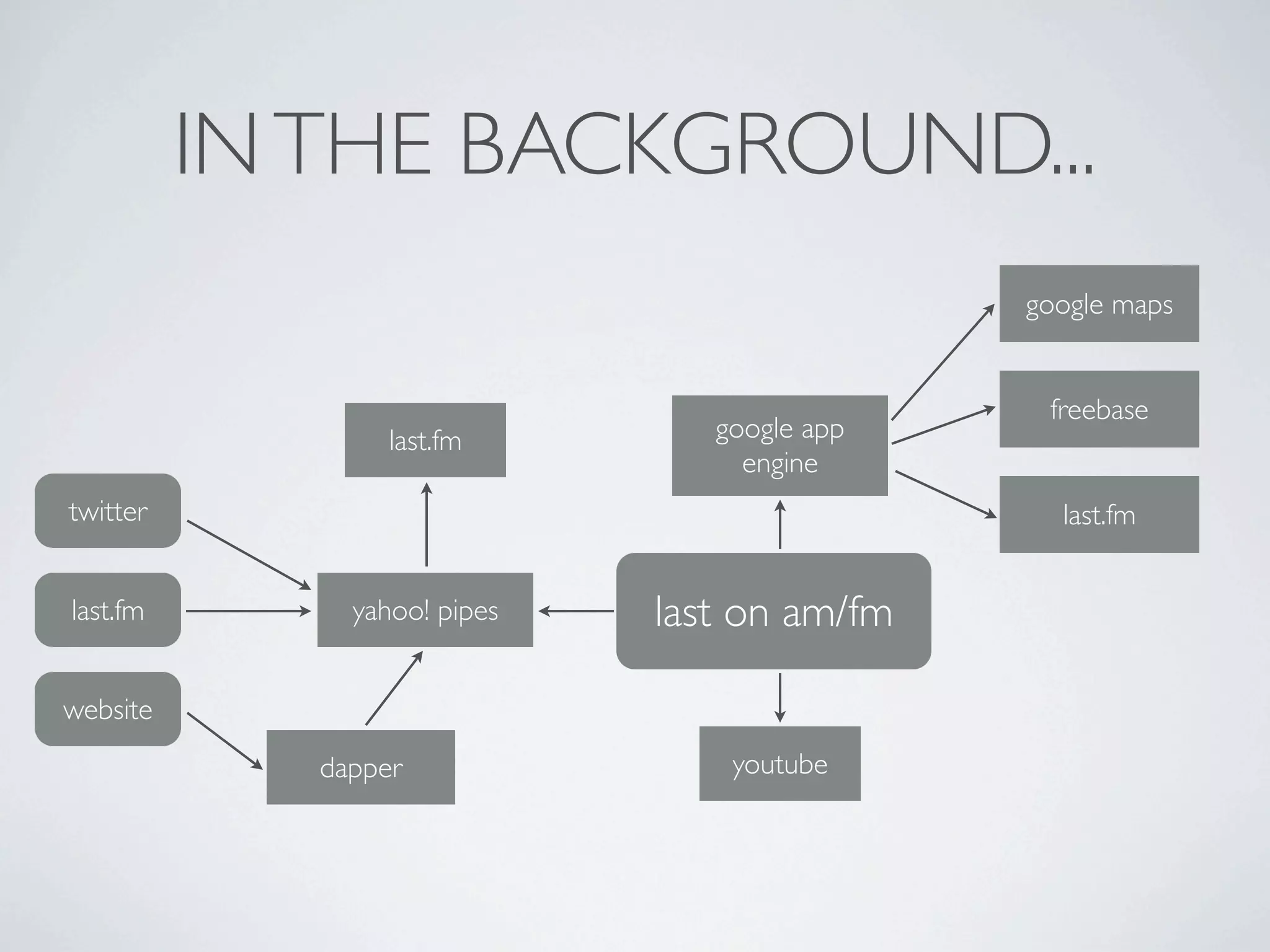

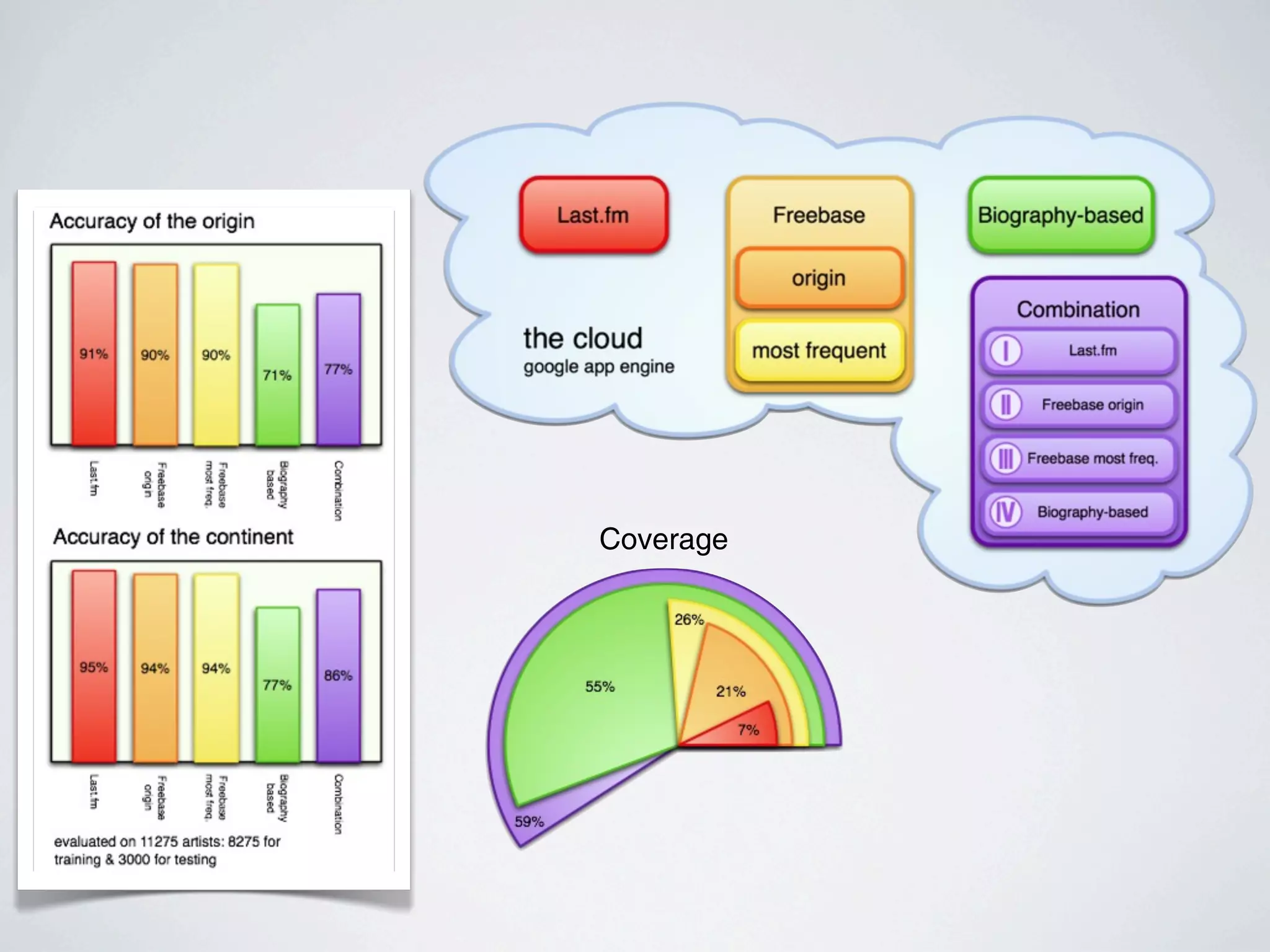

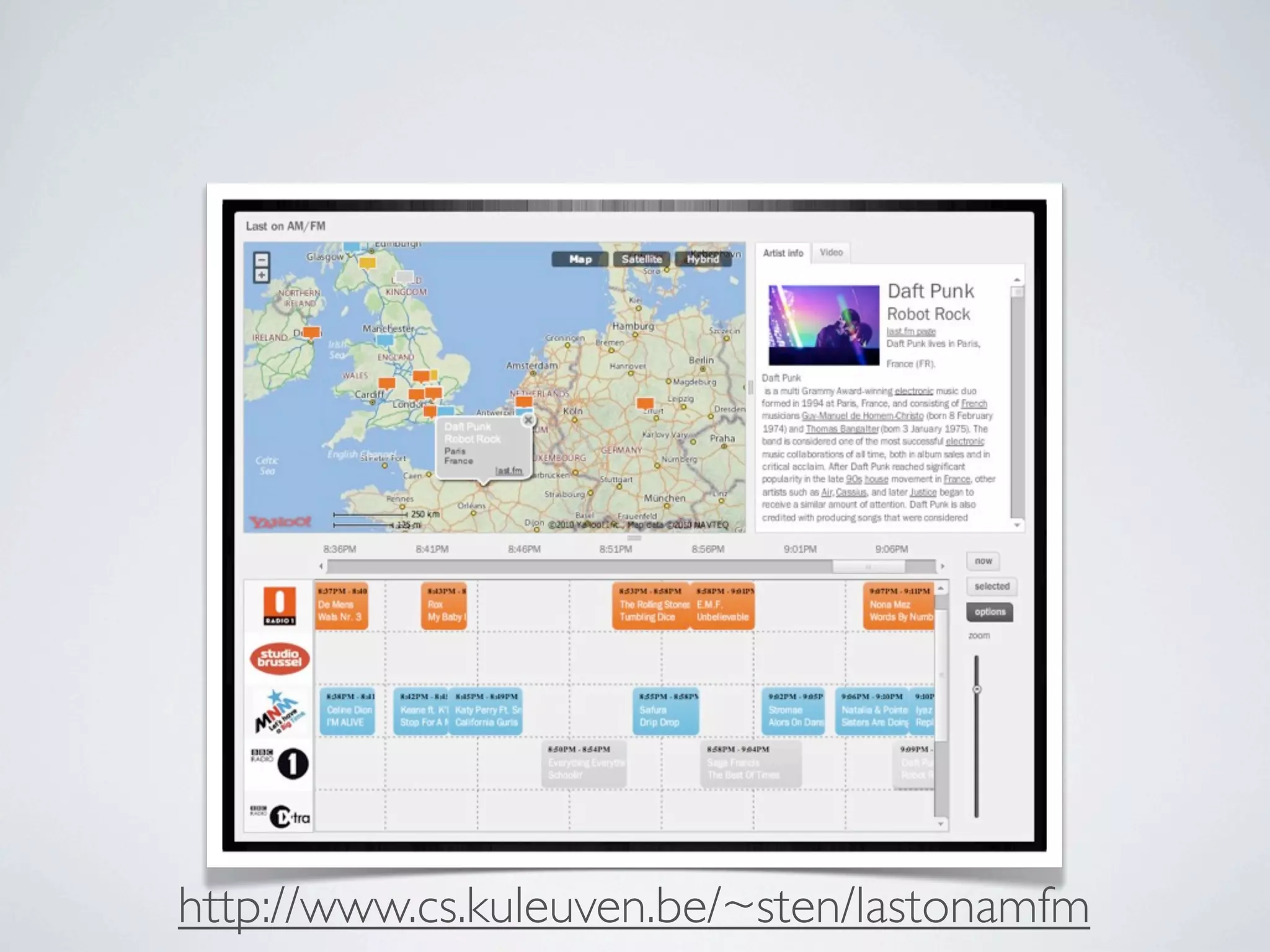

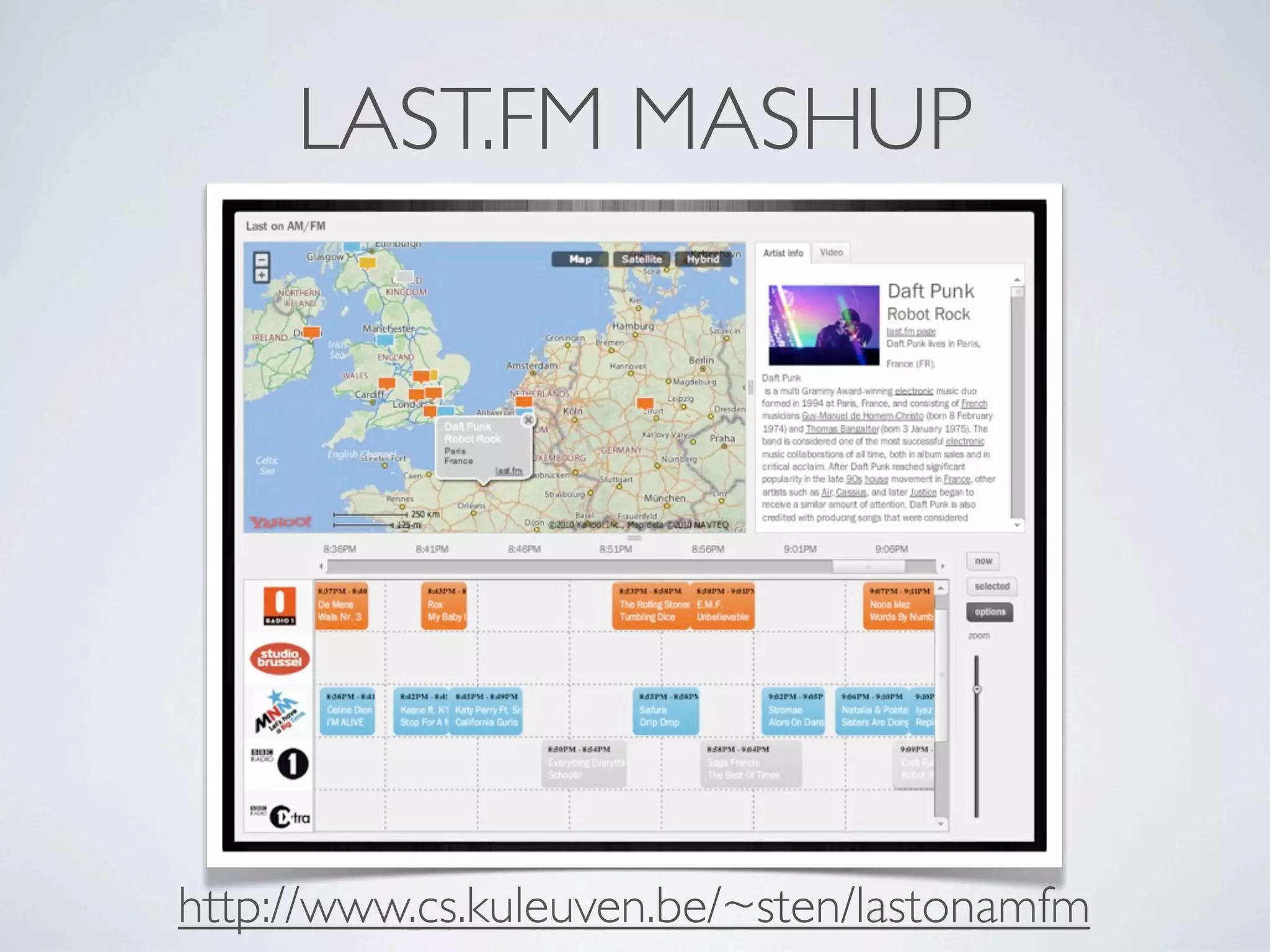

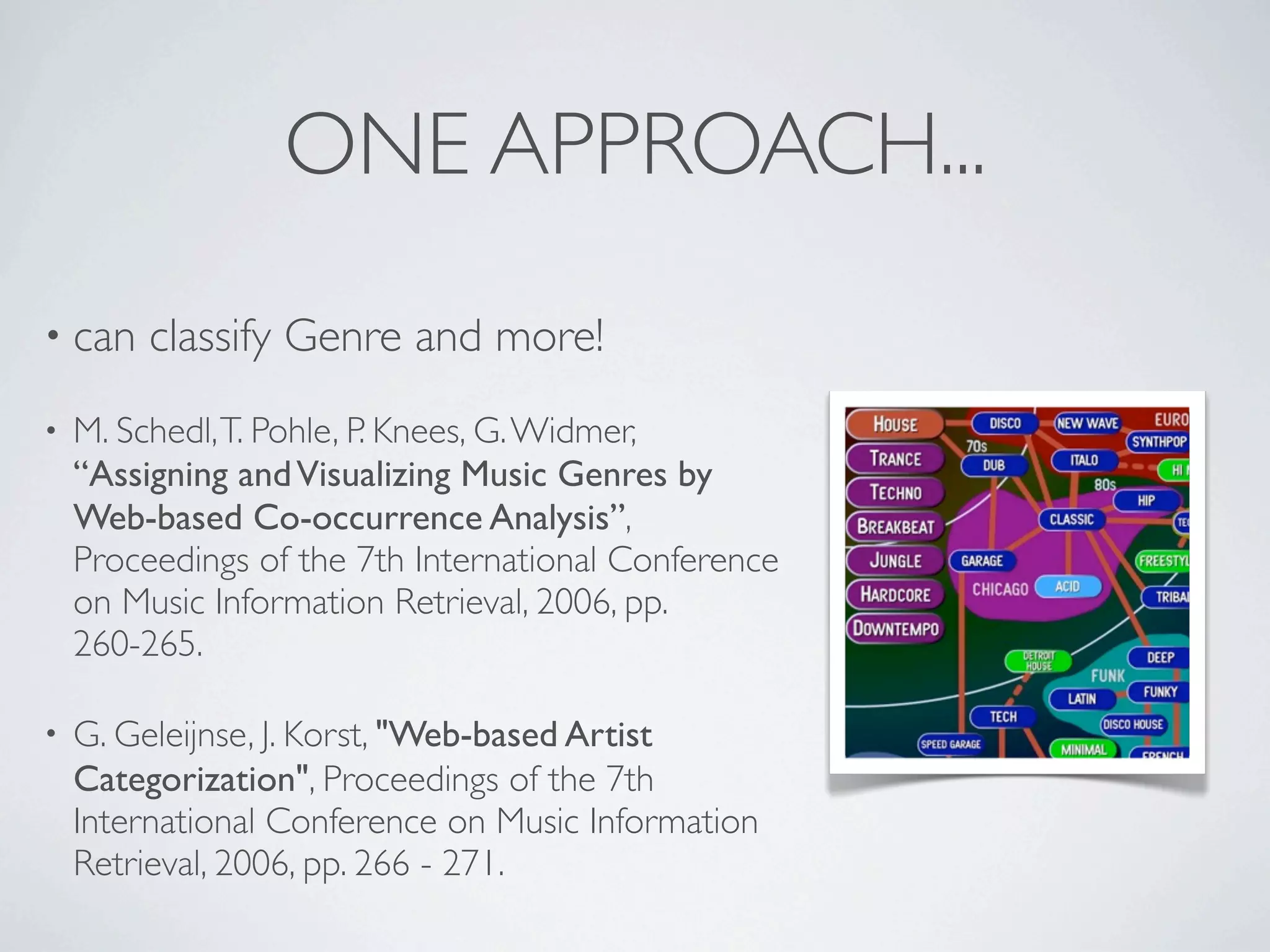

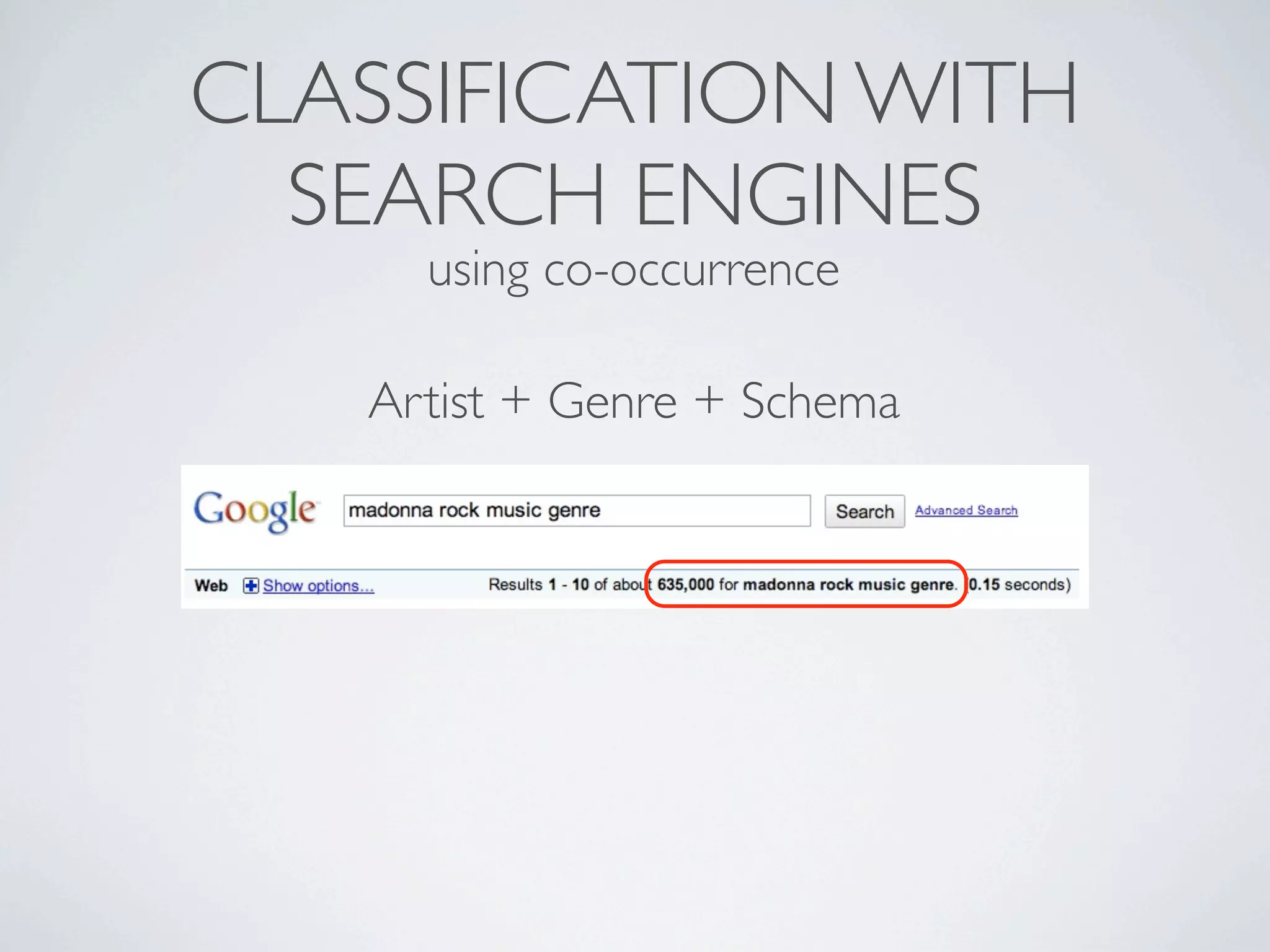

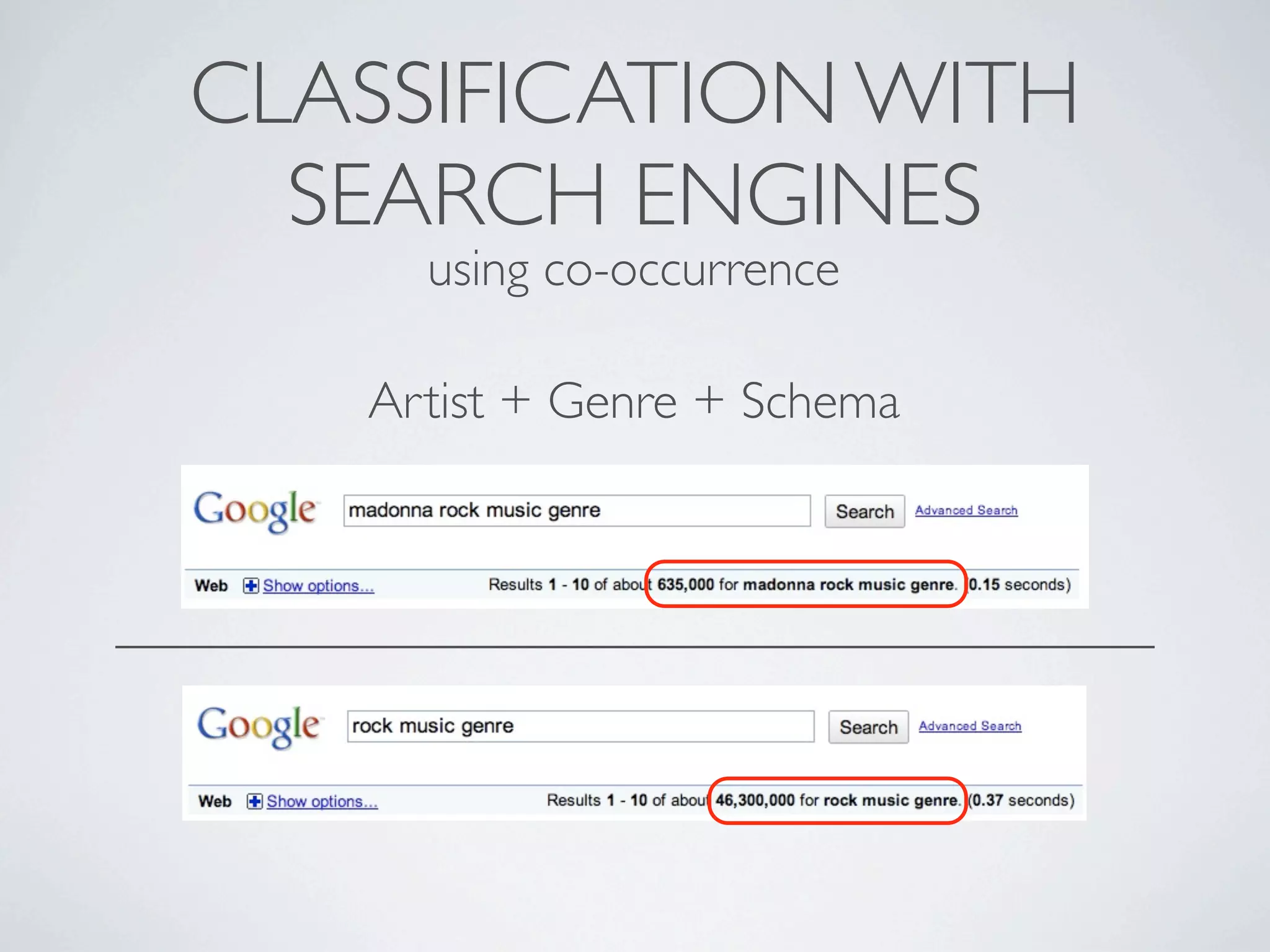

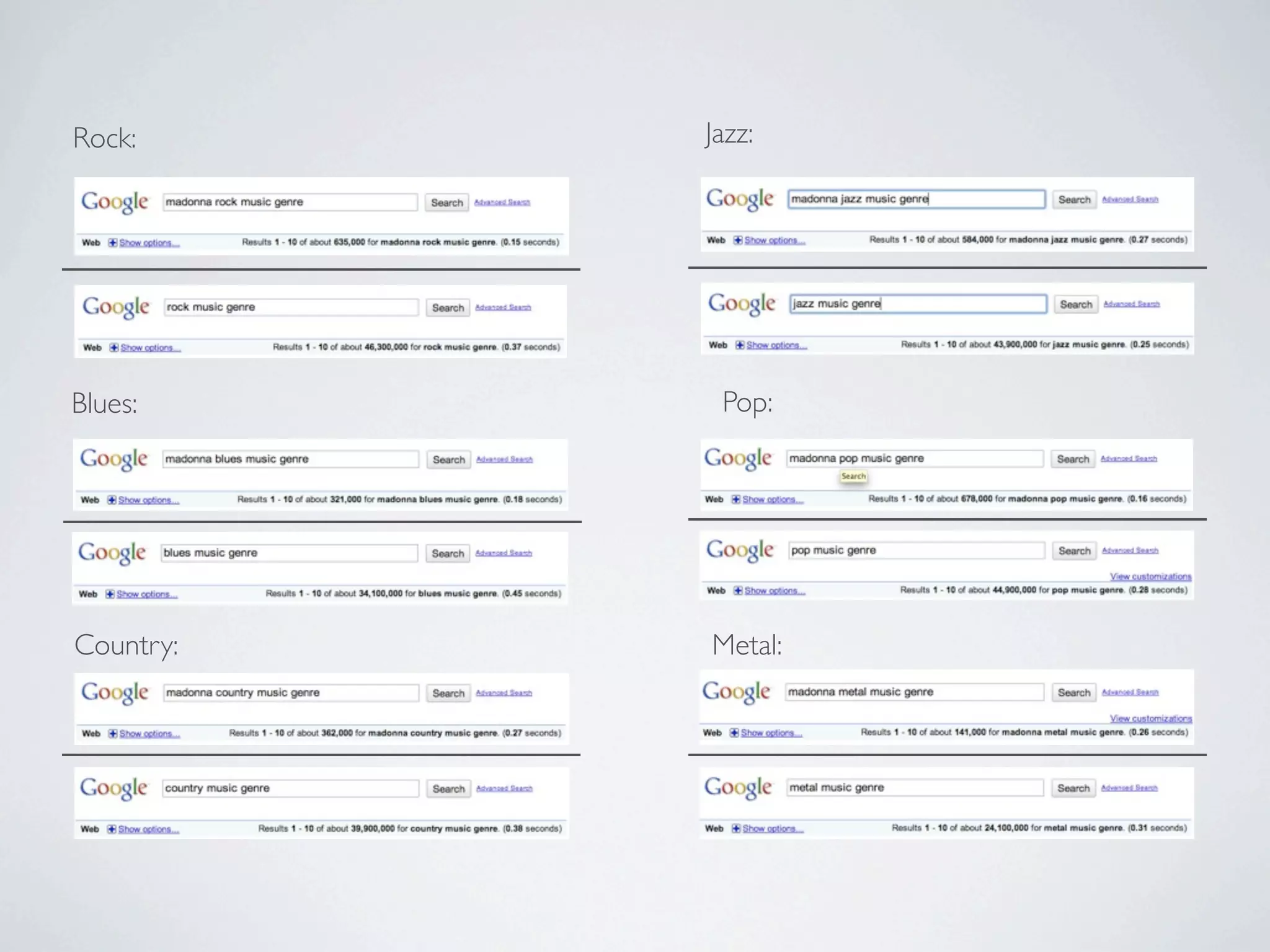

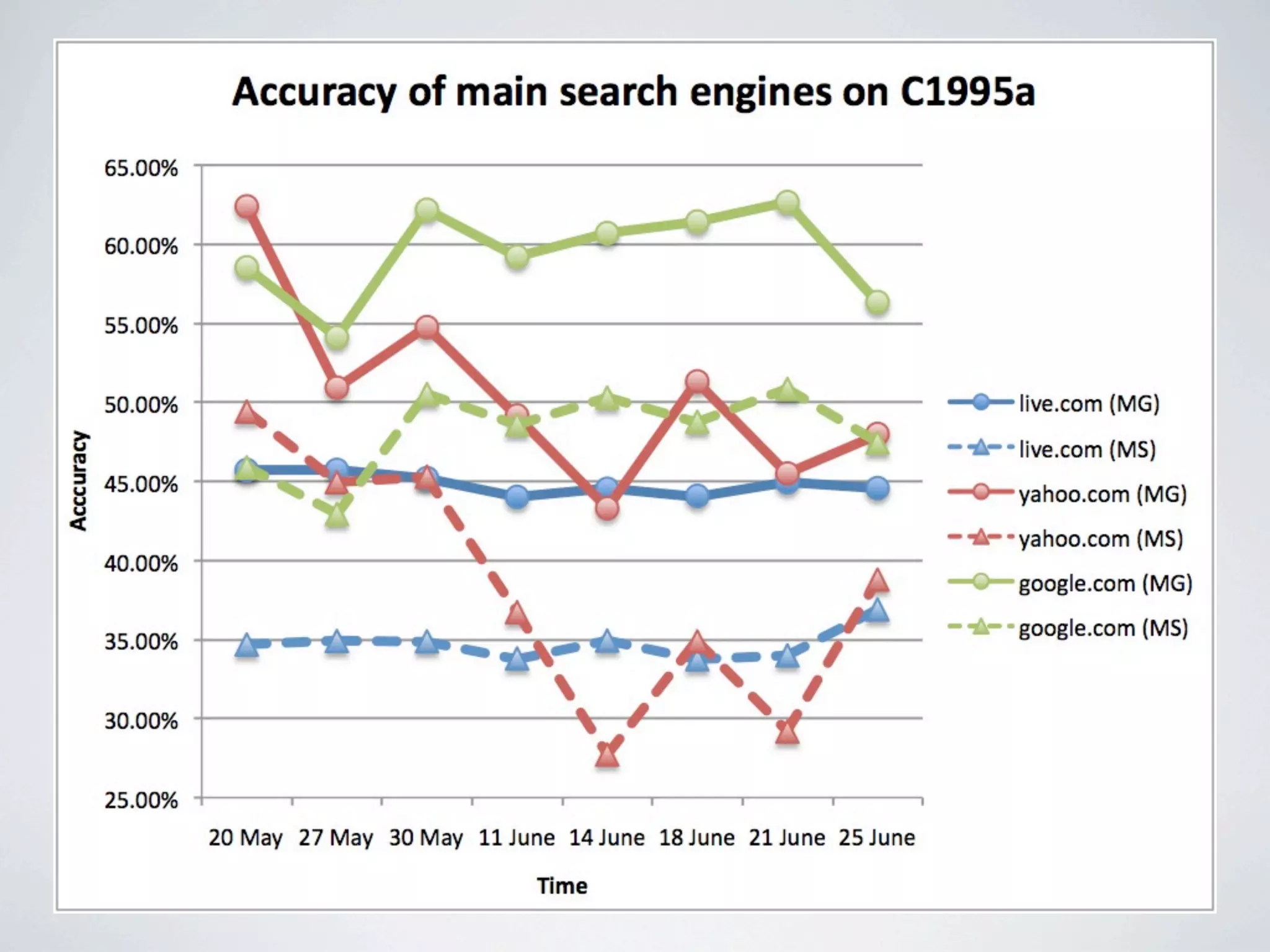

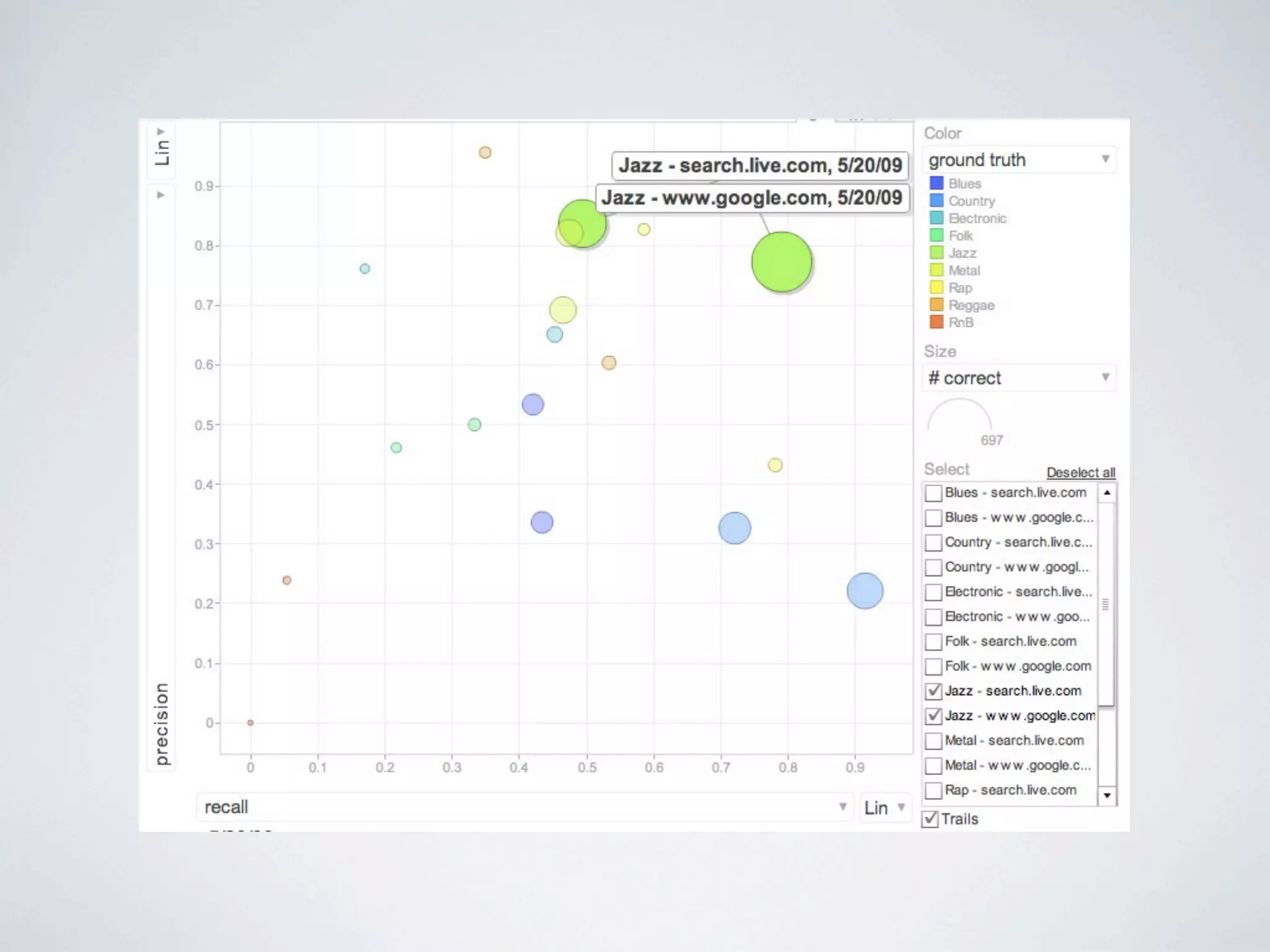

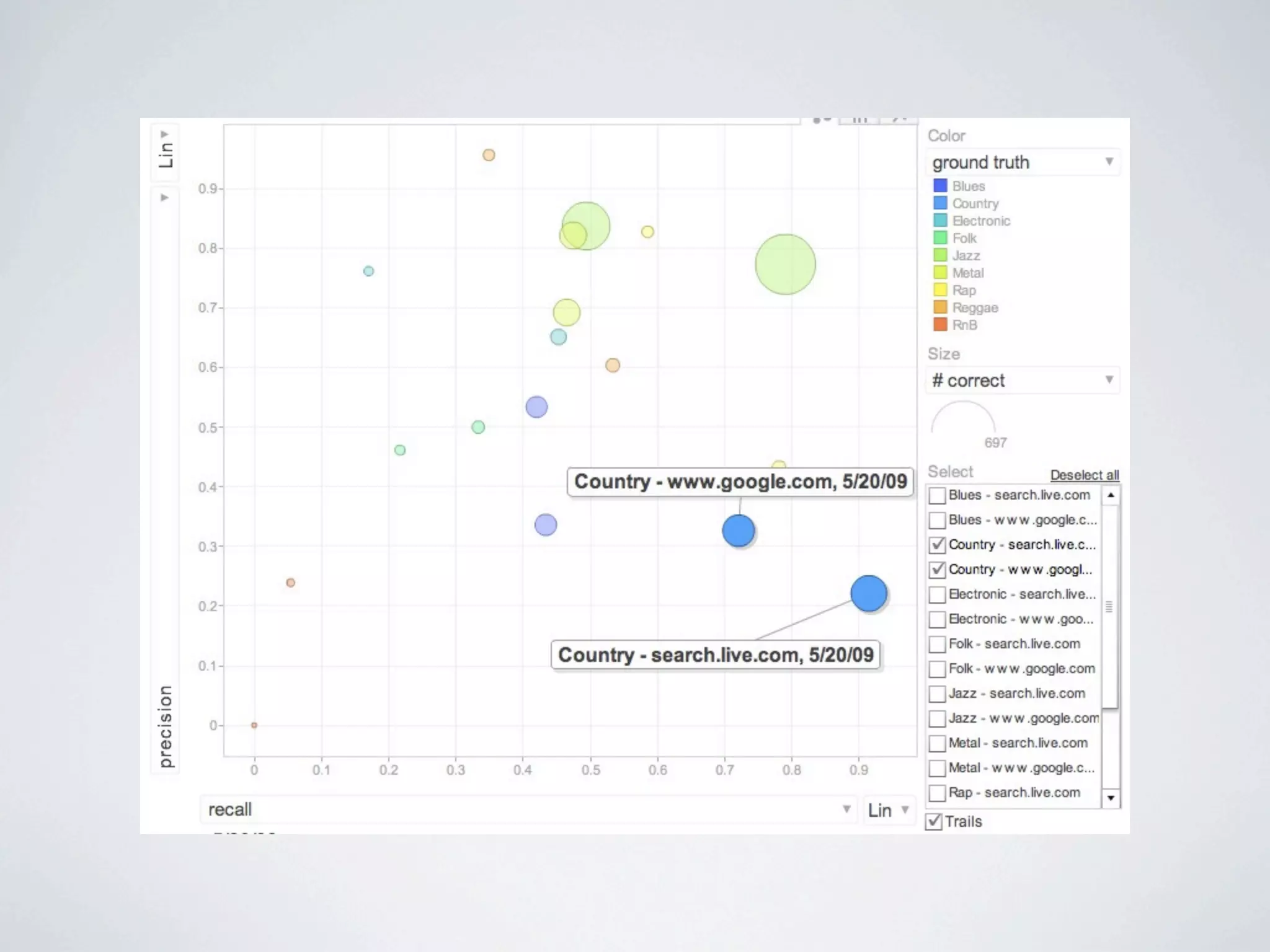

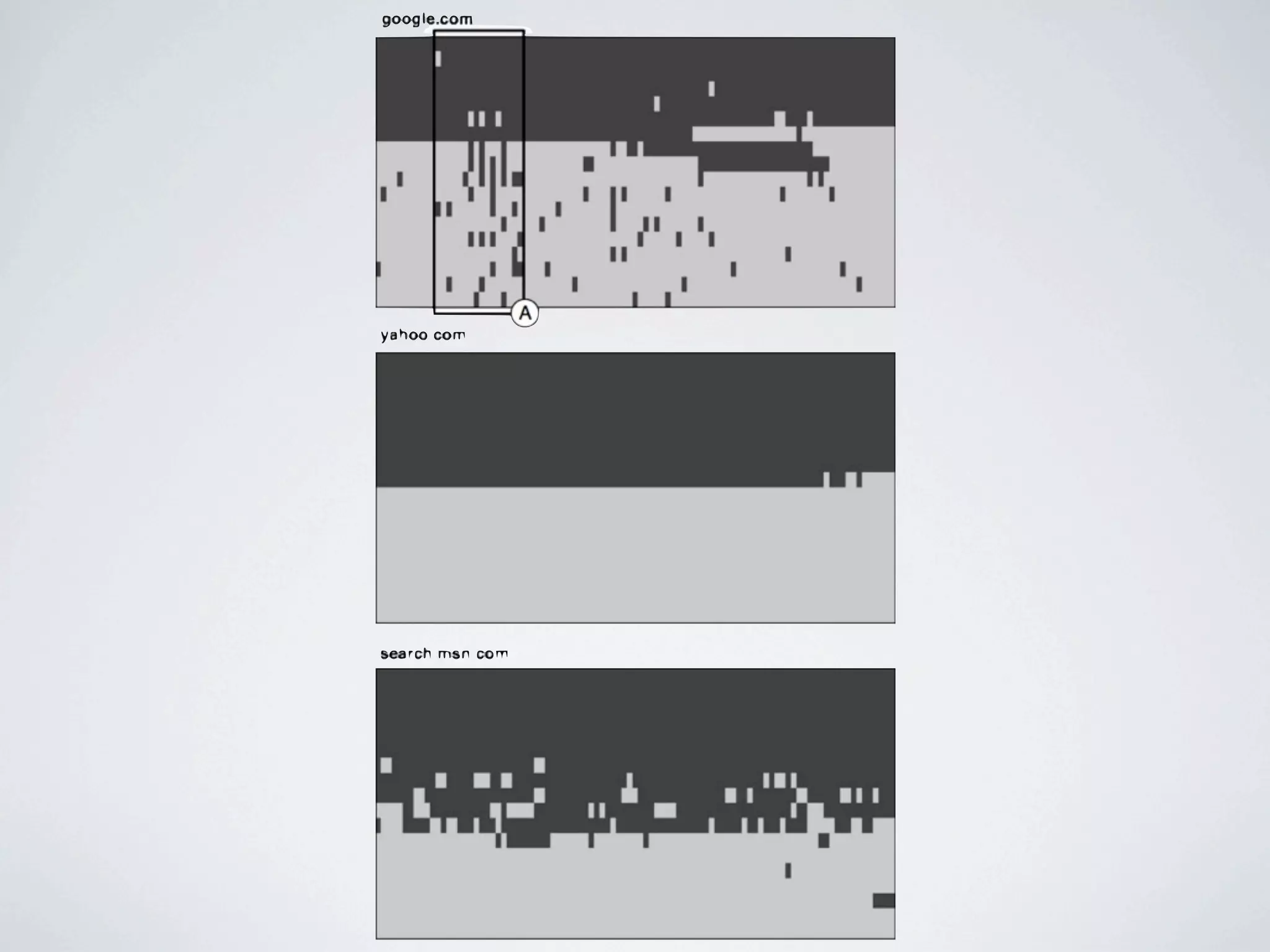

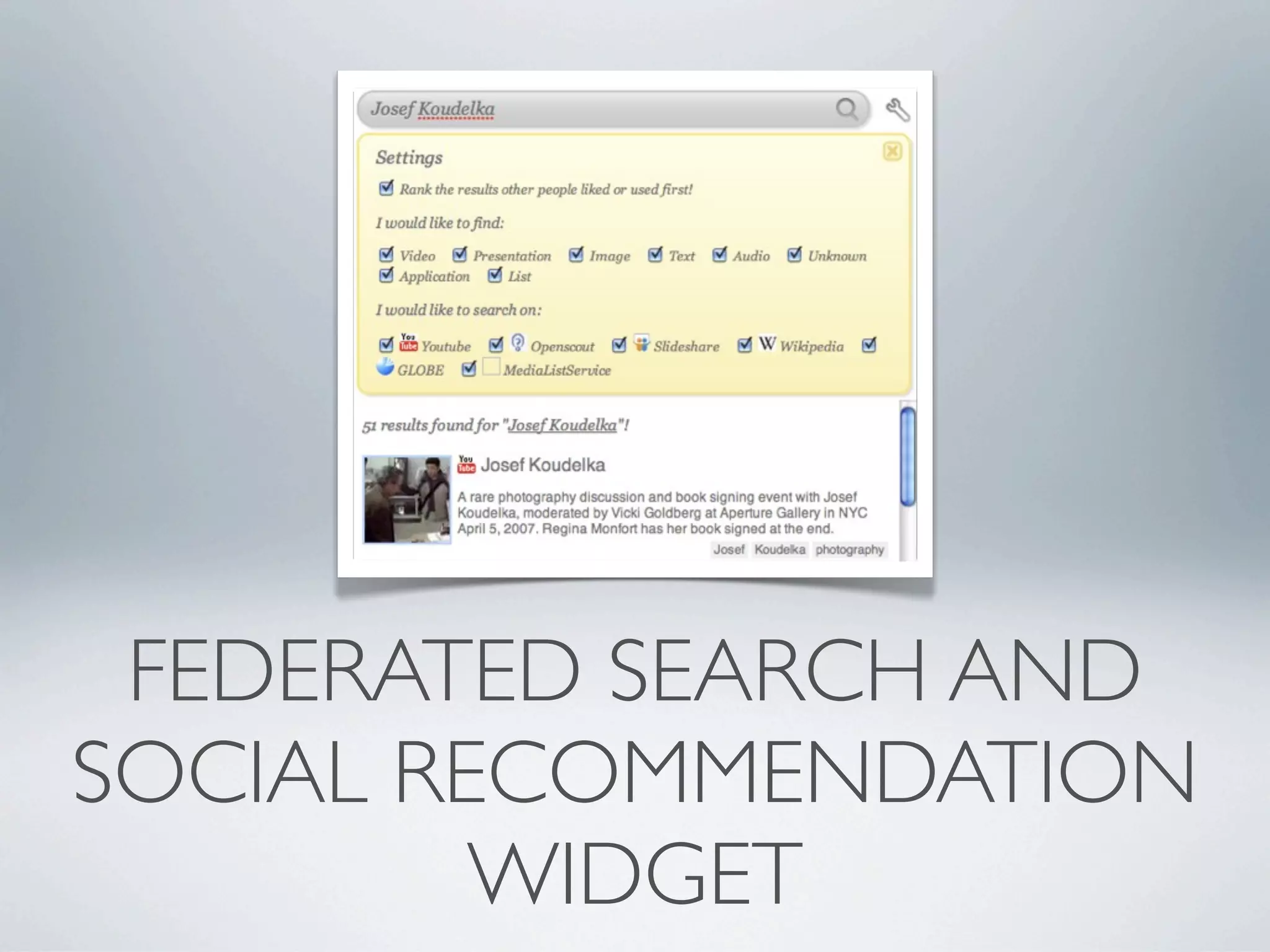

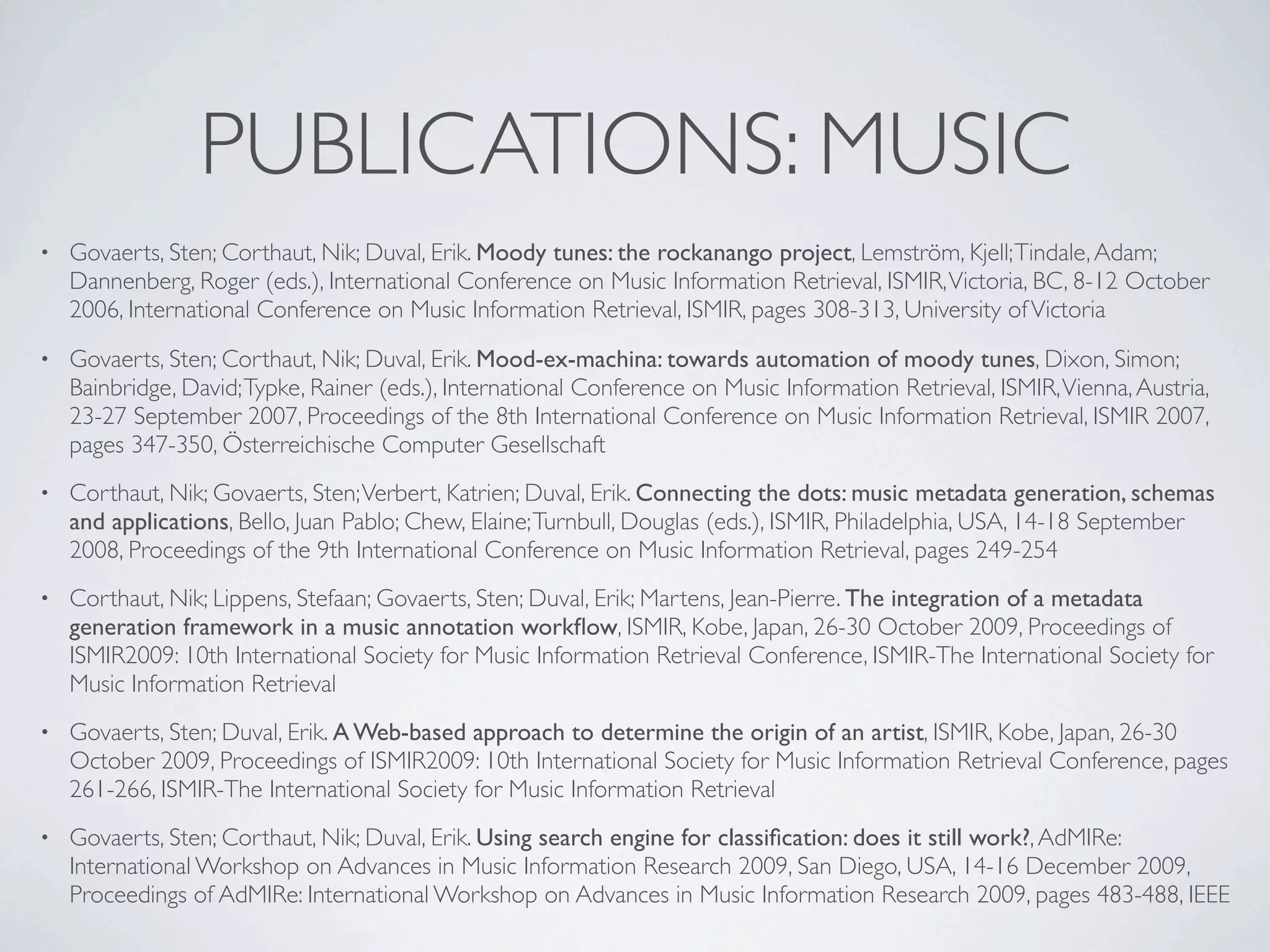

The document discusses how mashup technology can improve findability by combining data from multiple online sources into new results. It describes Rockanango, a project that takes musical metadata from expert sources and applies it in hospitality settings by developing musical contexts and schemas. Finally, it evaluates approaches to generating song metadata, using various sources and techniques to classify artists by origin and genre.
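
The mashup idea summarized above, combining records from several sources to derive a new result, can be illustrated with a small sketch. This is not the method used in Rockanango or in the evaluation; the source names, the sample records, and the majority-vote rule are all assumptions made purely for illustration.

```python
from collections import Counter
from typing import Dict, List, Optional

# Hypothetical sources: each maps an artist name to a partial metadata record.
# Names and values are invented for illustration, not taken from the slides.
SOURCES: Dict[str, Dict[str, Dict[str, str]]] = {
    "source_a": {"Example Artist": {"origin": "Belgium", "genre": "rock"}},
    "source_b": {"Example Artist": {"origin": "Belgium", "genre": "pop"}},
    "source_c": {"Example Artist": {"genre": "rock"}},
}


def majority(values: List[str]) -> Optional[str]:
    """Return the most frequent value, or None when no source supplied one."""
    if not values:
        return None
    return Counter(values).most_common(1)[0][0]


def combine(artist: str,
            sources: Dict[str, Dict[str, Dict[str, str]]] = SOURCES
            ) -> Dict[str, Optional[str]]:
    """Merge every record found for an artist, field by field.

    Assumption: a simple majority vote decides each field; a real system
    might weight sources by how reliable they are.
    """
    records = [src[artist] for src in sources.values() if artist in src]
    return {
        field: majority([r[field] for r in records if field in r])
        for field in ("origin", "genre")
    }


if __name__ == "__main__":
    print(combine("Example Artist"))
```

A vote across sources is only one way to reconcile conflicting metadata. It was chosen here because it tolerates records that are missing a field, as the third hypothetical source is.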