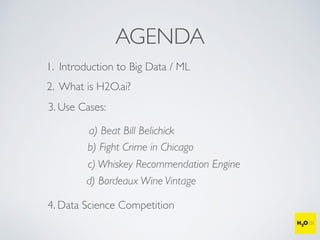

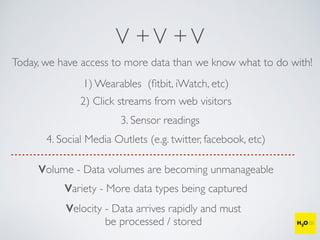

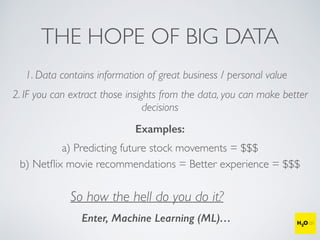

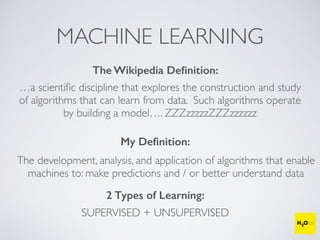

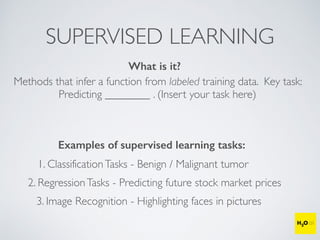

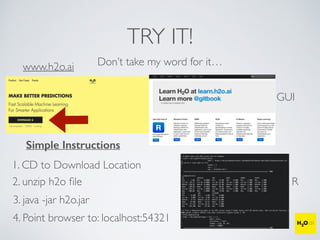

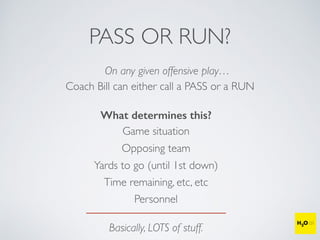

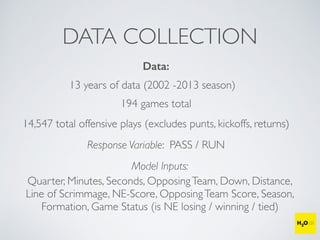

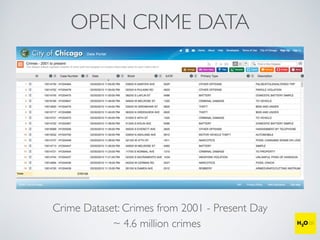

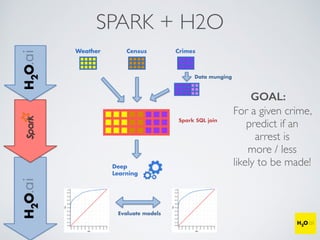

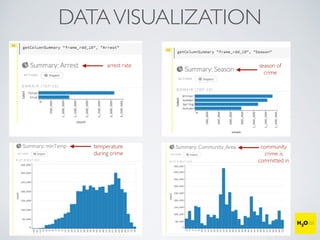

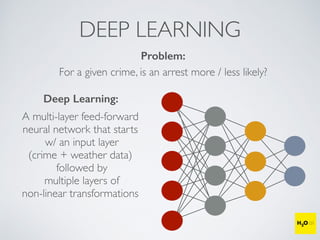

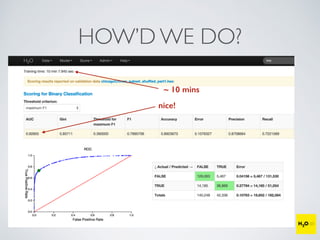

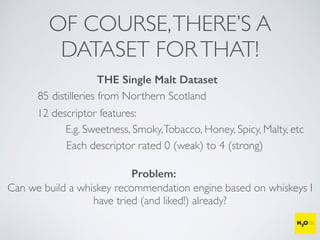

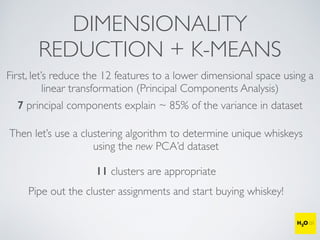

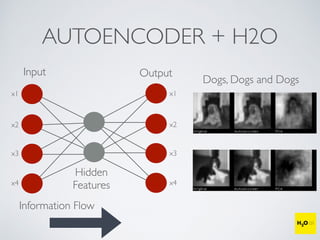

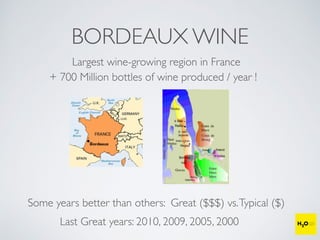

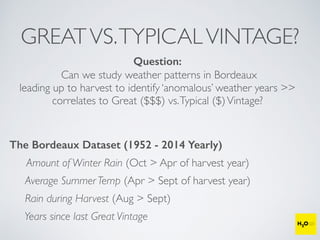

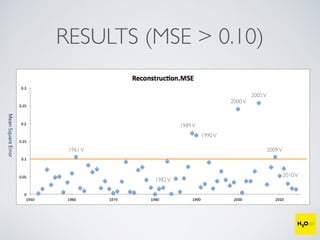

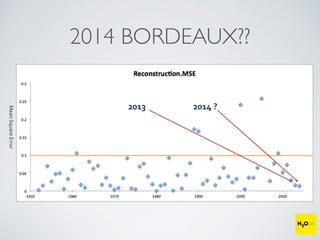

The document discusses applications of machine learning, particularly on the H2O.ai platform, and emphasizes the challenges and opportunities that big data presents. It covers use cases such as predicting football plays, analyzing crime in Chicago, recommending whiskeys, and identifying exceptional Bordeaux wine vintages, along with data science competitions. It also introduces core machine learning concepts, including supervised and unsupervised learning, and describes H2O's capabilities and techniques for processing large datasets.