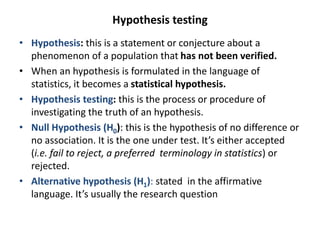

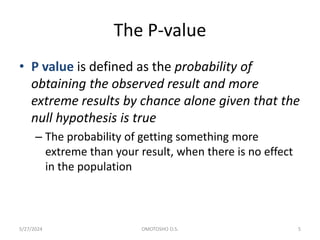

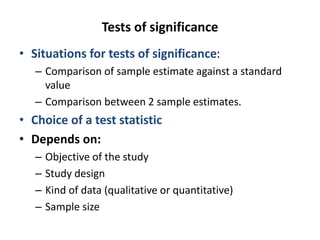

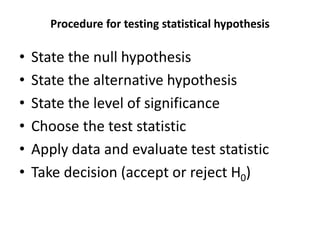

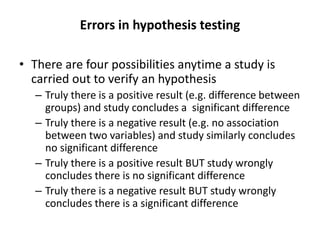

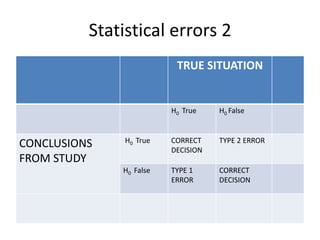

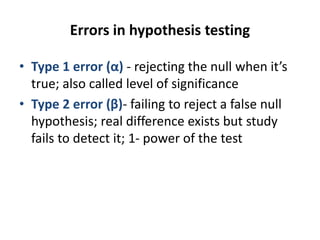

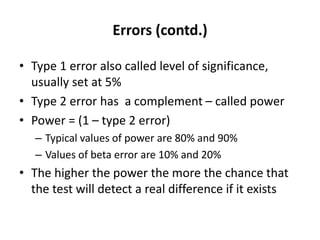

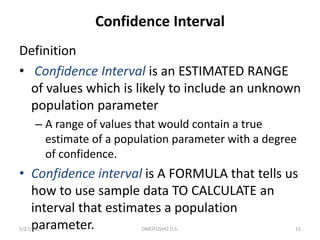

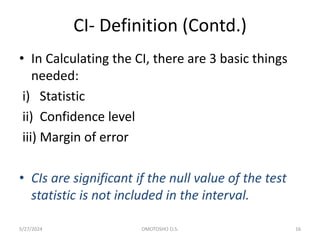

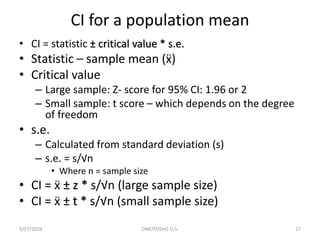

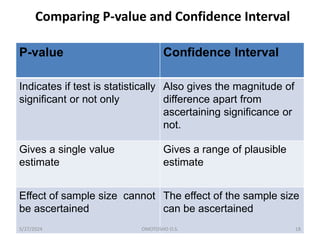

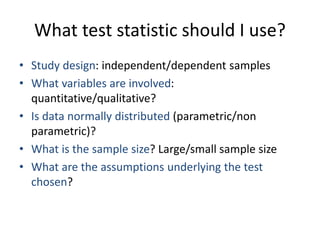

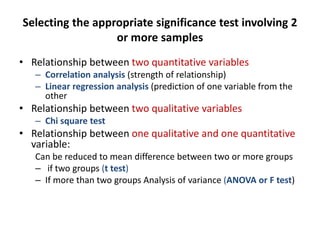

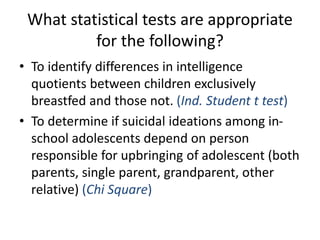

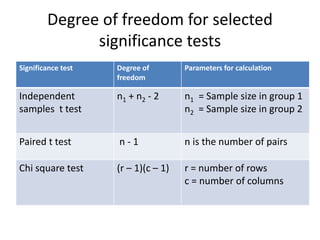

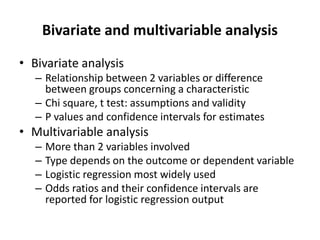

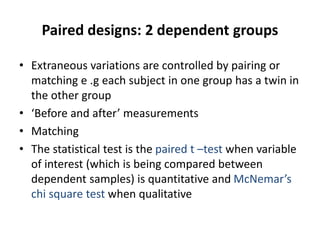

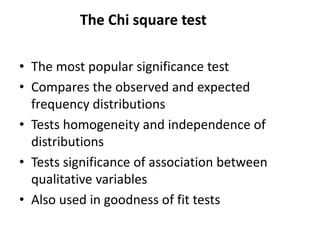

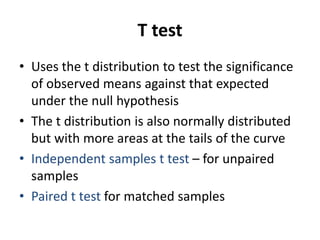

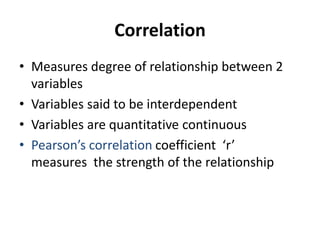

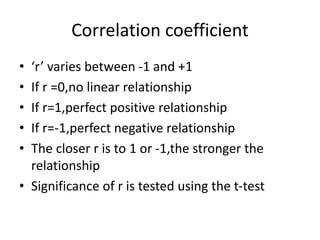

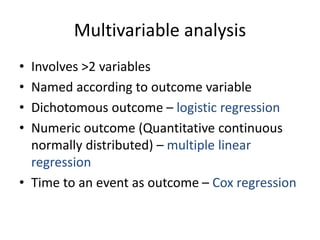

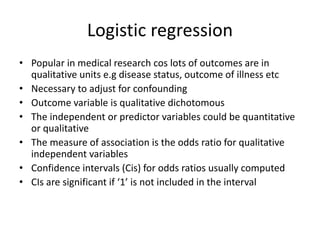

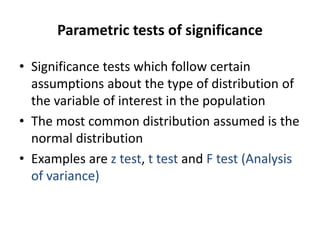

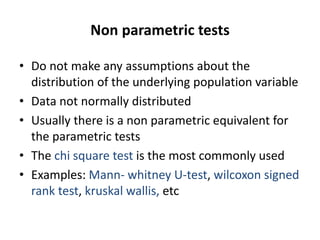

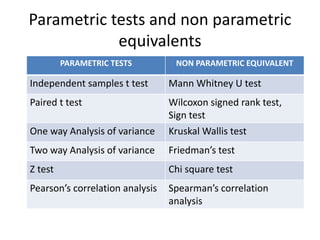

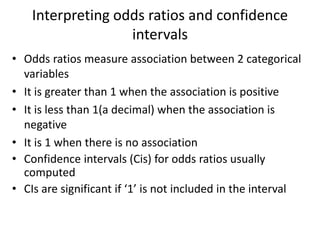

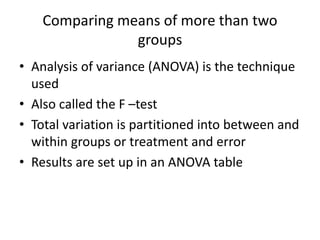

The document provides an overview of inferential statistics, including hypothesis testing, the significance of the p-value, and the concept of confidence intervals. It outlines tests of significance, types of errors in hypothesis testing, statistical methods of analysis, and the interpretation of results. Furthermore, it discusses various statistical tests, including t-tests, chi-square tests, and regression analysis, emphasizing their application in research.