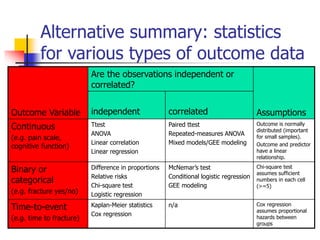

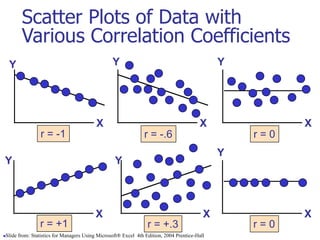

This document discusses linear correlation and linear regression. It defines linear correlation as showing the linear relationship between two continuous variables, while linear regression is a multivariate technique used when the outcome is continuous that provides slopes. Linear regression assumes a linear relationship between an independent and dependent variable, normally distributed dependent variable values, equal variances, and independence of observations. It estimates a slope and intercept through least squares estimation to minimize the squared distances between observed and predicted dependent variable values. The significance of the estimated slope can be tested using a t-test.

![Recall example: cognitive

function and vitamin D

Hypothetical data loosely based on [1];

cross-sectional study of 100 middle-

aged and older European men.

Cognitive function is measured by the Digit

Symbol Substitution Test (DSST).

1. Lee DM, Tajar A, Ulubaev A, et al. Association between 25-hydroxyvitamin D levels and cognitive performance in middle-aged

and older European men. J Neurol Neurosurg Psychiatry. 2009 Jul;80(7):722-9.](https://image.slidesharecdn.com/slidesetslr-240313133804-e00119ea/85/Slideset-Simple-Linear-Regression-models-ppt-24-320.jpg)