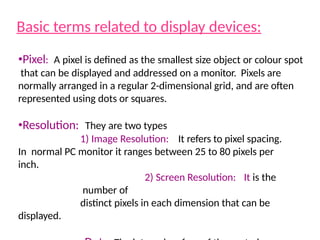

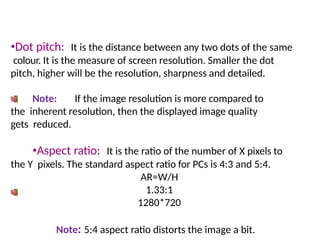

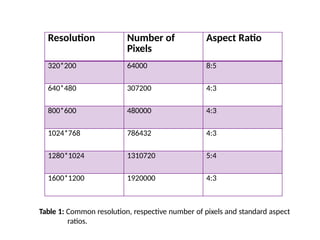

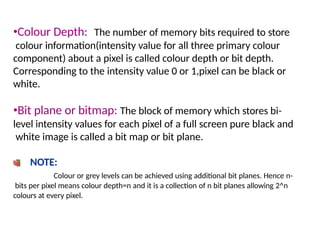

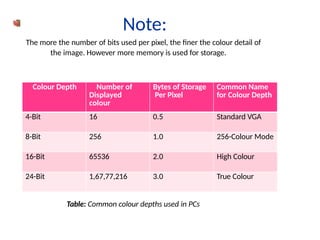

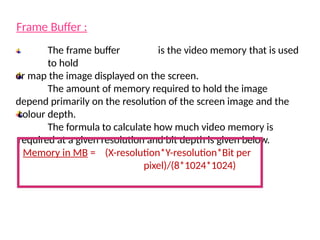

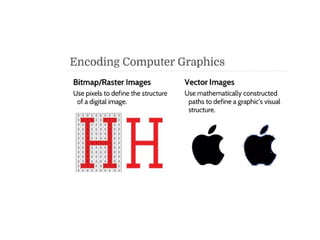

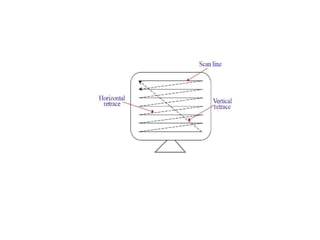

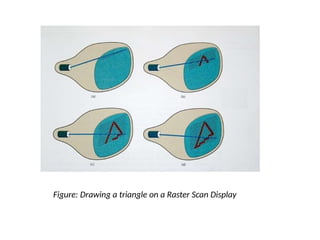

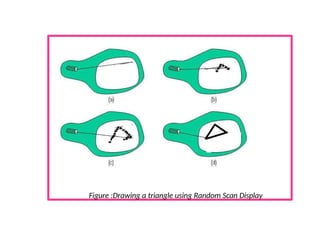

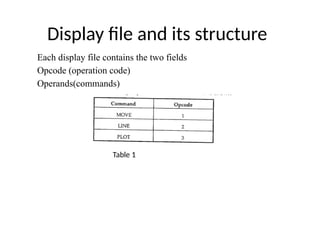

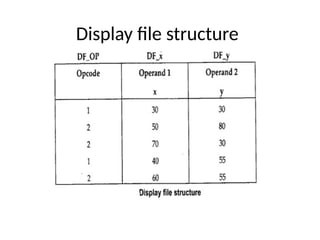

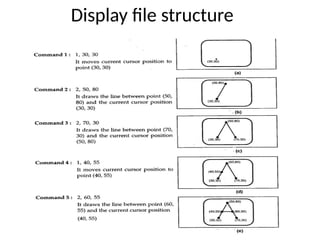

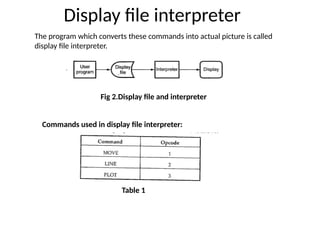

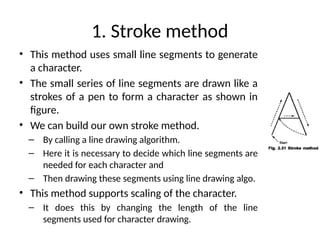

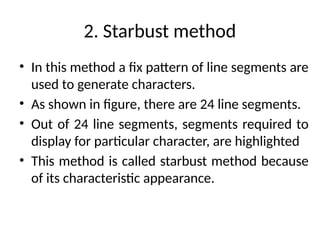

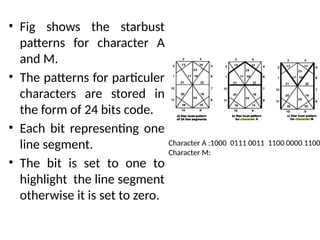

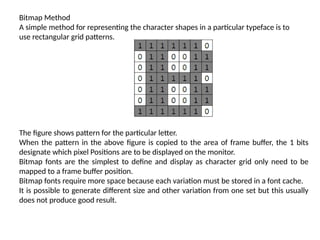

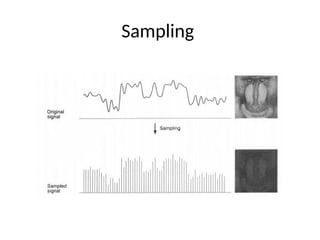

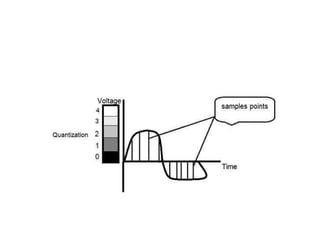

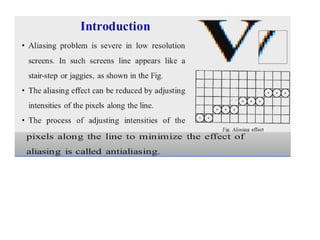

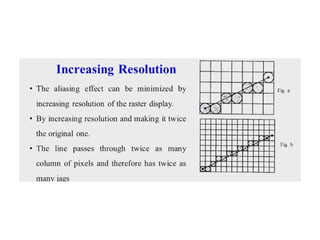

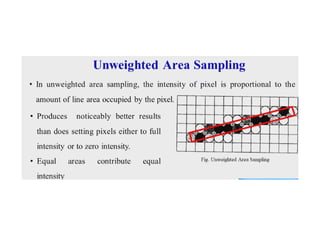

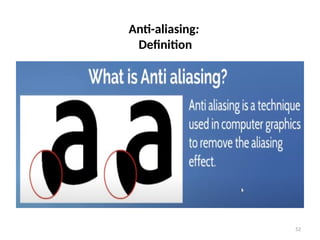

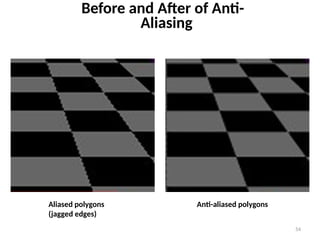

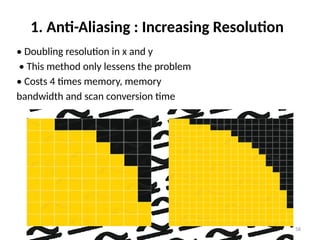

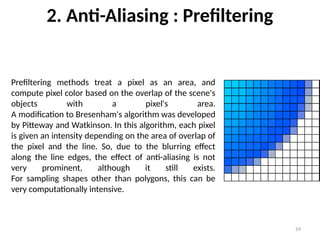

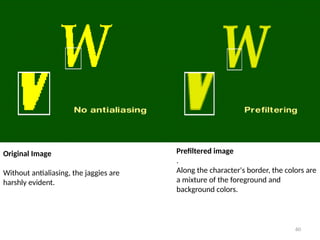

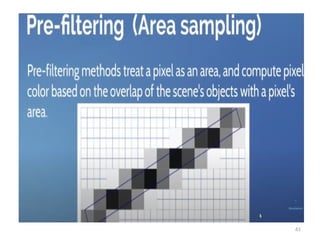

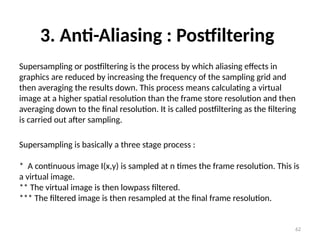

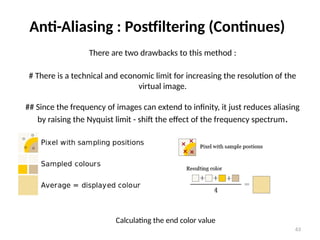

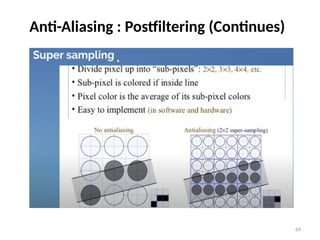

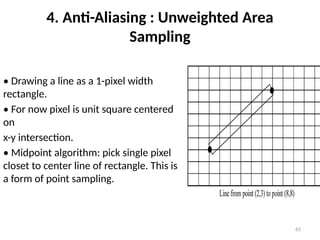

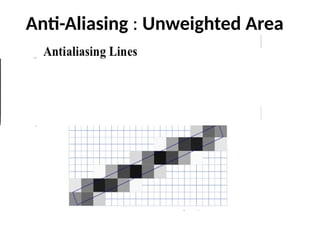

The document covers essential concepts related to display devices, including pixels, resolution, aspect ratio, color depth, and display systems. It explains the differences between raster-scan and random-scan displays, describes character-generation methods, and presents anti-aliasing techniques used to improve image quality. Key terms are defined and illustrated, with tables and formulas supporting the explanations of display technology.
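The resolution, aspect-ratio, and color-depth relationships mentioned above can be sketched in a few lines. This is a minimal illustration that assumes a packed frame buffer with no row padding or alignment. The function names are hypothetical and are not taken from the slides.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce a resolution (width x height) to its simplest width:height ratio."""
    g = gcd(width, height)
    return width // g, height // g


def num_colors(bits_per_pixel: int) -> int:
    """A color depth of n bits per pixel can represent 2**n distinct colors."""
    return 2 ** bits_per_pixel


def frame_buffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Memory needed to store one full frame, assuming packed pixels.

    Total bits = width * height * bits_per_pixel; the result is rounded
    up to whole bytes in case the bit count is not a multiple of 8.
    """
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8


if __name__ == "__main__":
    w, h, bpp = 1920, 1080, 24
    print("Aspect ratio:", aspect_ratio(w, h))        # (16, 9)
    print("Colors:", num_colors(bpp))                 # 16777216
    print("Frame buffer bytes:", frame_buffer_bytes(w, h, bpp))  # 6220800
```

For a 1920 × 1080 display at 24 bits per pixel, this gives a 16:9 aspect ratio, about 16.7 million colors, and 6,220,800 bytes (roughly 5.9 MiB) per frame.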