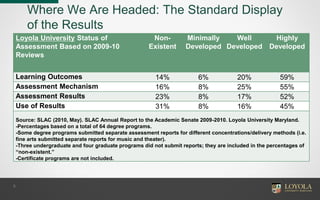

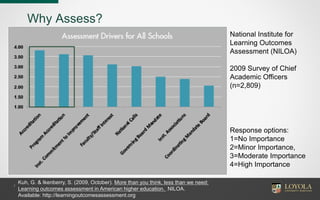

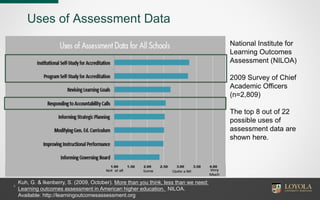

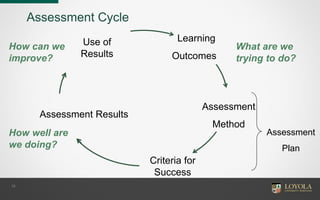

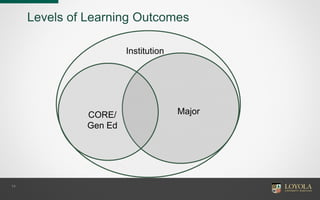

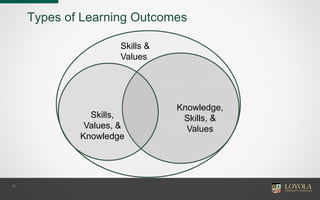

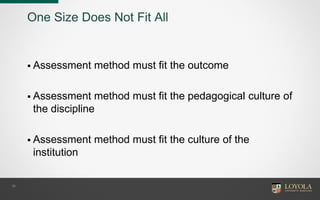

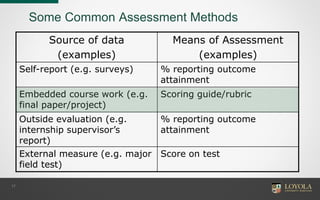

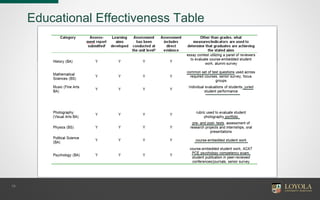

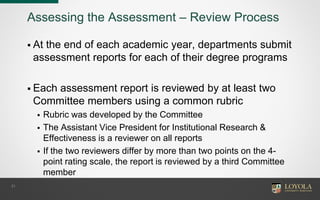

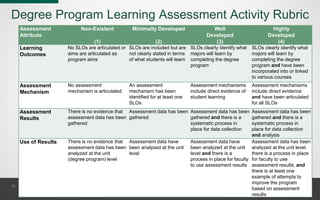

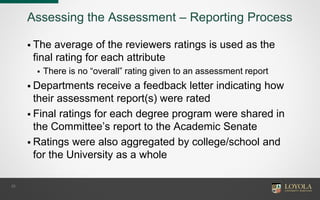

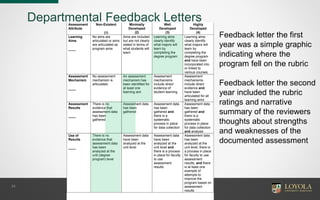

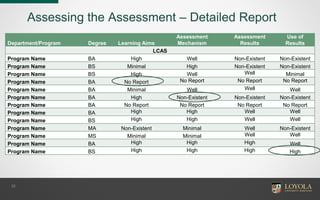

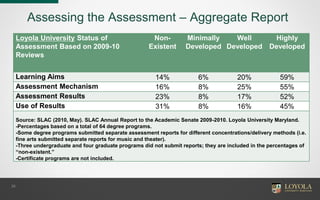

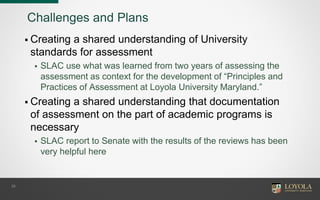

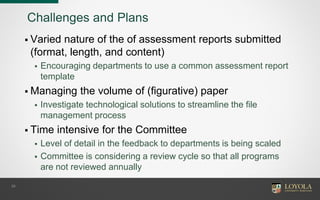

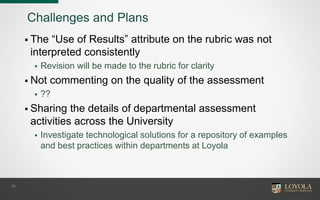

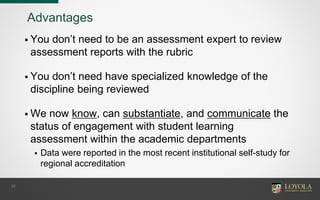

The document describes Loyola University Maryland's process for evaluating the assessment of student learning at the program level. It discusses the university's institutional context, its student learning assessment committee, the assessment reporting process, and the rubric used to rate program-level assessment reports. Programs receive feedback on how their reports were rated so that they can continuously improve their assessment of student learning.