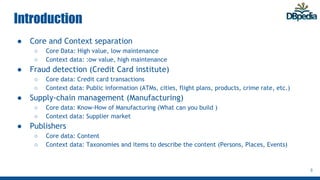

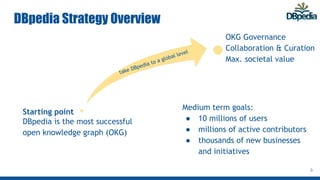

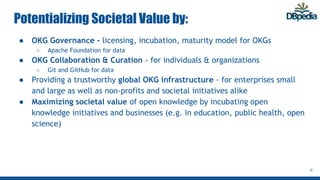

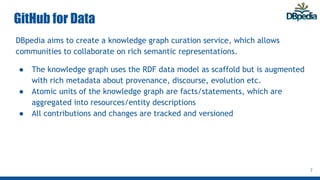

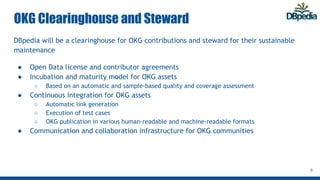

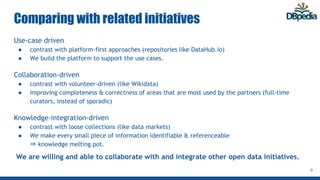

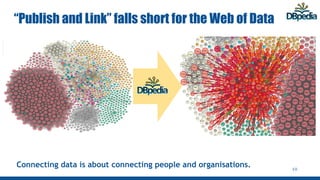

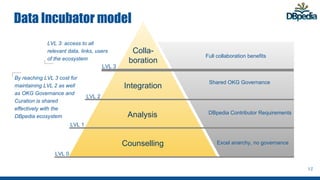

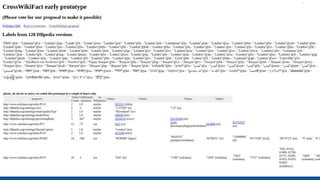

This document outlines DBpedia's strategy to become a global open knowledge graph by facilitating collaboration on data. It discusses establishing governance and curation processes that improve data quality and let organizations incubate their own knowledge graphs. The goal is to have millions of users and contributors collaborating on data through services that work like a "GitHub for data". Technologies such as identifiers, schema mapping, and test-driven development help integrate the data. The vision is for DBpedia to connect many decentralized data sources so that data becomes freely available and easier to work with.