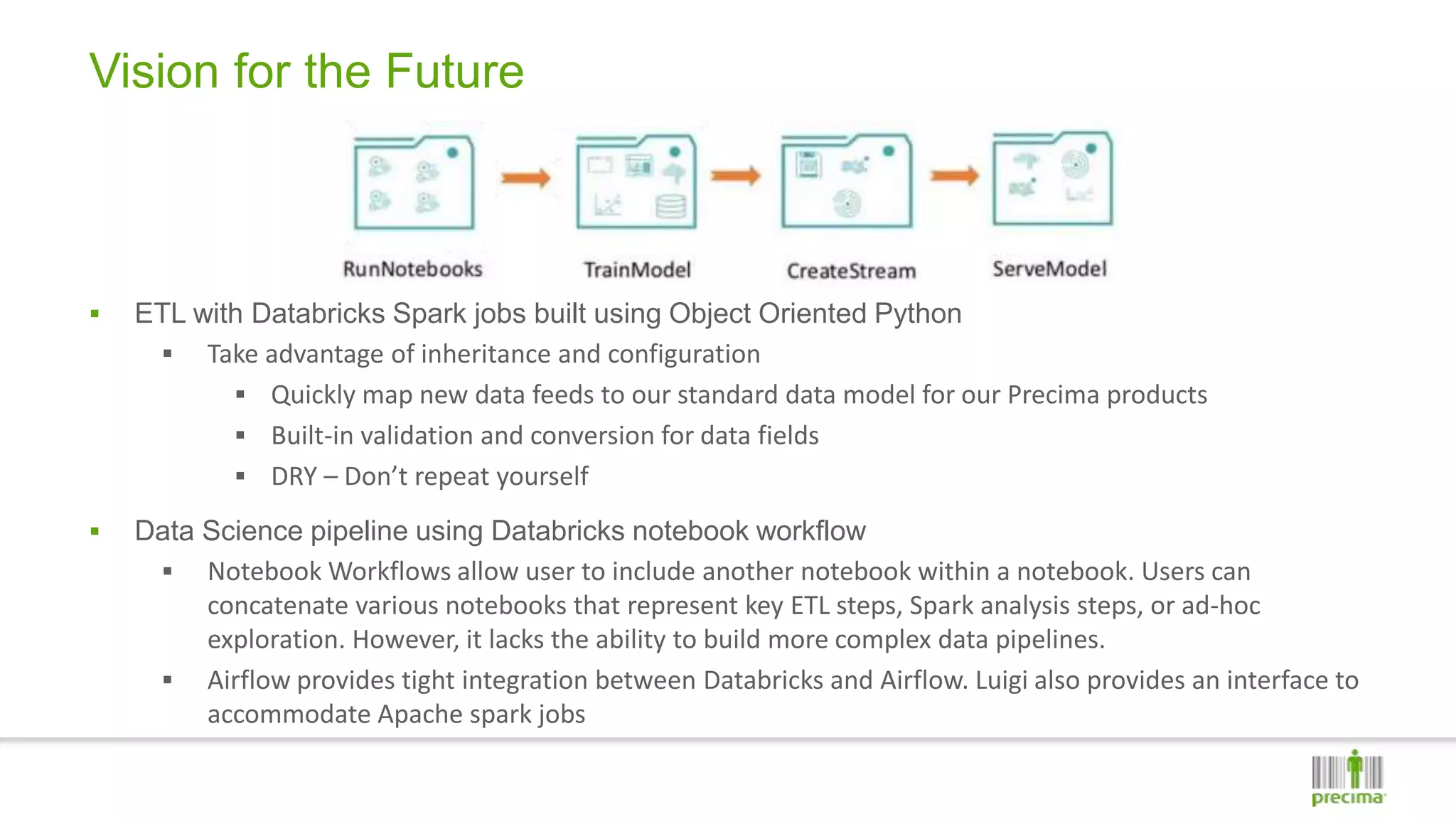

The document discusses Precima's analytics processes and data pipeline. It describes the move from on-premise tooling such as SAS and shell scripts to AWS services such as S3 and Redshift, with workflows orchestrated by Control-M and Luigi. It outlines considerations for pipeline design and reviews both the past and current systems. The future vision uses Databricks for data pipelines and Snowflake for queries, decoupling compute from storage so that each can scale independently.