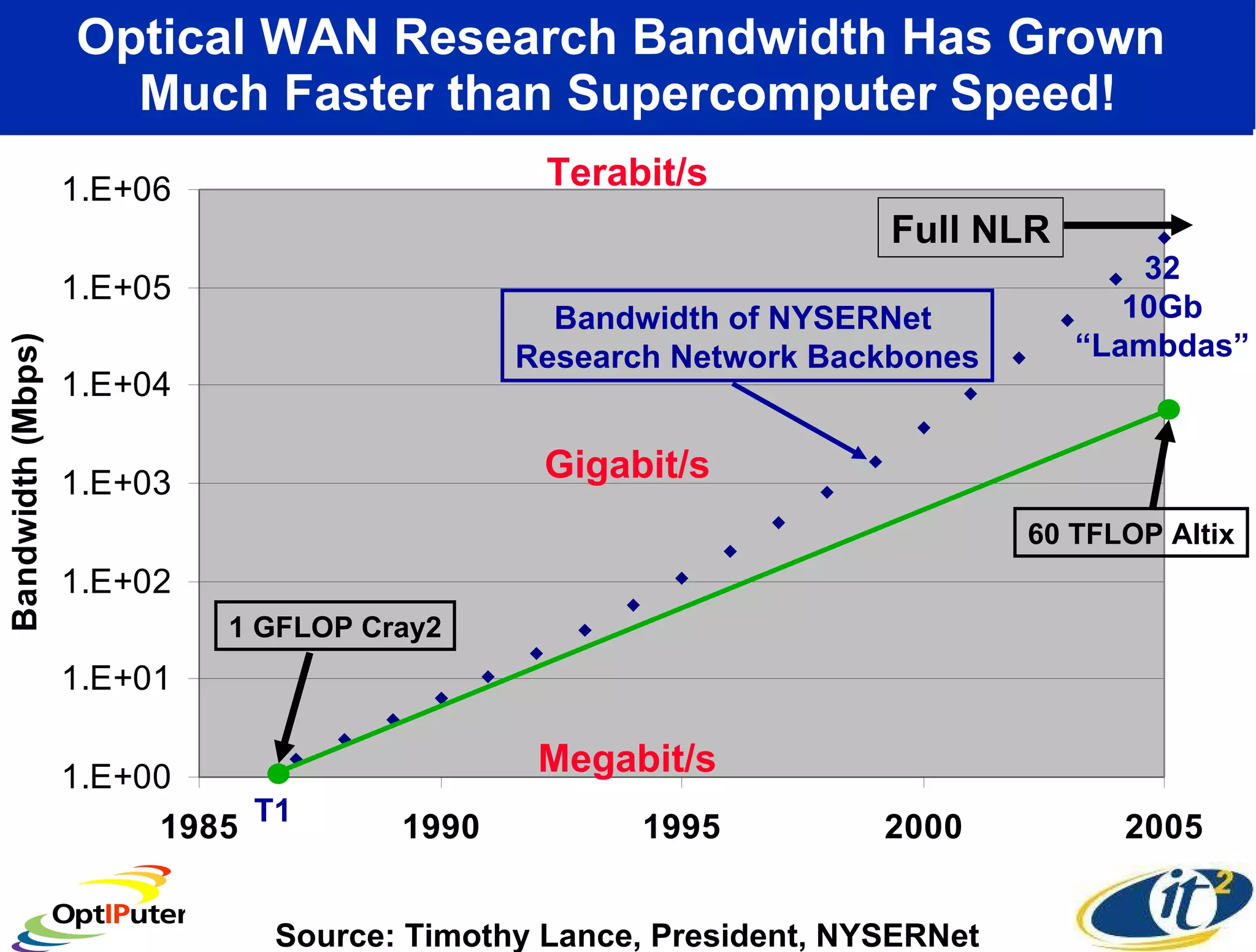

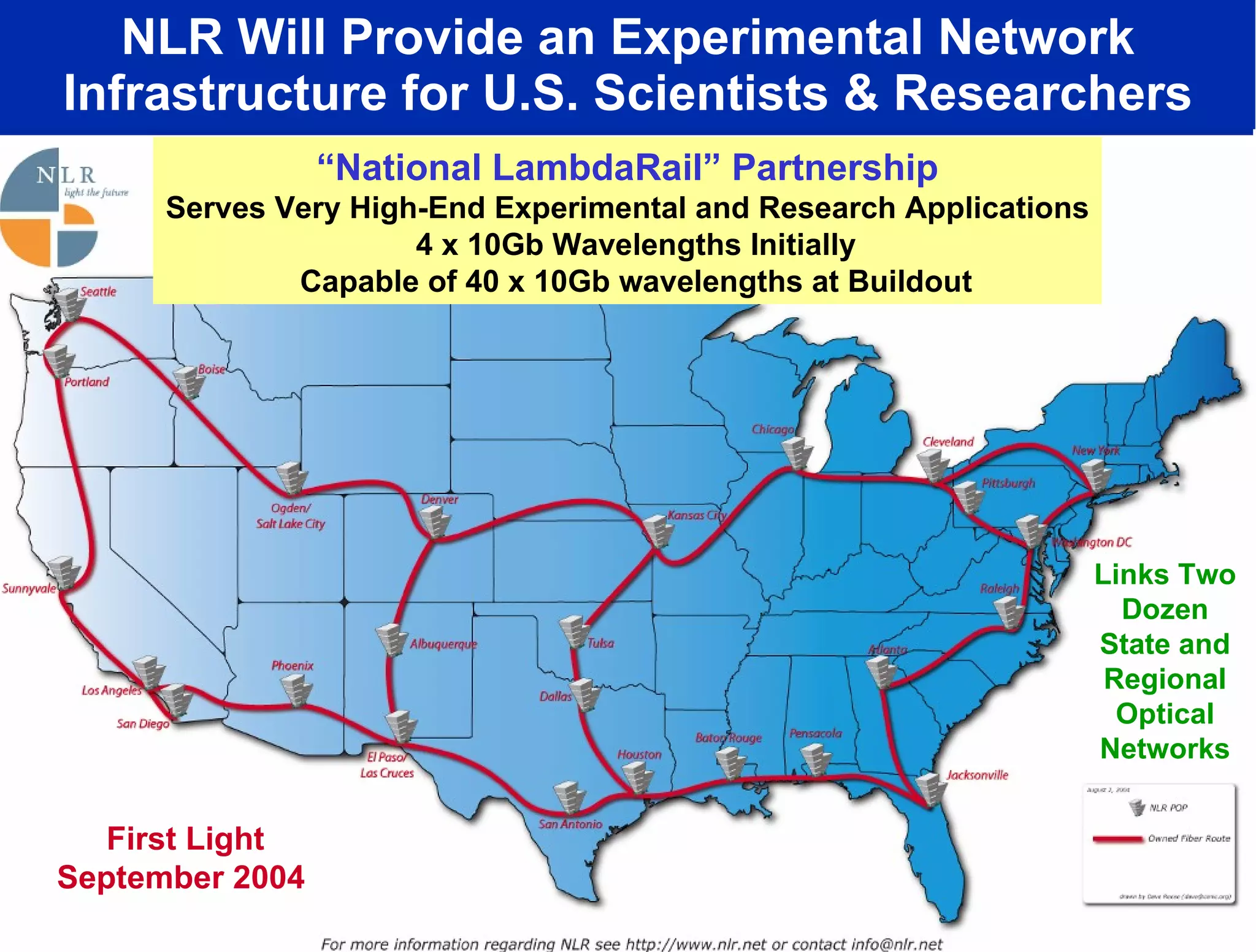

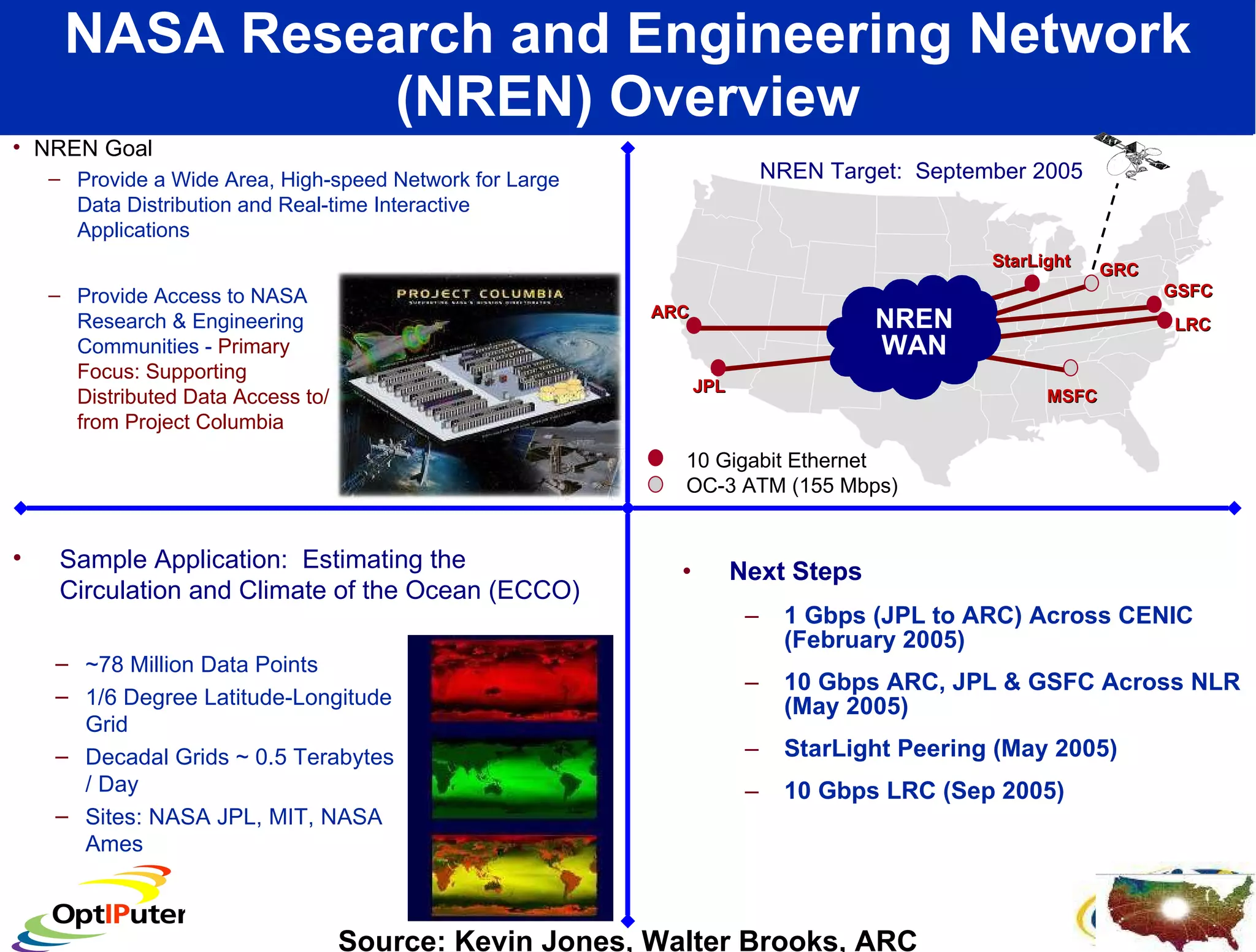

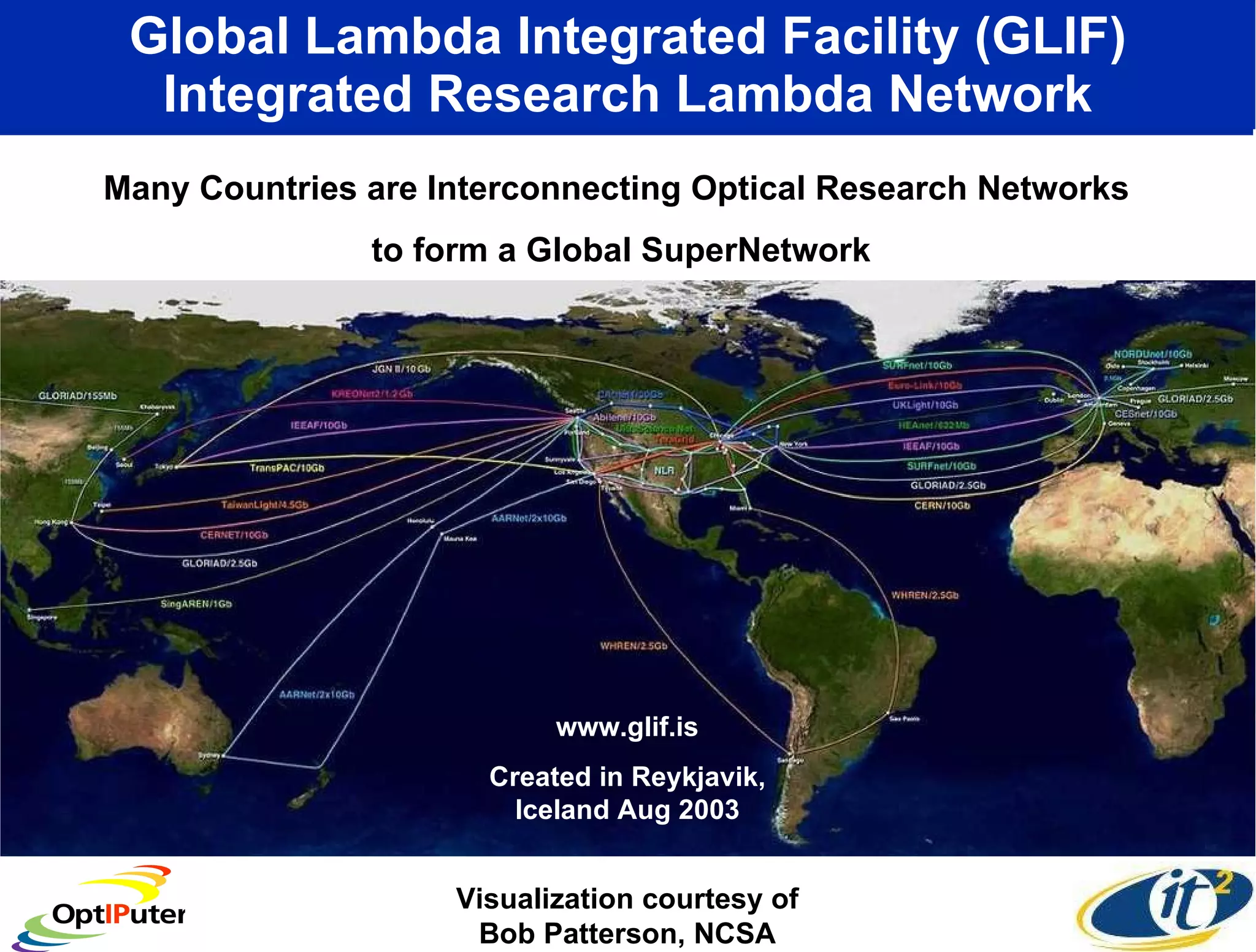

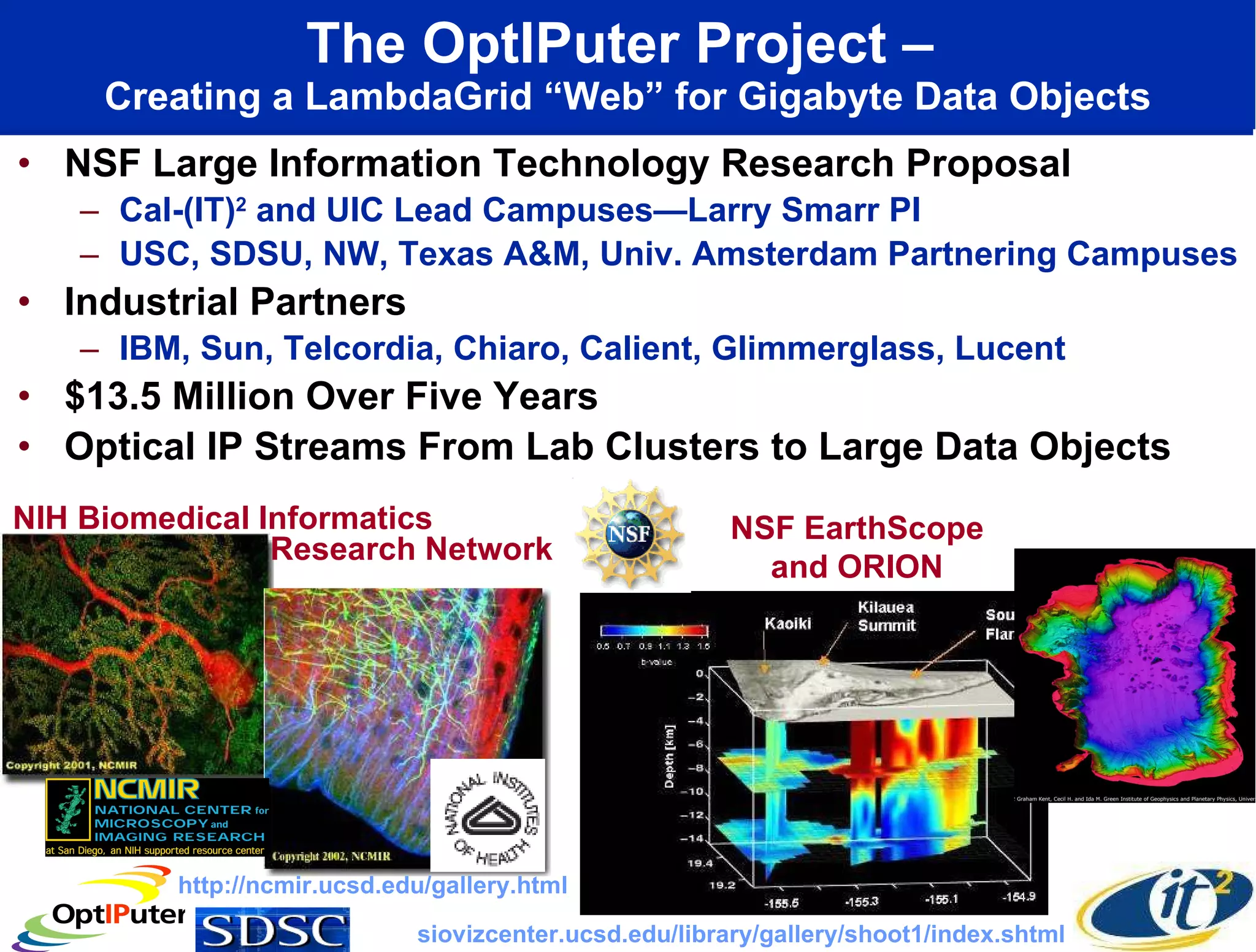

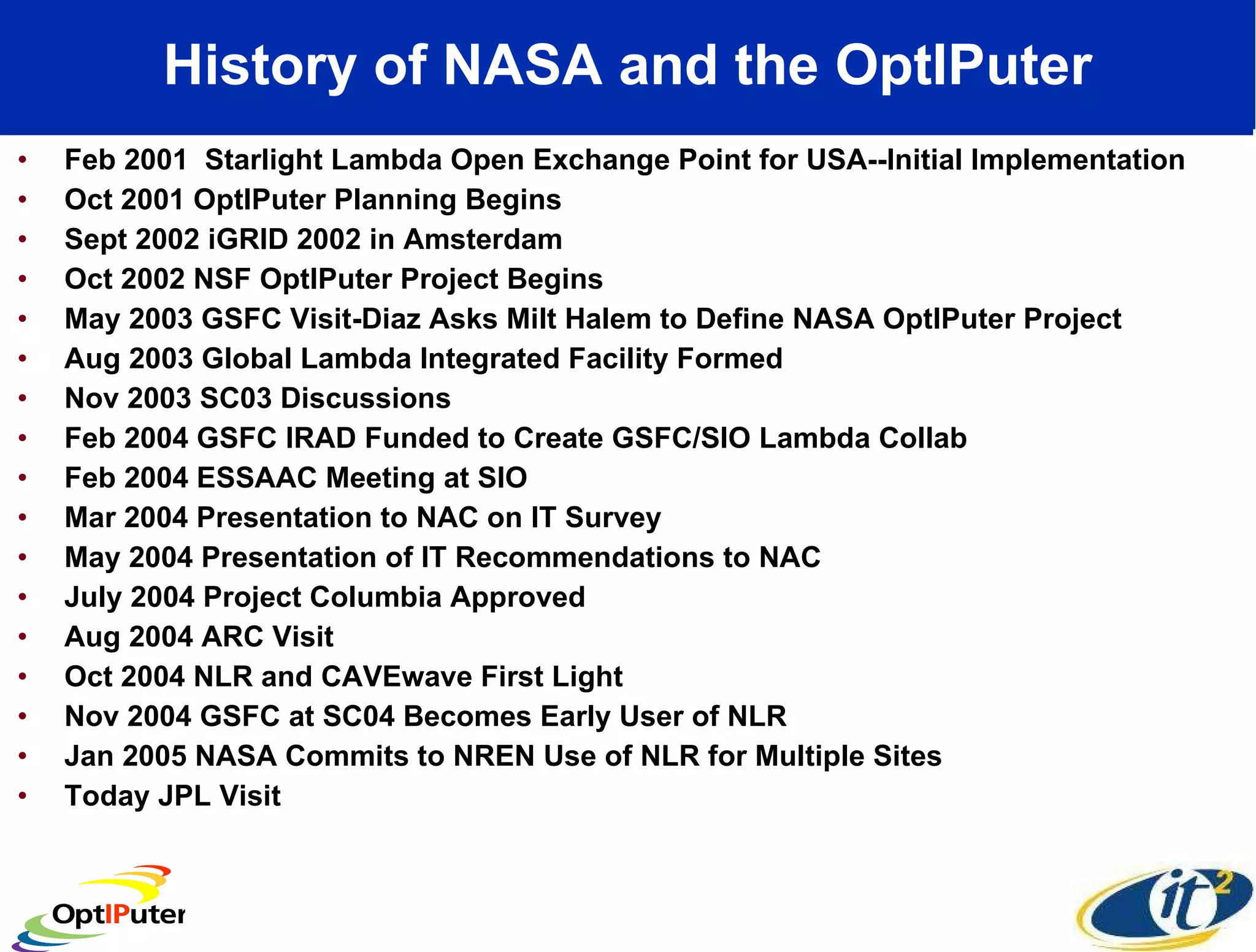

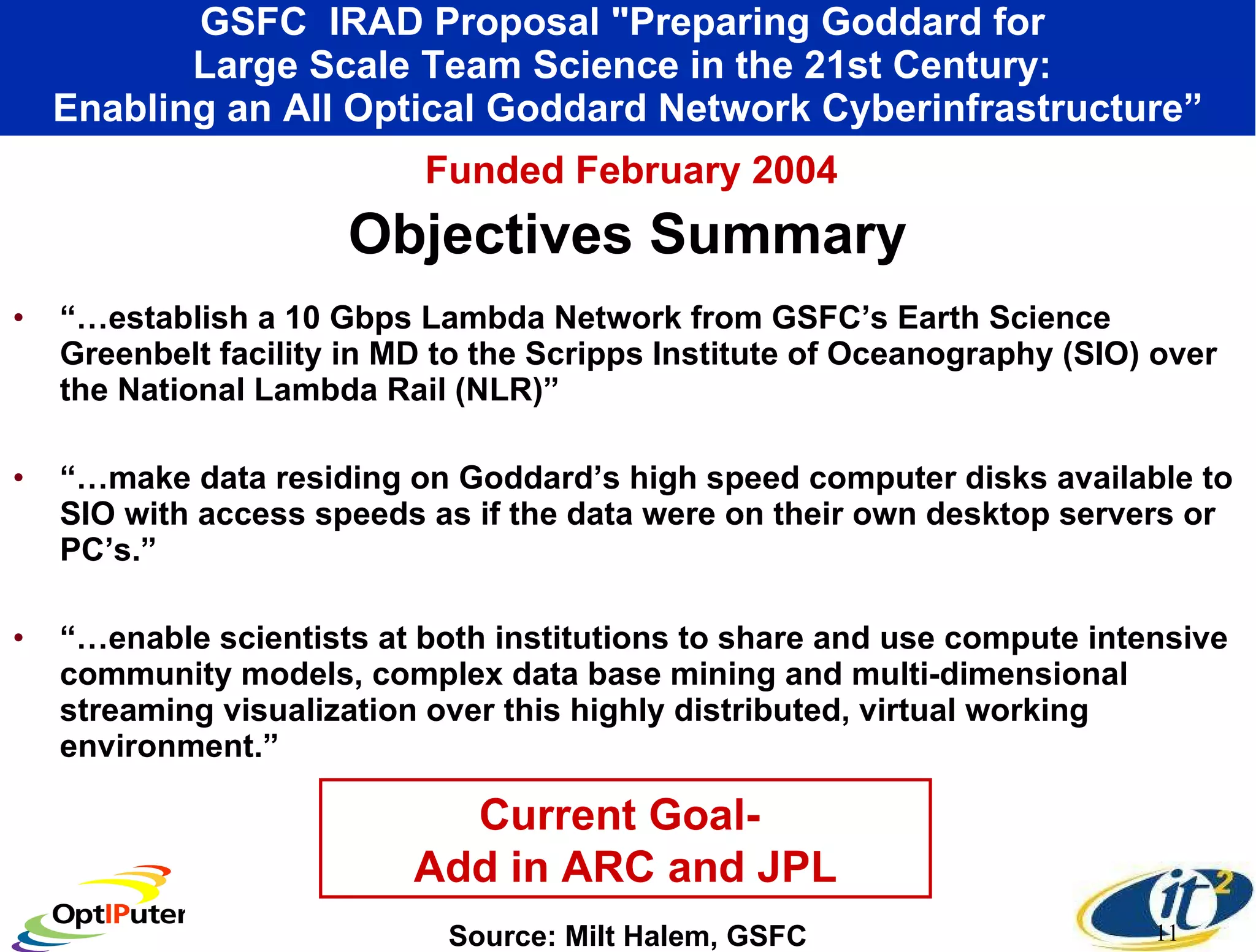

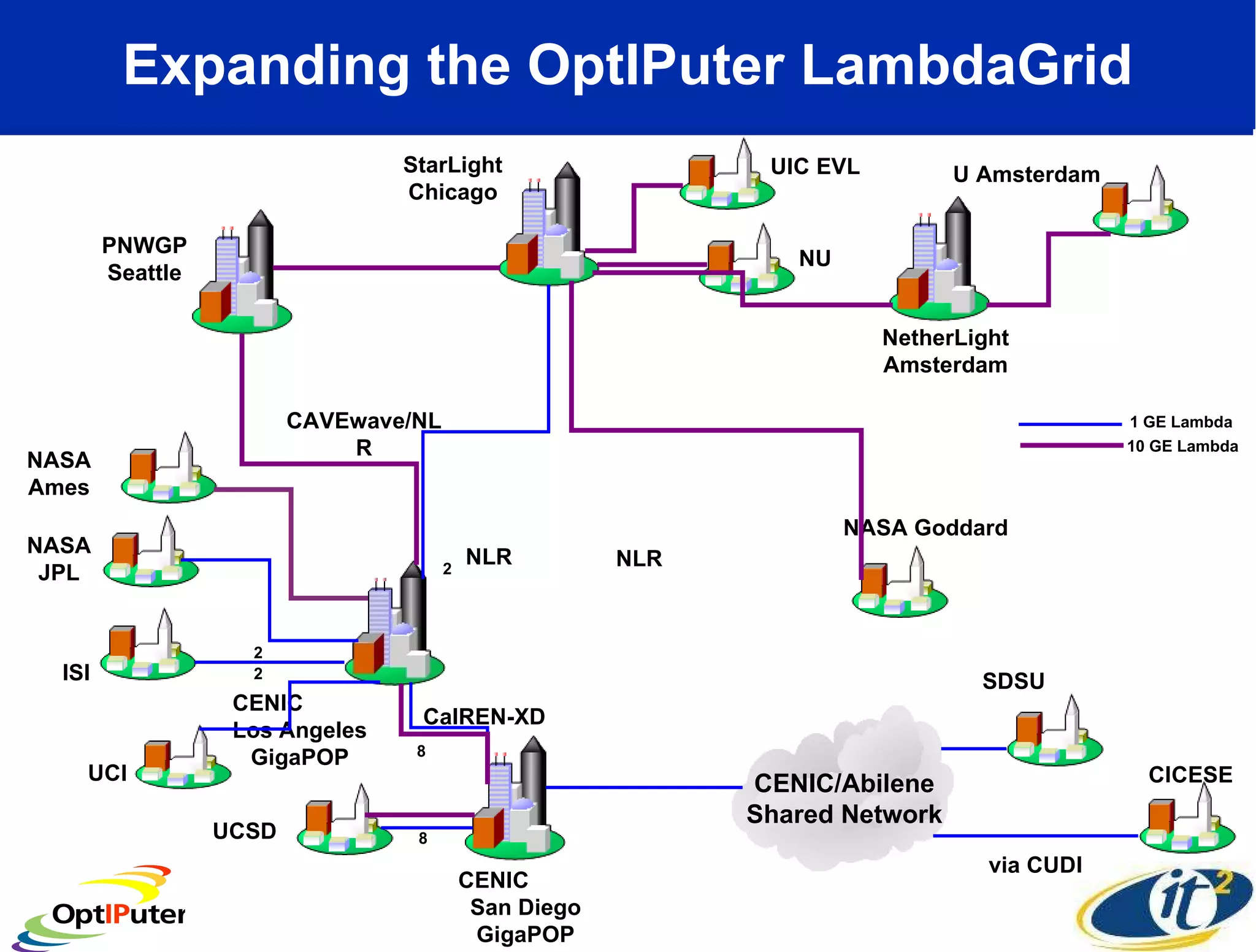

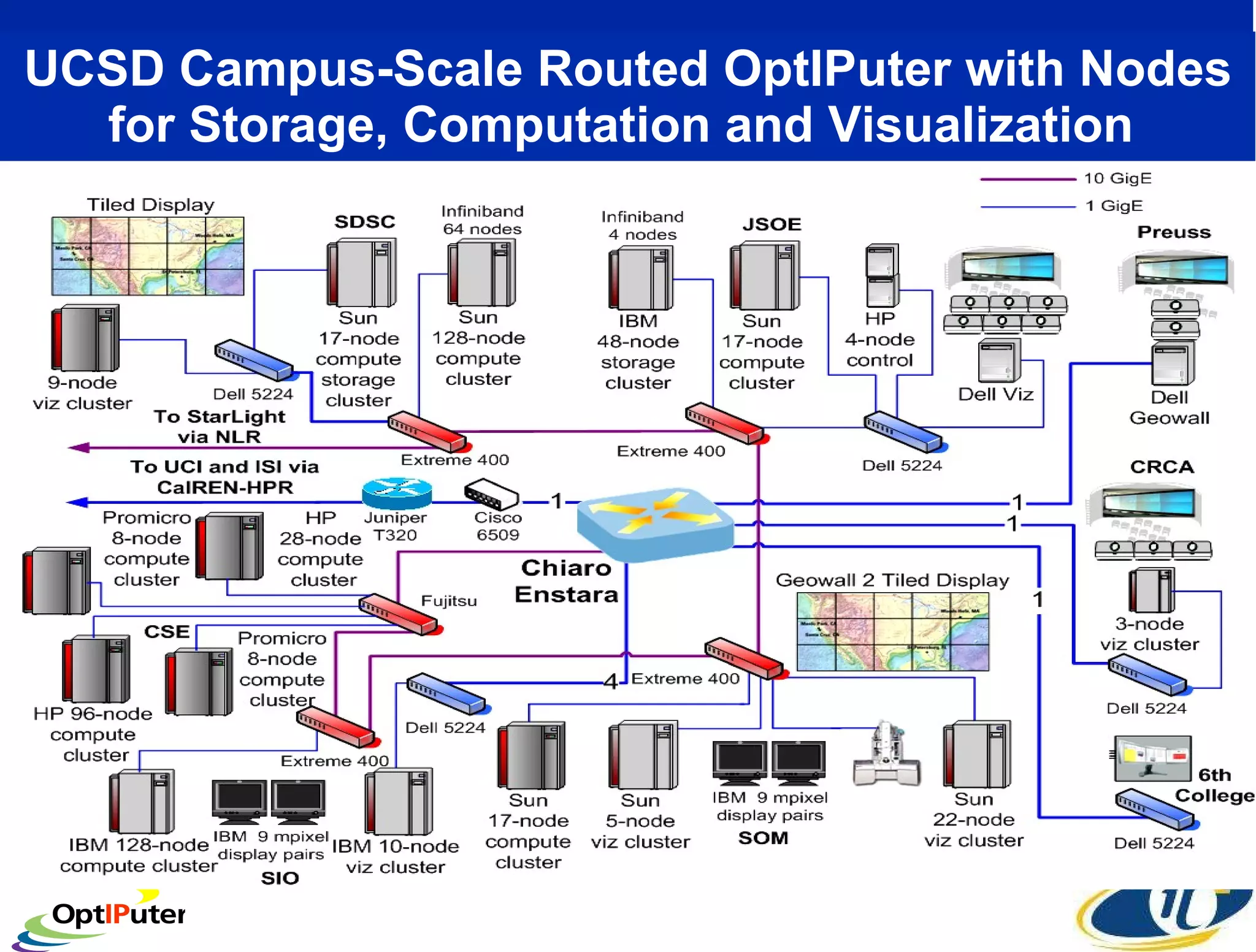

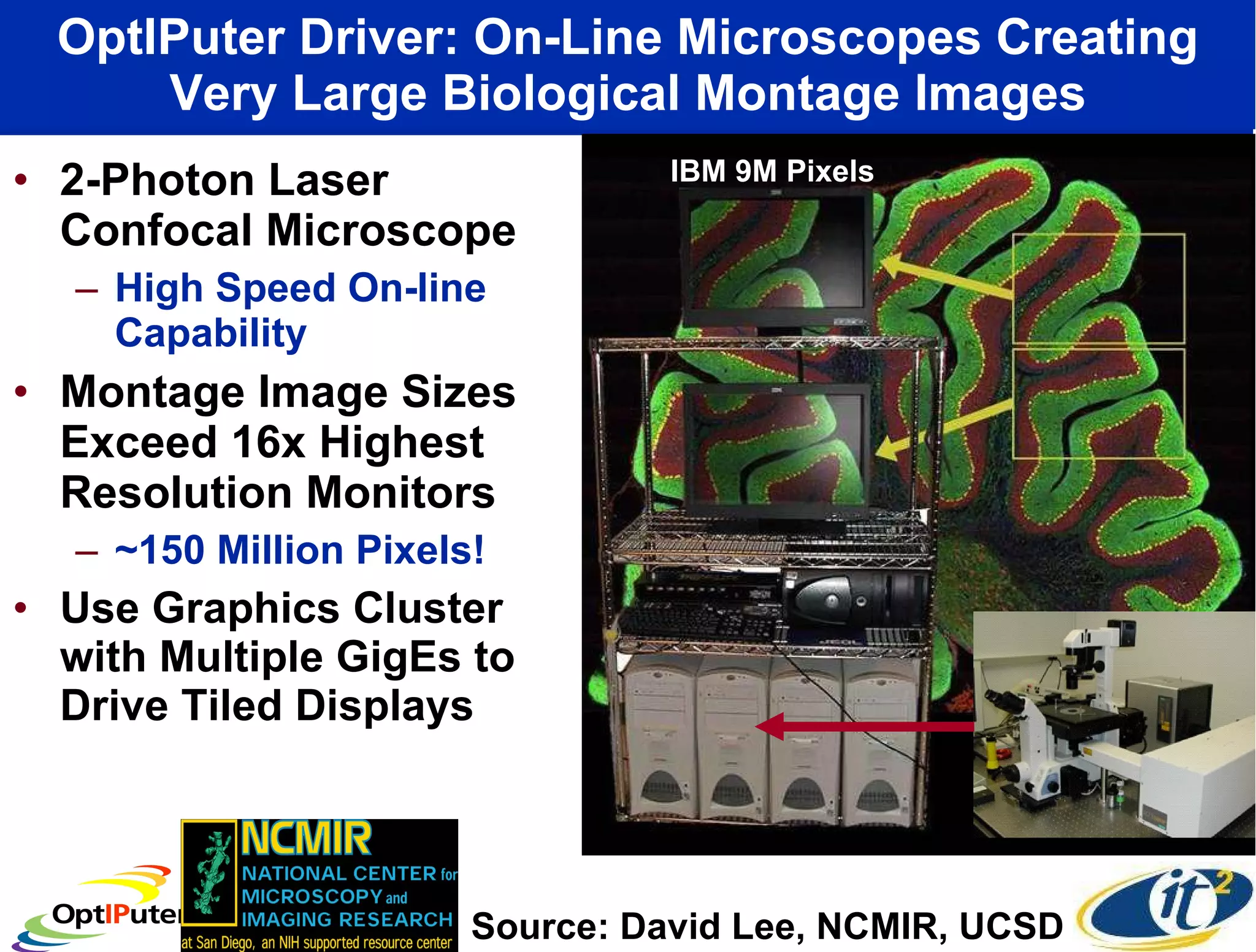

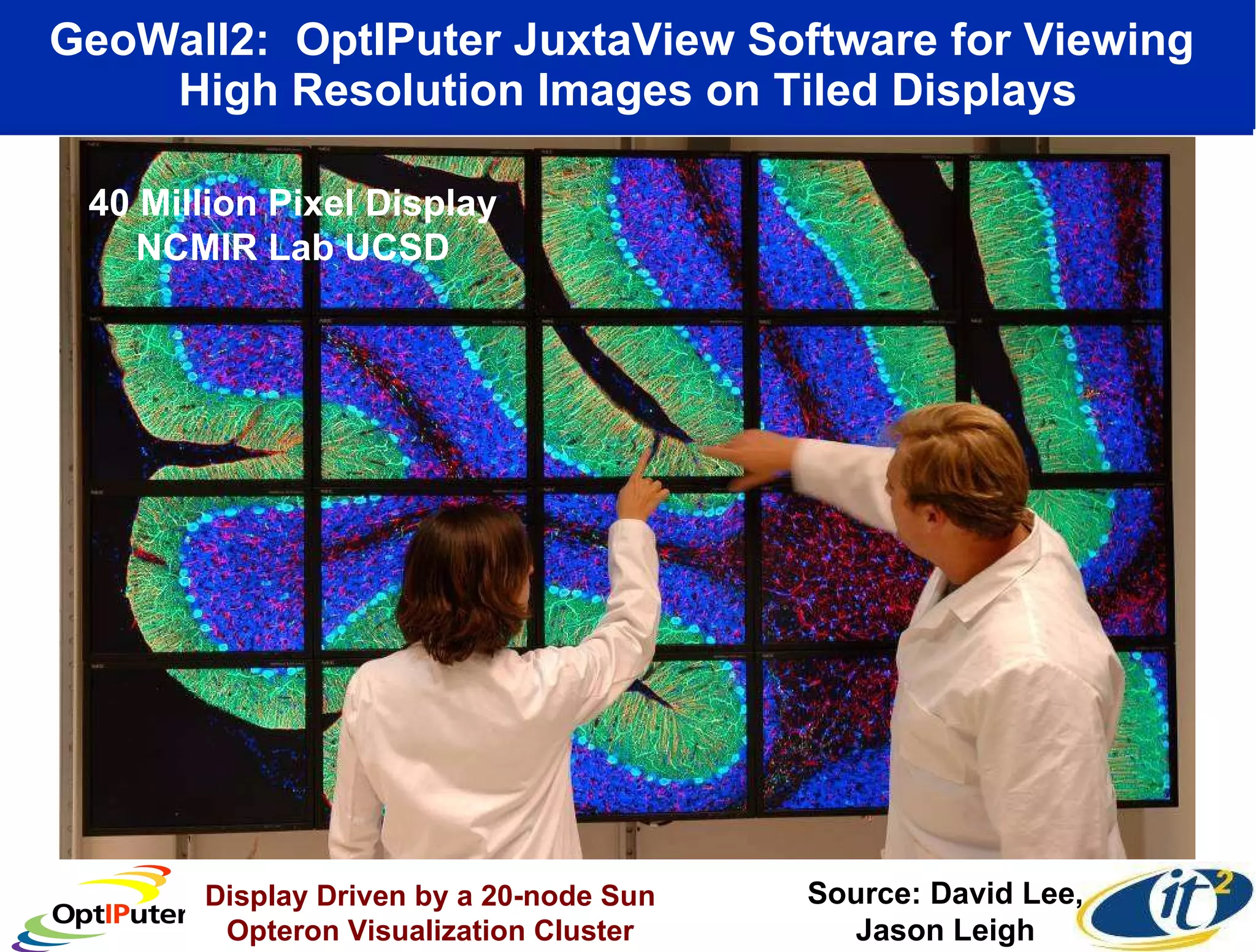

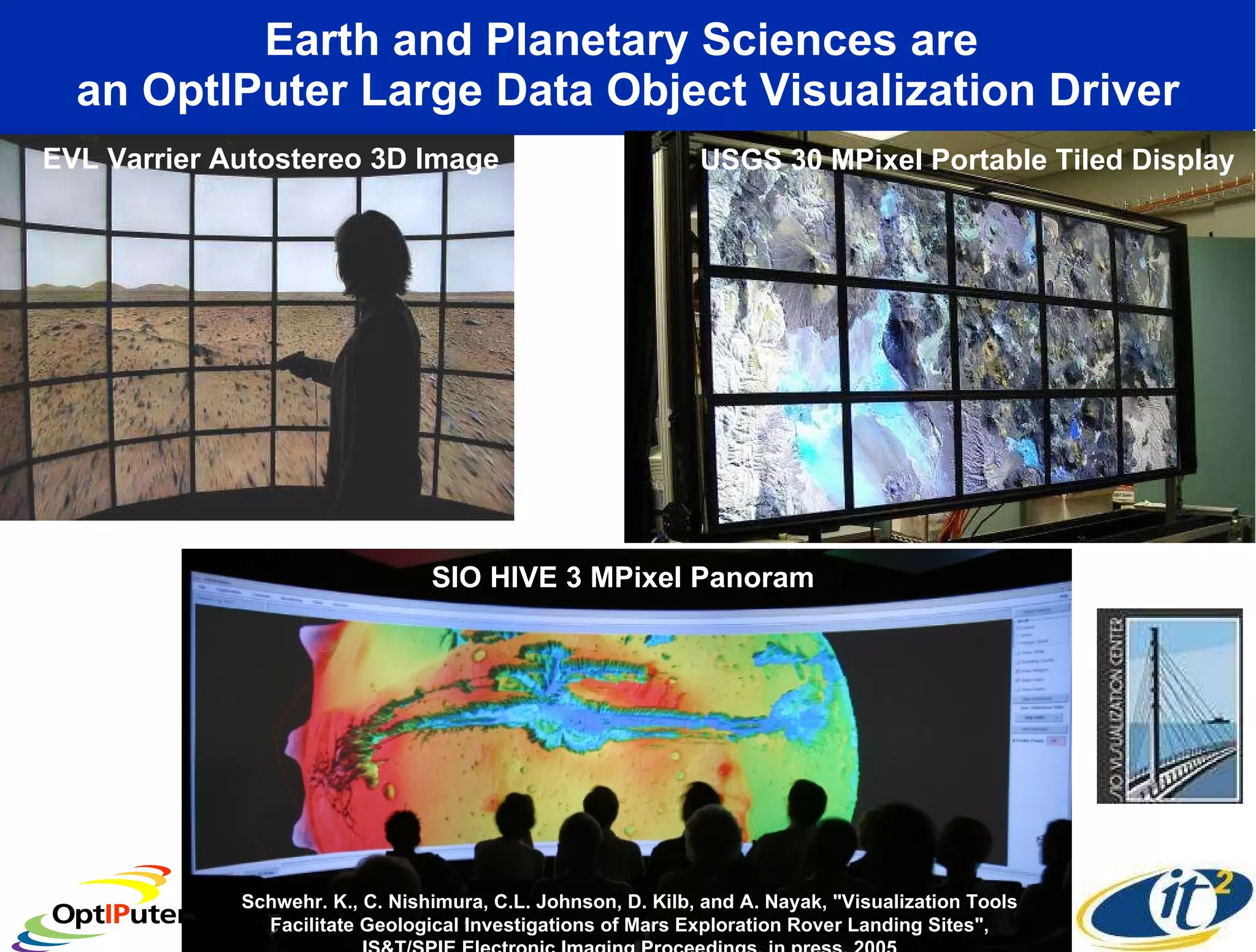

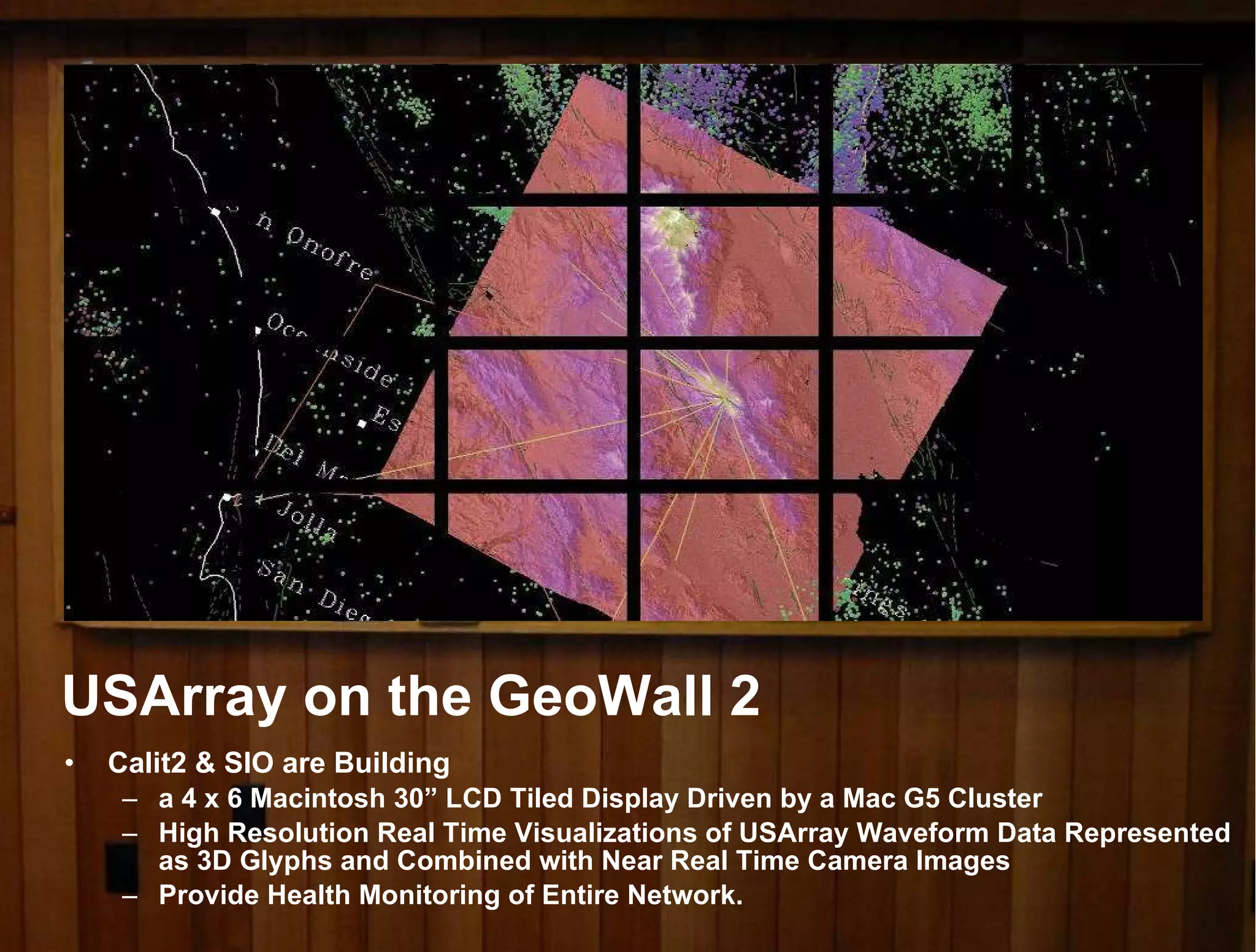

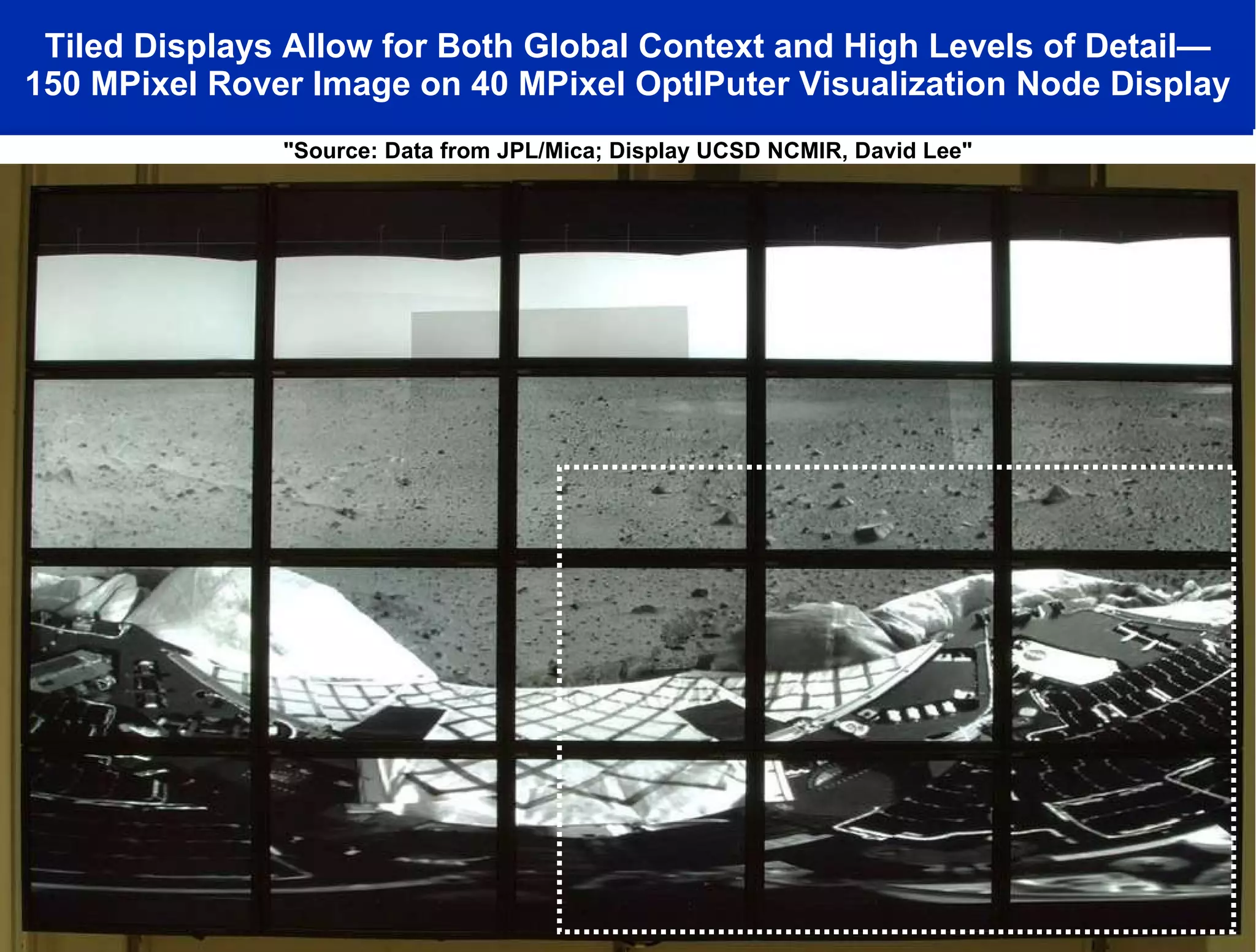

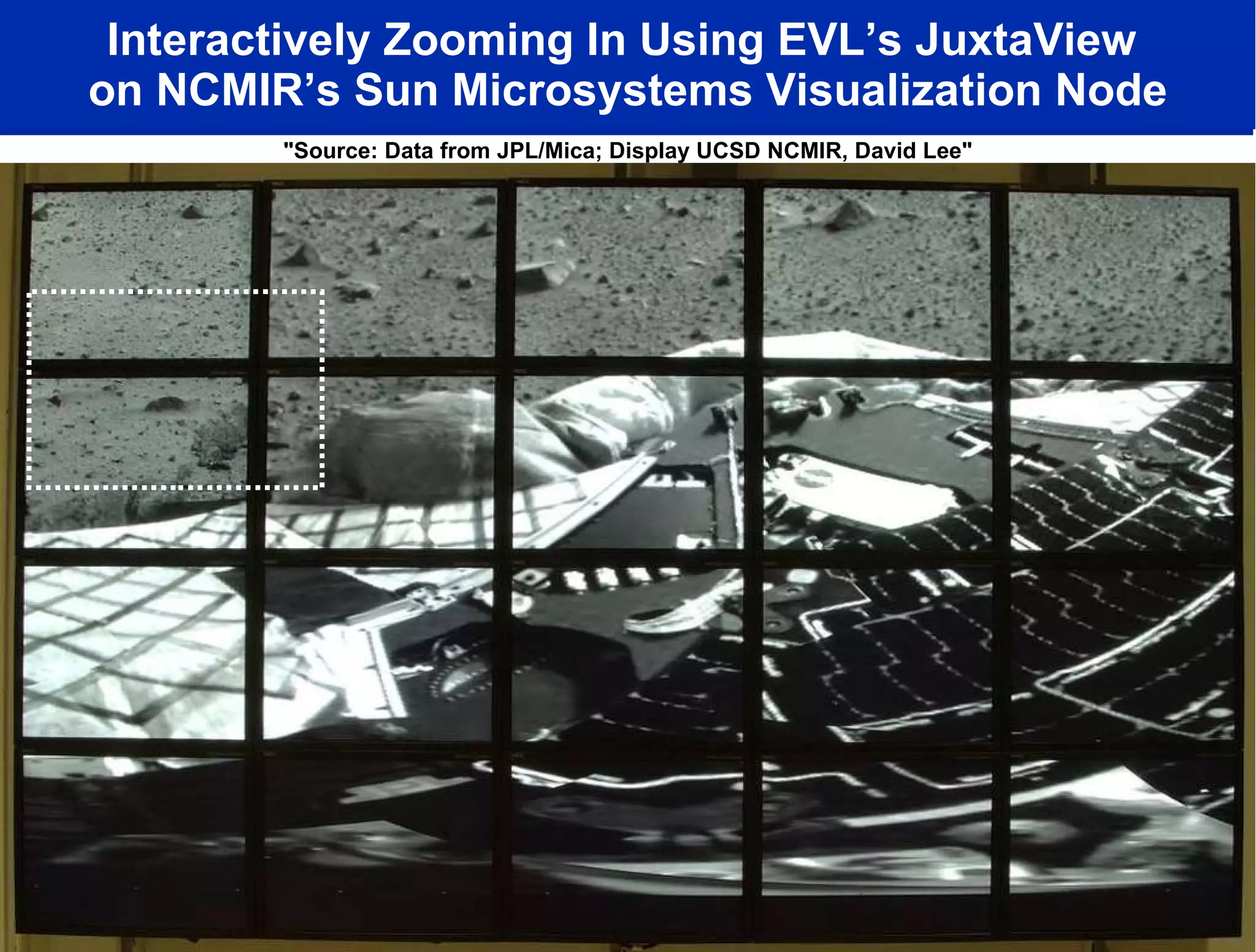

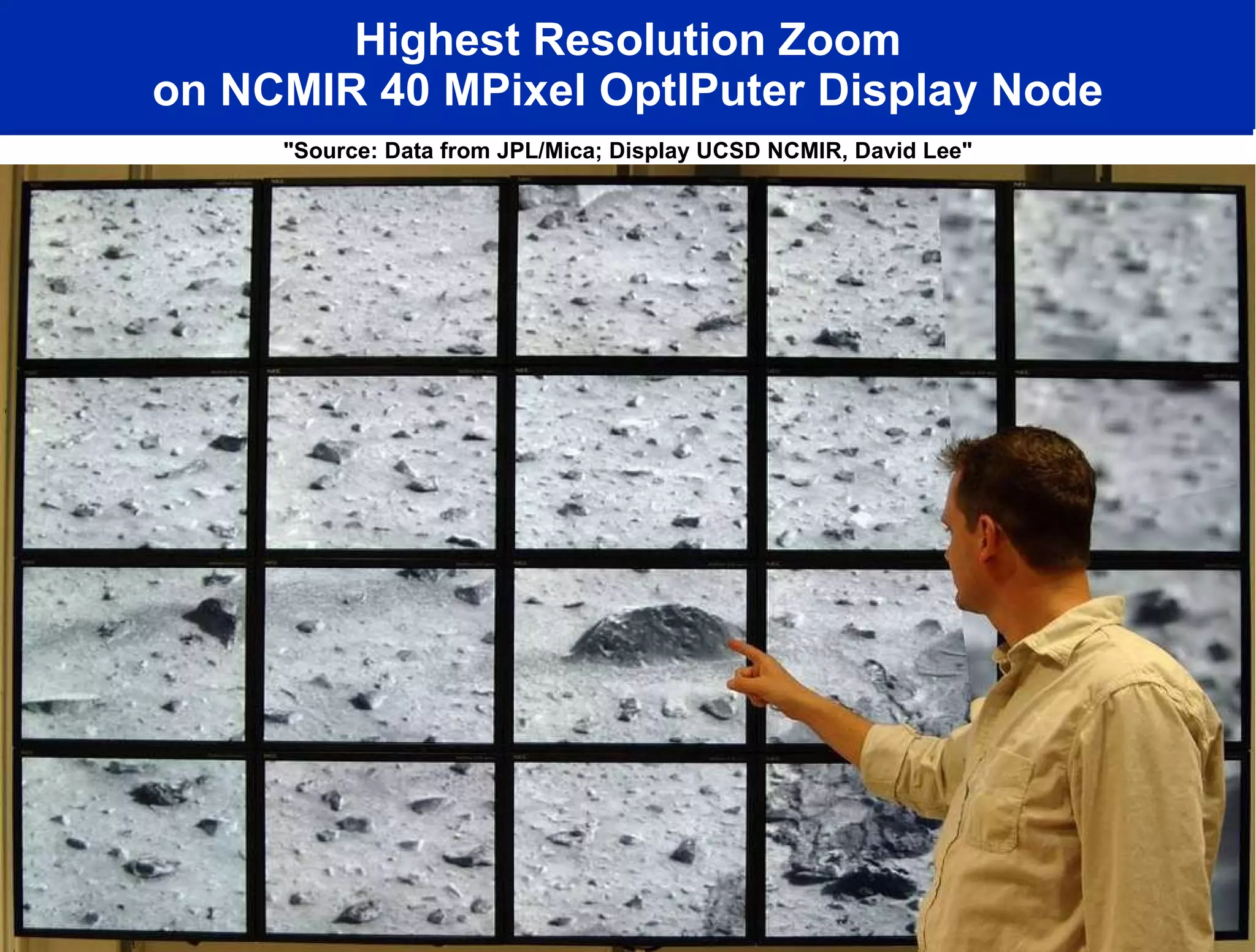

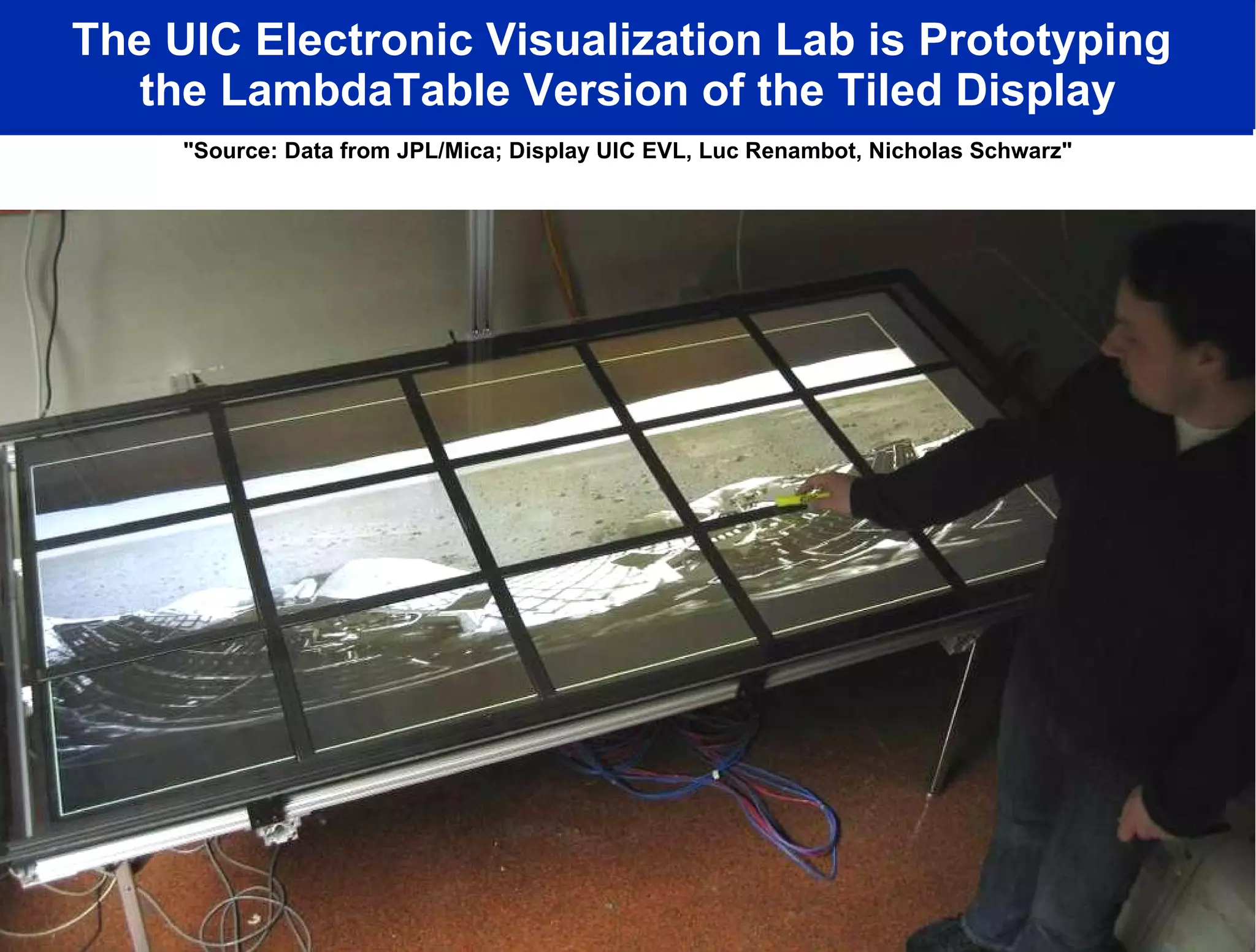

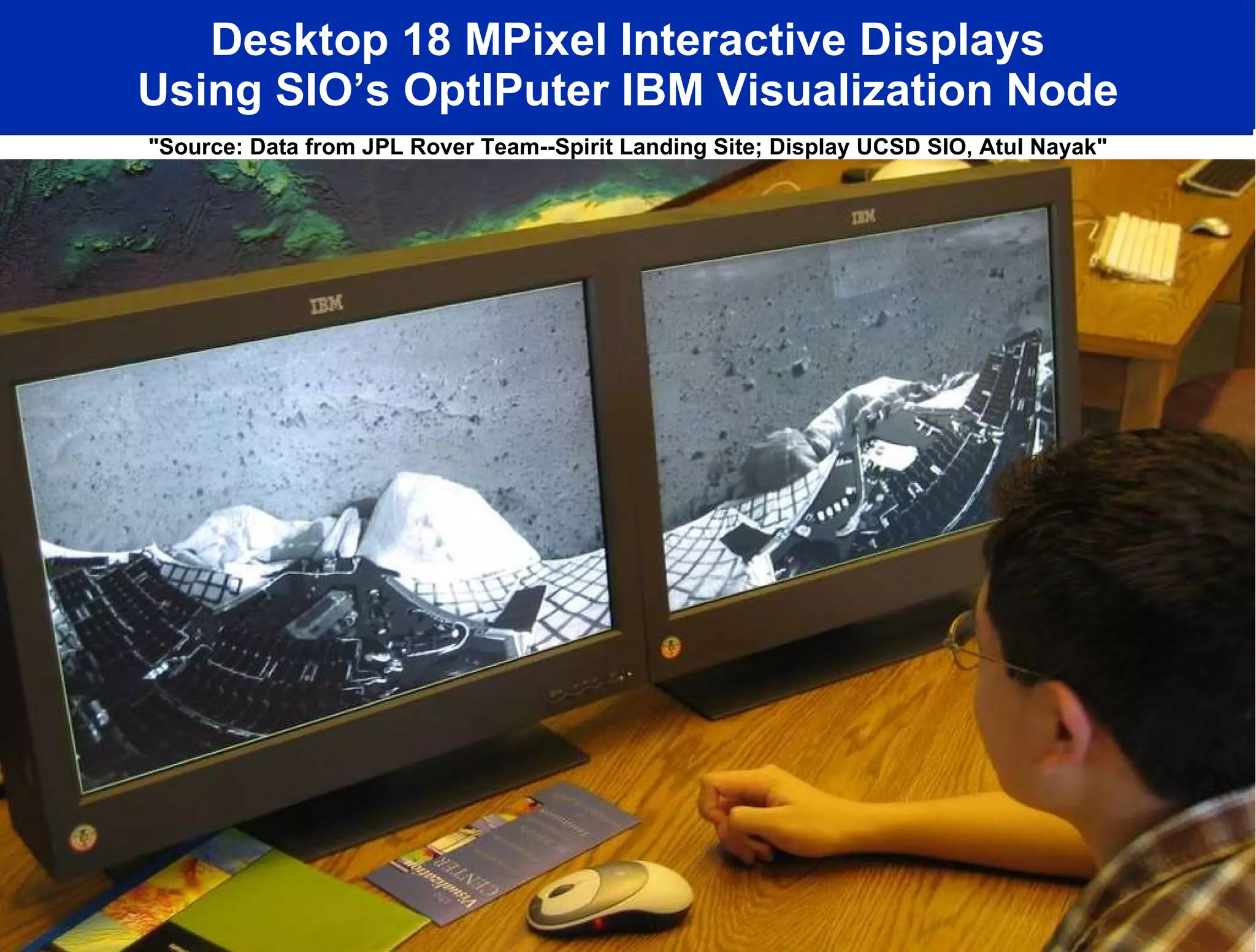

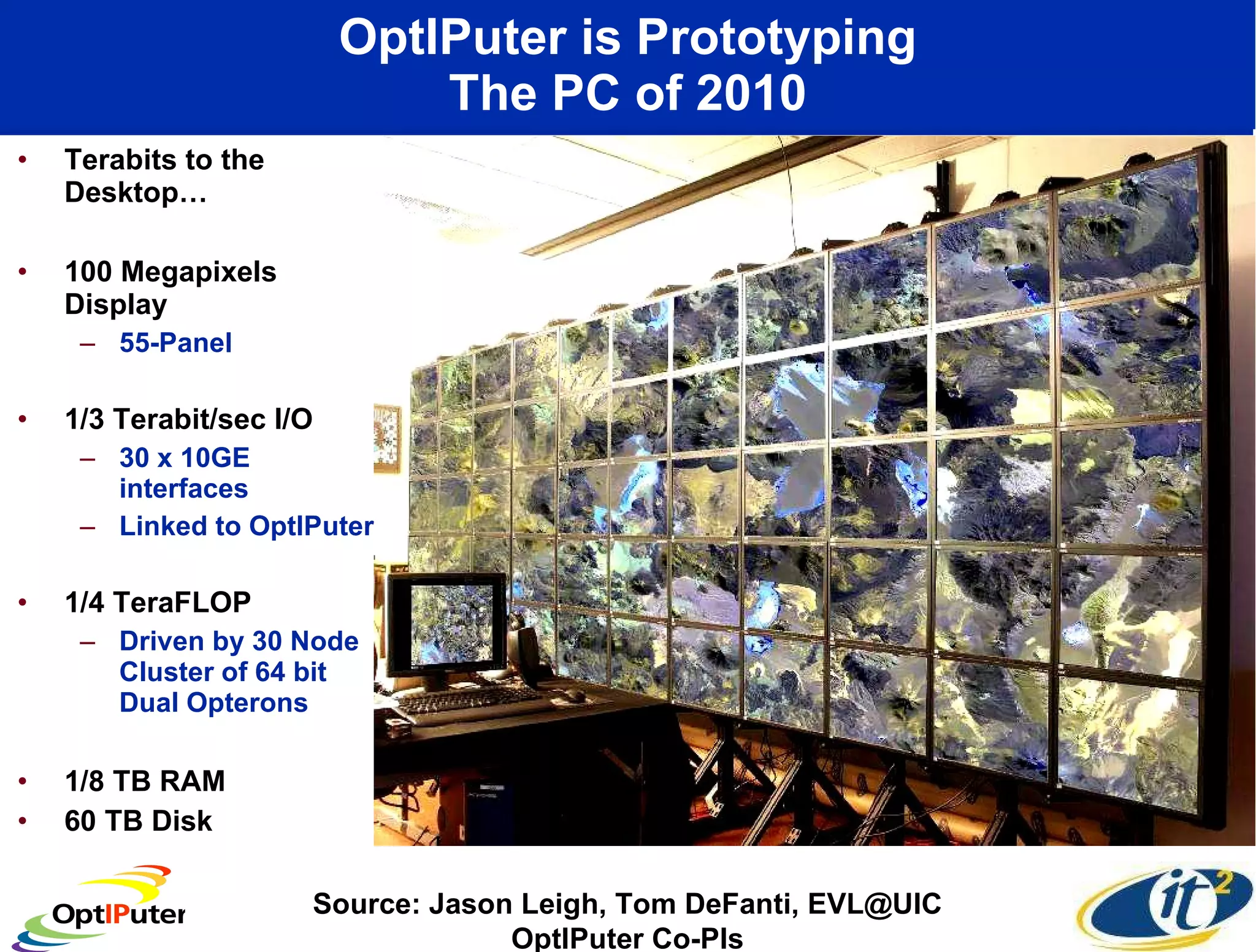

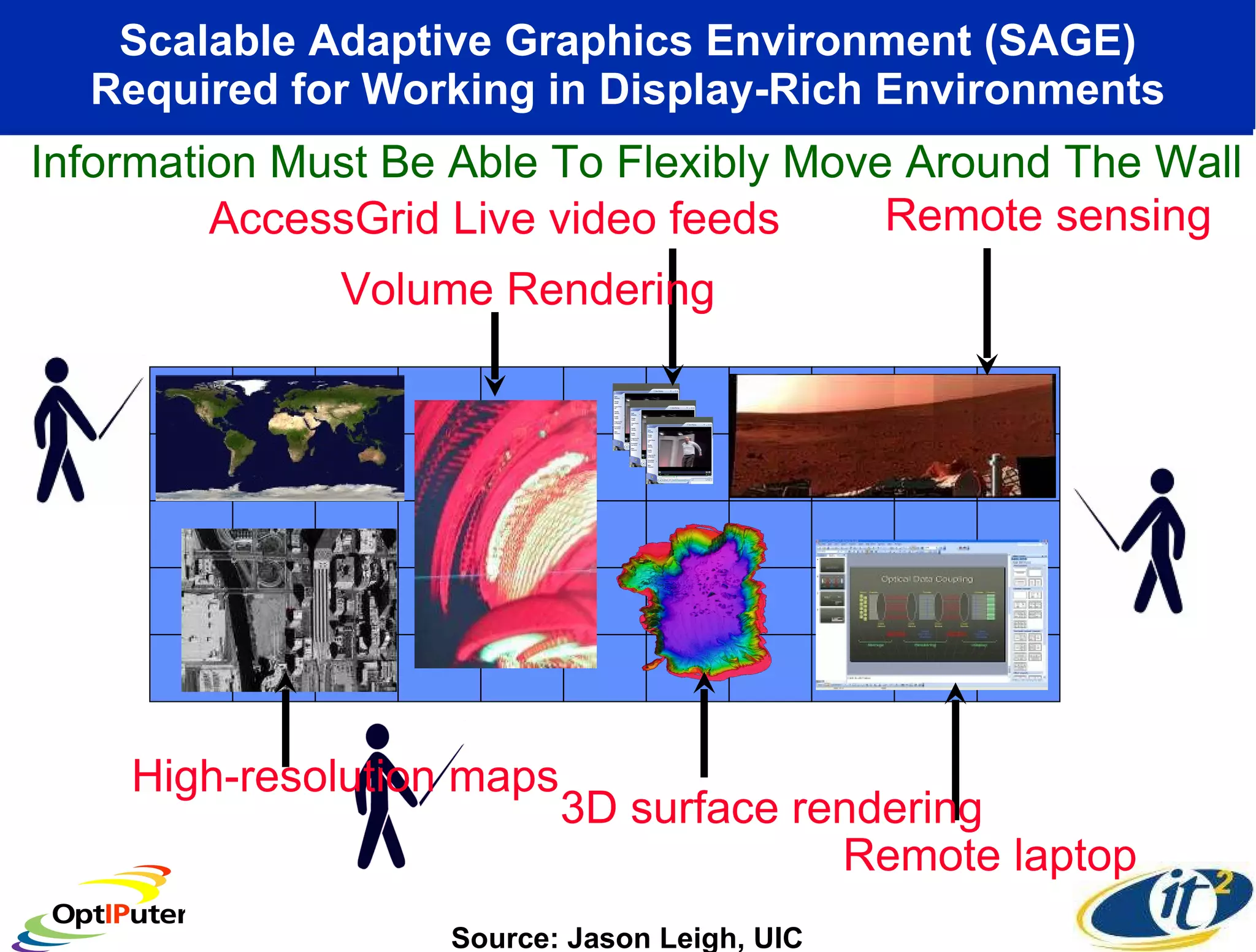

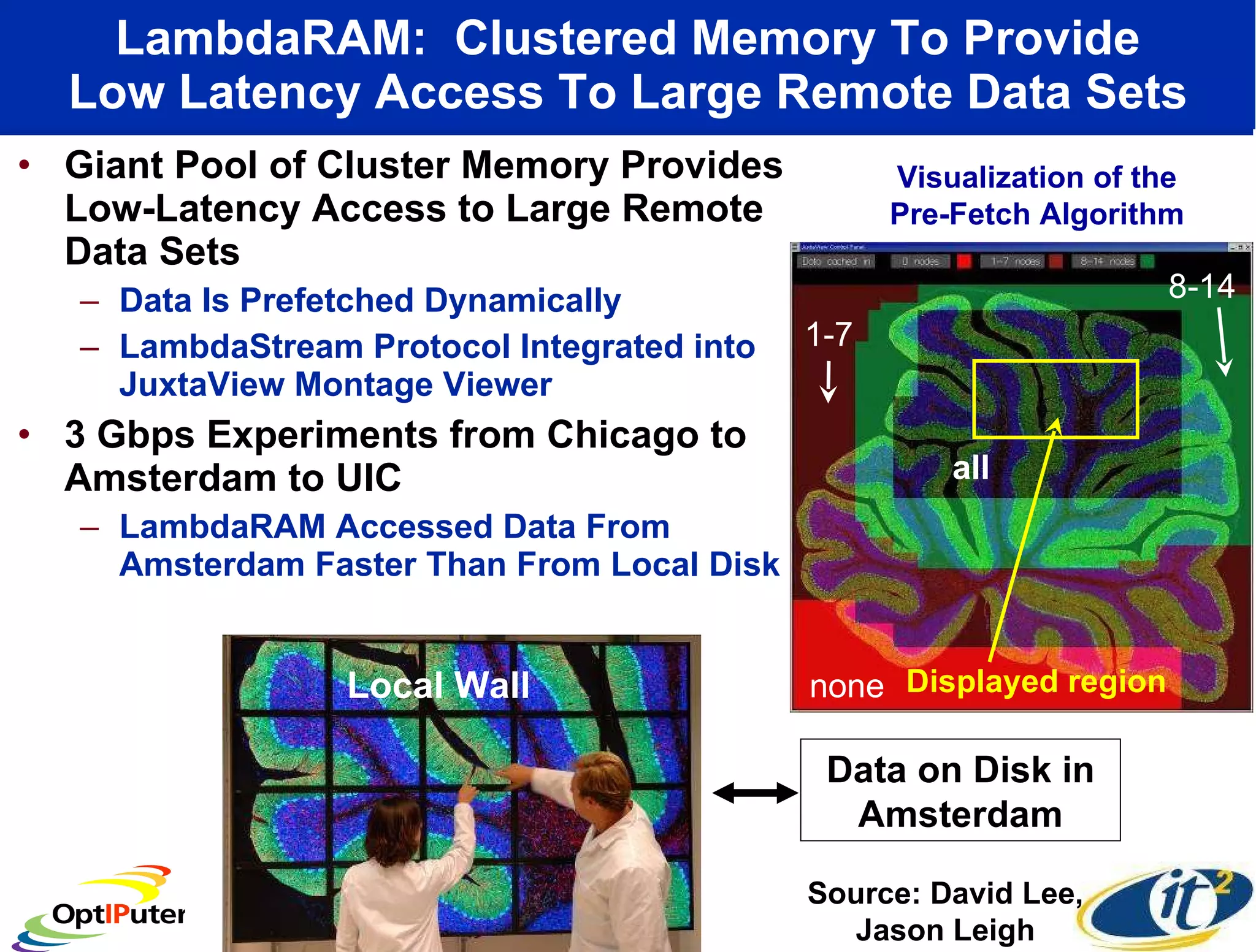

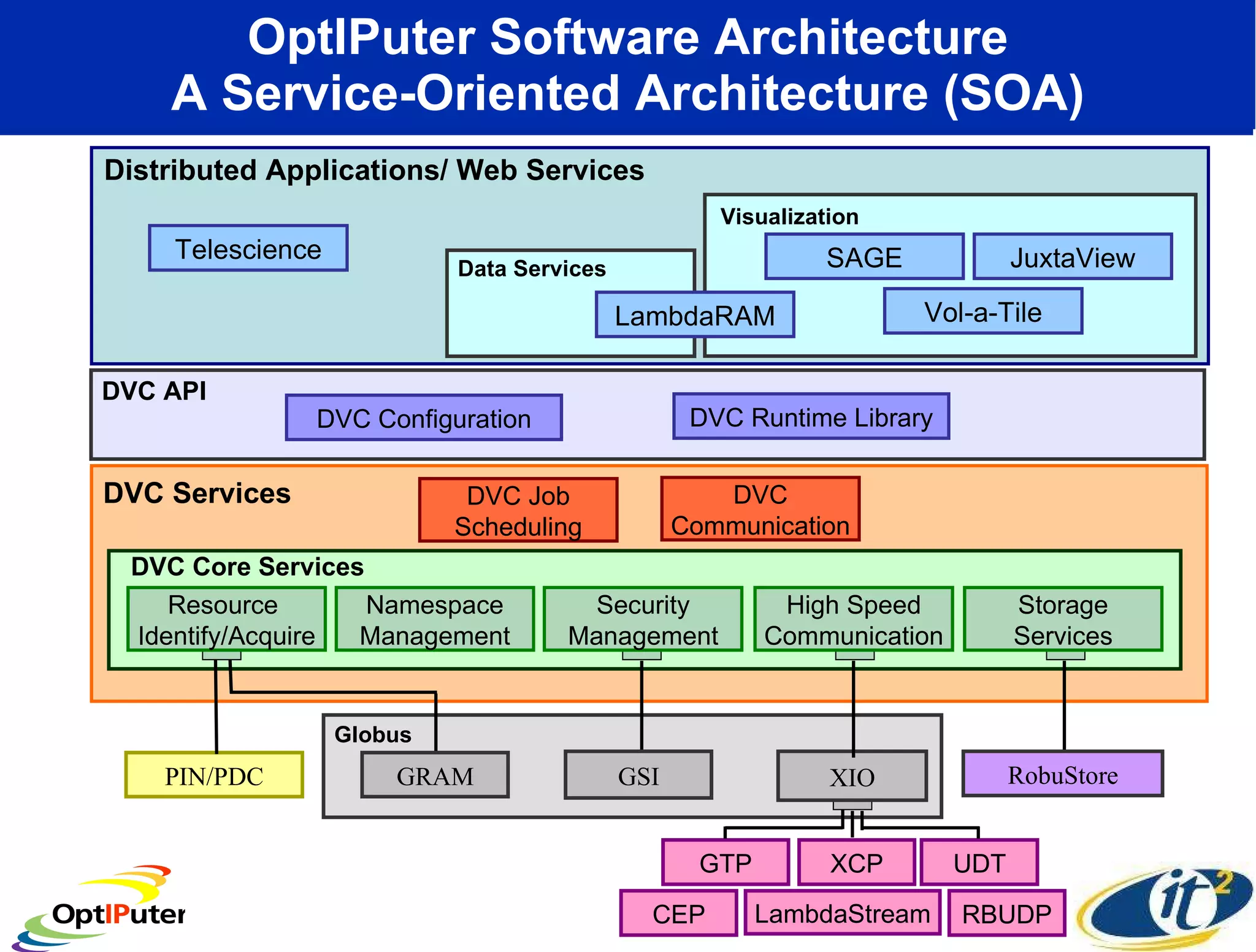

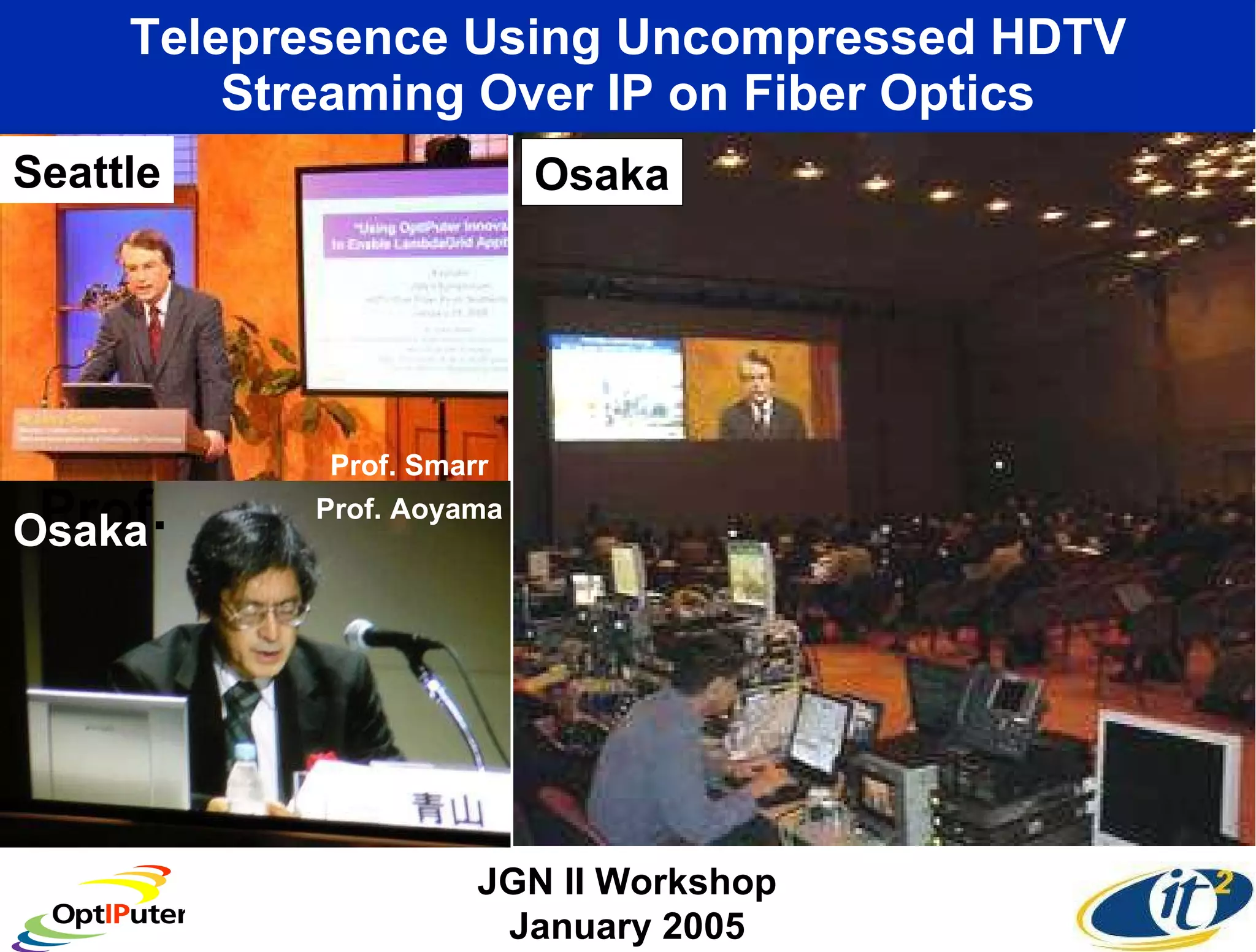

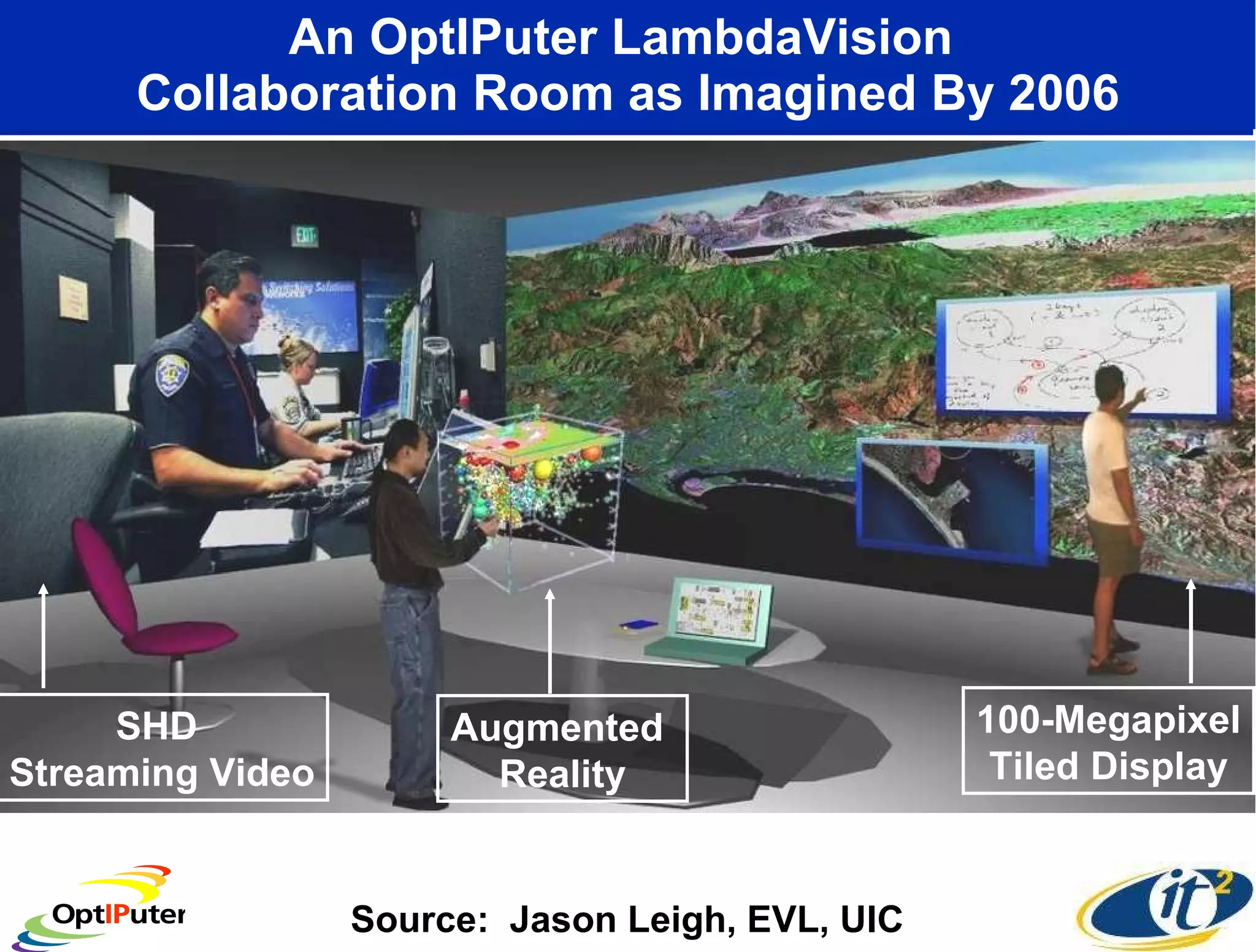

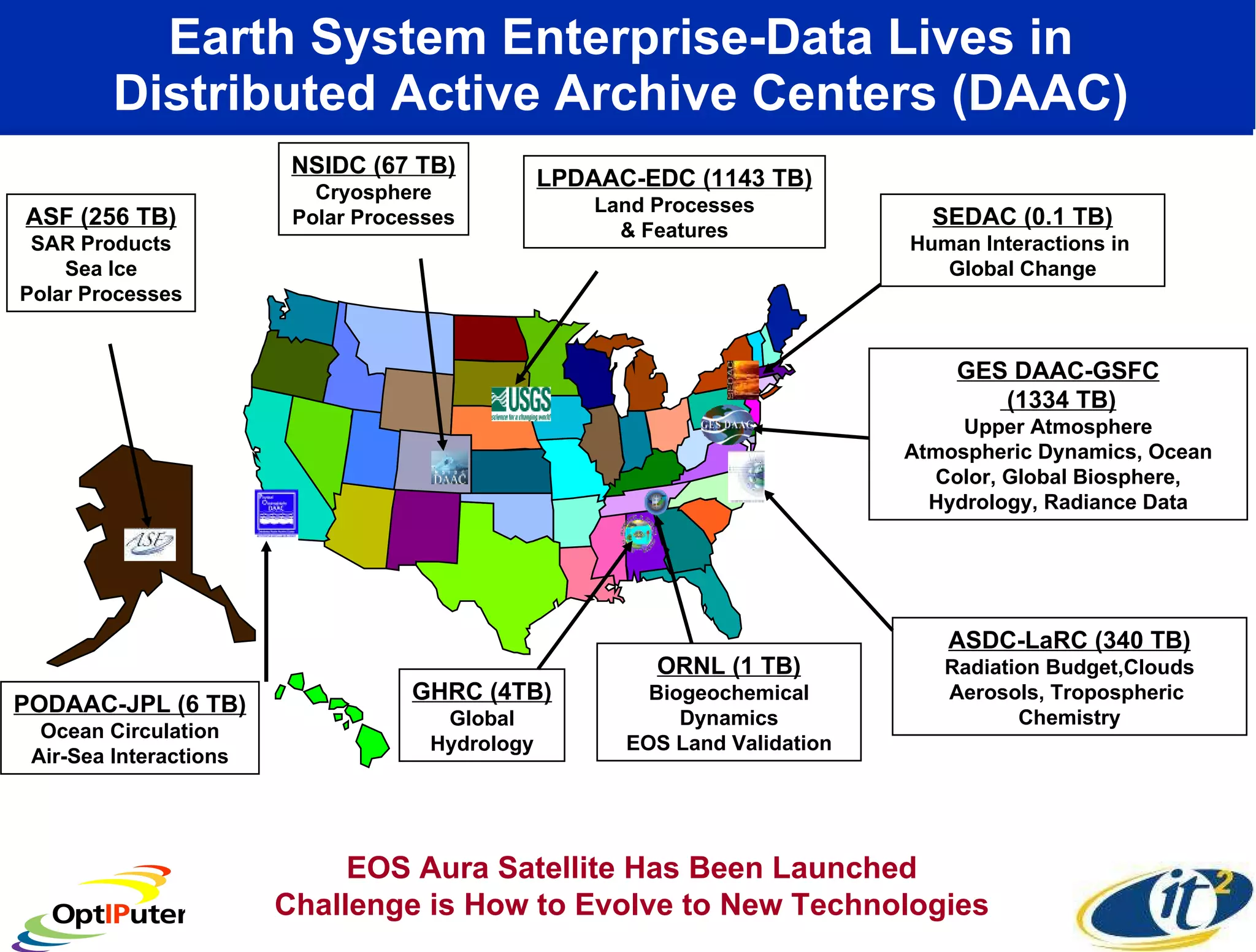

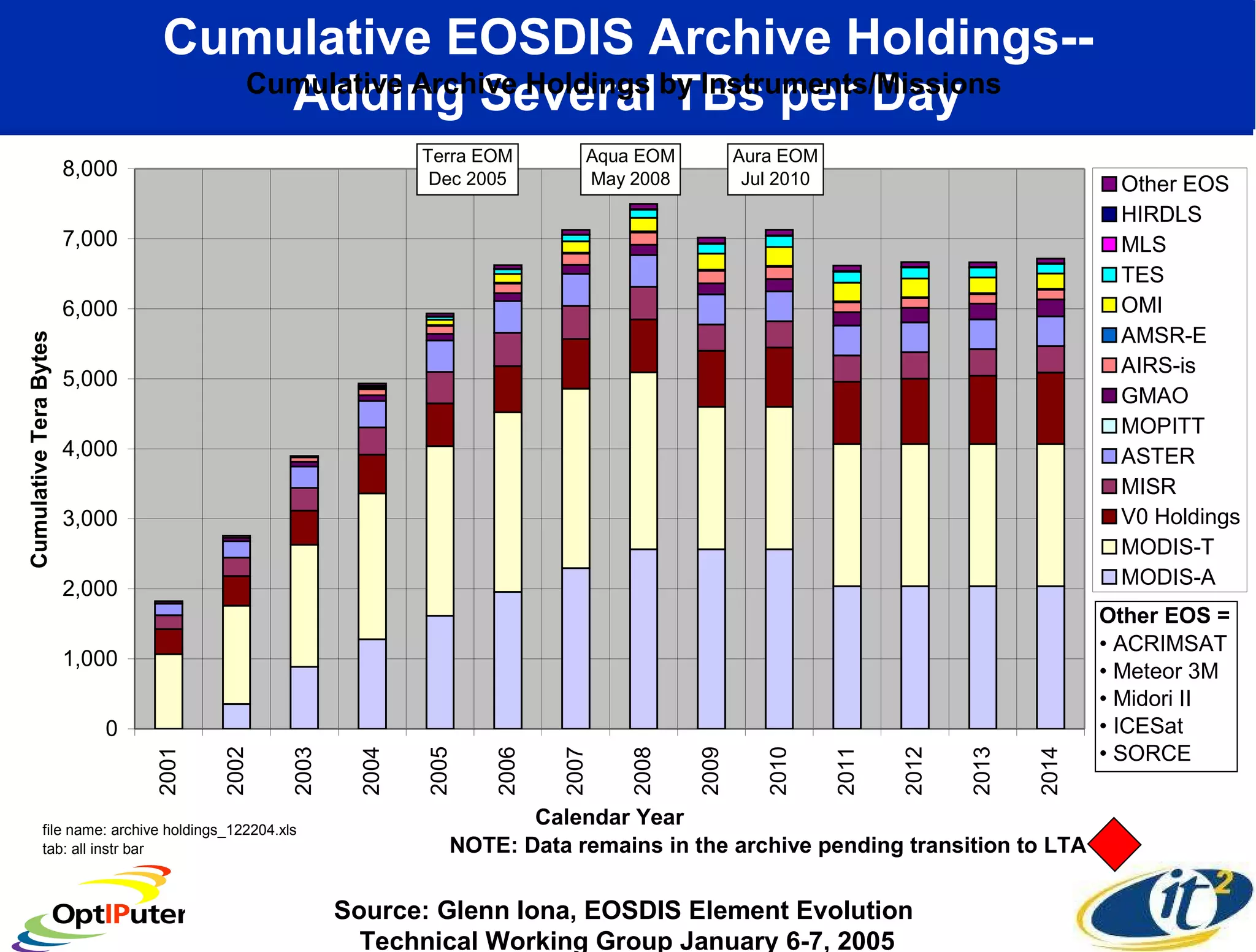

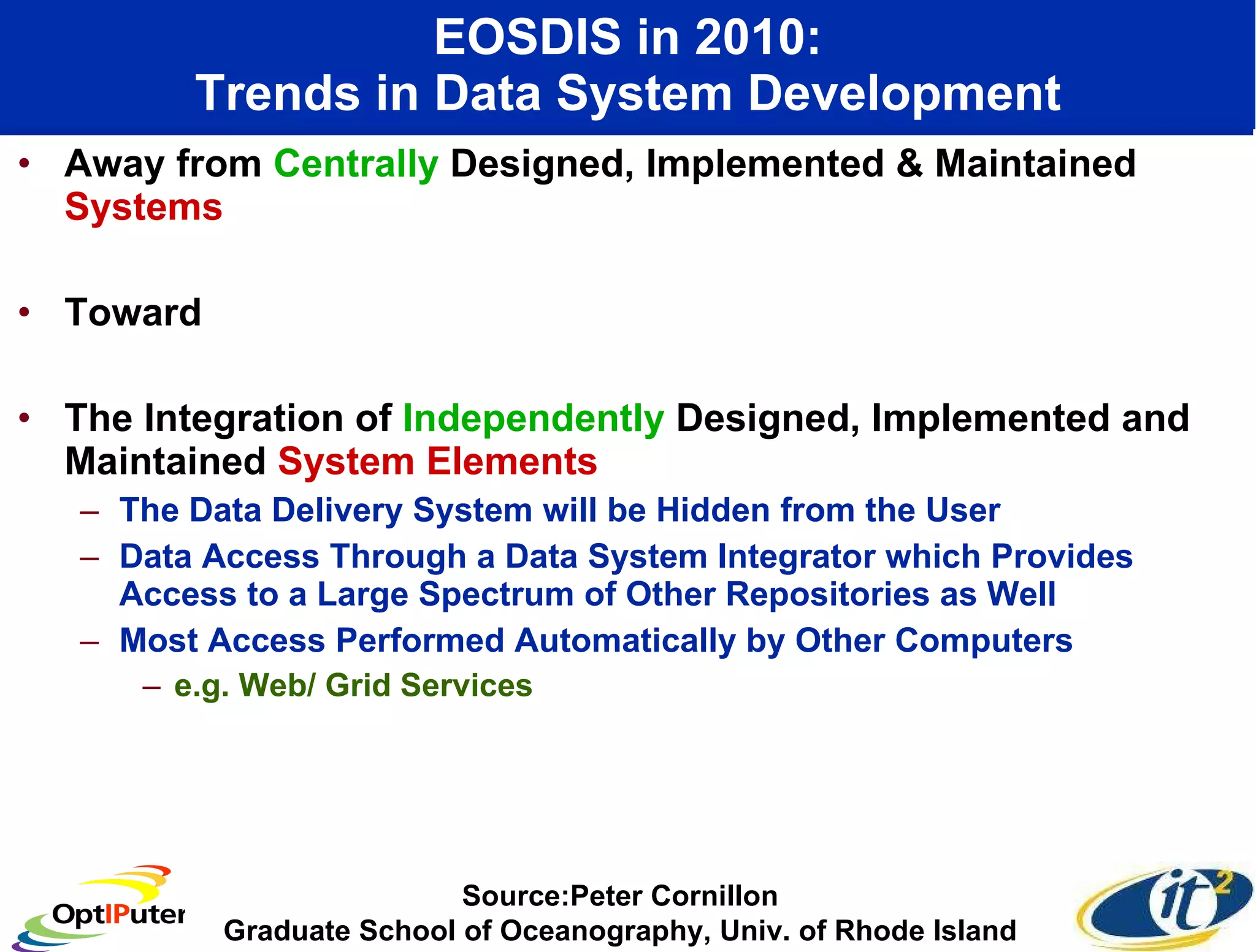

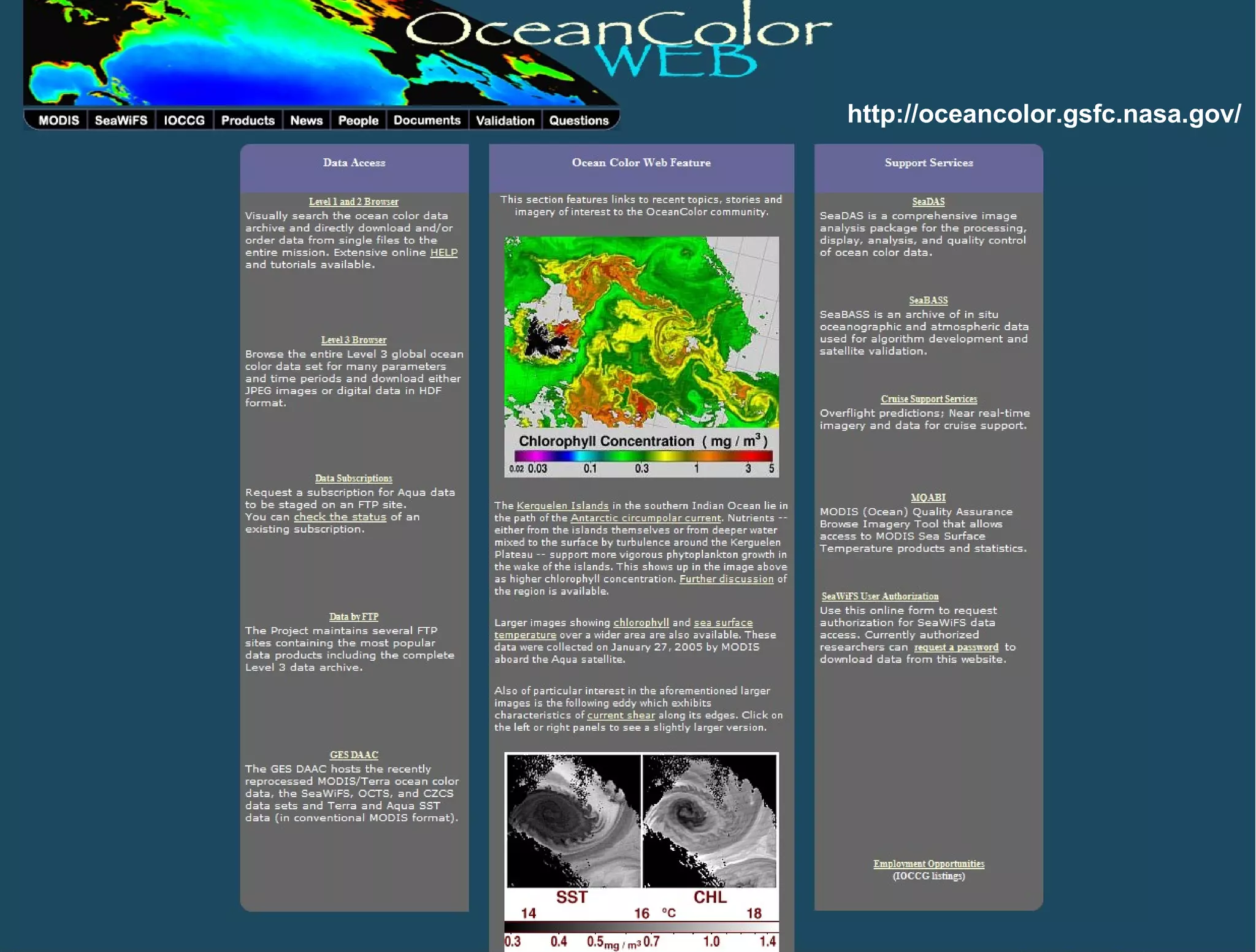

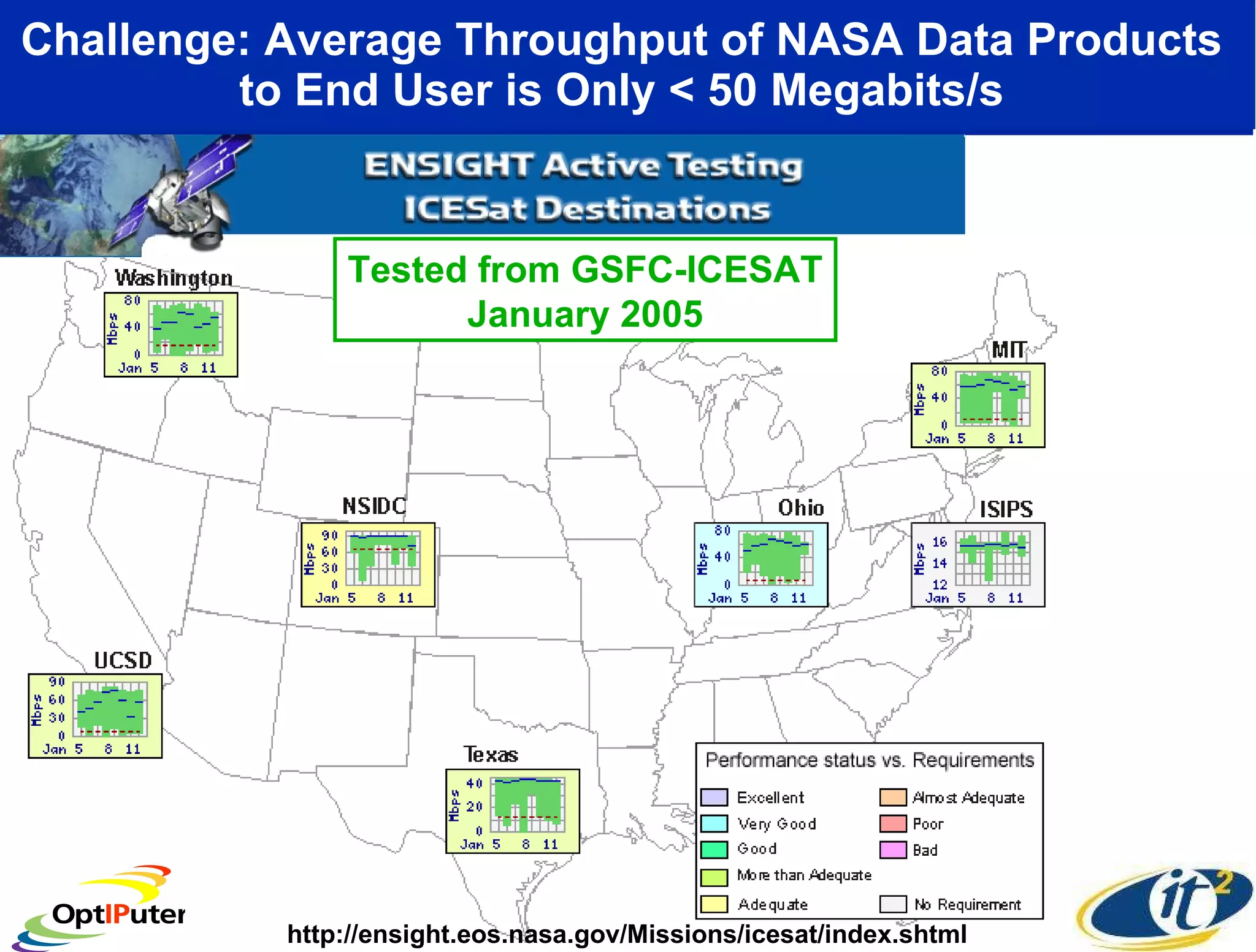

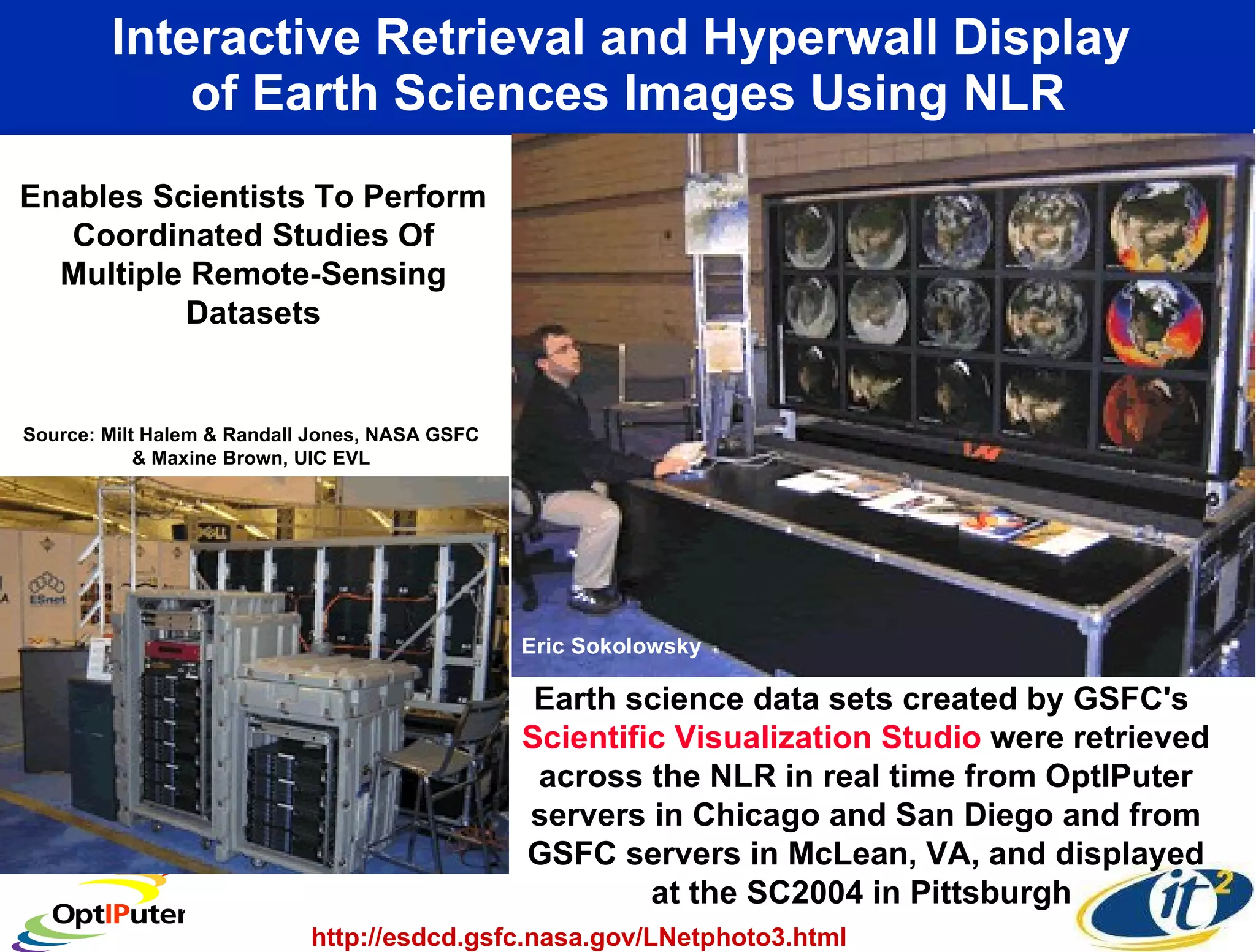

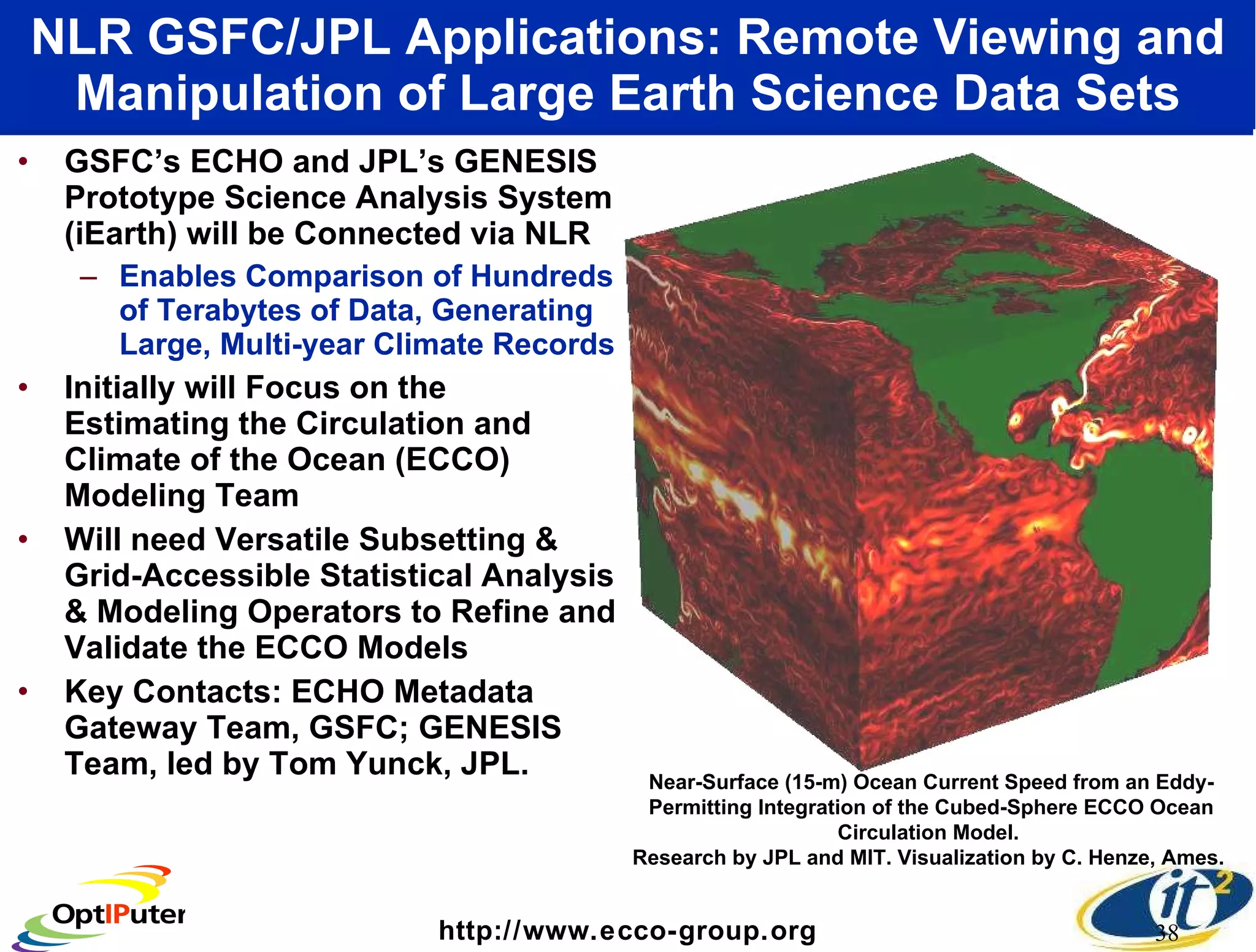

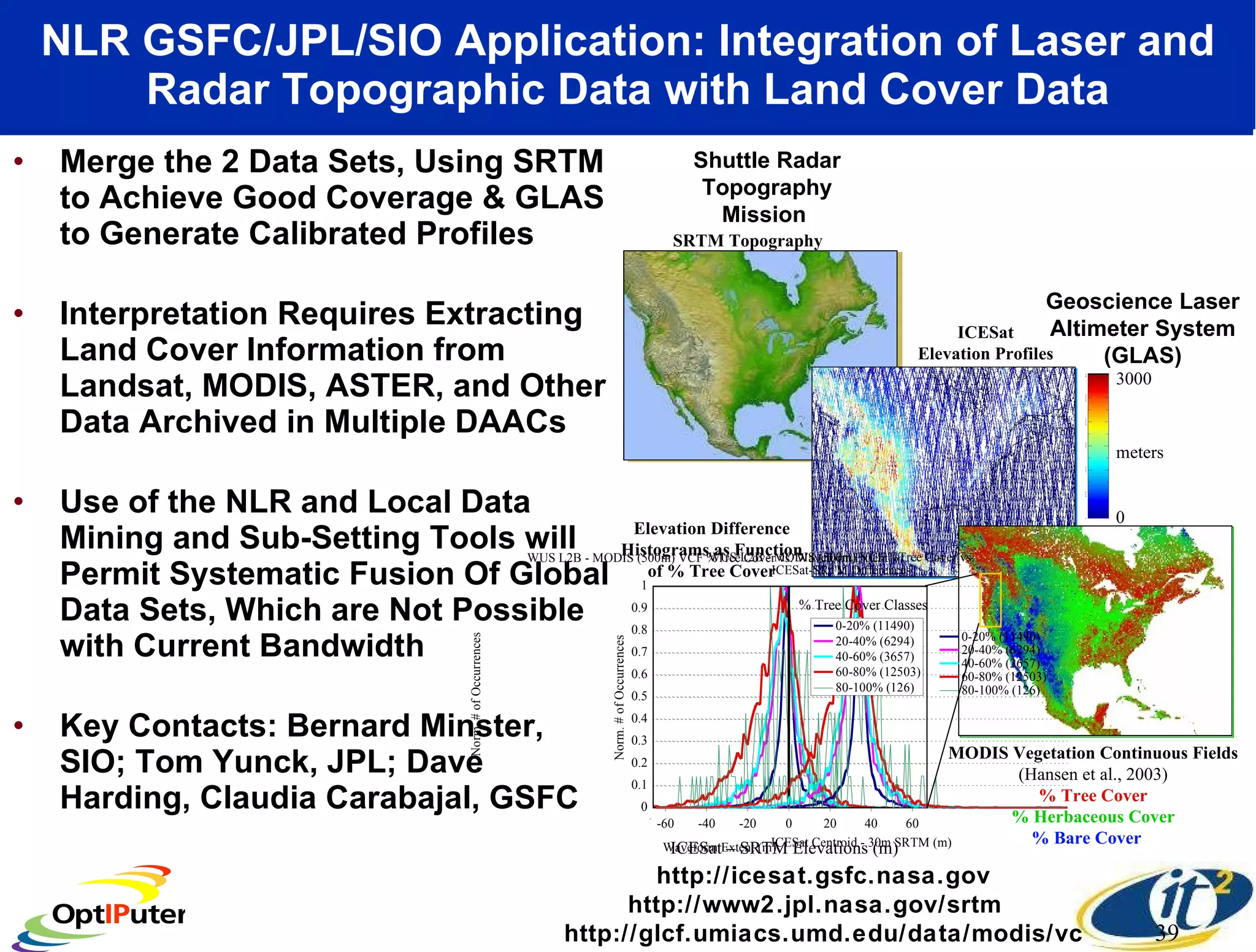

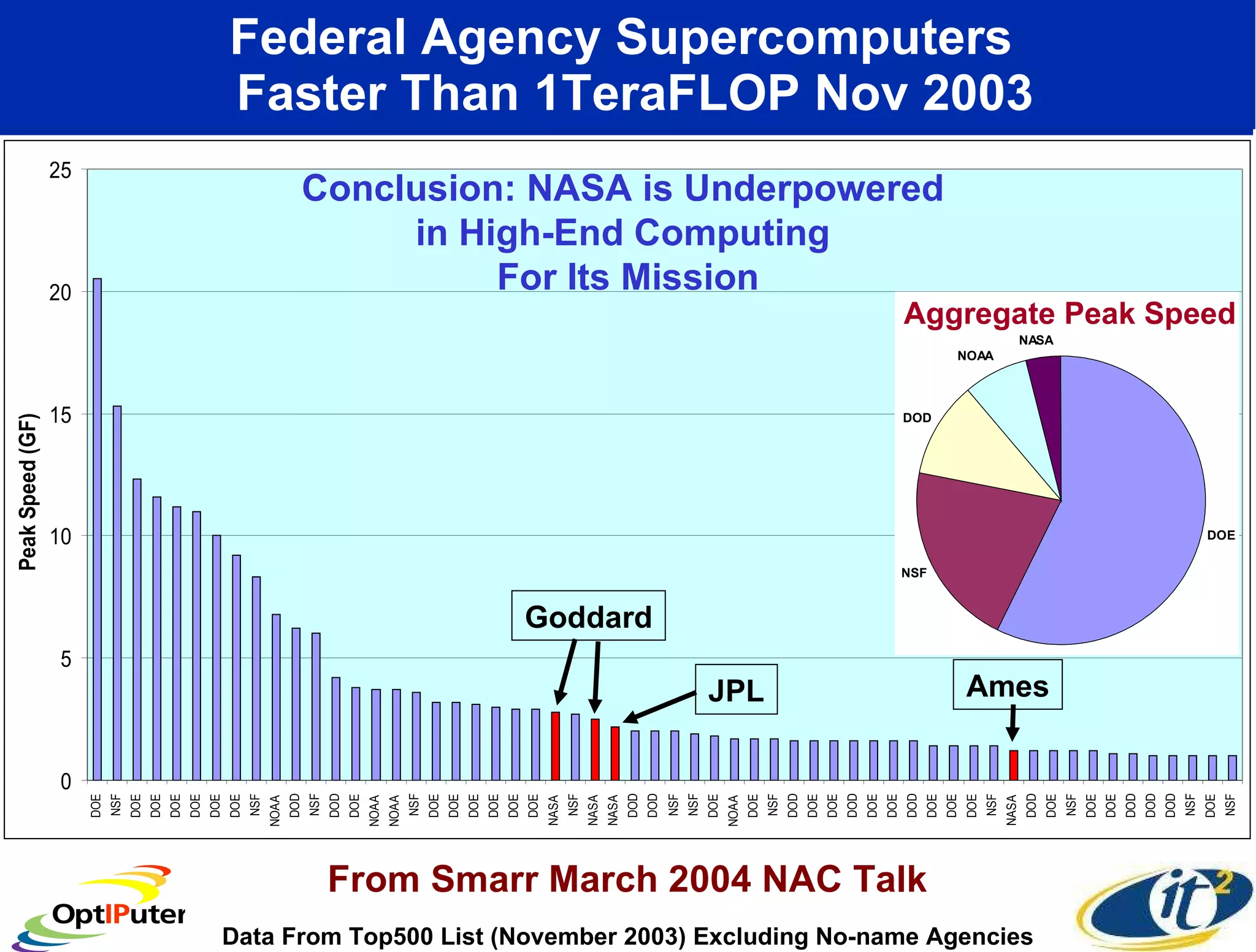

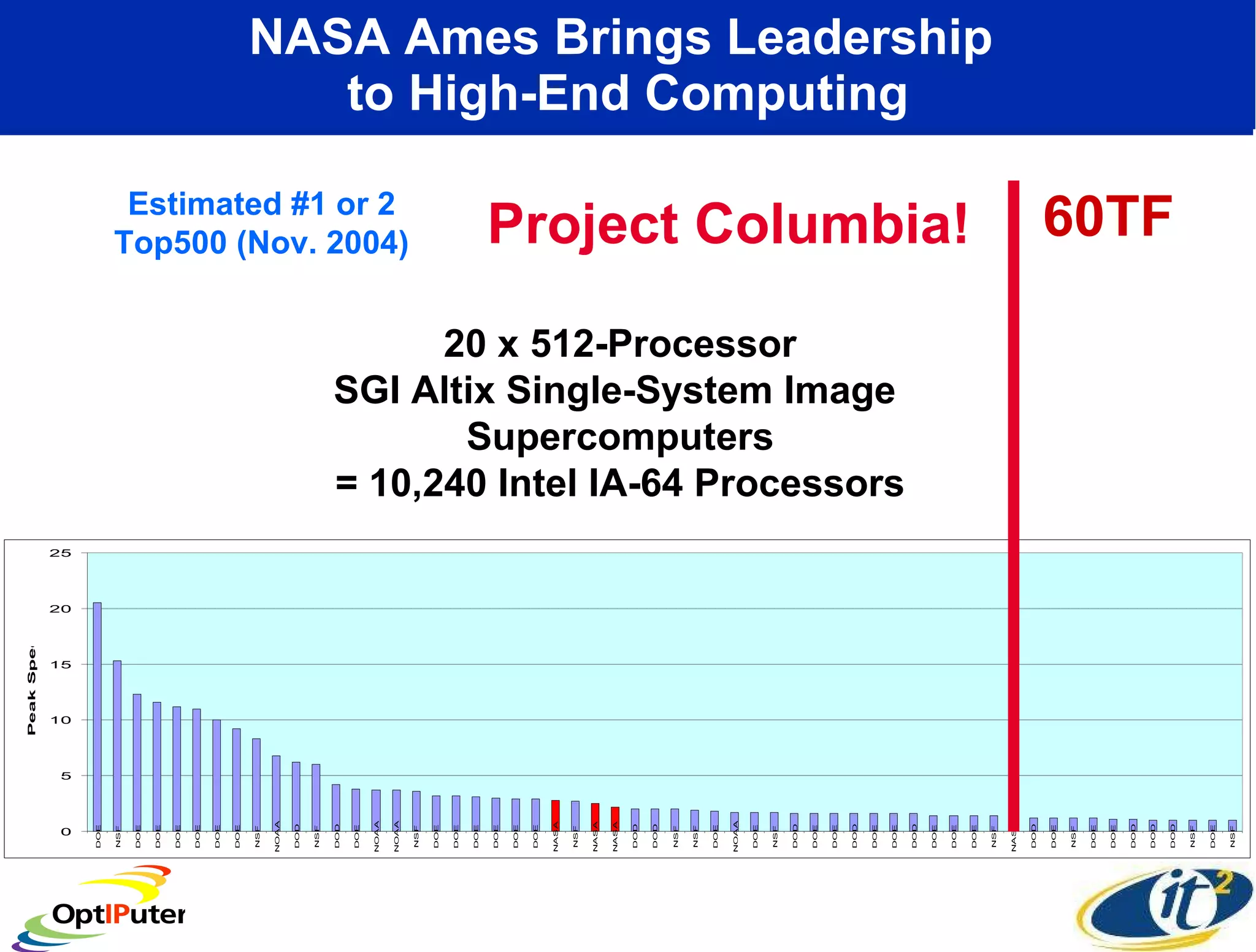

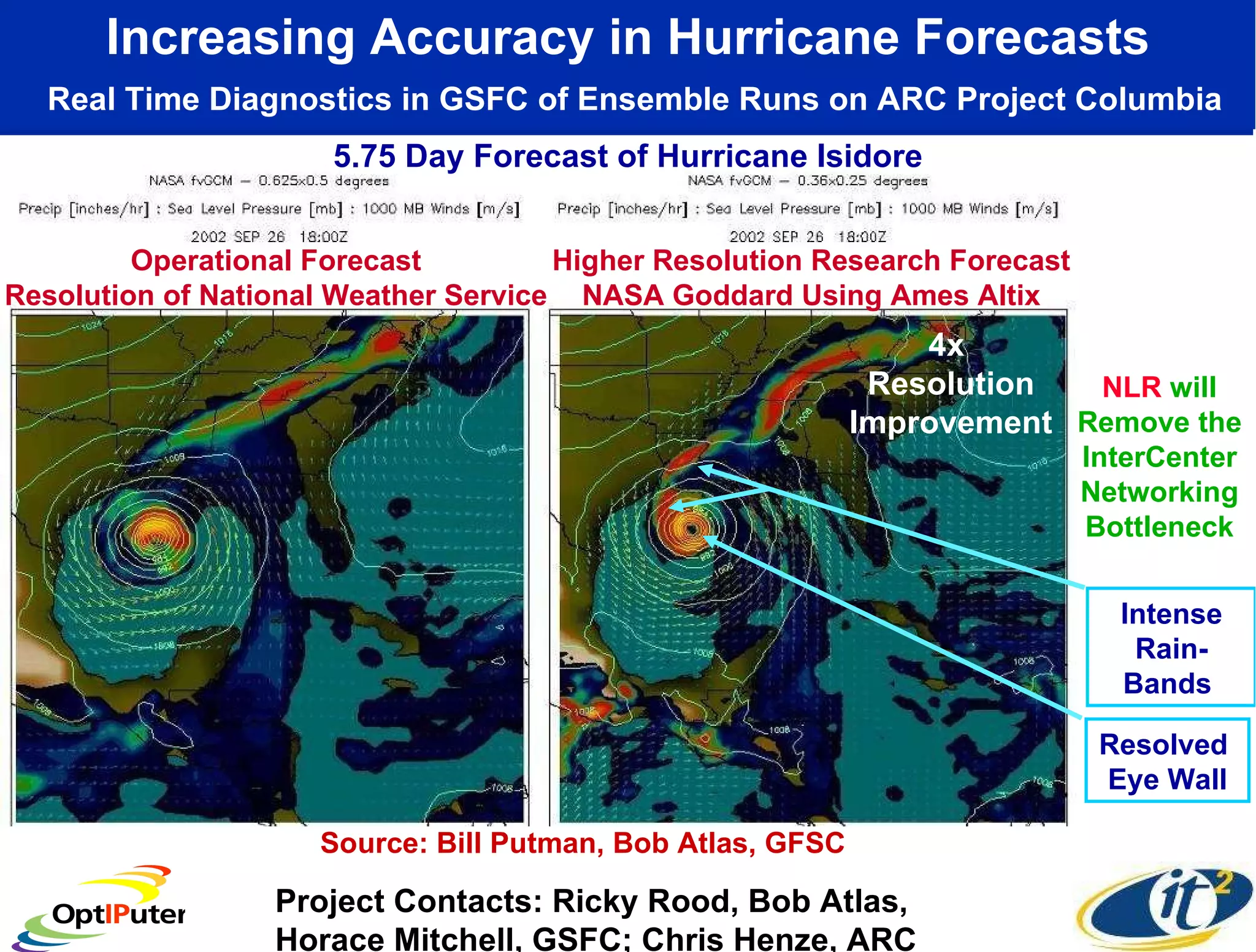

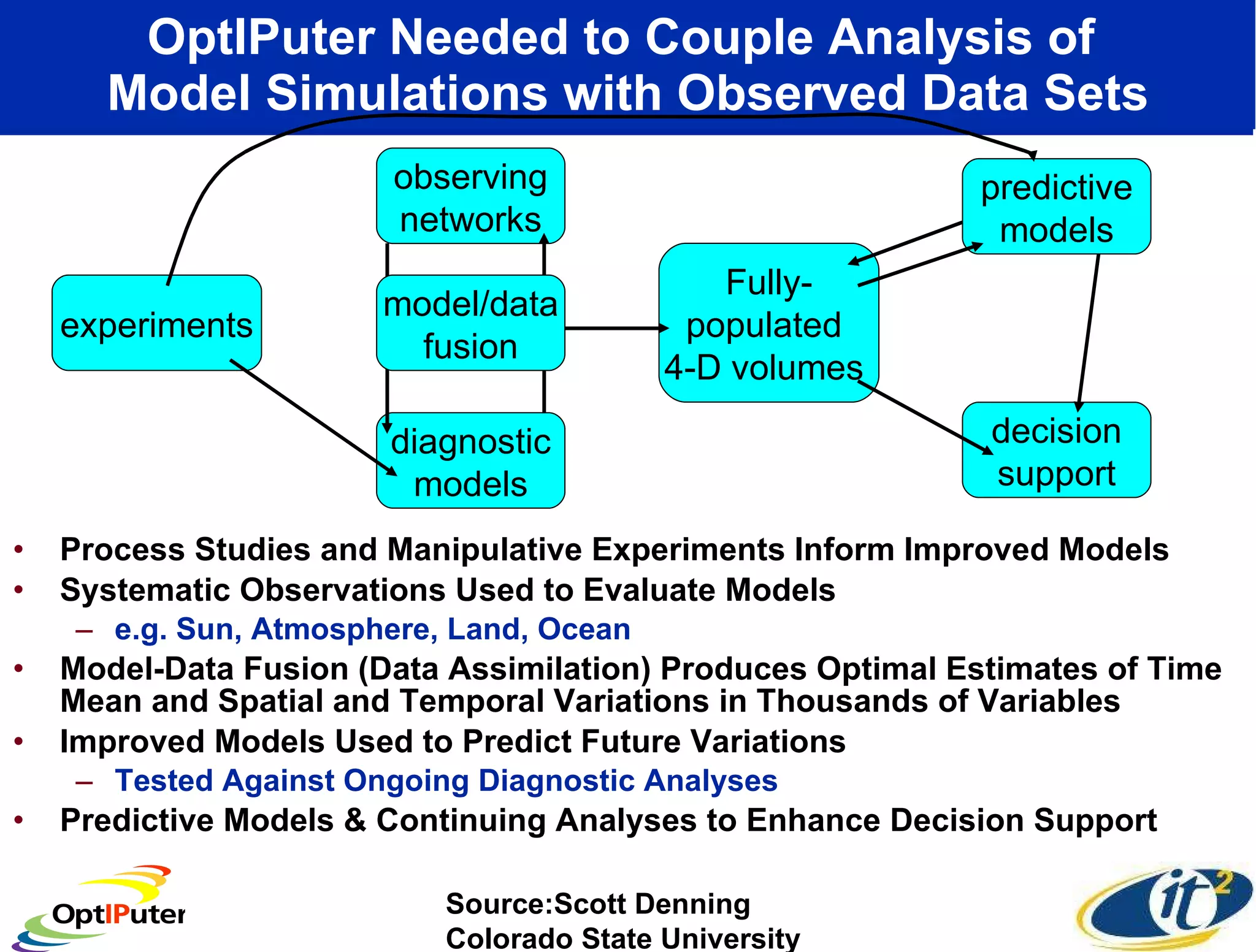

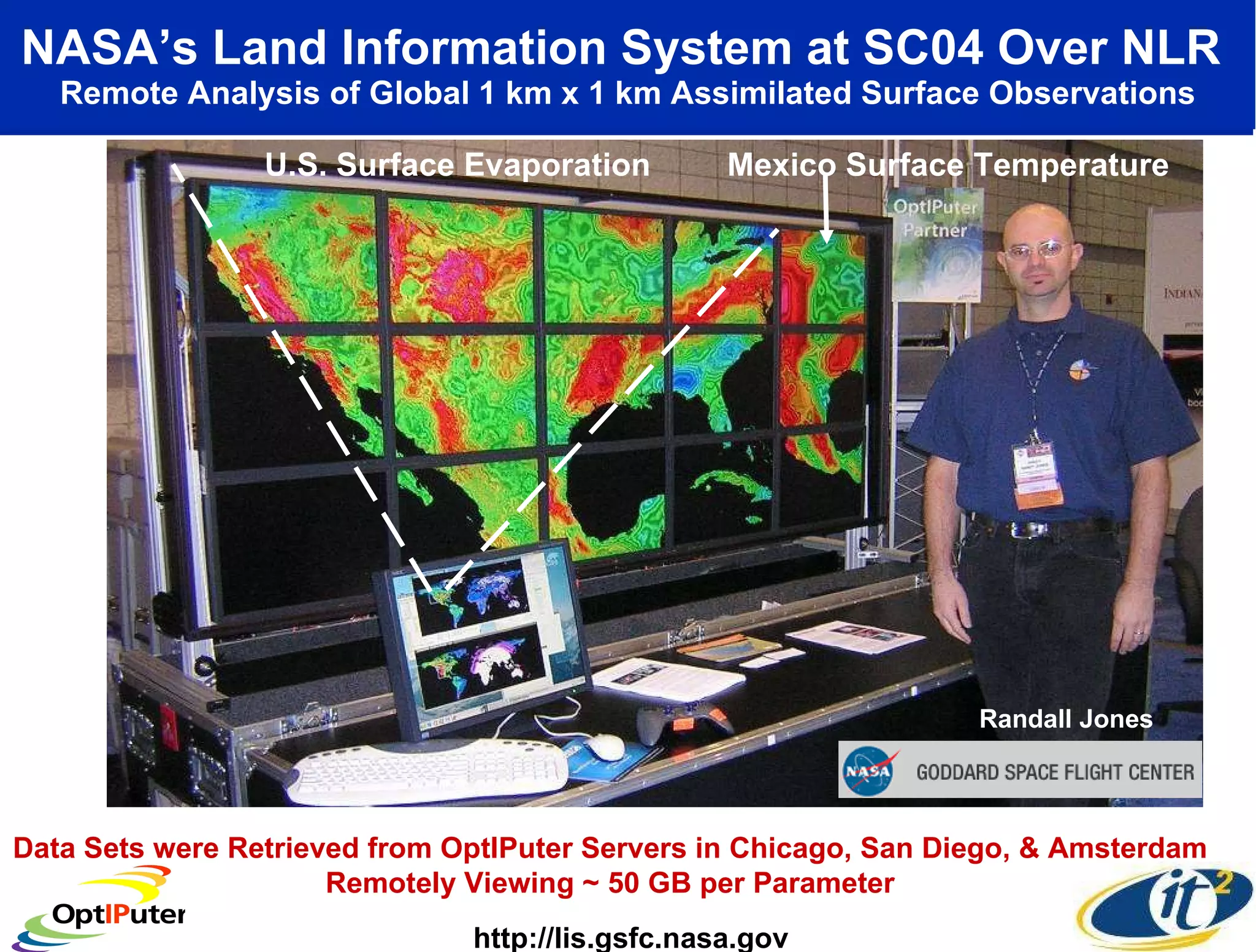

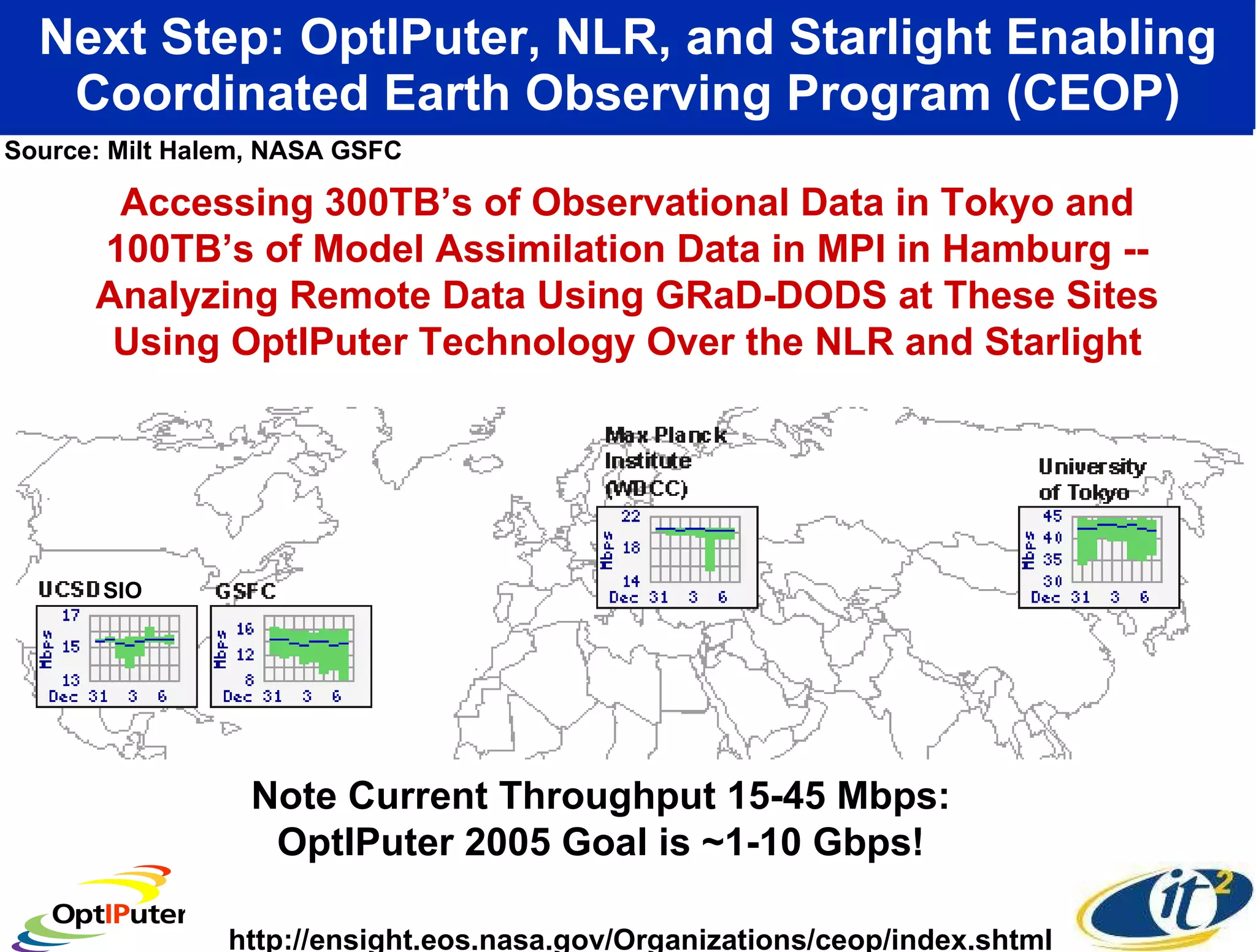

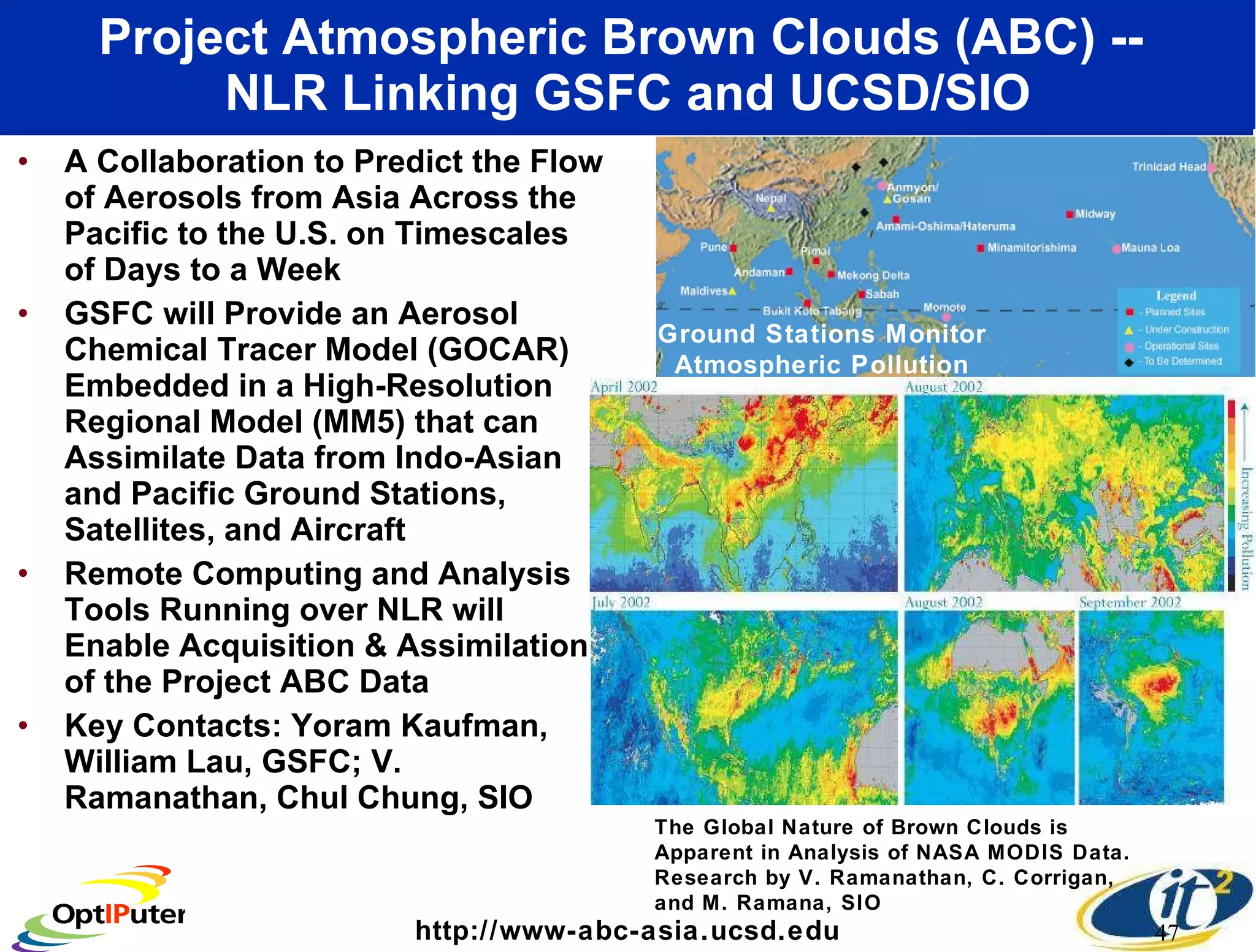

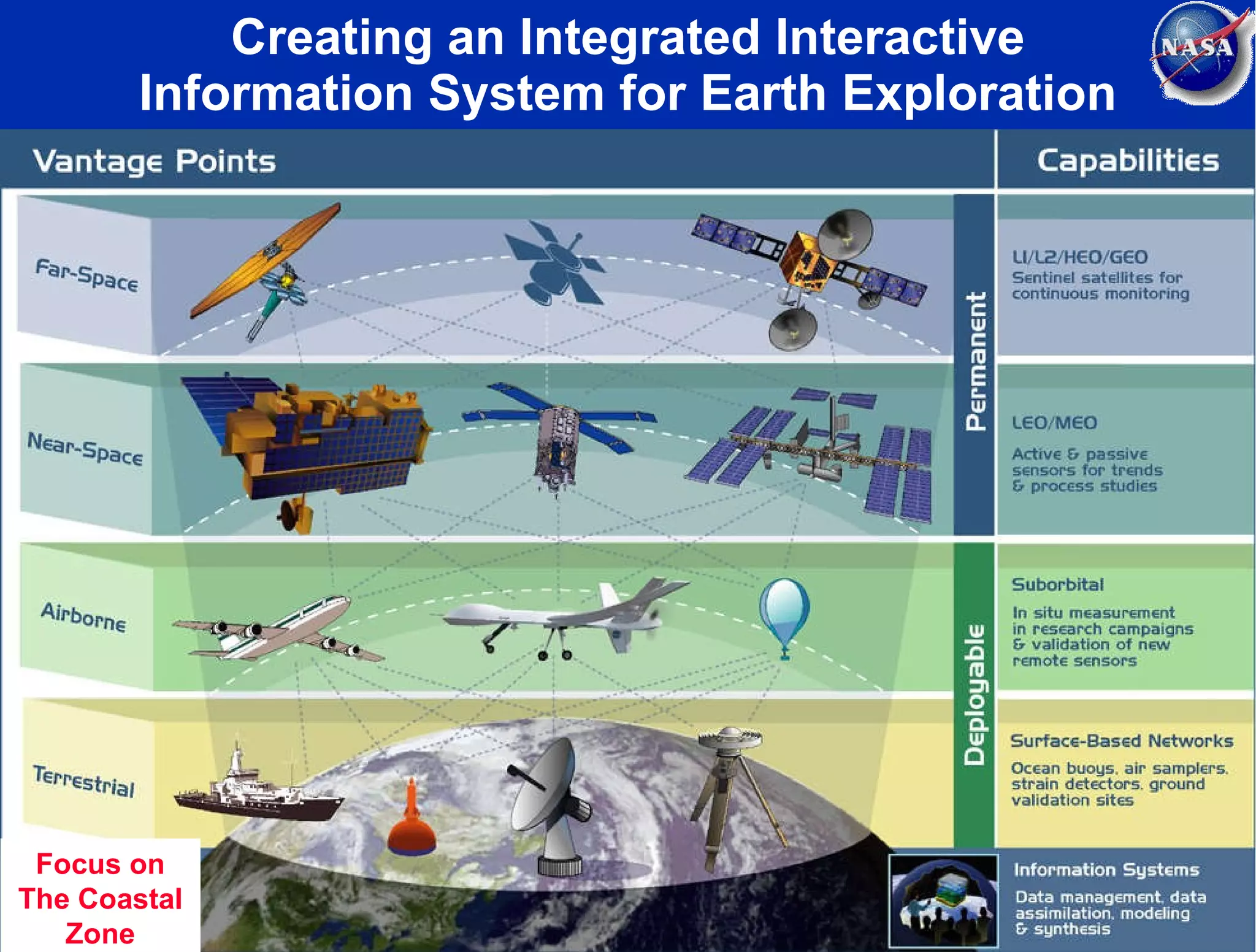

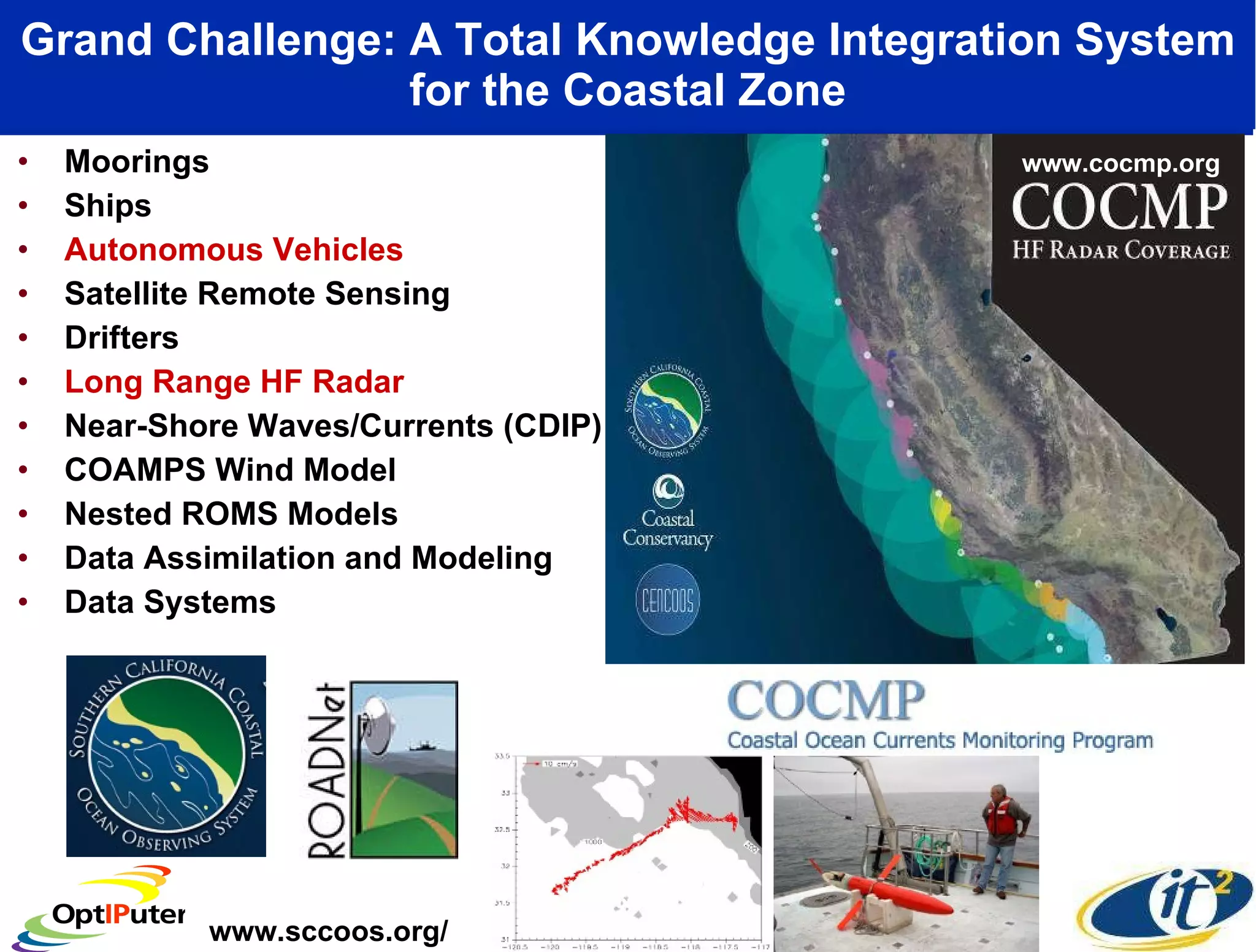

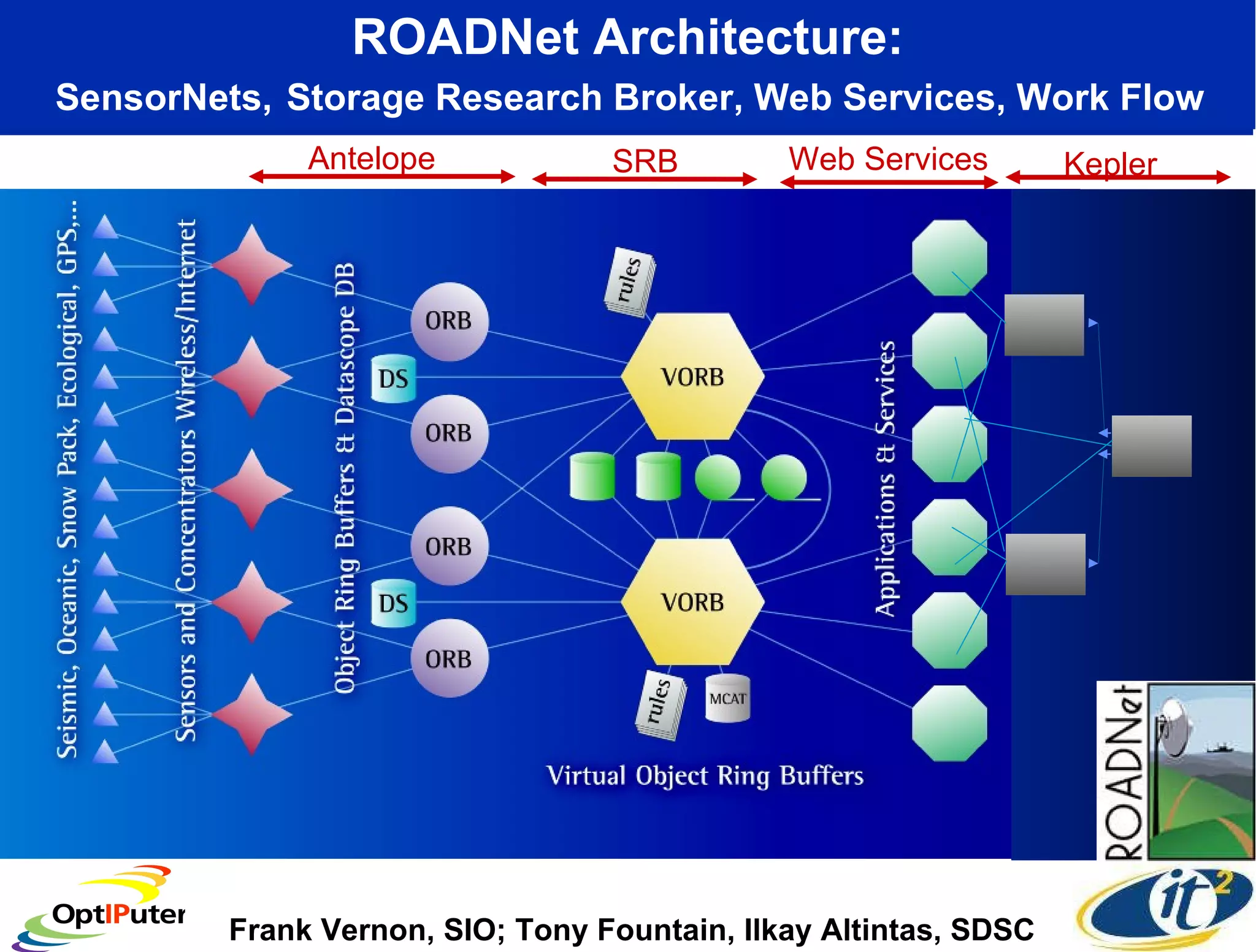

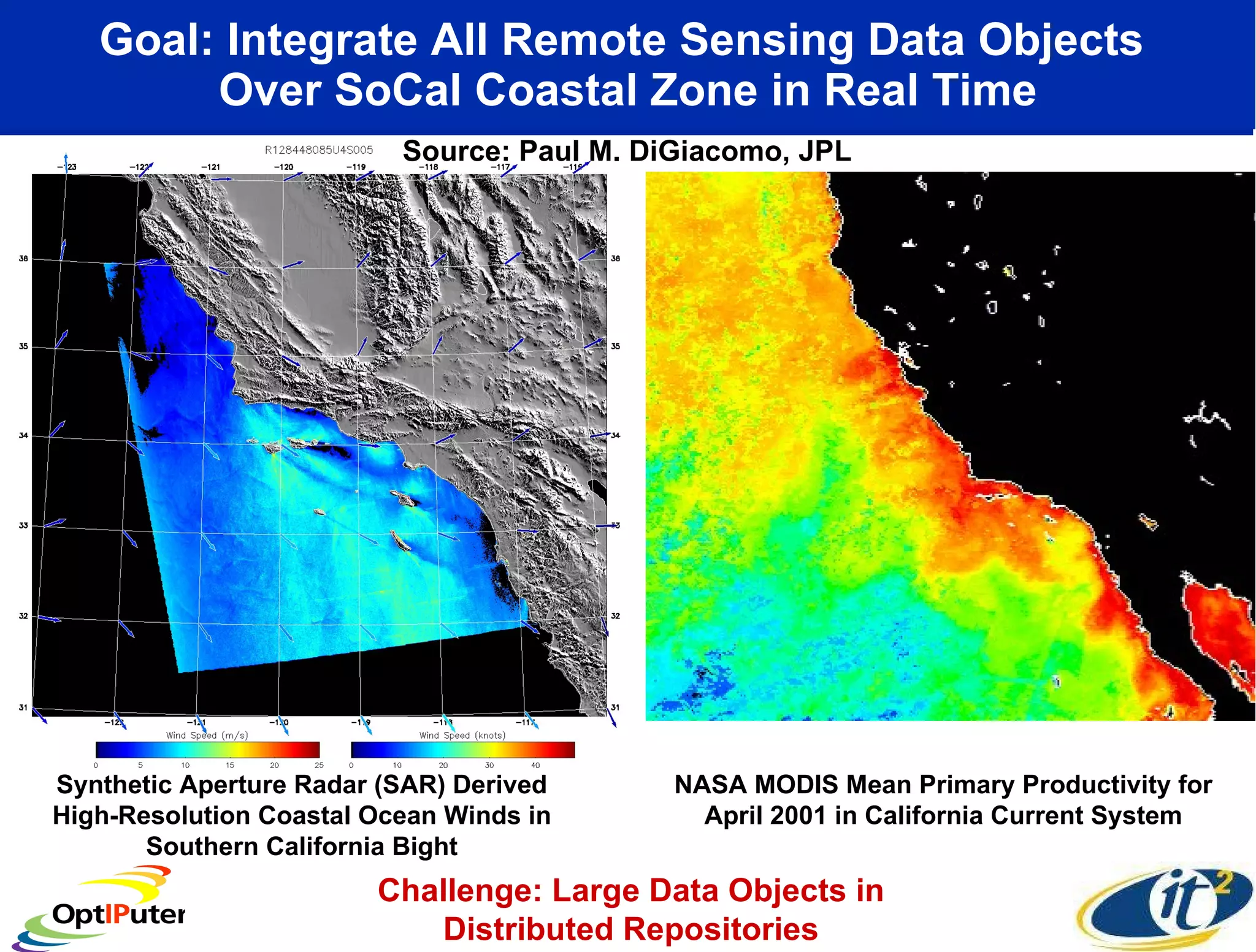

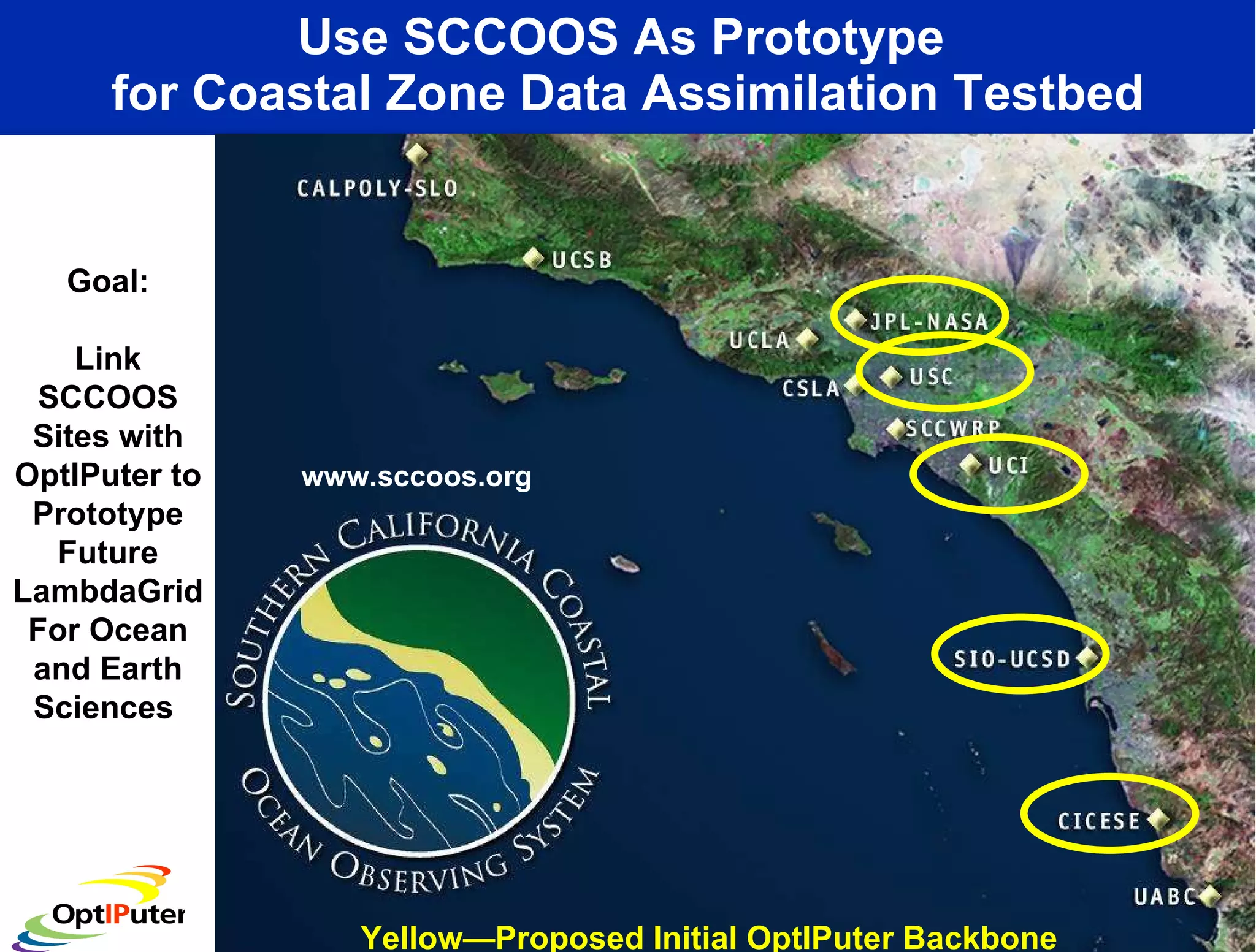

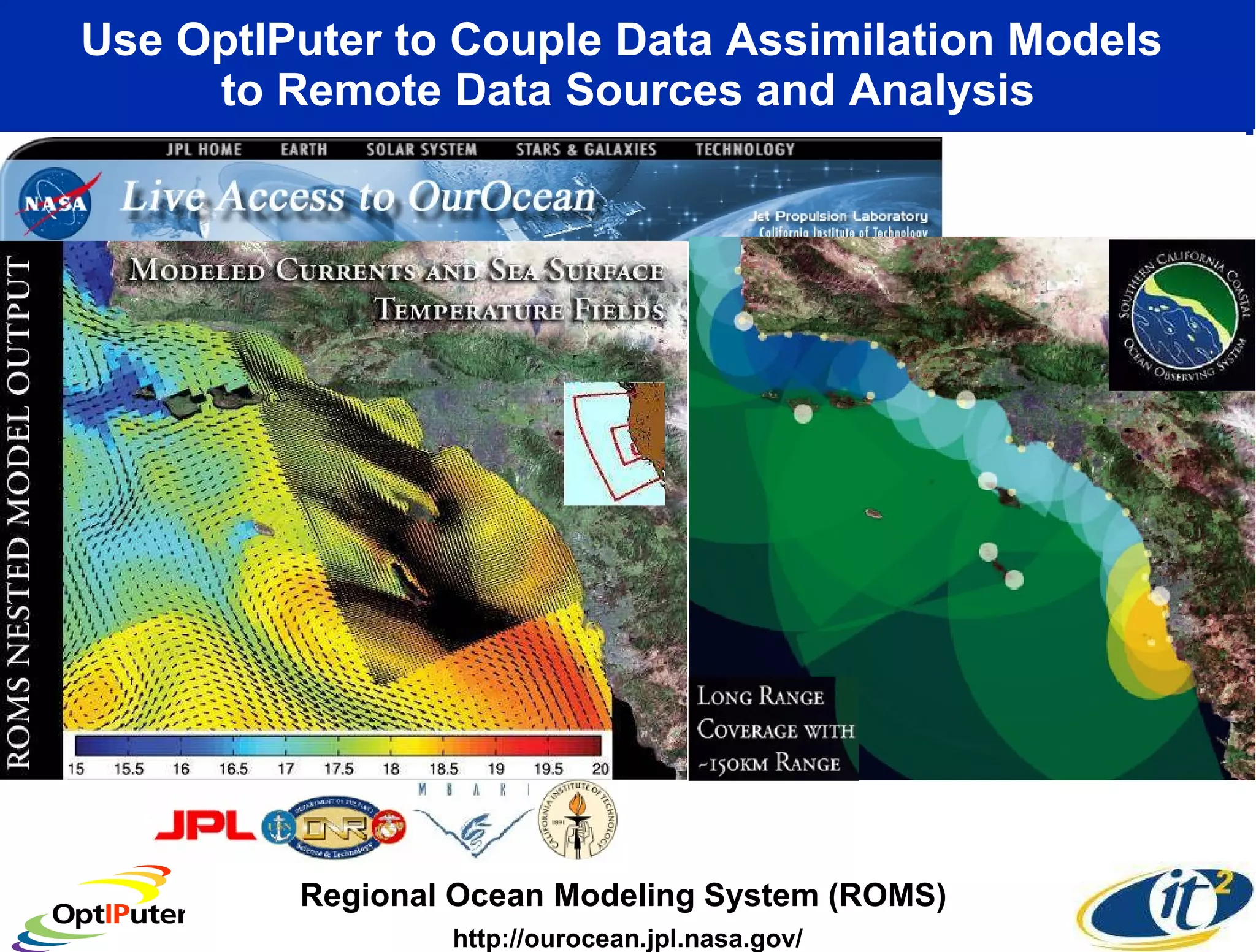

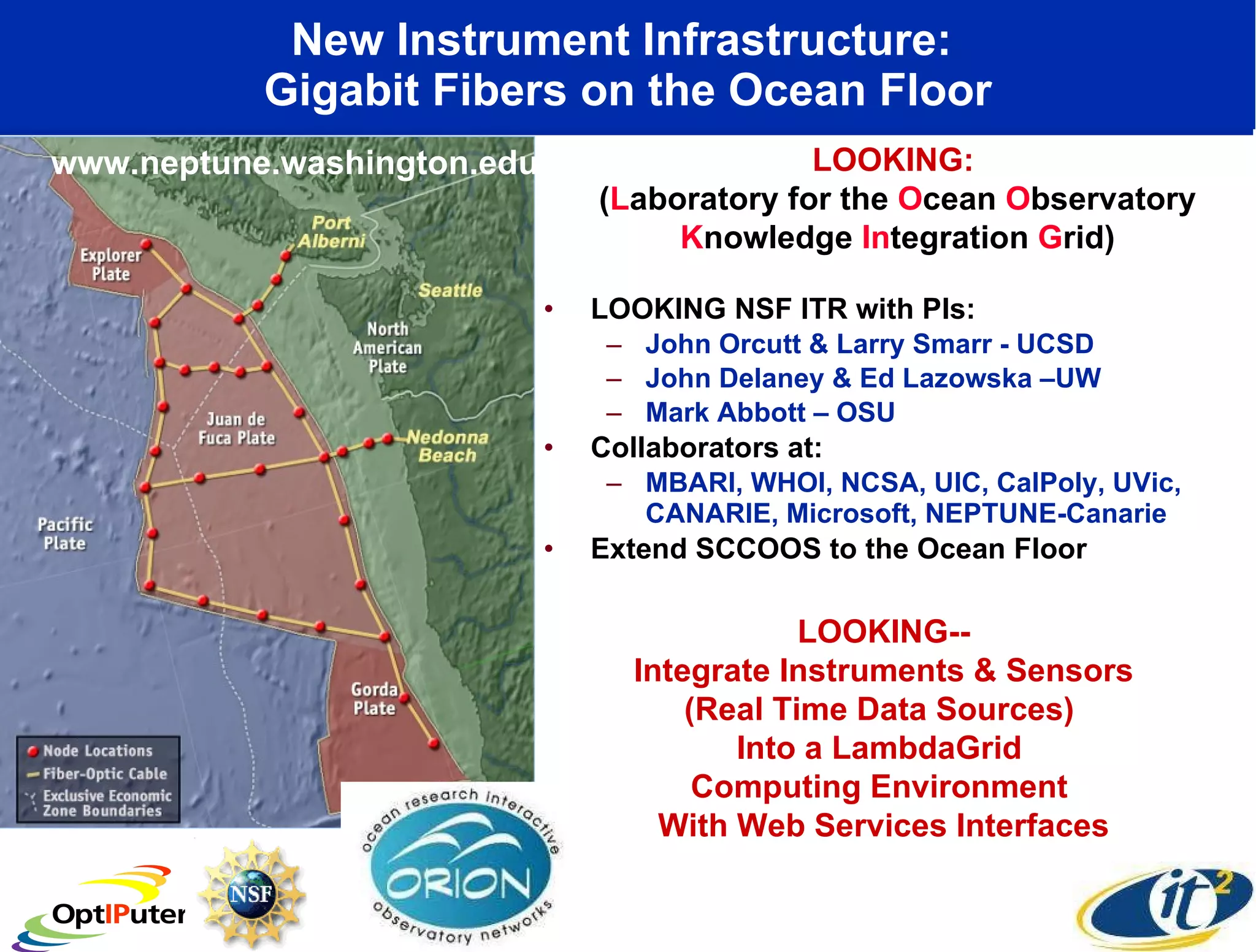

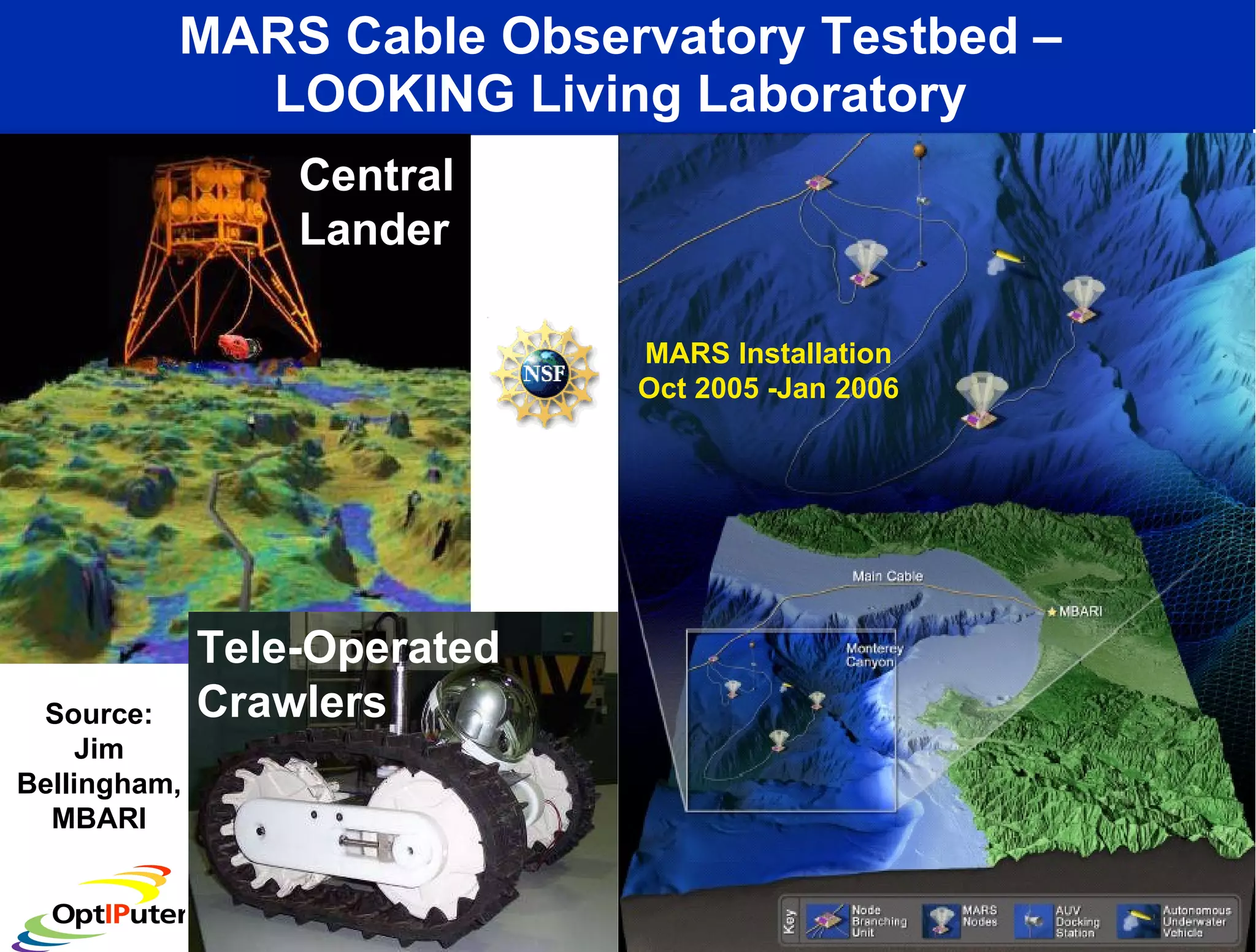

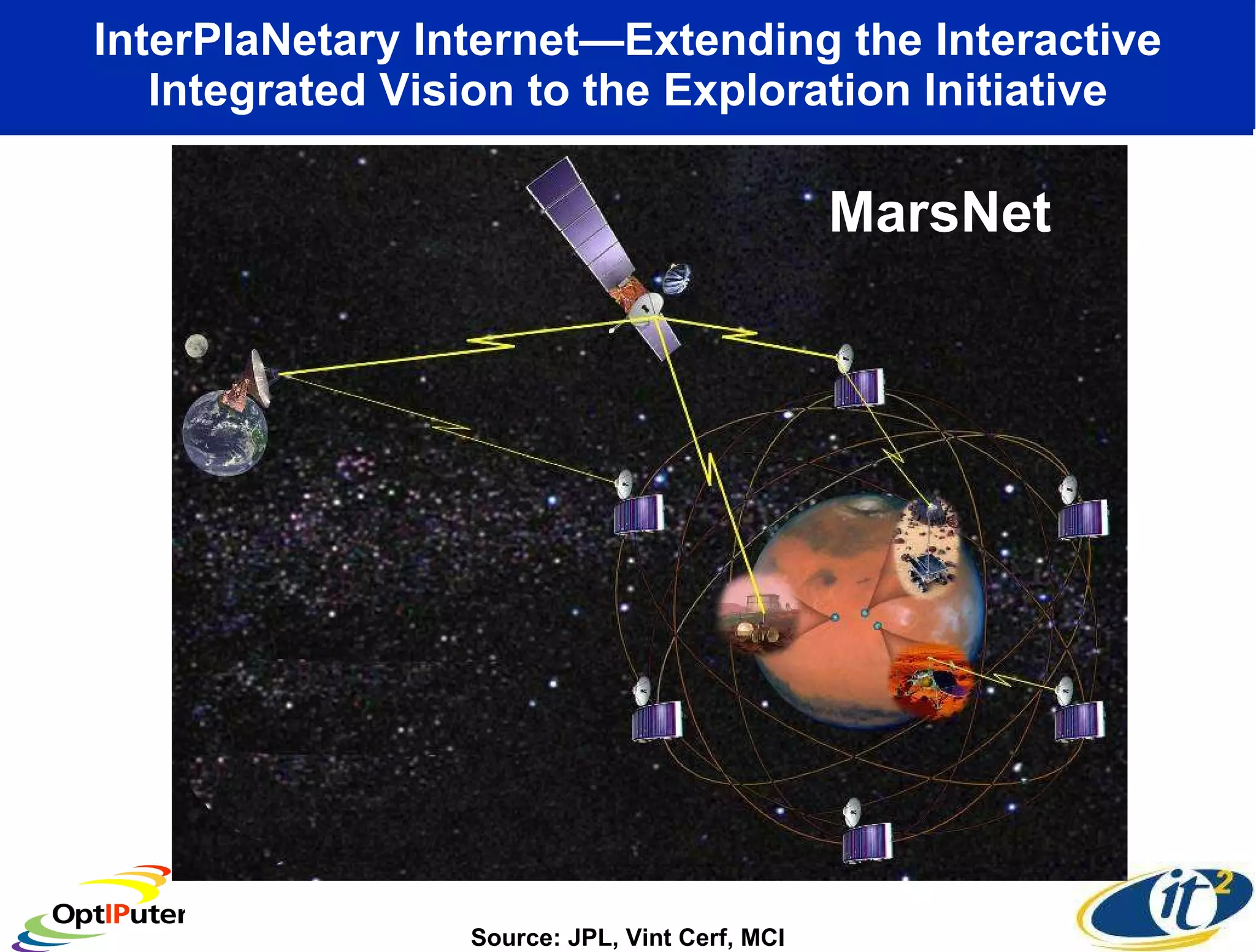

Dr. Larry Smarr's talk highlights the emerging capabilities of lambdagrids, which give researchers private, high-speed optical connections (1–10 Gbps) for data-intensive applications in the Earth and planetary sciences. Key projects such as the NSF-funded OptIPuter and LOOKING aim to improve real-time visualization of, and collaboration on, large data sets, enabling advances in climate modeling and in the analysis of observational data. The National LambdaRail (NLR) and related infrastructure are intended to support distributed data access and integration across many scientific facilities, improving researchers' ability to manage and analyze massive data flows.