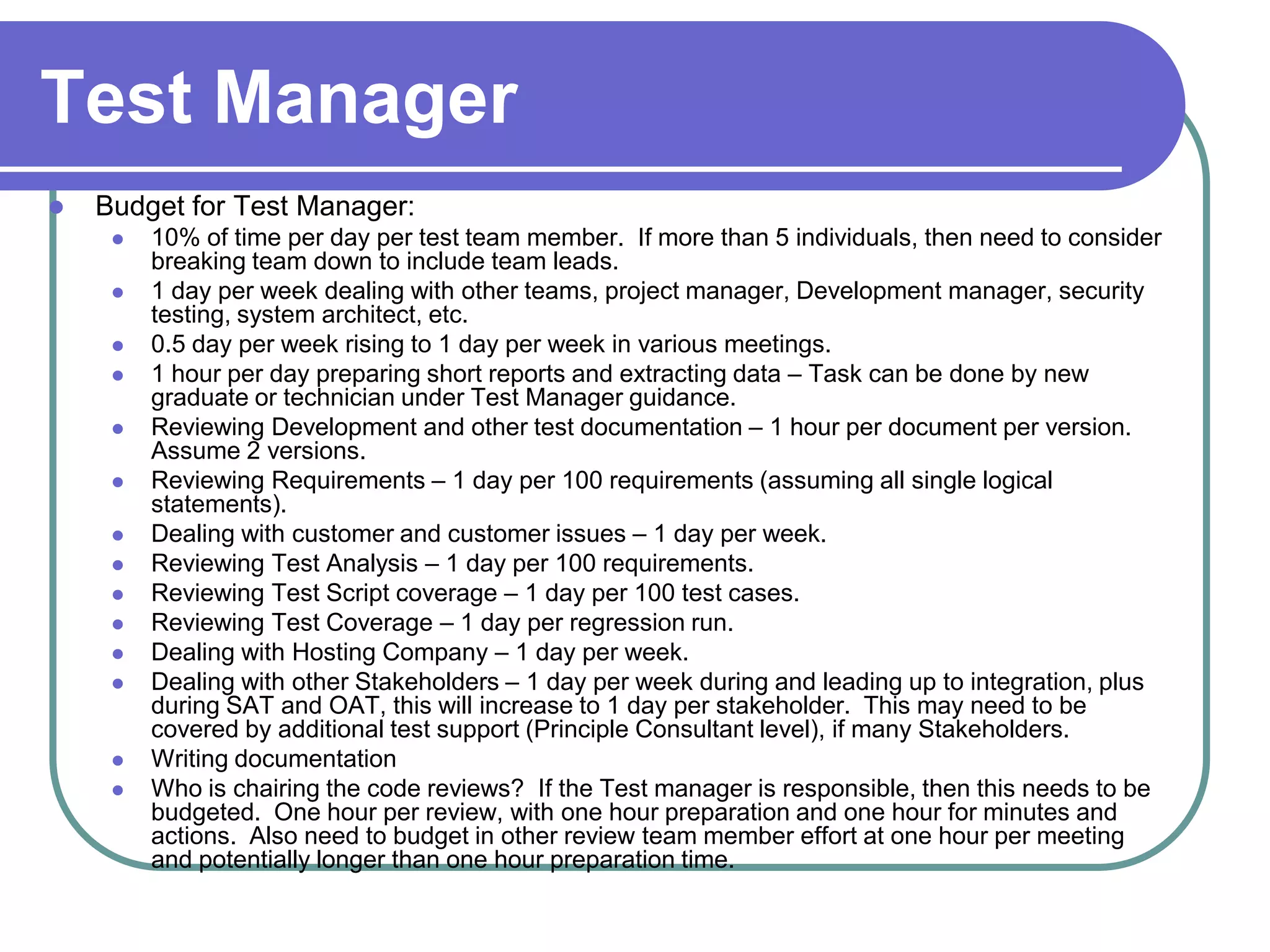

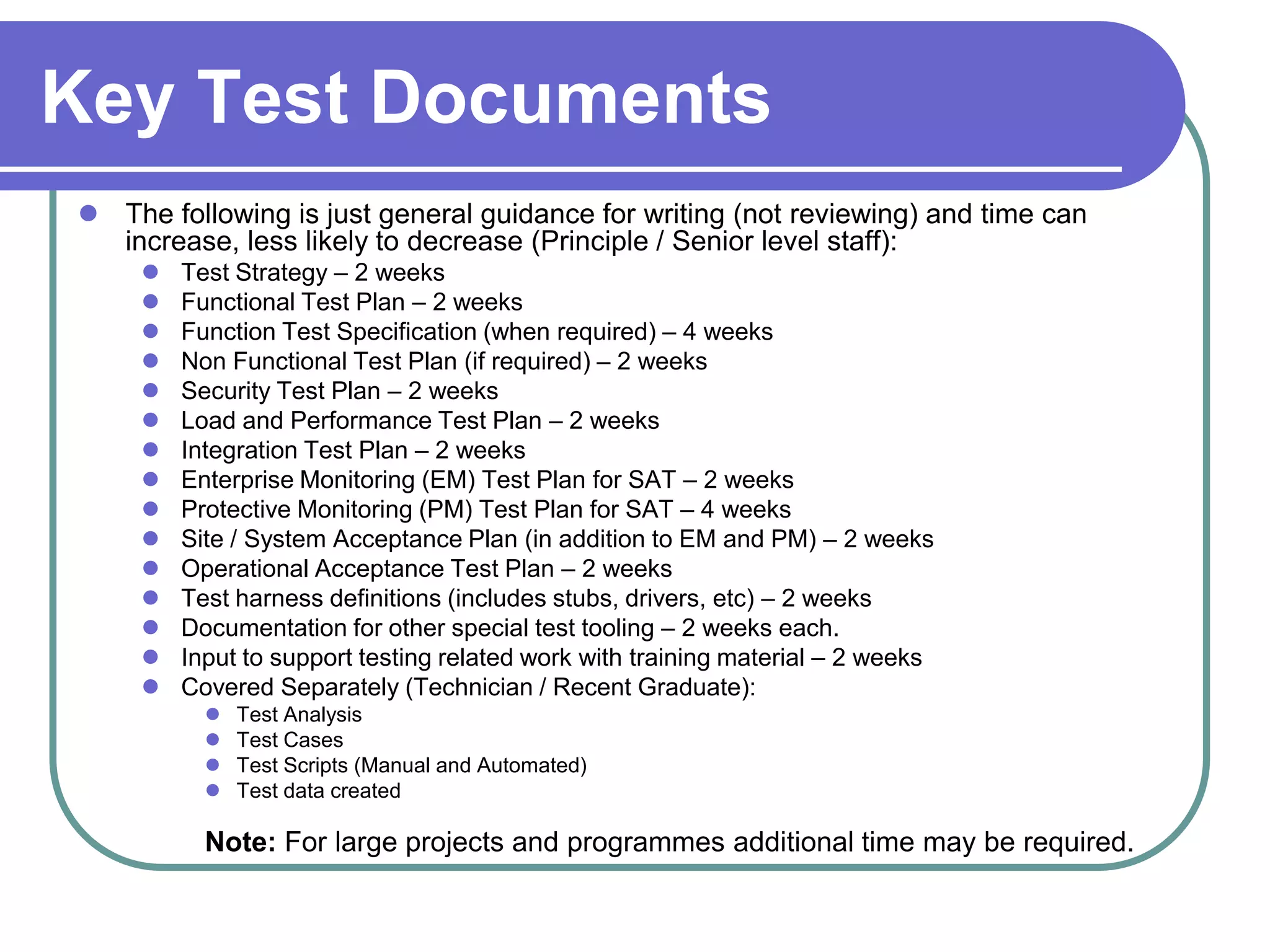

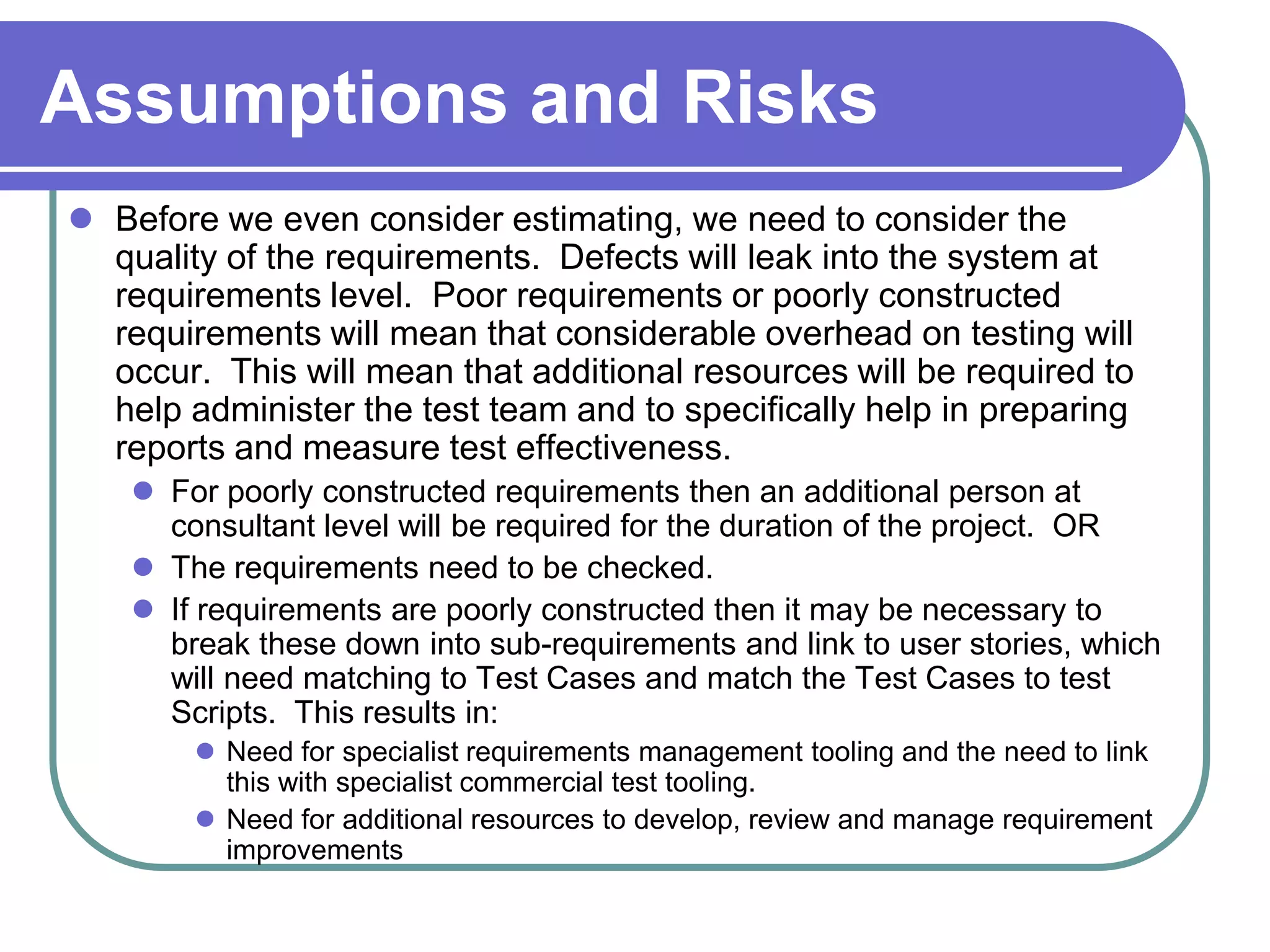

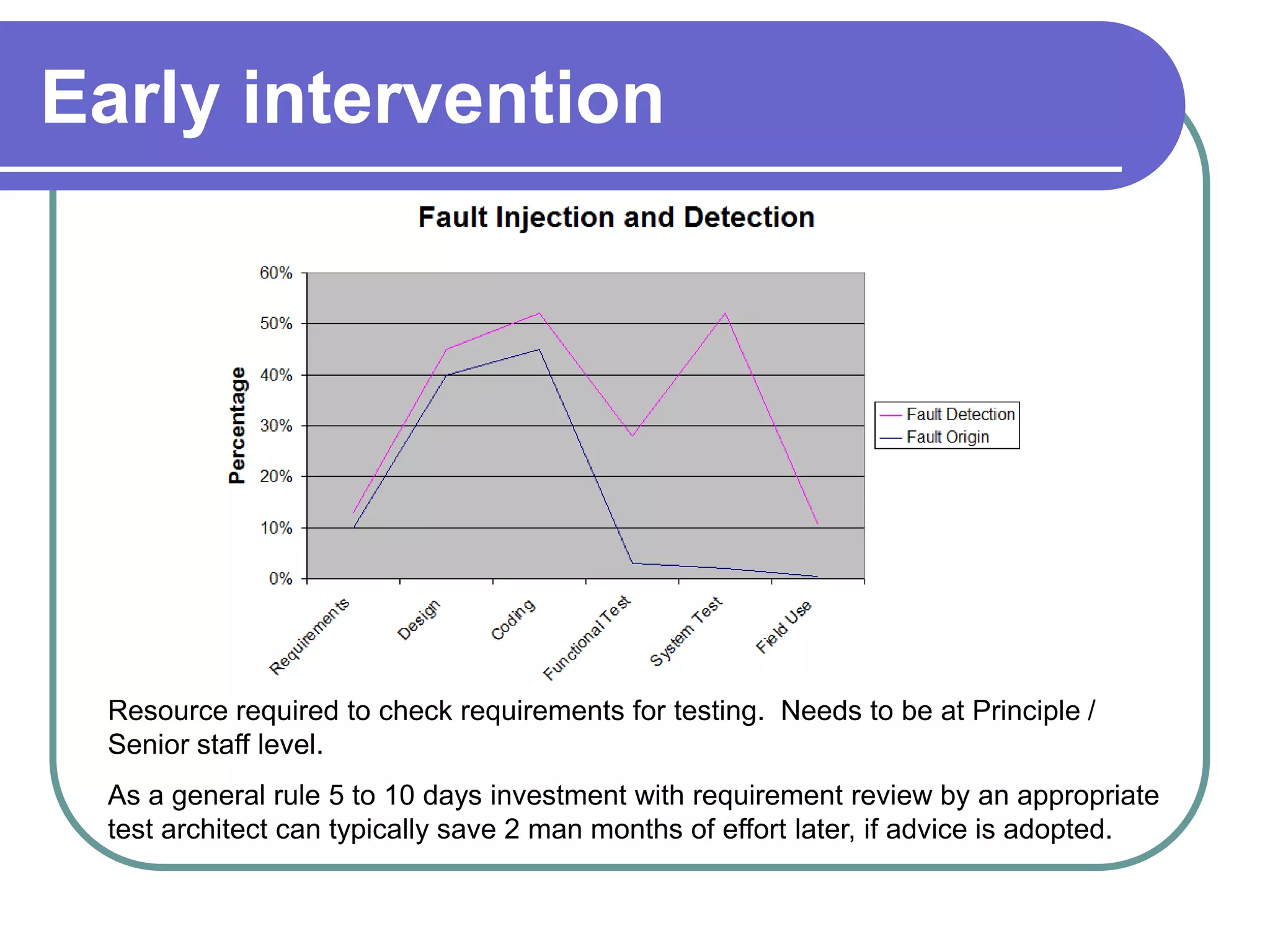

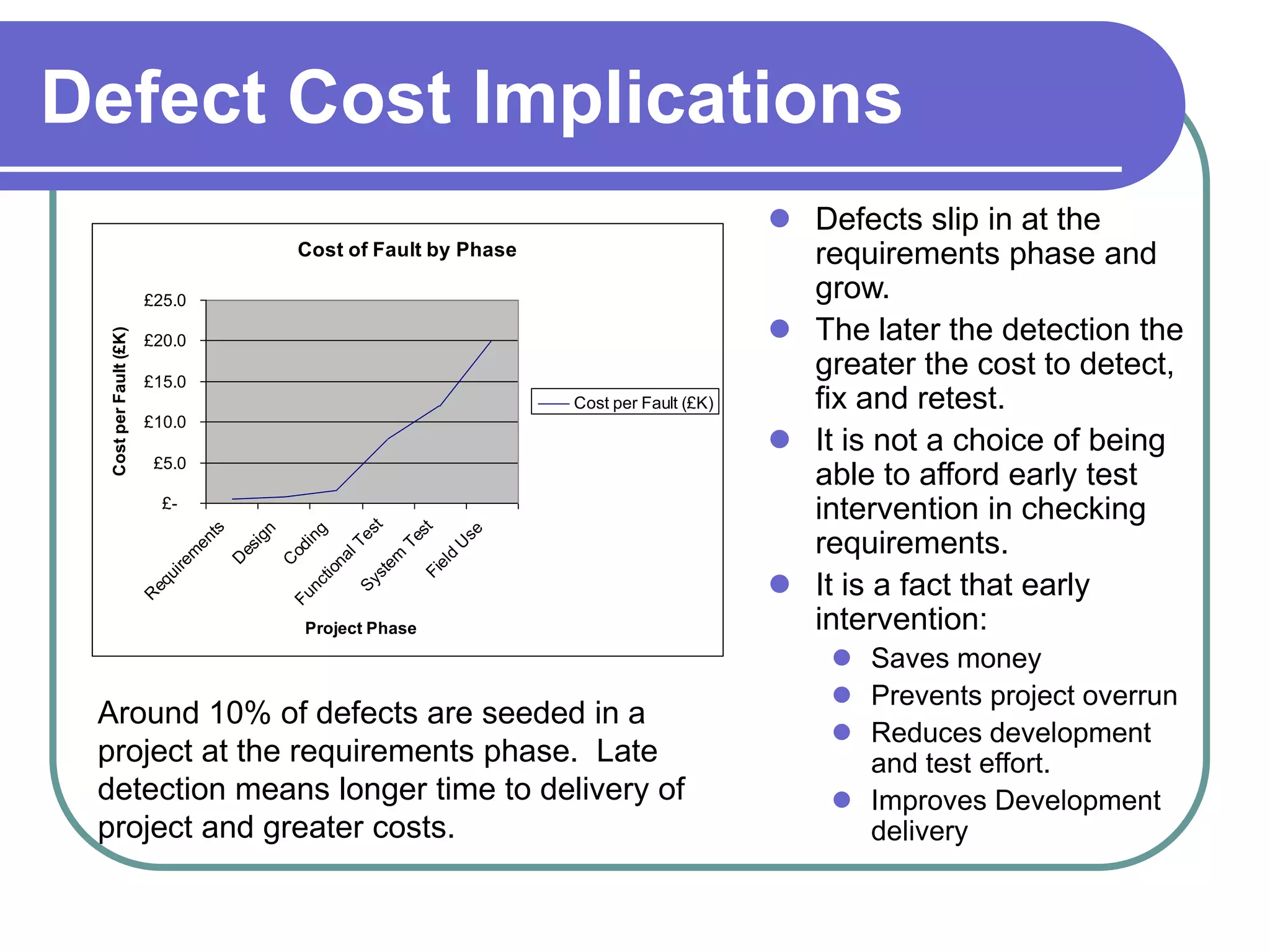

This document provides guidance on estimating testing effort, focusing on frequently overlooked activities that can strain test teams and put project delivery at risk. It argues that a testing estimate should not simply be a percentage of development time, because many test tasks are underestimated. It outlines factors to consider for tooling, staffing, documentation, and testing at each phase. It also emphasizes thorough requirements reviews, which keep defects from leaking into later phases where they cost more to fix; checking requirements early can significantly reduce later effort.