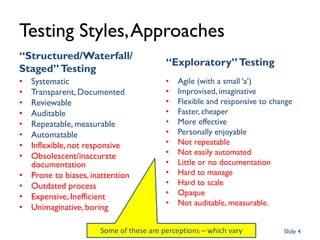

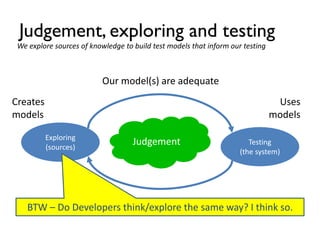

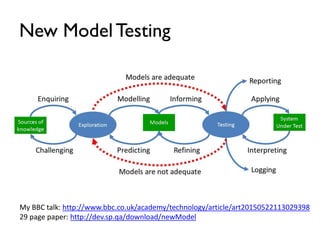

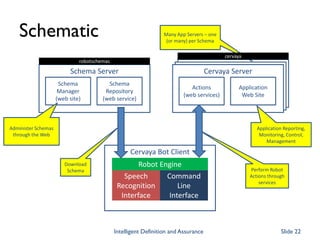

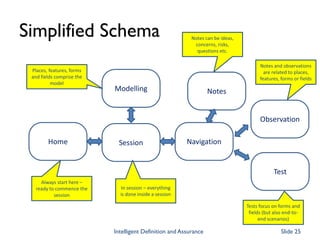

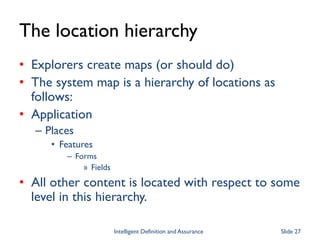

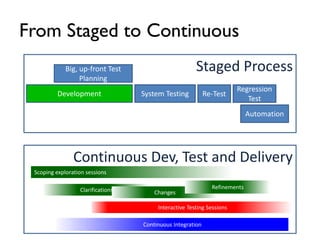

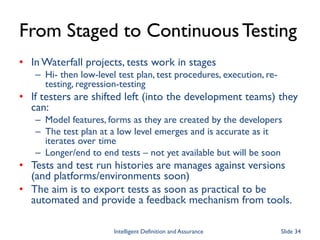

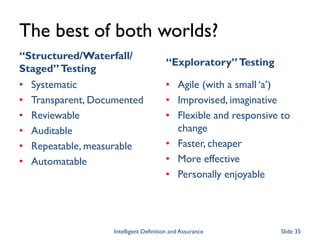

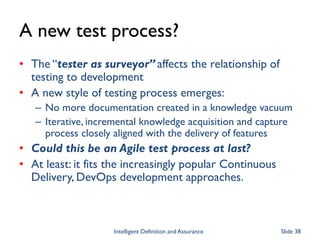

Paul Gerrard presented a new test process, and a supporting tool called Cervaya, that combines elements of structured and exploratory testing. In this process, testers use Cervaya to survey features and iteratively build system models and test plans, which moves testing earlier in the development process. Cervaya logs tester activity, supports real-time collaboration, and could also generate documentation. The goal is to align testing more closely with agile and continuous delivery approaches. Gerrard invited others to collaborate on developing Cervaya further.