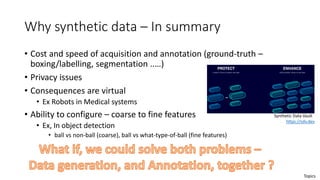

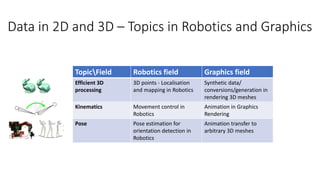

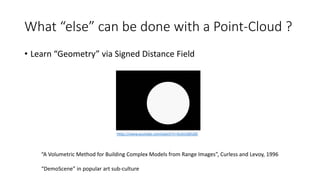

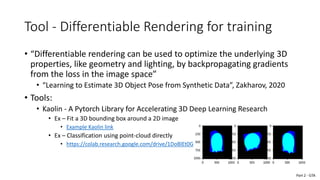

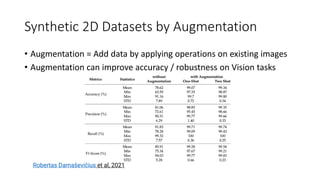

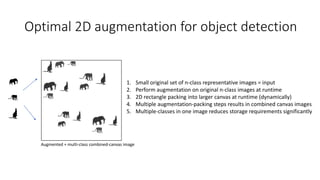

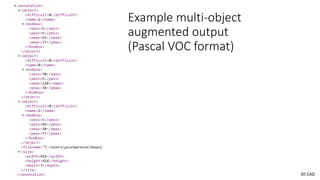

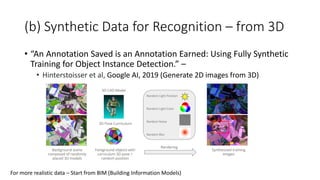

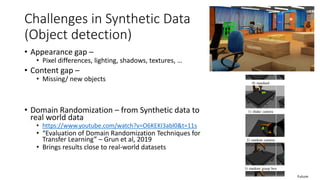

The document discusses the role of synthetic data and graphics techniques in robotics, covering the data types involved, their applications, and the significance of synthetic data for improving intelligent systems. It highlights the challenges robotic systems face in navigation and vision processing, and the growing need for efficient data generation driven by cost, privacy concerns, and complexity. It also addresses key advances in synthetic data methods for recognition systems and future prospects for synthetic data in robotics.

![(a) 2D Image Augmentation in Frameworks

PyTorch

random_crop = torchvision.transforms.RandomCrop(size)

fivecrop = torchvision.transforms.FiveCrop(size)

horizontal_flip = torchvision.transforms.RandomHorizontalFlip()

vertical_flip = torchvision.transforms.RandomVerticalFlip()

random_persp = torchvision.transforms.RandomPerspective()

random_jitter = torchvision.transforms.ColorJitter()

normalize = torchvision.transforms.Normalize([0, 0, 0], [1, 1, 1])

https://pytorch.org/vision/main/transforms.html

Examples from TensorFlow

https://www.tensorflow.org/api_docs/python/tf/image

Tools in industry:

Roboflow,

CVEDIA (Synthetic data) …

Optimal Aug](https://image.slidesharecdn.com/intelligent-systems-220805112550-e6e6ded0/85/Synthetic-Data-and-Graphics-Techniques-in-Robotics-18-320.jpg)

![COLMAP steps

1. Feature detection and extraction

2. Feature matching and geometric verification

3. Structure and motion reconstruction

• up vector was [-0.90487011 0.10419316 0.41273947]

• computing center of attention...

• [-12.27771833 1.27148092 -0.09314177]

• avg camera distance from origin 12.701290776903985

• 55 frames

• writing transforms.json](https://image.slidesharecdn.com/intelligent-systems-220805112550-e6e6ded0/85/Synthetic-Data-and-Graphics-Techniques-in-Robotics-30-320.jpg)
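
The log lines on the slide above report an up vector, a center of attention, and an average camera distance from the origin. The sketch below shows how quantities of this kind can be derived from camera poses. It is an illustration under stated assumptions, not the tool's actual code: the camera positions and up vectors are made-up sample data, and the centroid of the camera positions is used as a simple stand-in for the center of attention.

```python
import math

def normalize(v):
    """Scale a 3-vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def mean_up_vector(up_vectors):
    """Sum the per-camera up vectors and normalize the result."""
    total = [sum(component) for component in zip(*up_vectors)]
    return normalize(total)

def centroid(positions):
    """Average camera position (assumed stand-in for the center of attention)."""
    count = len(positions)
    return [sum(component) / count for component in zip(*positions)]

def average_distance_from_origin(positions):
    """Mean Euclidean distance of the camera positions from the origin."""
    distances = [math.sqrt(sum(x * x for x in p)) for p in positions]
    return sum(distances) / len(distances)

# Hypothetical camera data, not taken from the slide.
positions = [[3.0, 0.0, 4.0], [0.0, 5.0, 0.0], [-3.0, 0.0, -4.0]]
ups = [[0.0, 0.0, 1.0], [0.0, 0.1, 1.0], [0.0, -0.1, 1.0]]

up = mean_up_vector(ups)
center = centroid(positions)

# Recenter the cameras so the scene center sits at the origin,
# then measure how far the cameras are from it on average.
recentered = [[p[i] - center[i] for i in range(3)] for p in positions]
avg_dist = average_distance_from_origin(recentered)

print("up vector:", up)
print("center:", center)
print("avg camera distance from origin:", avg_dist)
```

Recentering before measuring distance keeps the scale estimate independent of where the reconstruction happened to place its origin.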