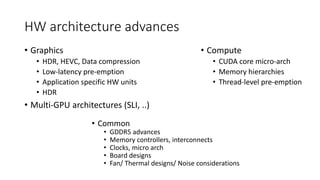

This document surveys GPU algorithms and trends as of mid-2018. It explains why GPUs are useful, traces the evolution of GPU programming models, and reviews typical workloads such as image processing, deep learning, and graphics. It also covers bandwidth analysis tools, hardware advances, programming models such as CUDA and C++ AMP, and performance tuning through profiling and optimization. Emerging areas discussed include compute-in-flash, deep learning and operating systems, and the use of AI for space travel challenges.