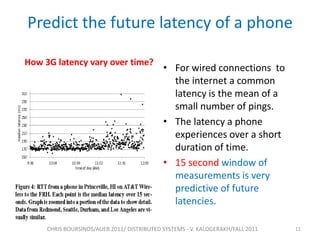

The document presents Switchboard, a matchmaking system for multiplayer mobile games that addresses cellular network latency by grouping players into sessions their connections can support. It stresses that estimating latency is essential to establishing viable game sessions, particularly in peer-to-peer gaming, where it helps reduce server costs and improve player experience. The implementation and evaluation show how Switchboard improves matchmaking efficiency and latency management for a range of game types over cellular networks.