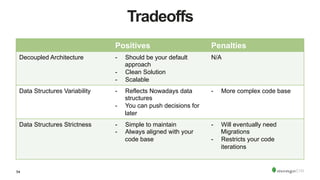

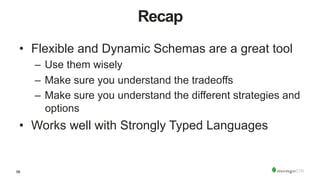

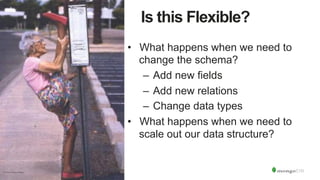

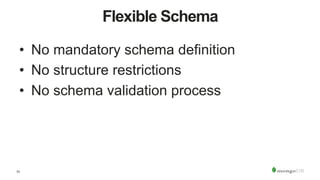

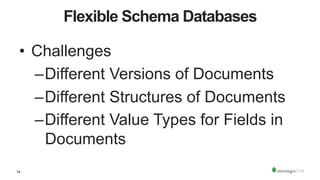

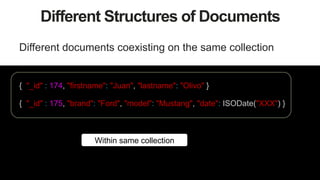

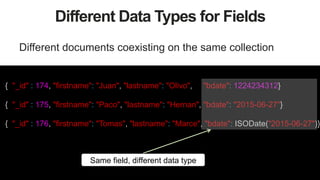

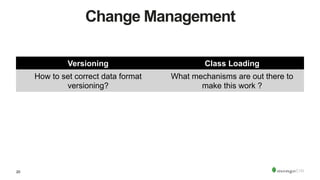

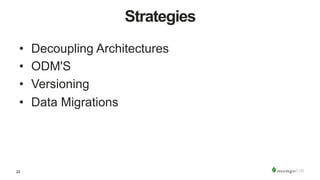

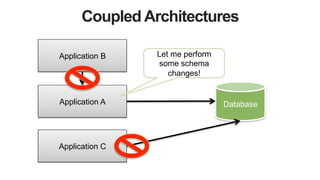

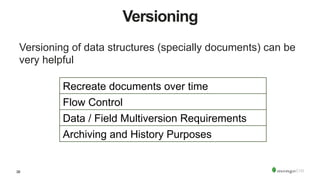

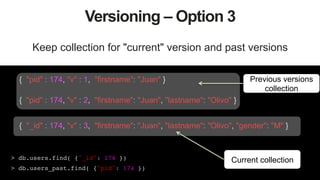

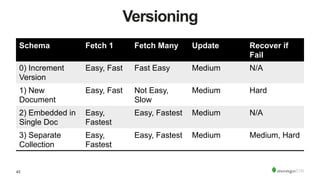

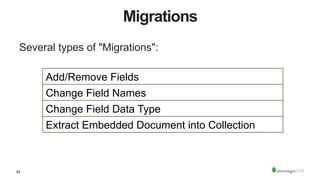

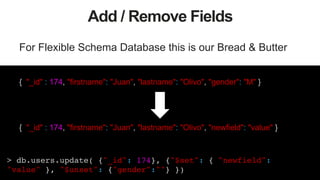

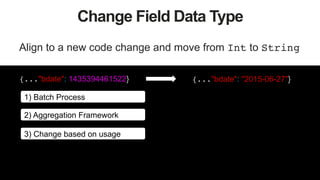

The document discusses the differences between strongly typed languages and flexible-schema databases, highlighting how flexible schemas allow dynamic data structures without mandatory validation or restrictions. It details change-management strategies for adapting data structures, such as versioning and migrations, and weighs the benefits and trade-offs of each approach. It concludes by advocating thoughtful use of flexible schemas, with attention to their complexity and their impact on how application code is managed.

![Slide 12: We start from code. public class CatPicture { int size; byte[] blob; } public class User { int id; String firstname; String lastname; CatPicture[] cat_pictures; }](https://image.slidesharecdn.com/stronglytypedlanguagesdynamicdatabases-150627161640-lva1-app6892/85/Strongly-Typed-Languages-and-Flexible-Schemas-12-320.jpg)

![Slide 13: Document Structure. { _id: 1234, firstname: 'Juan', lastname: 'Olivo', cat_pictures: [ { size: 10, picture: BinData("0x133334299399299432") } ] } (callouts: Rich Data Types, Embedded Documents)](https://image.slidesharecdn.com/stronglytypedlanguagesdynamicdatabases-150627161640-lva1-app6892/85/Strongly-Typed-Languages-and-Flexible-Schemas-13-320.jpg)
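A minimal sketch of how the `User` and `CatPicture` classes map onto this document shape, written in plain Java without a MongoDB driver. Documents are modelled as `LinkedHashMap<String, Object>`, an assumption standing in for a driver type such as `org.bson.Document`, and `UserMapping`/`toDocument` are hypothetical names. The class definition names the byte array `blob` while the document stores it as `picture`; the sketch follows the document.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class UserMapping {

    // Same fields as the slide's classes, with constructors added for convenience.
    static class CatPicture {
        int size;
        byte[] blob;
        CatPicture(int size, byte[] blob) { this.size = size; this.blob = blob; }
    }

    static class User {
        int id;
        String firstname;
        String lastname;
        CatPicture[] cat_pictures;
        User(int id, String firstname, String lastname, CatPicture[] catPictures) {
            this.id = id;
            this.firstname = firstname;
            this.lastname = lastname;
            this.cat_pictures = catPictures;
        }
    }

    // Hypothetical helper: builds a nested map mirroring the document structure.
    static Map<String, Object> toDocument(User u) {
        Map<String, Object> doc = new LinkedHashMap<>();
        doc.put("_id", u.id);                       // the id becomes the primary key
        doc.put("firstname", u.firstname);
        doc.put("lastname", u.lastname);
        List<Map<String, Object>> pictures = new ArrayList<>();
        if (u.cat_pictures != null) {
            for (CatPicture p : u.cat_pictures) {
                Map<String, Object> sub = new LinkedHashMap<>(); // embedded document
                sub.put("size", p.size);
                sub.put("picture", p.blob);          // binary data stays a byte[]
                pictures.add(sub);
            }
        }
        doc.put("cat_pictures", pictures);           // array of embedded documents
        return doc;
    }

    public static void main(String[] args) {
        User juan = new User(1234, "Juan", "Olivo",
                new CatPicture[] { new CatPicture(10, new byte[] {0x13, 0x33}) });
        Map<String, Object> doc = toDocument(juan);
        System.out.println(doc.keySet()); // [_id, firstname, lastname, cat_pictures]
    }
}
```

The array of objects in the class becomes an array of embedded documents, so one read returns the user together with its pictures.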

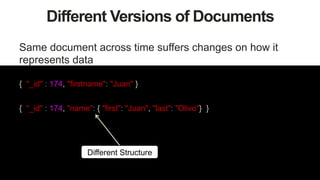

![Slide 15: Different Versions of Documents. The same document changes over time in how it represents data. First version: { "_id" : 174, "firstname": "Juan" } Second version: { "_id" : 174, "firstname": "Juan", "lastname": "Olivo" } Third version: { "_id" : 174, "firstname": "Juan", "lastname": "Olivo", "cat_pictures": [{"size": 10, picture: BinData("0x133334299399299432")}] }](https://image.slidesharecdn.com/stronglytypedlanguagesdynamicdatabases-150627161640-lva1-app6892/85/Strongly-Typed-Languages-and-Flexible-Schemas-15-320.jpg)
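Since all three versions can sit in the same collection, code that reads them back into a typed class has to tolerate missing fields. A minimal sketch, assuming documents arrive as `Map<String, Object>` and that absent fields should map to `null` or an empty list; `VersionTolerantReader` and `fromDocument` are hypothetical names, not a library API.

```java
import java.util.Collections;
import java.util.List;
import java.util.Map;

public class VersionTolerantReader {

    static class User {
        int id;
        String firstname;
        String lastname;                          // absent in the first version
        List<Map<String, Object>> catPictures;    // absent in the first two versions
    }

    // Hypothetical reader: accepts any of the three document versions.
    @SuppressWarnings("unchecked")
    static User fromDocument(Map<String, Object> doc) {
        User u = new User();
        u.id = ((Number) doc.get("_id")).intValue();
        u.firstname = (String) doc.get("firstname");
        u.lastname = (String) doc.get("lastname"); // null when the field is missing
        Object pics = doc.get("cat_pictures");
        u.catPictures = pics == null
                ? Collections.emptyList()          // older versions: no pictures yet
                : (List<Map<String, Object>>) pics;
        return u;
    }

    public static void main(String[] args) {
        Map<String, Object> v1 = Map.of("_id", 174, "firstname", "Juan");
        Map<String, Object> v2 = Map.of("_id", 174, "firstname", "Juan", "lastname", "Olivo");
        Map<String, Object> v3 = Map.of("_id", 174, "firstname", "Juan", "lastname", "Olivo",
                "cat_pictures", List.of(Map.of("size", 10)));
        for (Map<String, Object> doc : List.of(v1, v2, v3)) {
            User u = fromDocument(doc);
            System.out.println(u.firstname + " " + u.lastname + " " + u.catPictures.size());
        }
    }
}
```

Defaulting missing fields keeps reads working across versions, but the typed class now has to represent "not present yet" explicitly (here `null` for `lastname`), which is one of the costs a flexible schema pushes into application code.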

![Slide 32: Spring Data Document Structure. { "_id": 1, "first_name": "first", "lastname": "last", "catpictures": [ { "size": 10, "blob": BinData(0, "Kr3AqmvV1R9TJQ==") } ] }](https://image.slidesharecdn.com/stronglytypedlanguagesdynamicdatabases-150627161640-lva1-app6892/85/Strongly-Typed-Languages-and-Flexible-Schemas-32-320.jpg)
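Which keys end up in the document is decided by the mapping layer, not by the database. Here the first name is stored as `first_name`; in Spring Data MongoDB that is what a field annotated with `@Field("first_name")` produces, and a property named `id` maps to `_id` (whether the presentation used that annotation is an assumption). The sketch below imitates the behaviour with plain Java reflection and a hypothetical `@StoredAs` annotation; it is not Spring's API.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.util.LinkedHashMap;
import java.util.Map;

public class KeyNameMapper {

    // Hypothetical annotation standing in for Spring Data's @Field("...").
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.FIELD)
    @interface StoredAs {
        String value();
    }

    static class User {
        int id;                                   // mapped to "_id" by convention
        @StoredAs("first_name") String firstname; // stored under a custom key
        String lastname;                          // stored under its Java name
        User(int id, String firstname, String lastname) {
            this.id = id;
            this.firstname = firstname;
            this.lastname = lastname;
        }
    }

    // Copies each declared field into a map, choosing the stored key per field.
    static Map<String, Object> toDocument(Object pojo) {
        Map<String, Object> doc = new LinkedHashMap<>();
        for (Field f : pojo.getClass().getDeclaredFields()) {
            if (f.isSynthetic()) continue;        // ignore compiler-generated fields
            StoredAs alias = f.getAnnotation(StoredAs.class);
            String key = alias != null ? alias.value()
                    : f.getName().equals("id") ? "_id"
                    : f.getName();
            try {
                f.setAccessible(true);
                doc.put(key, f.get(pojo));
            } catch (IllegalAccessException e) {
                throw new IllegalStateException(e);
            }
        }
        return doc;
    }

    public static void main(String[] args) {
        Map<String, Object> doc = toDocument(new User(1, "first", "last"));
        System.out.println(doc.get("first_name") + " " + doc.containsKey("firstname")); // first false
    }
}
```

The practical consequence is that renaming a Java field, or adding or removing an alias, silently changes the keys of newly written documents while older documents keep the previous keys.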

![Slide 35: Morphia Document Structure. { "_id": 1, "className": "examples.odms.morphia.pojos.User", "firstname": "first", "lastname": "last", "catpictures": [ { "size": 10, "blob": BinData(0, "Kr3AqmvV1R9TJQ==") } ] } (callout on className: Class Definition)](https://image.slidesharecdn.com/stronglytypedlanguagesdynamicdatabases-150627161640-lva1-app6892/85/Strongly-Typed-Languages-and-Flexible-Schemas-35-320.jpg)
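Here Morphia stores the fully qualified class name in a `className` field. A stored type name lets a mapper instantiate the right class on read, including subclasses. The sketch below imitates that idea with plain Java reflection; it is not Morphia's implementation, and `ClassNameDiscriminator`, `PremiumUser`, `write` and `read` are hypothetical.

```java
import java.lang.reflect.Field;
import java.util.LinkedHashMap;
import java.util.Map;

public class ClassNameDiscriminator {

    public static class User {
        public String firstname;
        public String lastname;
        public User() {}
    }

    // Hypothetical subclass, to show why the stored class name matters on read.
    public static class PremiumUser extends User {
        public int level;
        public PremiumUser() {}
    }

    // Writes all public fields plus a "className" entry holding the runtime type.
    static Map<String, Object> write(Object pojo) {
        Map<String, Object> doc = new LinkedHashMap<>();
        doc.put("className", pojo.getClass().getName());
        try {
            for (Field f : pojo.getClass().getFields()) {
                doc.put(f.getName(), f.get(pojo));
            }
        } catch (IllegalAccessException e) {
            throw new IllegalStateException(e);
        }
        return doc;
    }

    // Loads the stored class name and instantiates exactly that type.
    static Object read(Map<String, Object> doc) {
        try {
            Class<?> type = Class.forName((String) doc.get("className"));
            Object pojo = type.getDeclaredConstructor().newInstance();
            for (Field f : type.getFields()) {
                if (doc.containsKey(f.getName())) {
                    f.set(pojo, doc.get(f.getName())); // unboxes Integer into int fields
                }
            }
            return pojo;
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        PremiumUser p = new PremiumUser();
        p.firstname = "first";
        p.lastname = "last";
        p.level = 3;
        Object back = read(write(p));
        System.out.println(back.getClass().getSimpleName()); // PremiumUser
    }
}
```

Because the document records a Java class name, renaming or moving the class makes `Class.forName` fail for documents written earlier, so the stored data becomes coupled to the code's package structure.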

![Slide 41: Versioning, Option 2. Store all document versions inside a single document. > db.users.update( {"_id": 174 } , { {"$set" :{ "current": ... }, {"$inc": { "current.v": 1 }}, {"$addToSet": {"prev": {... }}} } ) Current value and previous values: { "_id" : 174, "current" : { "v" :3, "attr1": 184, "attr2" : "A-1" }, "prev" : [ { "v" : 1, "attr1": 165 }, { "v" : 2, "attr1": 165, "attr2": "A-1" } ] }](https://image.slidesharecdn.com/stronglytypedlanguagesdynamicdatabases-150627161640-lva1-app6892/85/Strongly-Typed-Languages-and-Flexible-Schemas-41-320.jpg)
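The update command on this slide is shorthand. The operators would normally sit in one update document (`{ "$set": ..., "$inc": ..., ... }`) rather than in separate nested braces. Also, MongoDB rejects a single update that both `$set`s the `current` subdocument and `$inc`s `current.v`, because the two paths conflict. A minimal in-memory sketch of the same idea follows. It assumes plain `Map` documents and computes the next version number in application code. `EmbeddedVersions` and `saveNewVersion` are hypothetical names. It simply appends to `prev`, which matches `$addToSet` here because every archived snapshot carries a distinct `v`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class EmbeddedVersions {

    // Archives the current value into "prev" and installs newValue as version v + 1.
    @SuppressWarnings("unchecked")
    static void saveNewVersion(Map<String, Object> doc, Map<String, Object> newValue) {
        Map<String, Object> current = (Map<String, Object>) doc.get("current");
        List<Map<String, Object>> prev =
                (List<Map<String, Object>>) doc.computeIfAbsent("prev", k -> new ArrayList<>());
        int nextVersion = 1;
        if (current != null) {
            prev.add(current);                                  // keep the old snapshot
            nextVersion = ((Number) current.get("v")).intValue() + 1;
        }
        Map<String, Object> updated = new LinkedHashMap<>();
        updated.put("v", nextVersion);                          // version travels with the data
        updated.putAll(newValue);
        doc.put("current", updated);
    }

    public static void main(String[] args) {
        Map<String, Object> doc = new LinkedHashMap<>();
        doc.put("_id", 174);
        saveNewVersion(doc, Map.of("attr1", 165));
        saveNewVersion(doc, Map.of("attr1", 165, "attr2", "A-1"));
        saveNewVersion(doc, Map.of("attr1", 184, "attr2", "A-1"));
        System.out.println(doc);
    }
}
```

Keeping every version in one document makes history reads cheap, but the document grows with each change, so this option suits data whose history stays short.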

![Slide 52: Extract Document into Collection. Normalize your schema. Starting document: { "_id" : 174, "firstname": "Juan", "lastname": "Olivo", "cat_pictures": [{"size": 10, picture: BinData(0, "m/lhLlLmoNiUKQ==")}] }. Pipeline: > db.users.aggregate( [ {$unwind: "$cat_pictures"}, {$project: { "_id":0, "uid":"$_id", "size": "$cat_pictures.size", "picture": "$cat_pictures.picture"}}, {$out:"cats"}]). Resulting cats document: {"size": 10, "picture": BinData(0, "m/lhLlLmoNiUKQ==")}. Normalized shape: { "_id" : 174, "firstname": "Juan", "lastname": "Olivo" } and {"size": 10, picture: BinData("0x133334299399299432")}](https://image.slidesharecdn.com/stronglytypedlanguagesdynamicdatabases-150627161640-lva1-app6892/85/Strongly-Typed-Languages-and-Flexible-Schemas-52-320.jpg)
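The `$unwind` + `$project` + `$out` pipeline writes one document per embedded picture into `cats` and carries the owner's `_id` along as `uid`, so the relationship survives the split. `$out` only writes the new collection; removing `cat_pictures` from `users` takes a separate update. The in-memory sketch below reproduces both steps on plain `Map`/`List` documents. `ExtractCats`, `extractCats` and `removeEmbedded` are hypothetical names, and no driver calls are involved.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ExtractCats {

    // Mirrors $unwind on "cat_pictures" followed by $project {uid, size, picture}.
    // Assumes cat_pictures, when present, is an array of embedded documents.
    @SuppressWarnings("unchecked")
    static List<Map<String, Object>> extractCats(List<Map<String, Object>> users) {
        List<Map<String, Object>> cats = new ArrayList<>();
        for (Map<String, Object> user : users) {
            Object pics = user.get("cat_pictures");
            if (pics == null) continue;               // like $unwind: no field, no output
            for (Map<String, Object> pic : (List<Map<String, Object>>) pics) {
                Map<String, Object> cat = new LinkedHashMap<>();
                cat.put("uid", user.get("_id"));      // reference back to the owner
                cat.put("size", pic.get("size"));
                cat.put("picture", pic.get("picture"));
                cats.add(cat);
            }
        }
        return cats;
    }

    // Second step, not performed by $out: drop the embedded array from each user.
    static void removeEmbedded(List<Map<String, Object>> users) {
        for (Map<String, Object> user : users) {
            user.remove("cat_pictures");
        }
    }

    public static void main(String[] args) {
        Map<String, Object> juan = new LinkedHashMap<>();
        juan.put("_id", 174);
        juan.put("firstname", "Juan");
        juan.put("lastname", "Olivo");
        juan.put("cat_pictures", List.of(Map.of("size", 10, "picture", new byte[] {1, 2})));
        List<Map<String, Object>> users = new ArrayList<>(List.of(juan));
        List<Map<String, Object>> cats = extractCats(users);
        removeEmbedded(users);
        System.out.println(cats.size() + " cat(s); user keys: " + juan.keySet());
        // prints: 1 cat(s); user keys: [_id, firstname, lastname]
    }
}
```

Once the pictures live in their own collection, application code that previously read `user.cat_pictures` must query `cats` by `uid`; the migration changes the code's data-access path, not just the stored shape.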