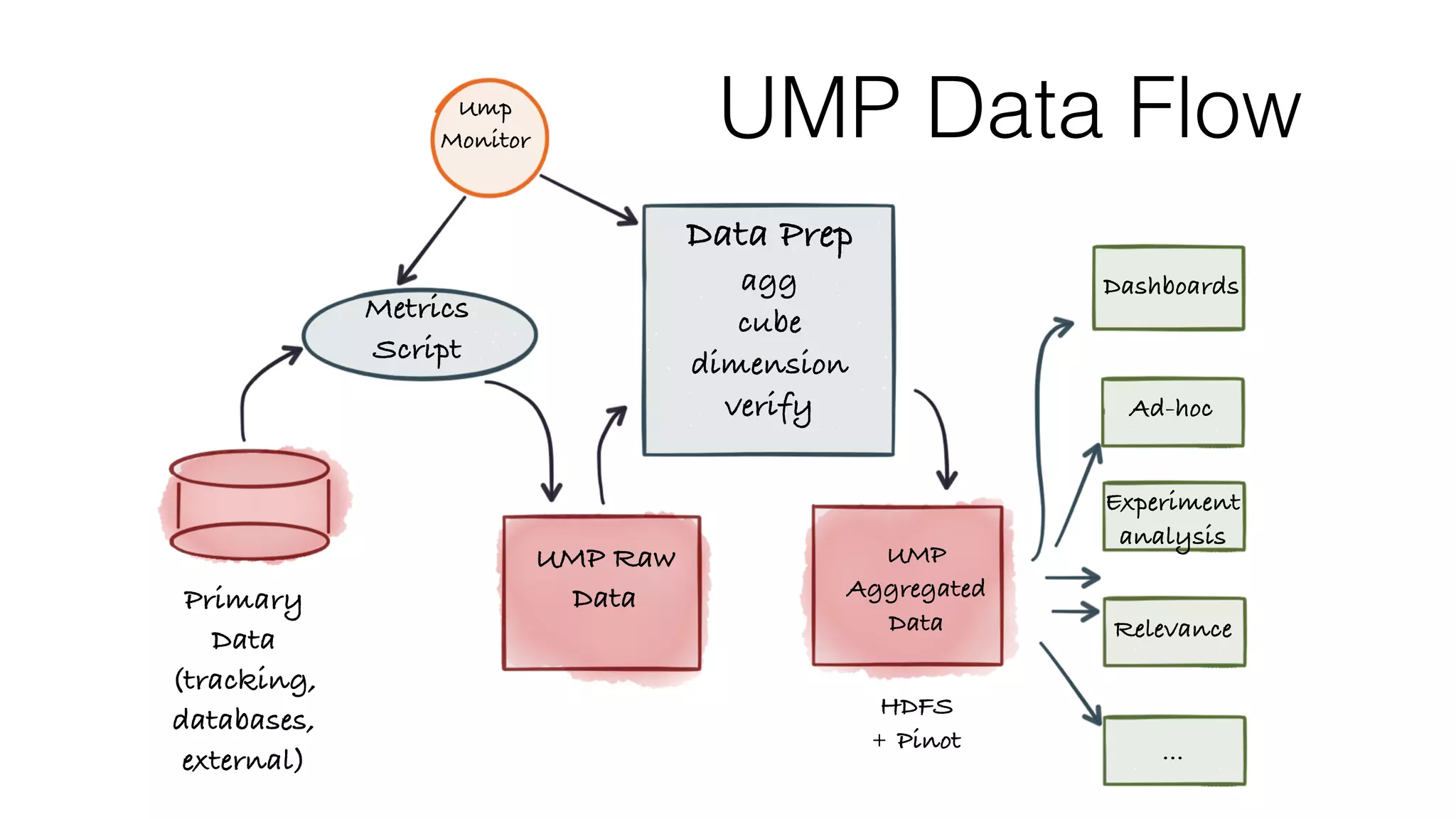

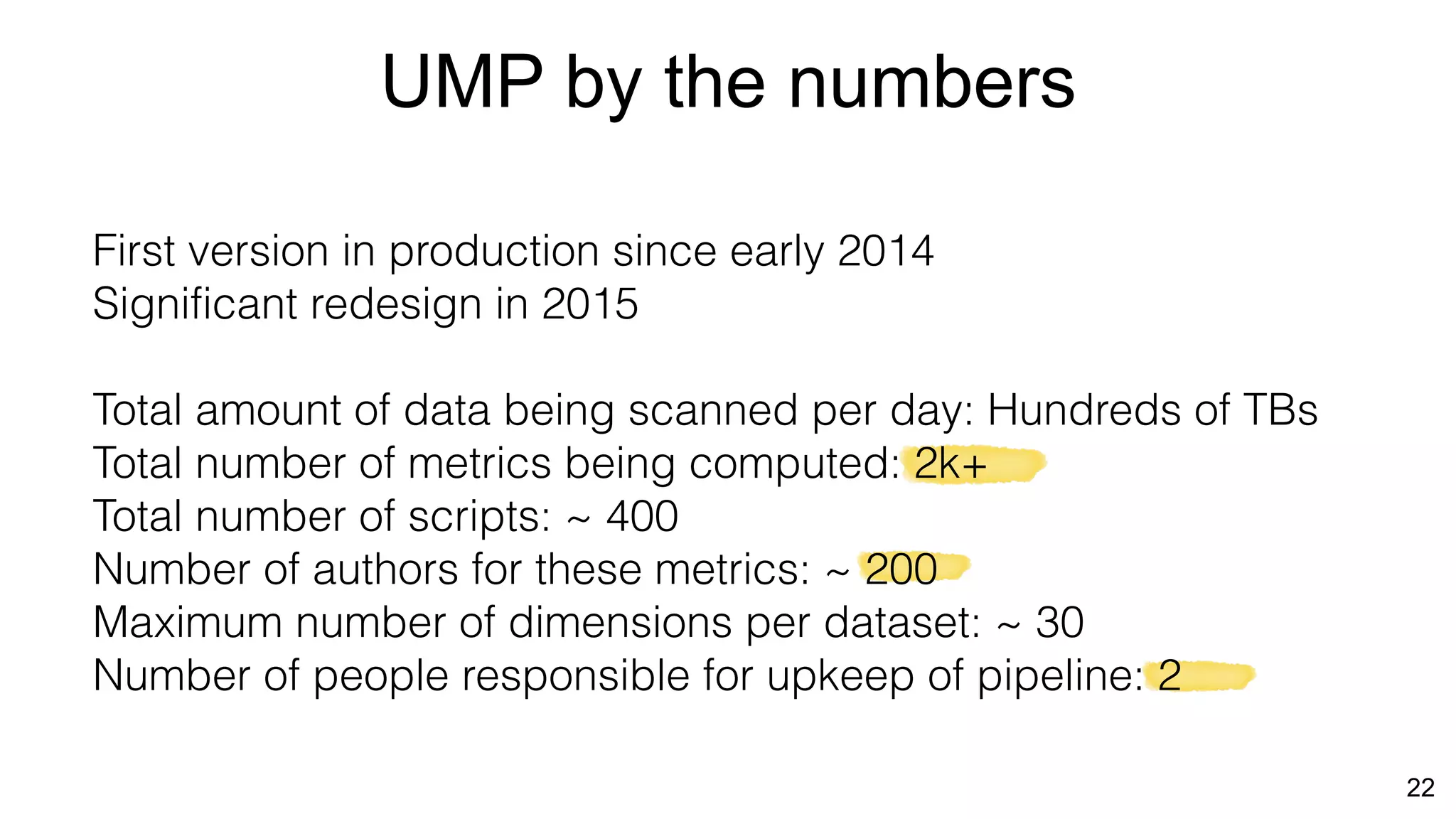

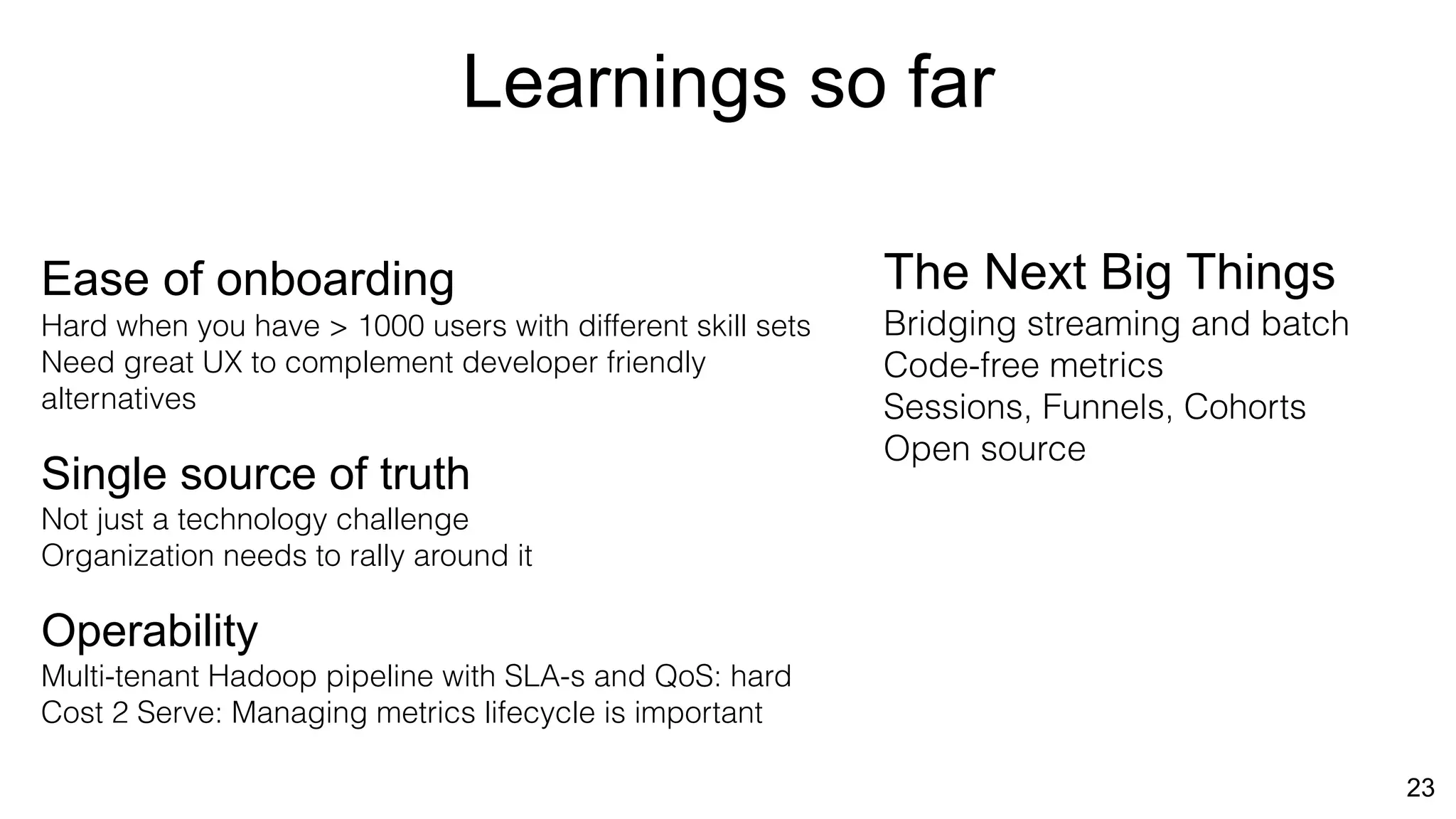

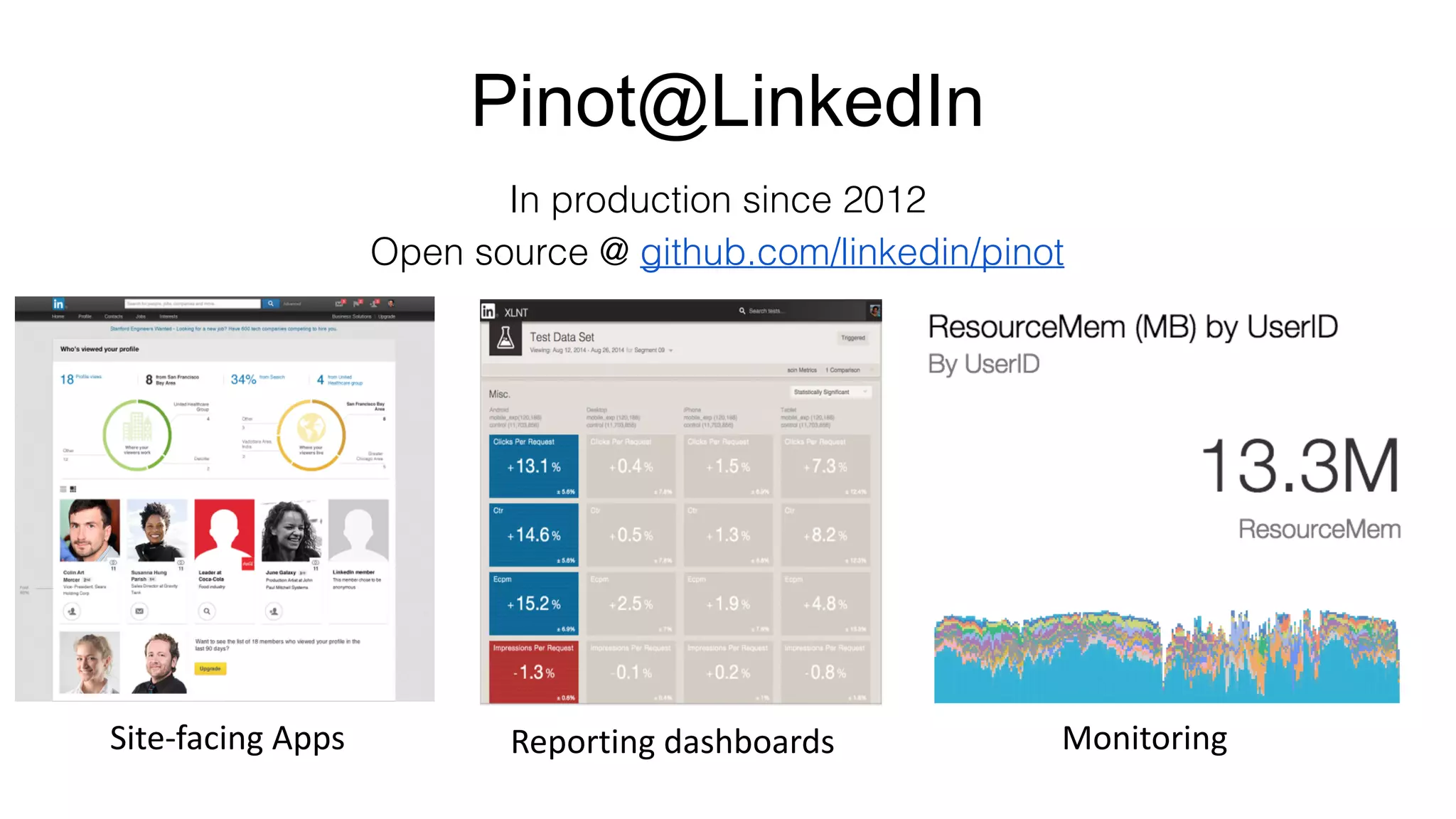

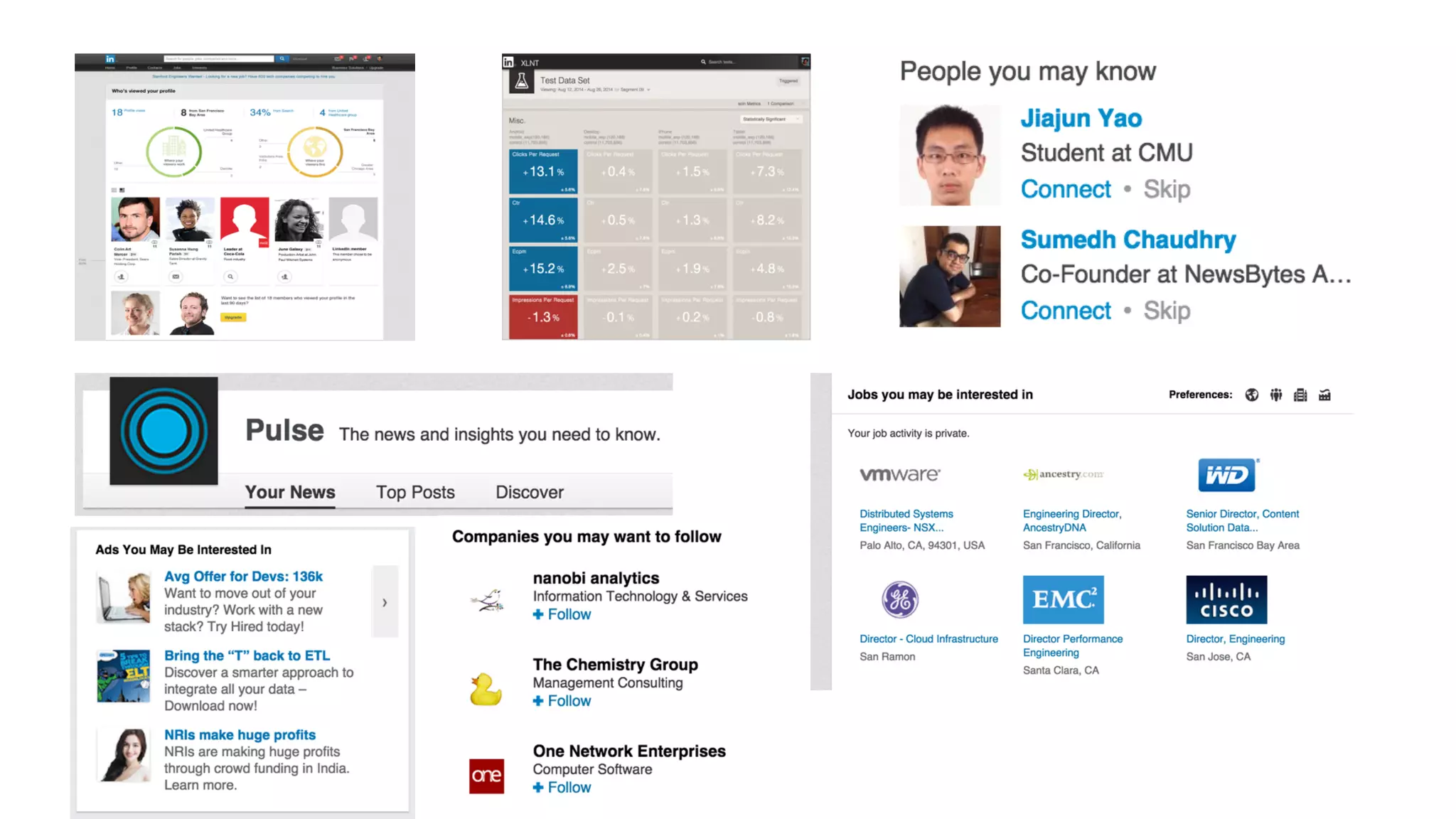

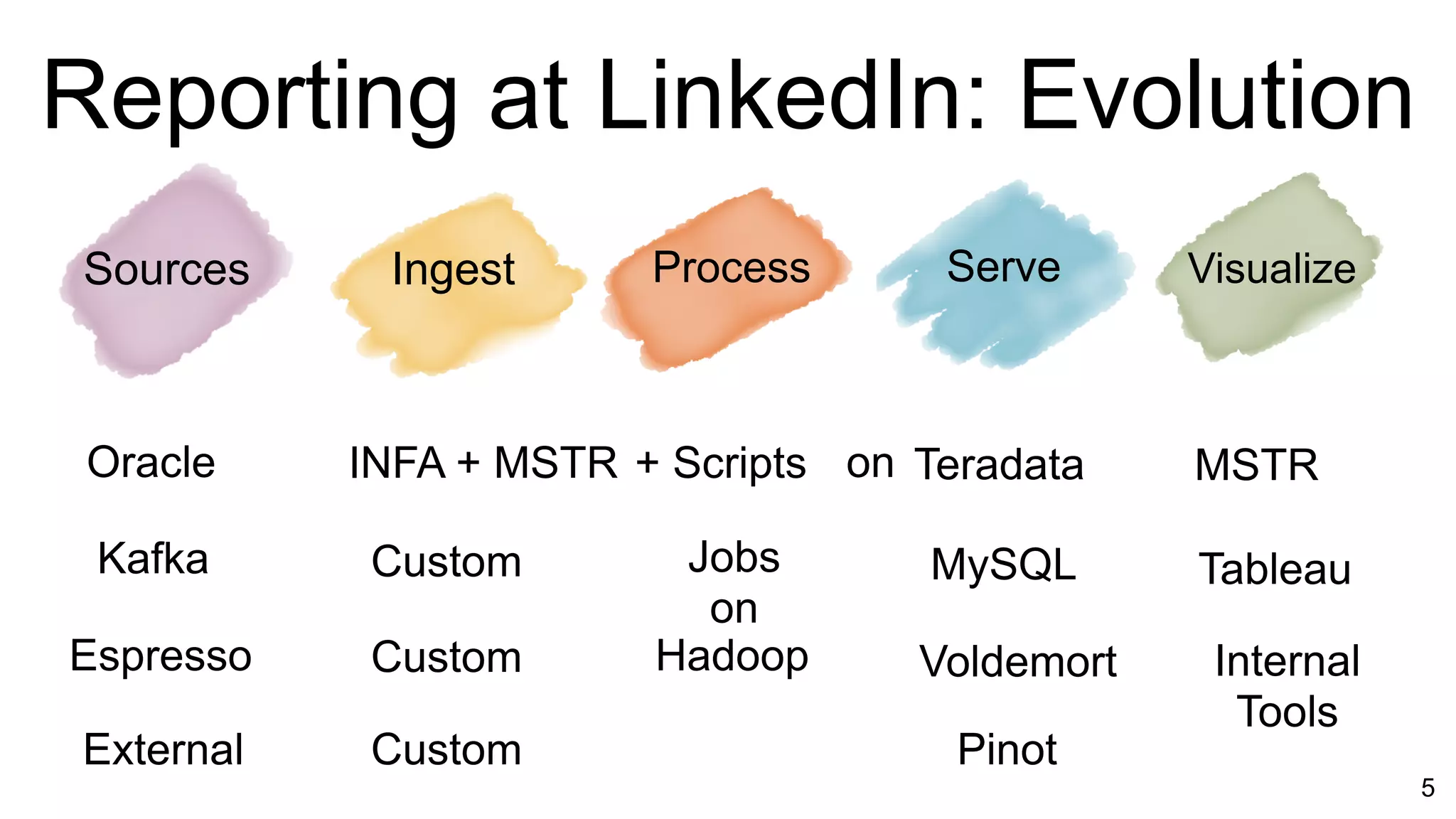

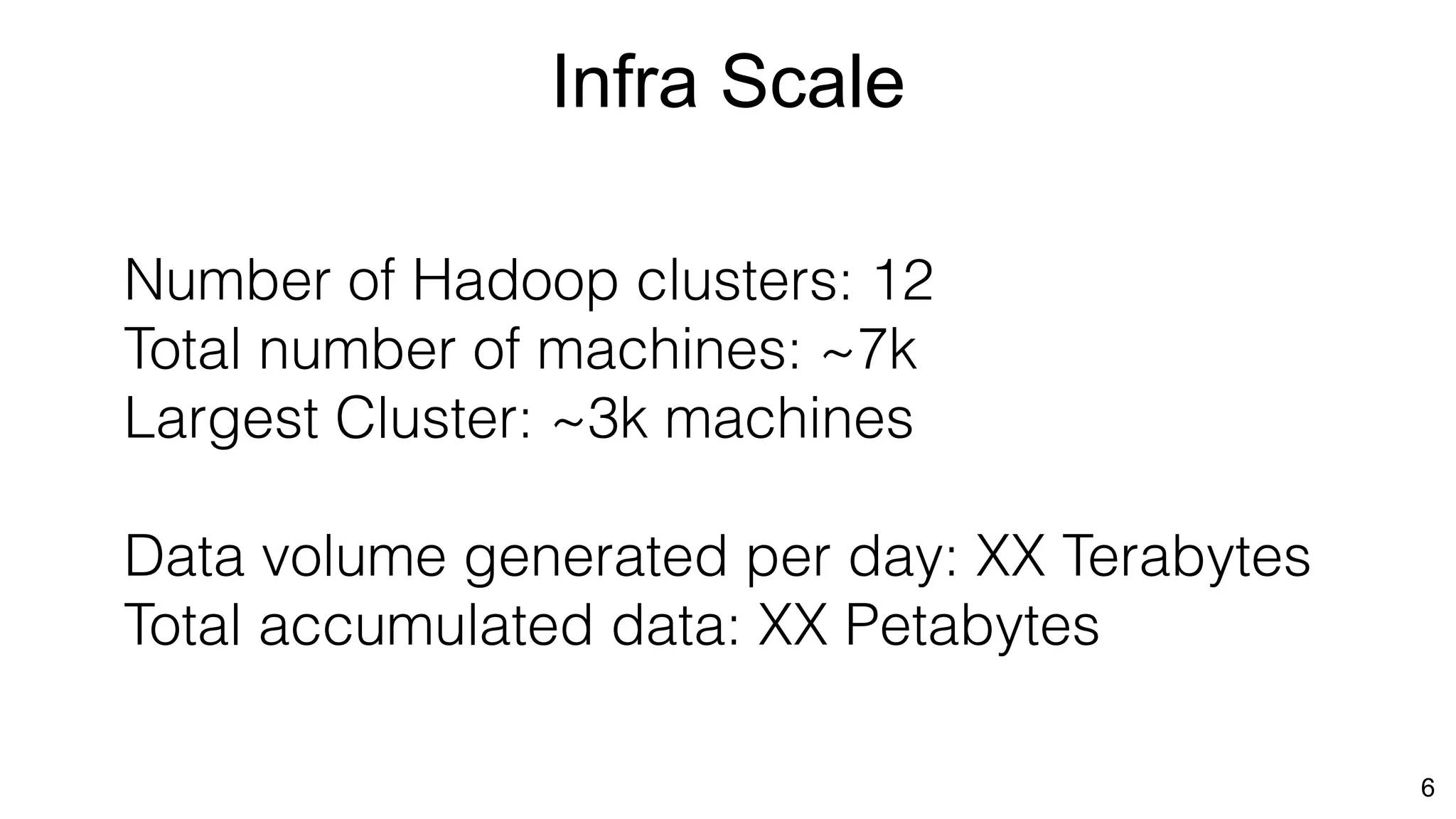

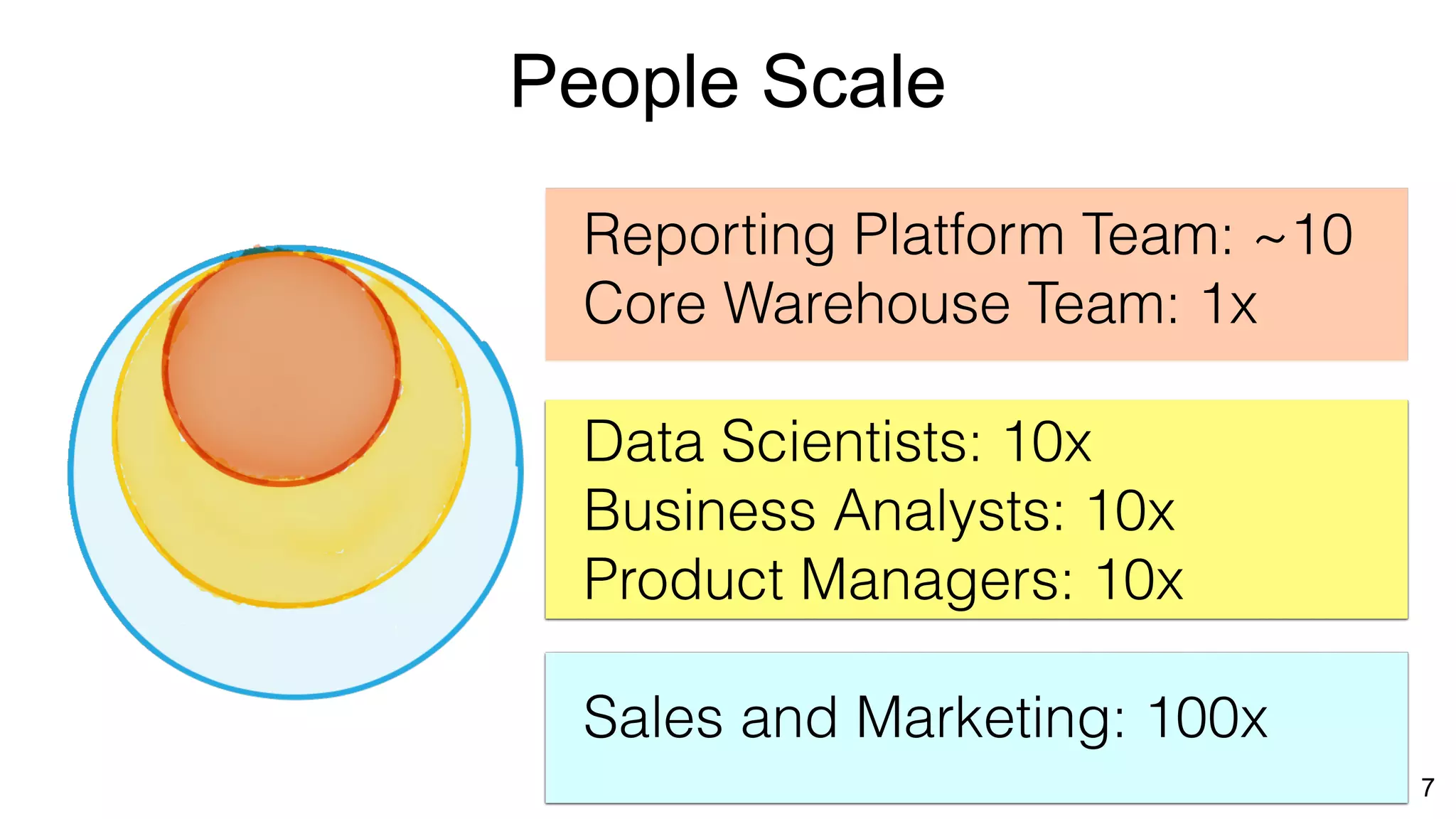

This presentation outlines LinkedIn's self-serve reporting platform, which is built on Hadoop, and the challenges the team faced in data ingestion and visualization. It covers the structure of the reporting teams, the data sources and metrics involved, and the evolution of tools such as the Unified Metrics Platform and Raptor for analytics. It also cites the data volumes handled daily and describes the collaborative effort to create a centralized source of truth for reporting metrics across the organization.

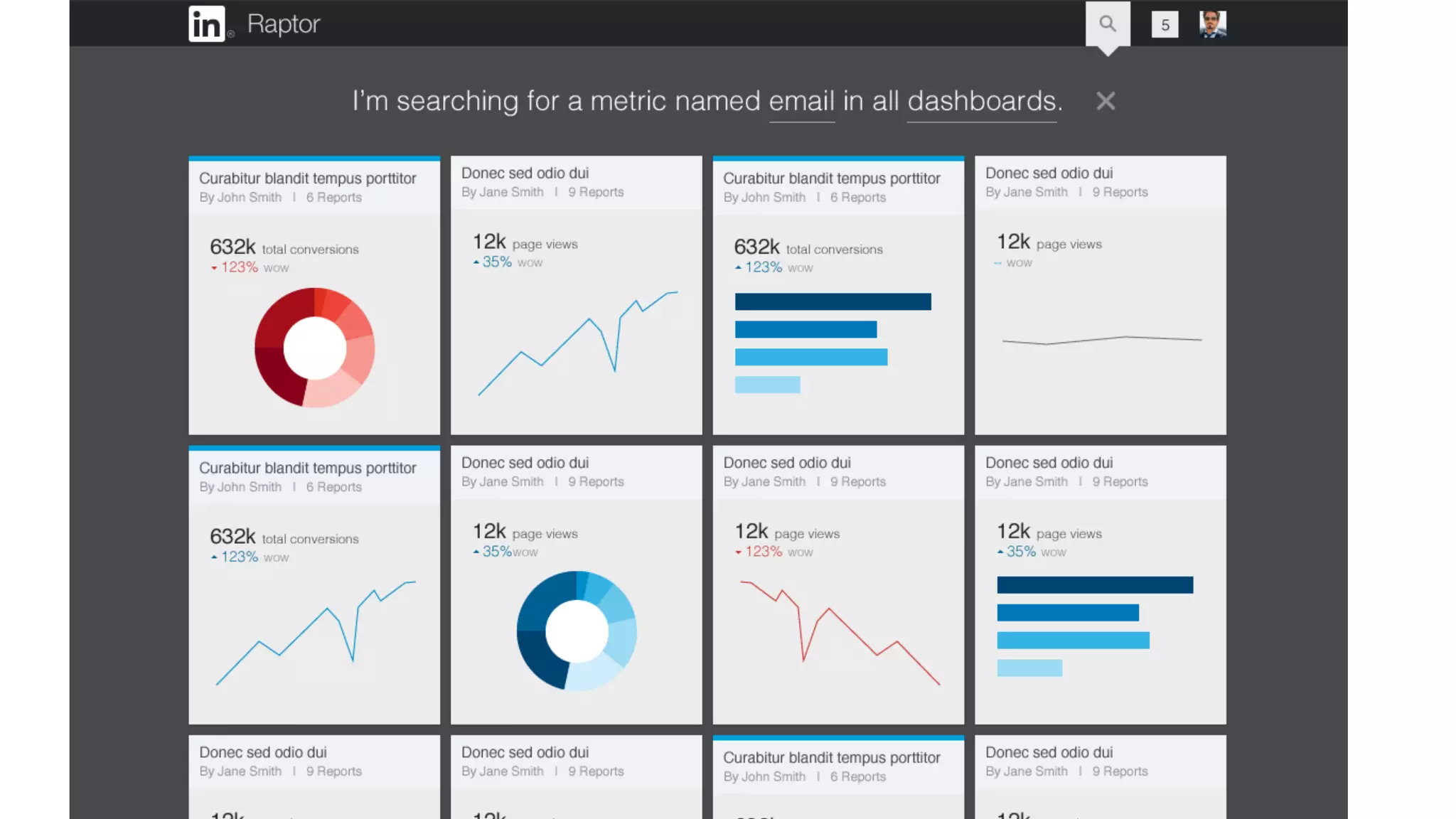

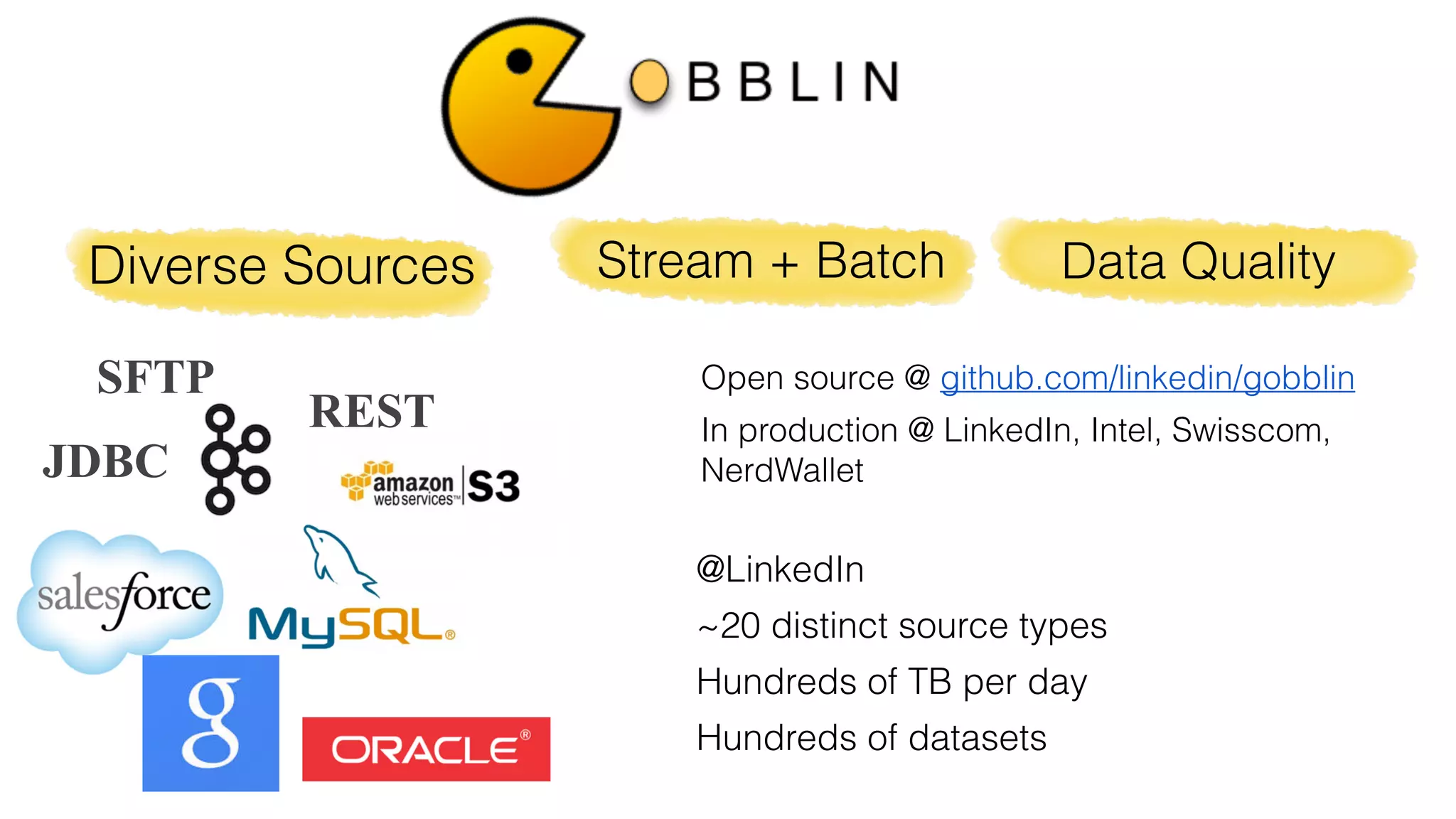

![An example: video play analysis

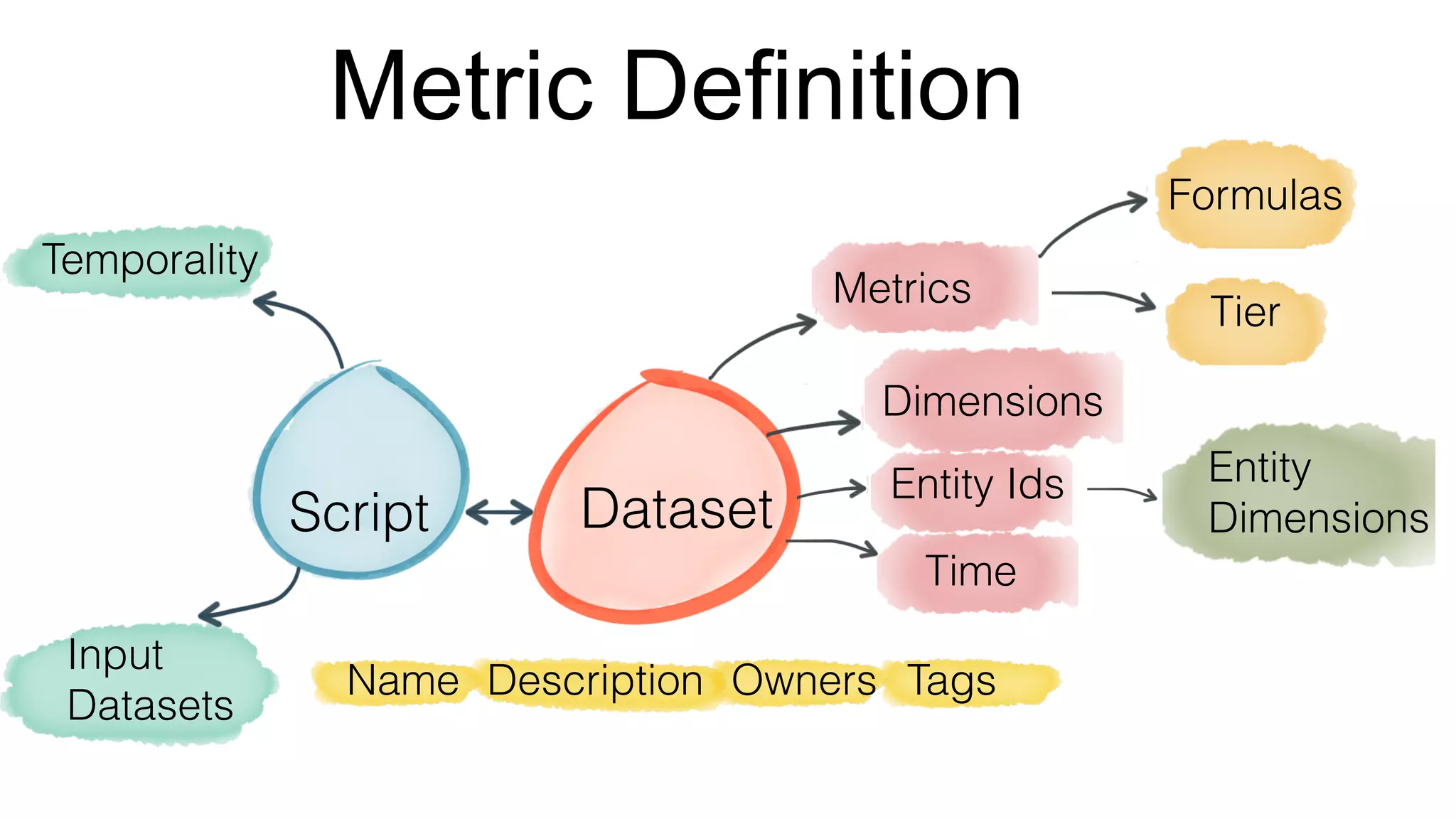

name: "video"

description: “Metrics for video tracking”

label: “video”

tags: [flagship, feed]

owners: [jdoe, jsmith]

enabled: true

retention: 90d

timestamp: timestamp

frequency: daily

script: video_play.pig

output_window: 1d

dimensions:[

{

name: platform

doc: “phone, tablet or desktop"

}

{

name: action_type

doc: “click play or auto-play“

}

]

input_datasets

[

{

name: actionsRaw

path: Tracking.ActionEvent

range: 1d

}

]](https://image.slidesharecdn.com/reportinglinkedinstratafinal-151207084404-lva1-app6891/75/Strata-SG-2015-LinkedIn-Self-Serve-Reporting-Platform-on-Hadoop-19-2048.jpg)
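To make the scheduling fields on this slide concrete, here is a minimal Python sketch of how a runner might turn `range` and `retention` into dates. The slide does not define these semantics, so the interpretation is an assumption: `range` is read as the number of days of input ending at the run date, and `retention` as how long output is kept. The names `parse_days` and `plan_run` are hypothetical and are not part of the platform.

```python
from datetime import date, timedelta


def parse_days(spec: str) -> timedelta:
    """Parse a day-based duration such as '1d' or '90d' (the only form on the slide)."""
    if not (spec.endswith("d") and spec[:-1].isdigit()):
        raise ValueError(f"unsupported duration: {spec!r}")
    return timedelta(days=int(spec[:-1]))


# A subset of the slide's fields, transcribed as a plain dict.
video_config = {
    "name": "video",
    "frequency": "daily",
    "retention": "90d",
    "output_window": "1d",
    "input_datasets": [
        {"name": "actionsRaw", "path": "Tracking.ActionEvent", "range": "1d"},
    ],
}


def plan_run(config: dict, run_date: date) -> dict:
    """Hypothetical helper: derive input windows and a retention cutoff for one run."""
    inputs = {}
    for ds in config["input_datasets"]:
        # Assumed semantics: half-open window [run_date - range, run_date).
        inputs[ds["name"]] = (run_date - parse_days(ds["range"]), run_date)
    return {
        "inputs": inputs,
        # Assumed semantics: output older than this date may be dropped.
        "drop_output_before": run_date - parse_days(config["retention"]),
    }


plan = plan_run(video_config, date(2015, 12, 1))
print(plan)
```

Under these assumptions, a daily run reads exactly one day of `Tracking.ActionEvent` data and keeps roughly three months of output.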

![An example contd…
metrics: [
  {
    name: unique_viewers
    doc: "Count of unique viewers"
    formula: "unique(member_id)"
    tier: 2
    good_direction: "up"
  }
  {
    name: play_actions
    doc: "Sum of play actions"
    tier: 2
    formula: "sum(play_actions)"
    good_direction: "up"
  }
]
entity_ids: [
  {
    name: member_id
    category: member
  }
  {
    name: video_id
    category: video
  }
]](https://image.slidesharecdn.com/reportinglinkedinstratafinal-151207084404-lva1-app6891/75/Strata-SG-2015-LinkedIn-Self-Serve-Reporting-Platform-on-Hadoop-20-2048.jpg)
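The two metric formulas can be illustrated with a small Python sketch that groups rows by the `platform` and `action_type` dimensions. This is not the platform's implementation, which the slides describe as driven by a Pig script. The sample rows and the `aggregate` function are made up for illustration, and reading `unique(...)` as a distinct count and `sum(...)` as a plain sum is an assumption based on the `doc` strings.

```python
from collections import defaultdict

# Hypothetical input rows, shaped like the output of a script such as video_play.pig.
rows = [
    {"member_id": 1, "video_id": 10, "platform": "phone", "action_type": "click play", "play_actions": 2},
    {"member_id": 1, "video_id": 11, "platform": "phone", "action_type": "click play", "play_actions": 1},
    {"member_id": 2, "video_id": 10, "platform": "phone", "action_type": "click play", "play_actions": 1},
    {"member_id": 2, "video_id": 12, "platform": "desktop", "action_type": "auto-play", "play_actions": 3},
]


def aggregate(rows, dimensions=("platform", "action_type")):
    """Compute unique_viewers and play_actions for each combination of dimension values."""
    viewers = defaultdict(set)  # distinct member_id values per group
    plays = defaultdict(int)    # running sum of play_actions per group
    for row in rows:
        key = tuple(row[d] for d in dimensions)
        viewers[key].add(row["member_id"])
        plays[key] += row["play_actions"]
    return {
        key: {"unique_viewers": len(viewers[key]), "play_actions": plays[key]}
        for key in viewers
    }


result = aggregate(rows)
for key, metrics in result.items():
    print(key, metrics)
```

One consequence worth noting: sums can be rolled up across groups by adding them, but distinct counts cannot, because the same member may appear in several groups. In the sample, grouping by `platform` alone gives one unique viewer per platform group on desktop and two on phone, yet only two members exist overall.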