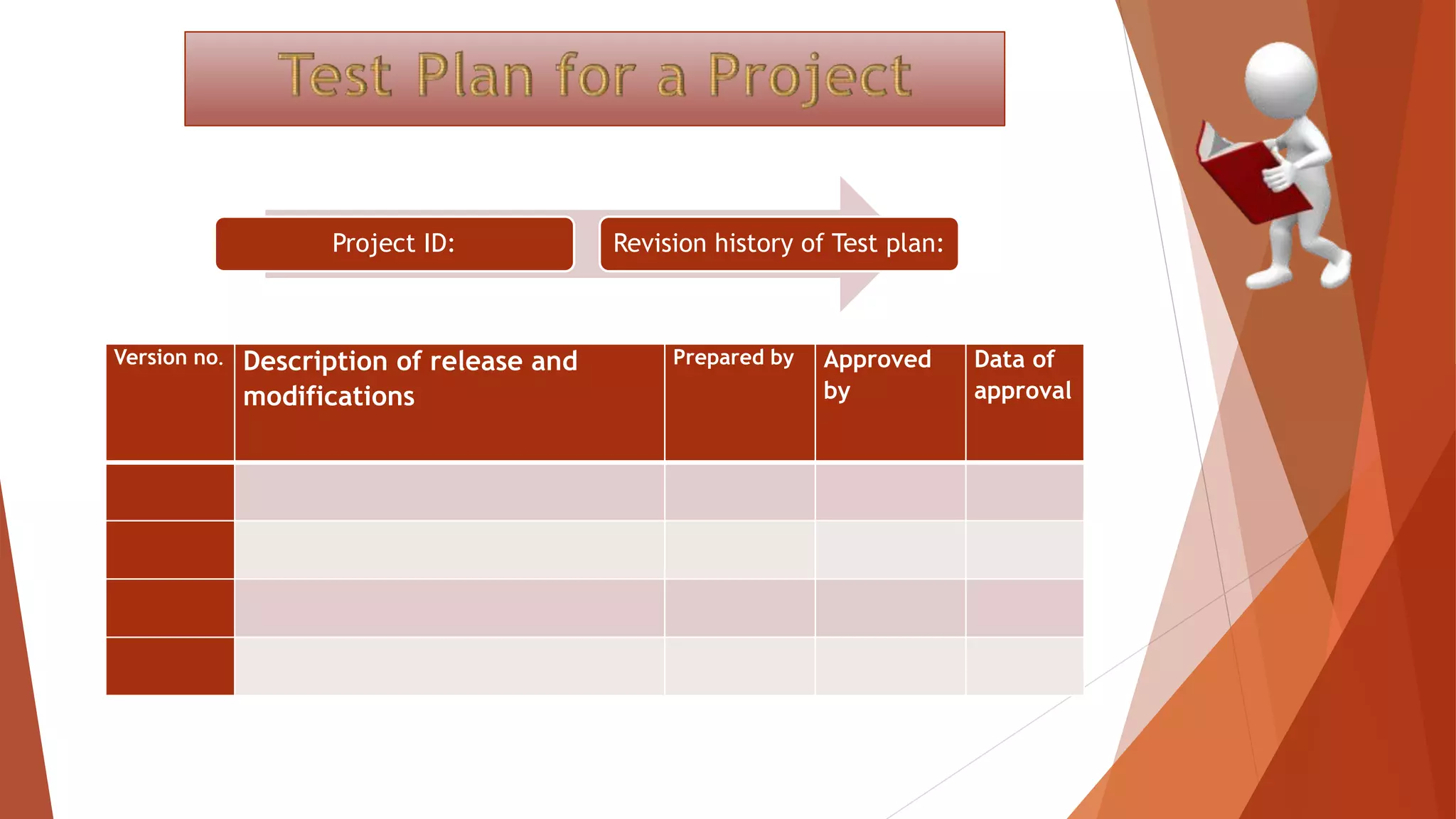

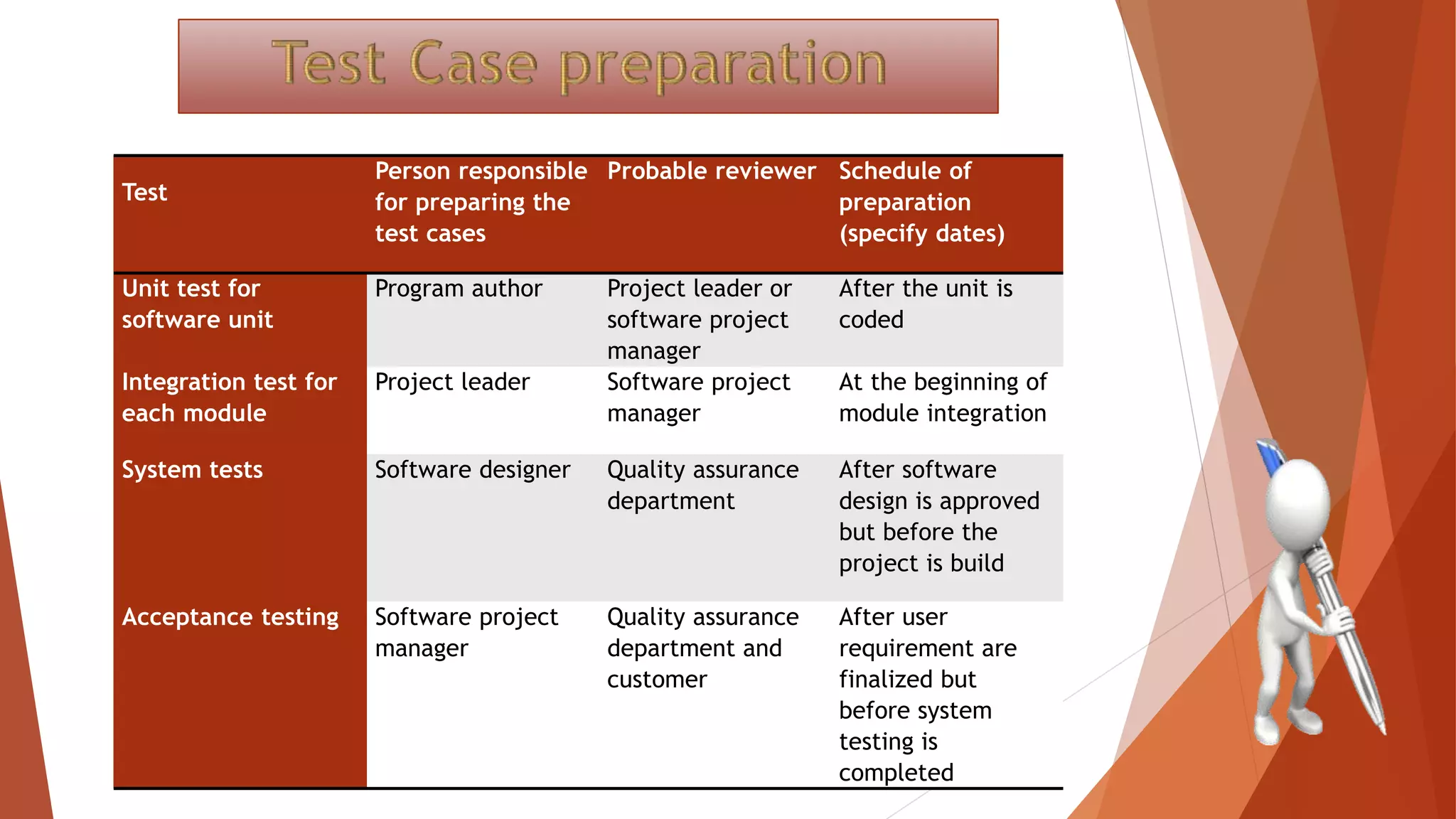

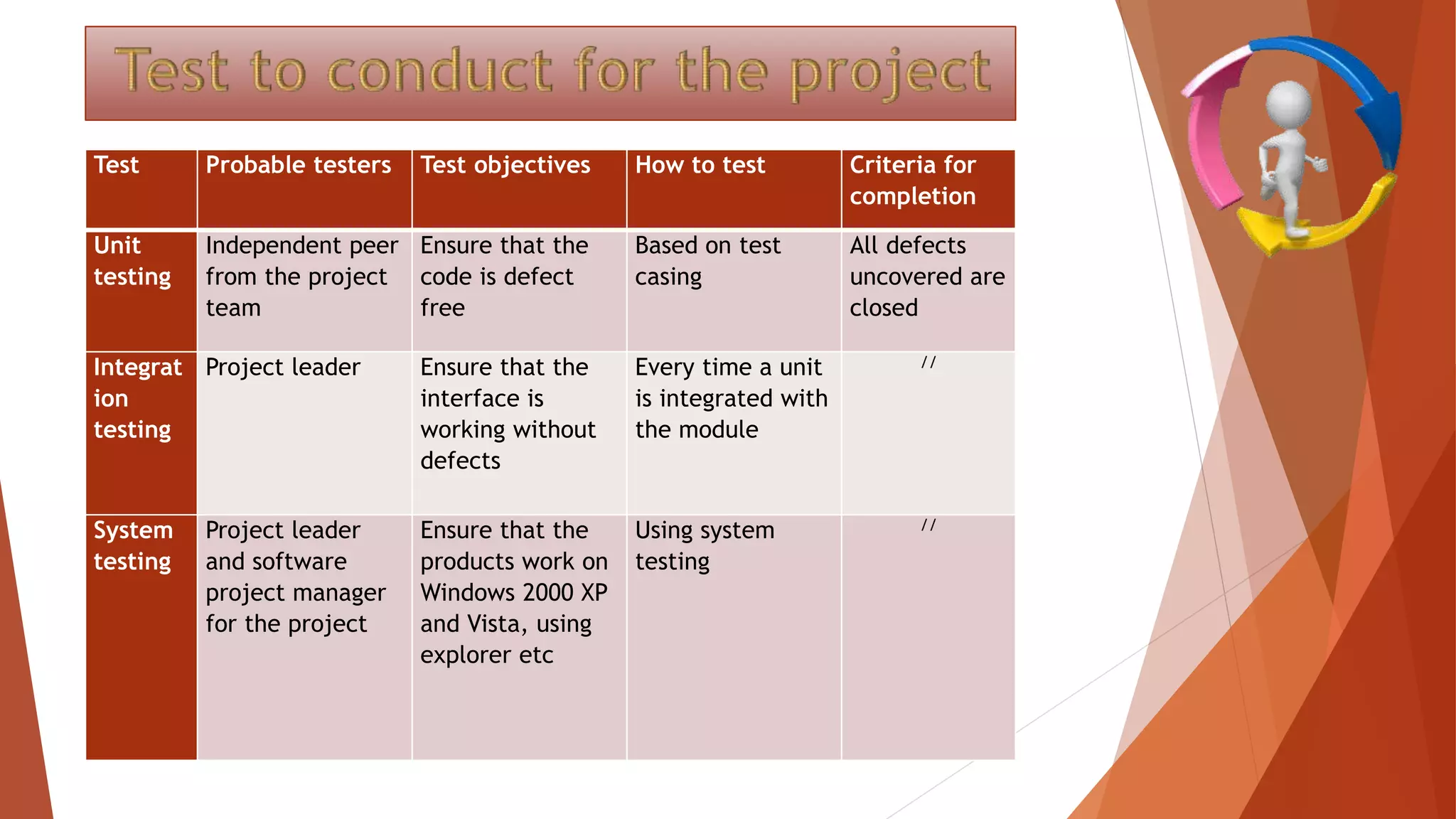

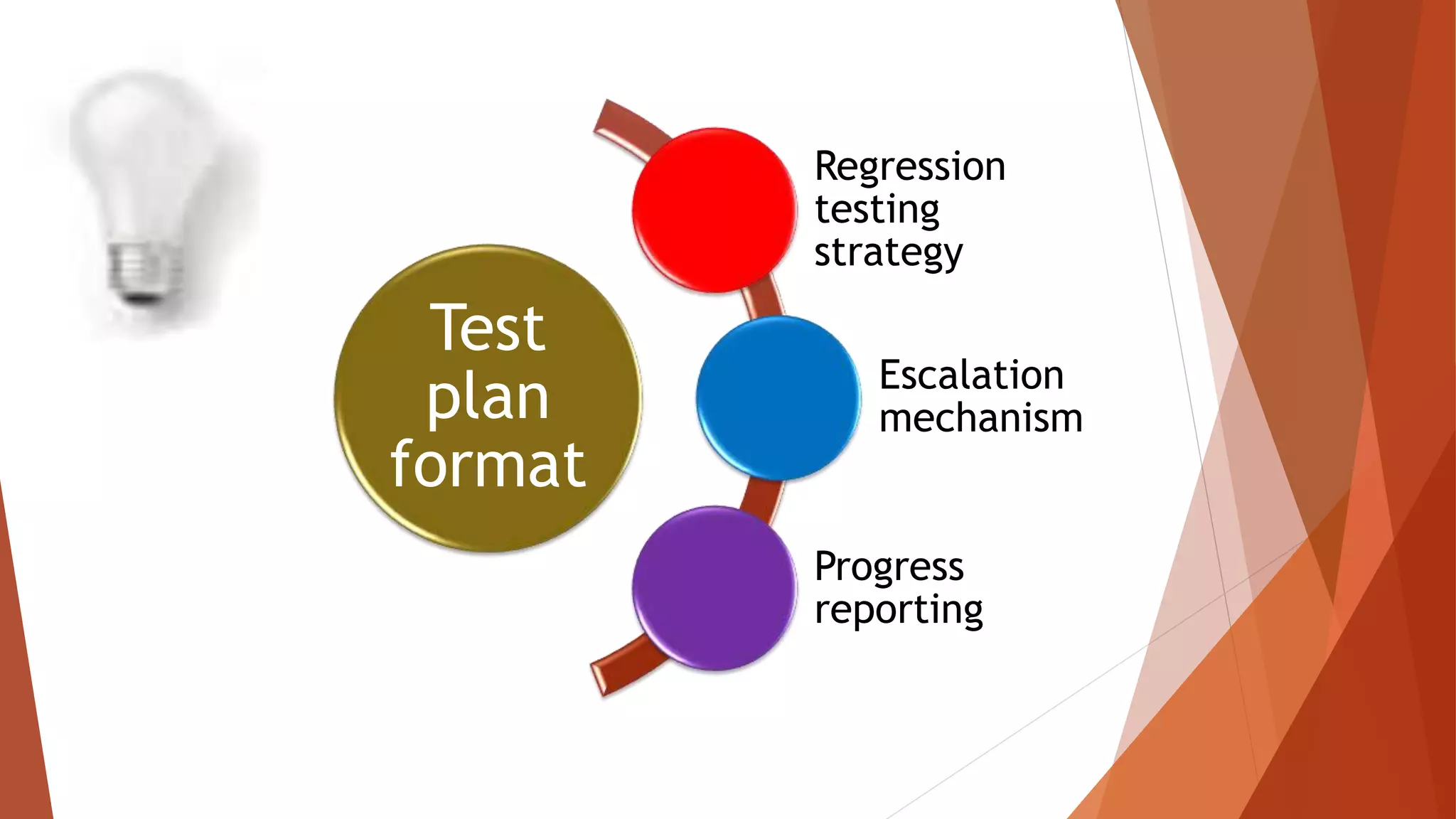

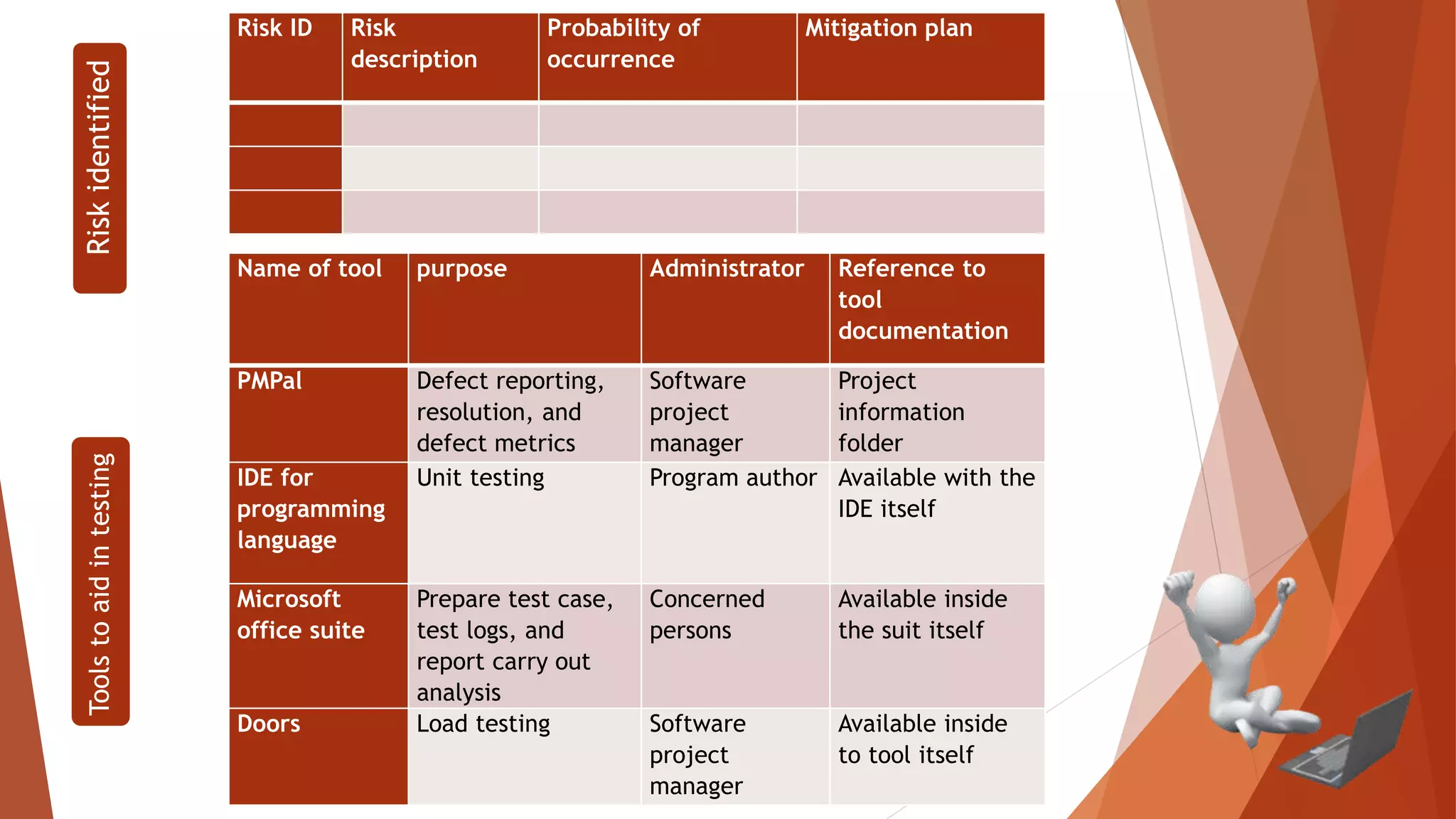

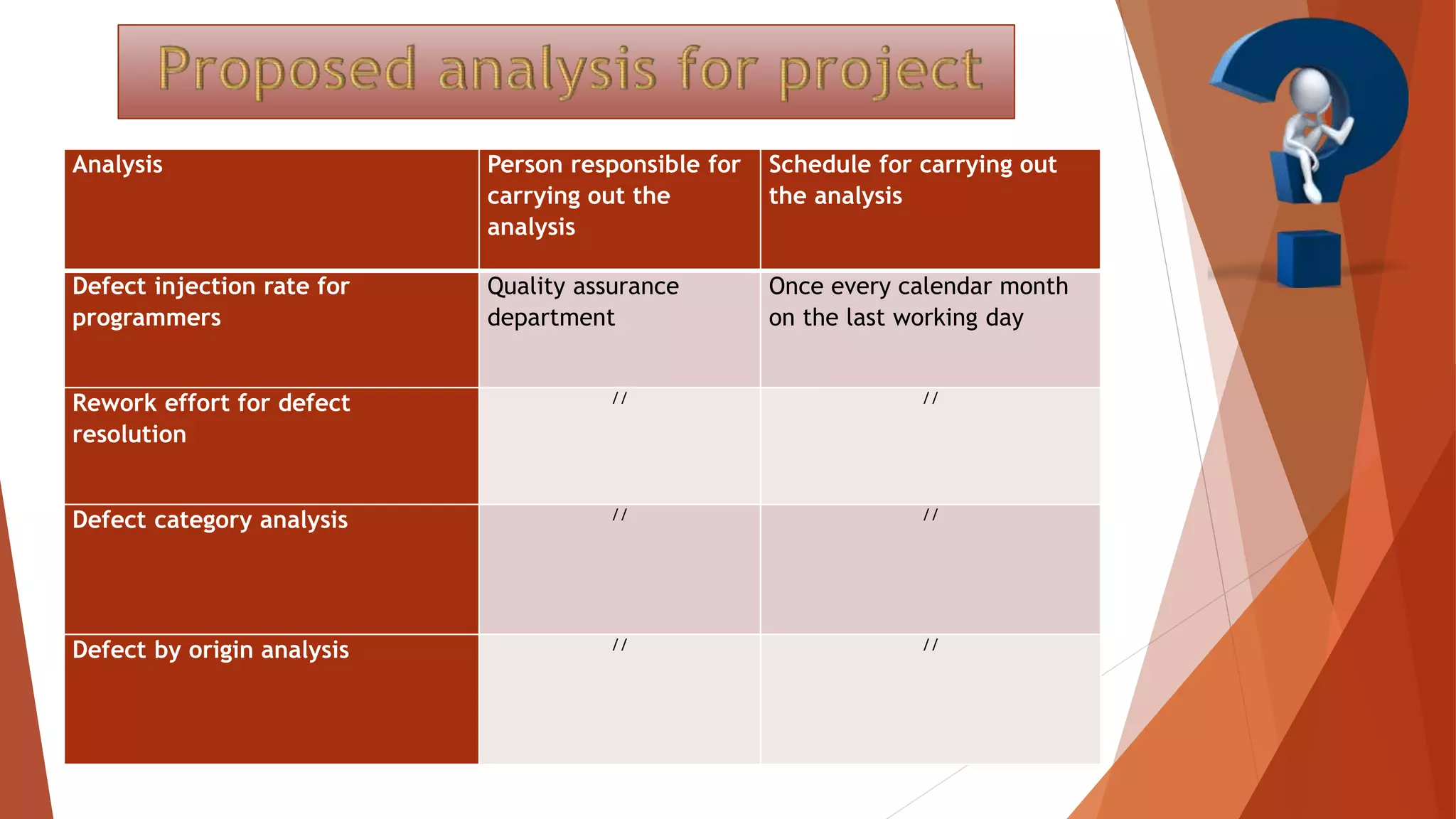

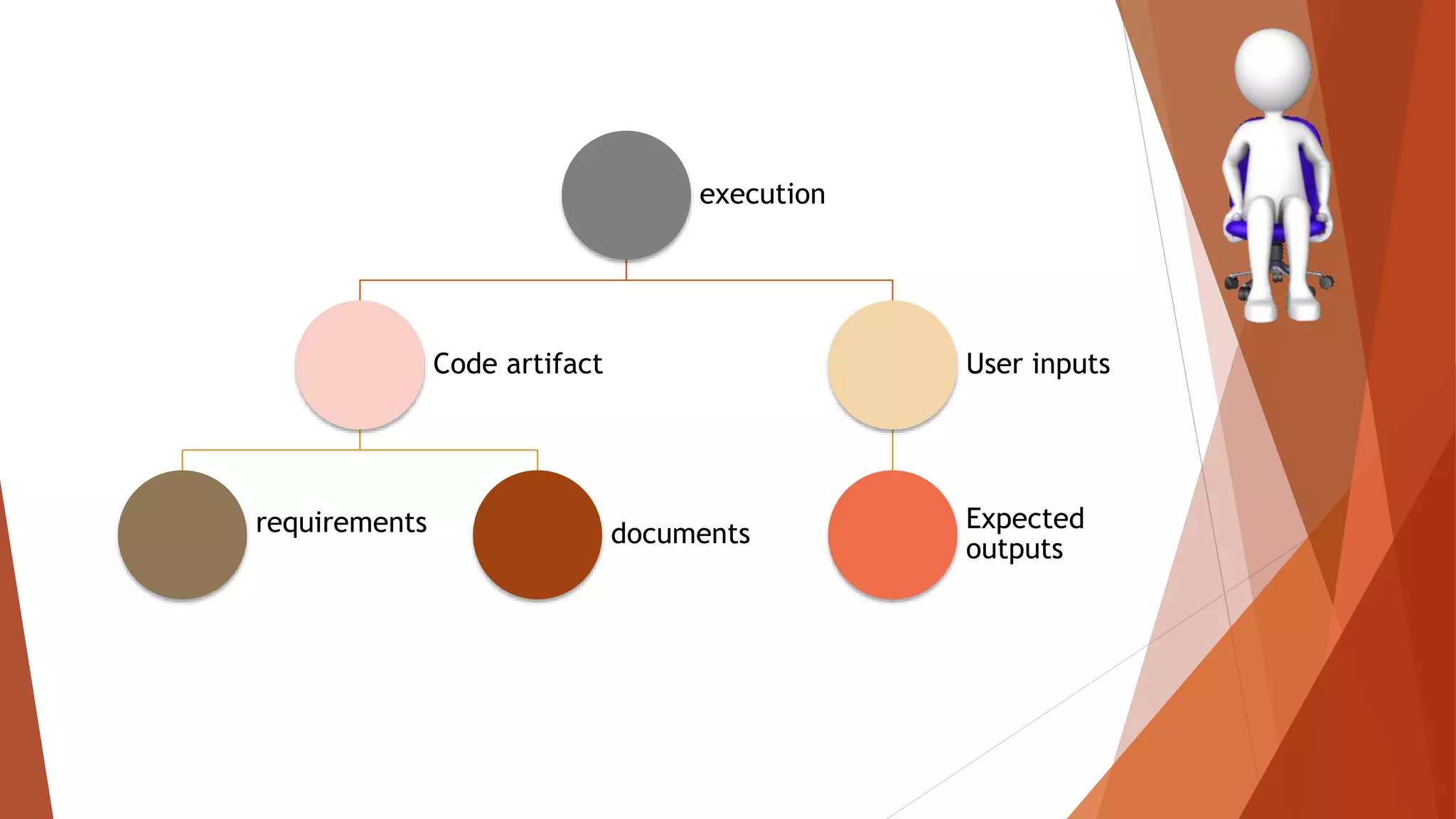

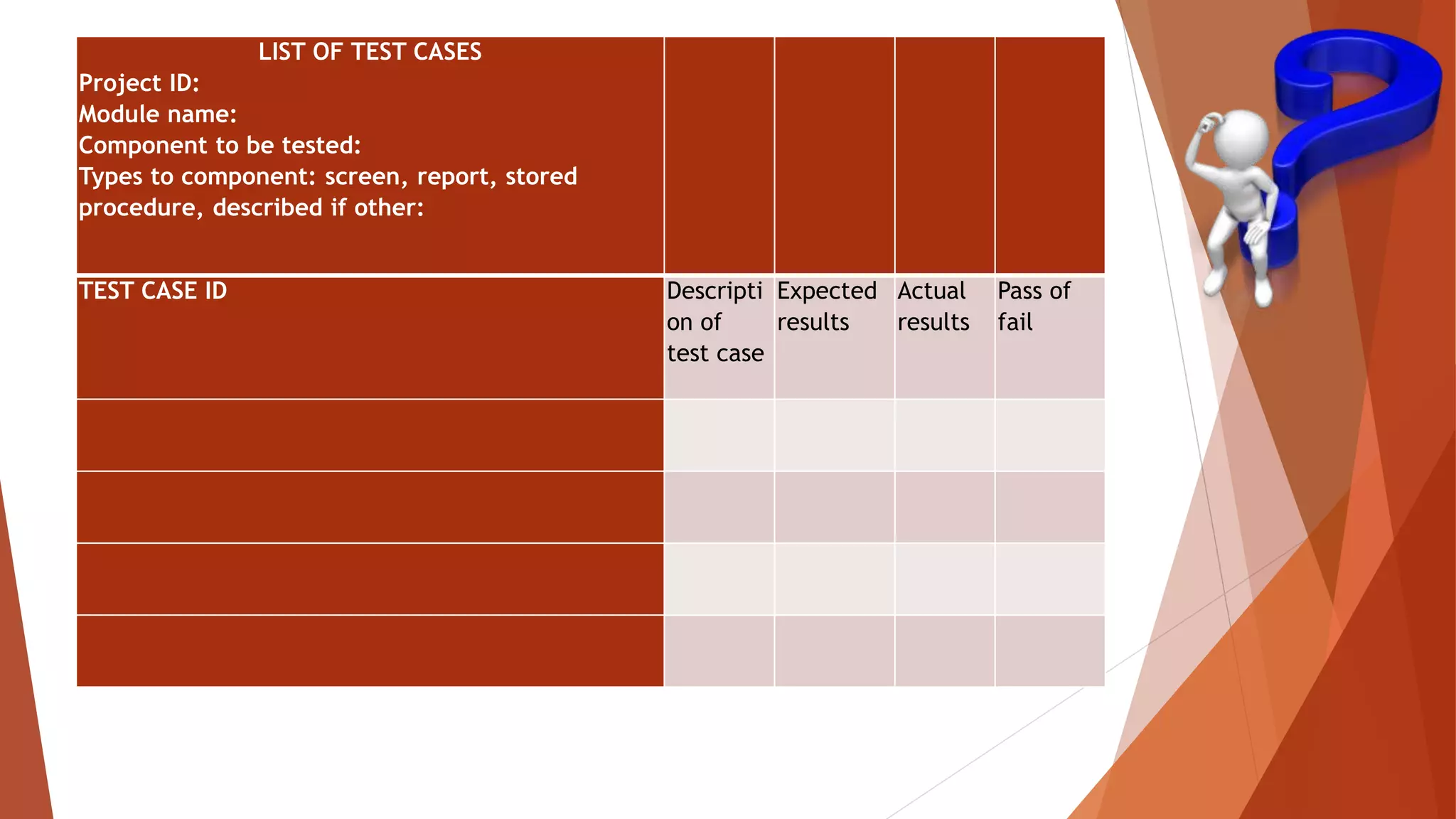

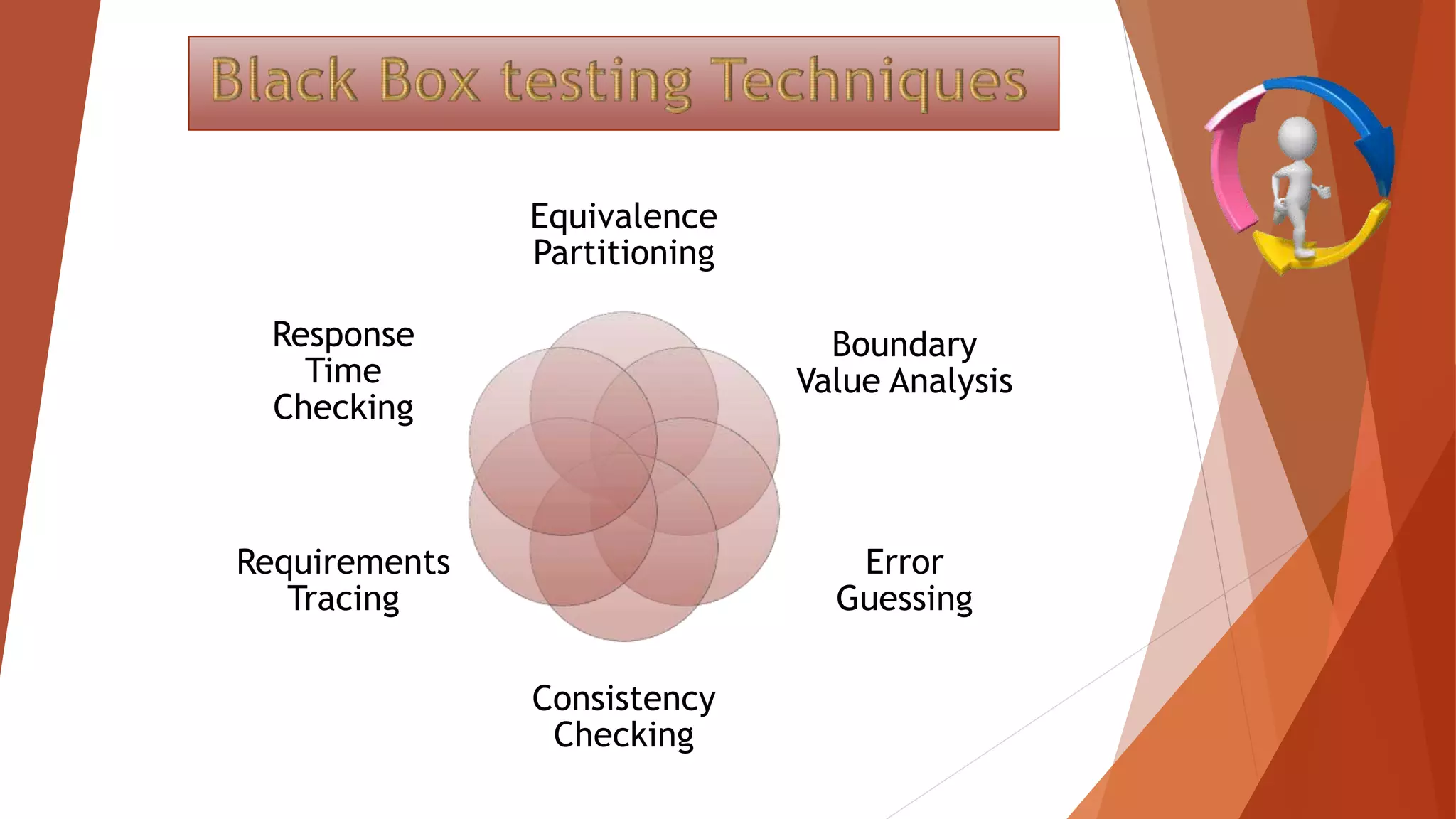

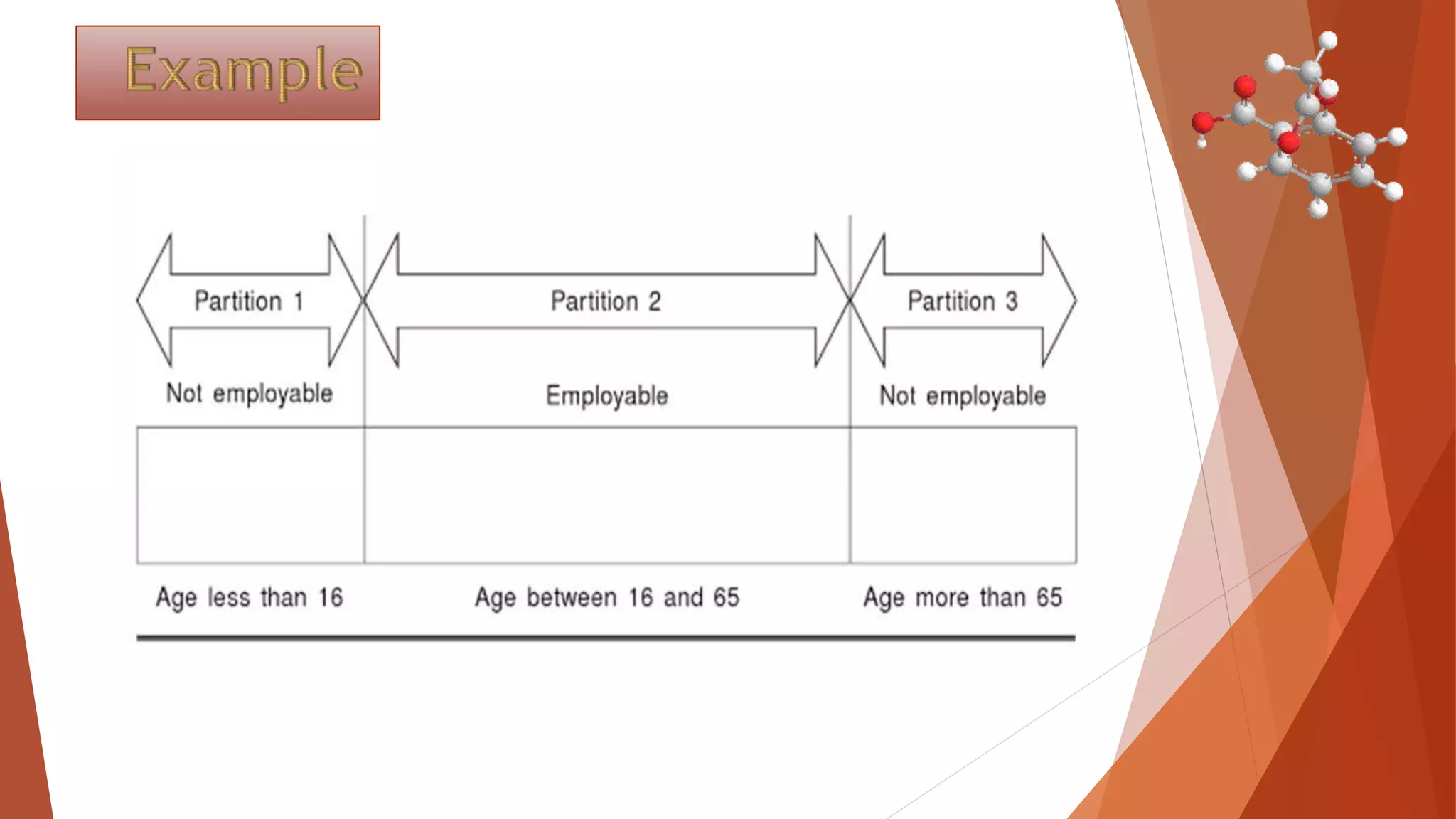

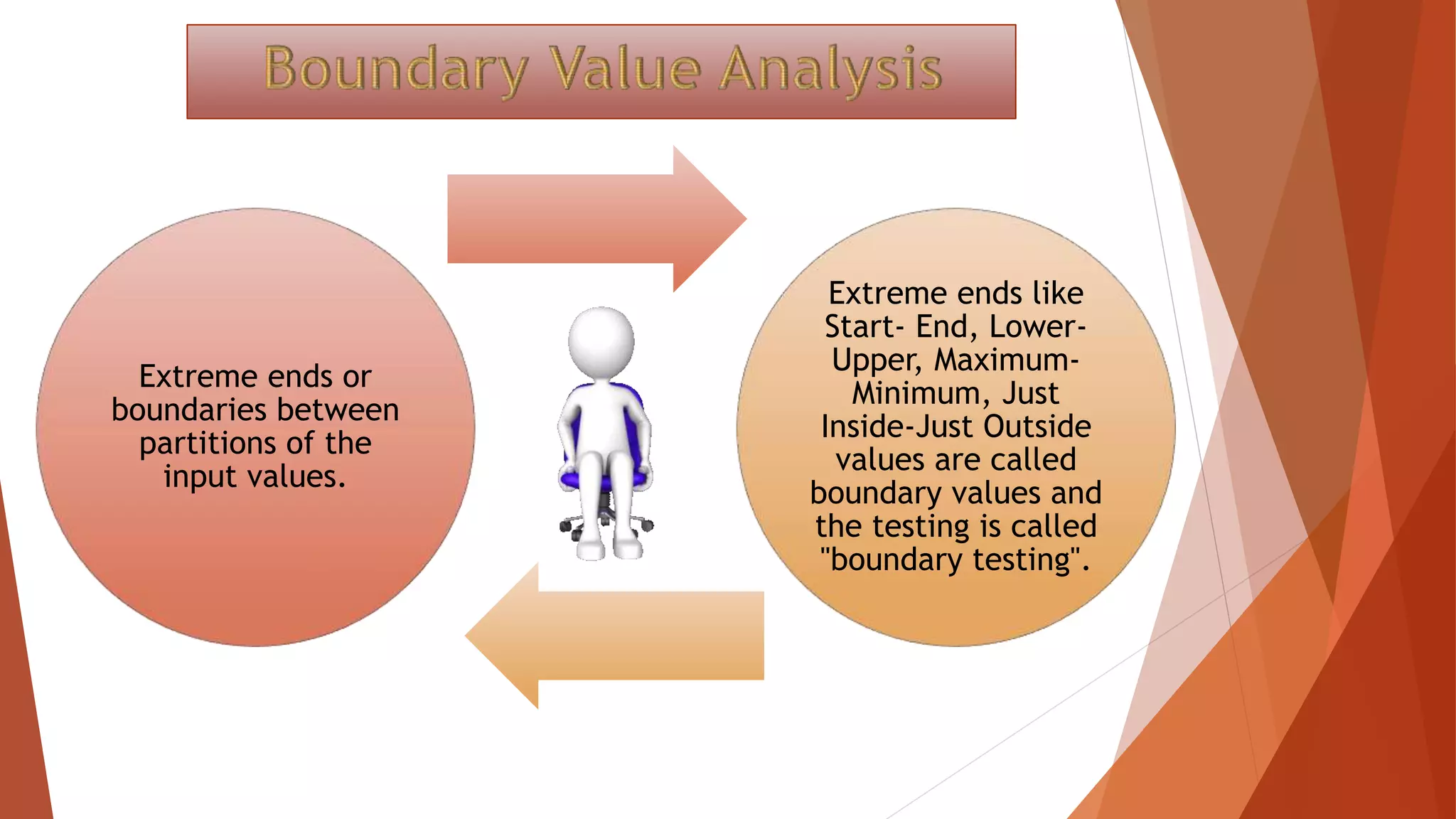

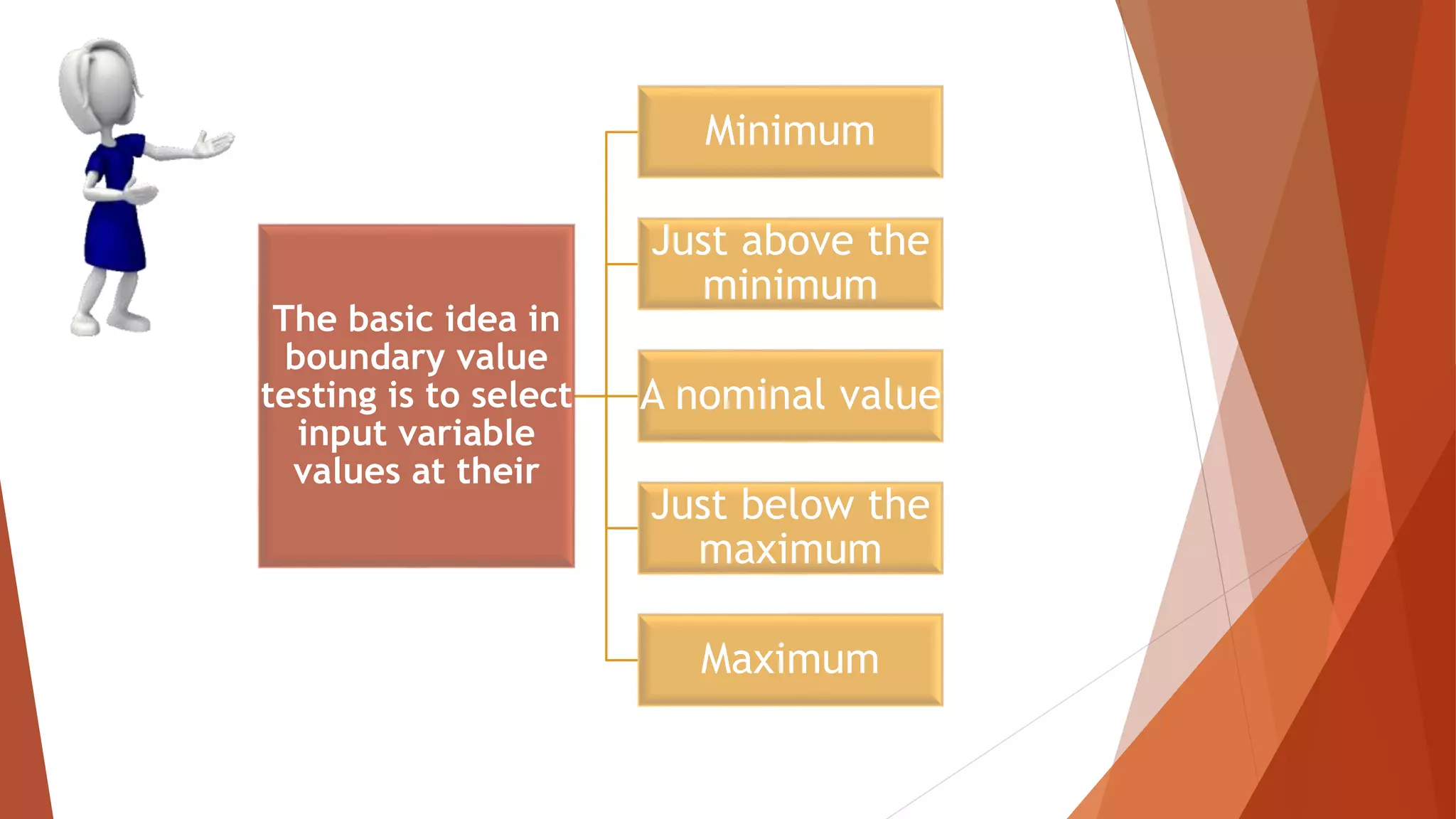

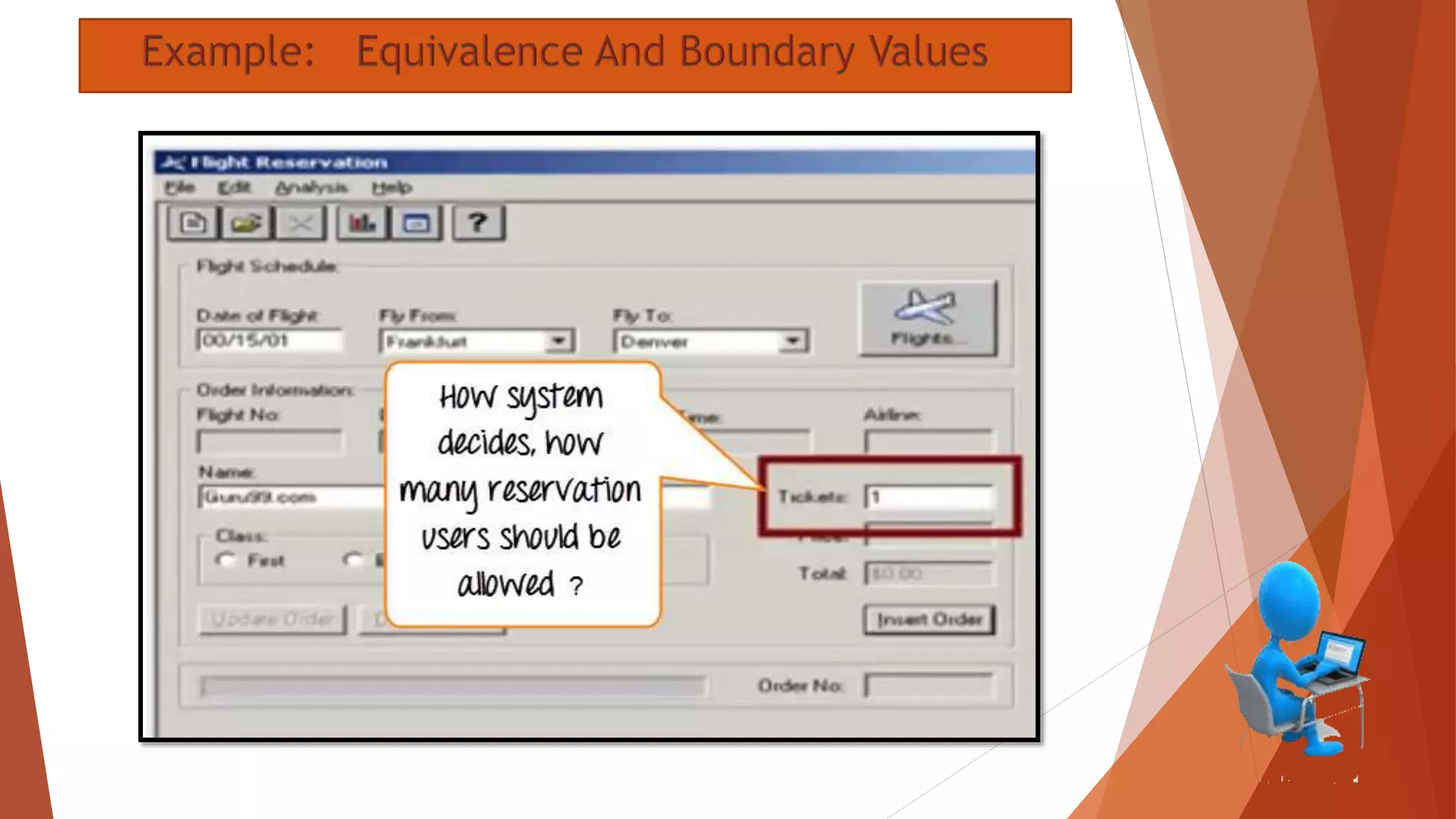

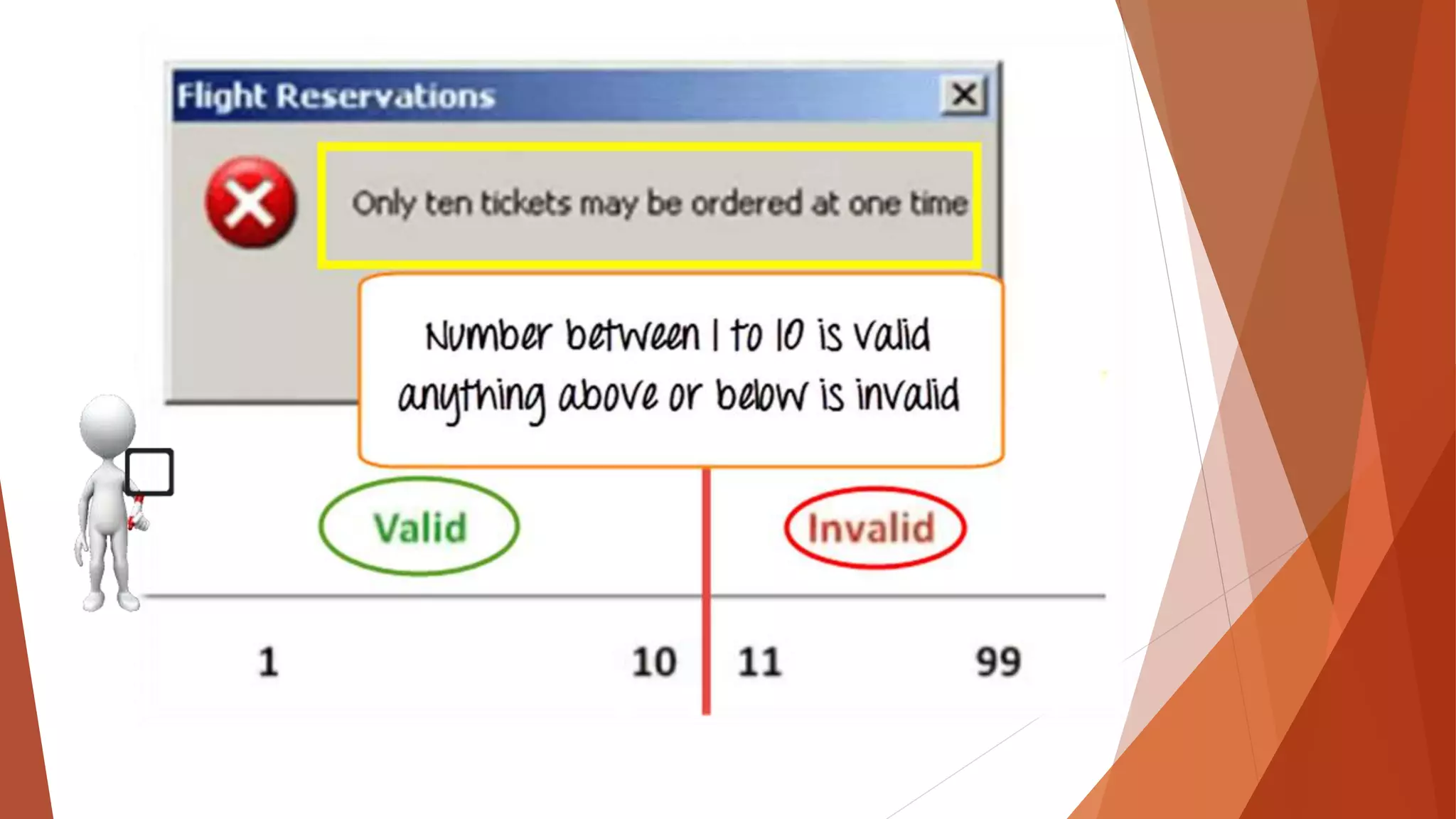

The document discusses software quality assurance and testing techniques. It opens with an introduction, lists the group members, and outlines the discussion topics: test case design, the test plan format, and black box testing techniques such as equivalence partitioning, boundary value analysis, error guessing, consistency checking, and requirements tracing. It also lists tools that aid testing.
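Two of the black box techniques named above, equivalence partitioning and boundary value analysis, can be illustrated with a short example. The sketch below is not taken from the slides. It assumes a hypothetical function `is_valid_age` that accepts ages from 18 to 65 inclusive, plus a hypothetical off-by-one variant `is_valid_age_off_by_one`. Equivalence partitioning picks one representative input from each class (below, inside, and above the valid range). Boundary value analysis tests the values just below, on, and just above each edge of the range.

```python
def is_valid_age(age: int) -> bool:
    """Hypothetical unit under test: accepts ages 18 to 65 inclusive."""
    return 18 <= age <= 65


def is_valid_age_off_by_one(age: int) -> bool:
    """Hypothetical buggy variant: wrongly rejects the lower boundary (18)."""
    return 18 < age <= 65


def equivalence_cases(lo: int, hi: int) -> list[tuple[int, bool]]:
    """One representative (input, expected result) pair per equivalence class."""
    return [
        (lo - 10, False),        # invalid class: below the range
        ((lo + hi) // 2, True),  # valid class: inside the range
        (hi + 10, False),        # invalid class: above the range
    ]


def boundary_cases(lo: int, hi: int) -> list[tuple[int, bool]]:
    """Inputs just below, on, and just above each boundary of [lo, hi]."""
    return [
        (lo - 1, False), (lo, True), (lo + 1, True),
        (hi - 1, True), (hi, True), (hi + 1, False),
    ]


def run_cases(func, cases: list[tuple[int, bool]]) -> list[int]:
    """Return the inputs for which func's result differs from the expected one."""
    return [x for x, expected in cases if func(x) != expected]


if __name__ == "__main__":
    all_cases = equivalence_cases(18, 65) + boundary_cases(18, 65)
    print(run_cases(is_valid_age, all_cases))                             # → []
    print(run_cases(is_valid_age_off_by_one, equivalence_cases(18, 65)))  # → []
    print(run_cases(is_valid_age_off_by_one, boundary_cases(18, 65)))     # → [18]
```

In this sketch the equivalence-class representatives all pass against the buggy variant. Only the boundary cases expose the off-by-one error at 18, which is why the two techniques are usually applied together.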