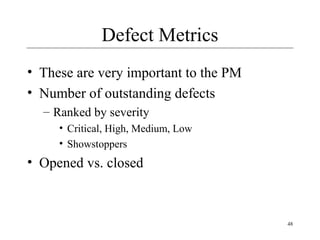

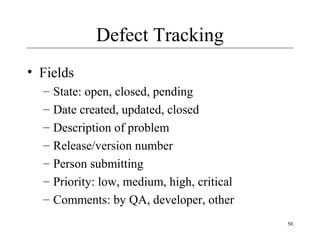

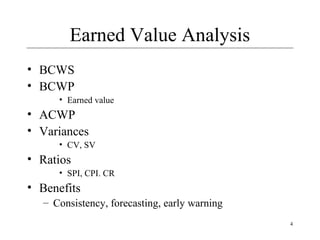

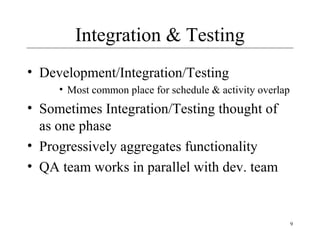

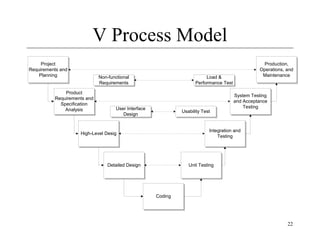

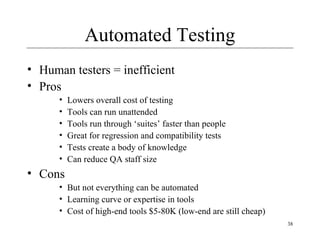

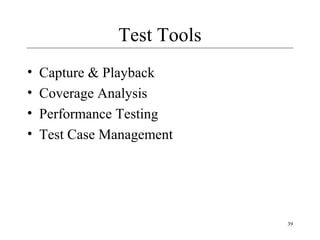

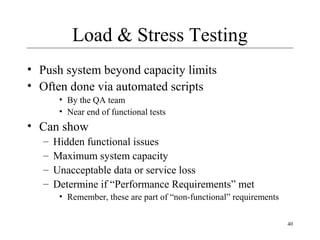

This document gives an overview of software integration and testing. It covers integration approaches such as top-down and bottom-up integration, and the main types of testing: unit, integration, system, and user acceptance testing. It also discusses test planning, tools, and environments, along with defect tracking, test metrics, and criteria for deciding when to stop testing.

![42

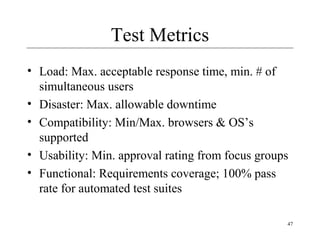

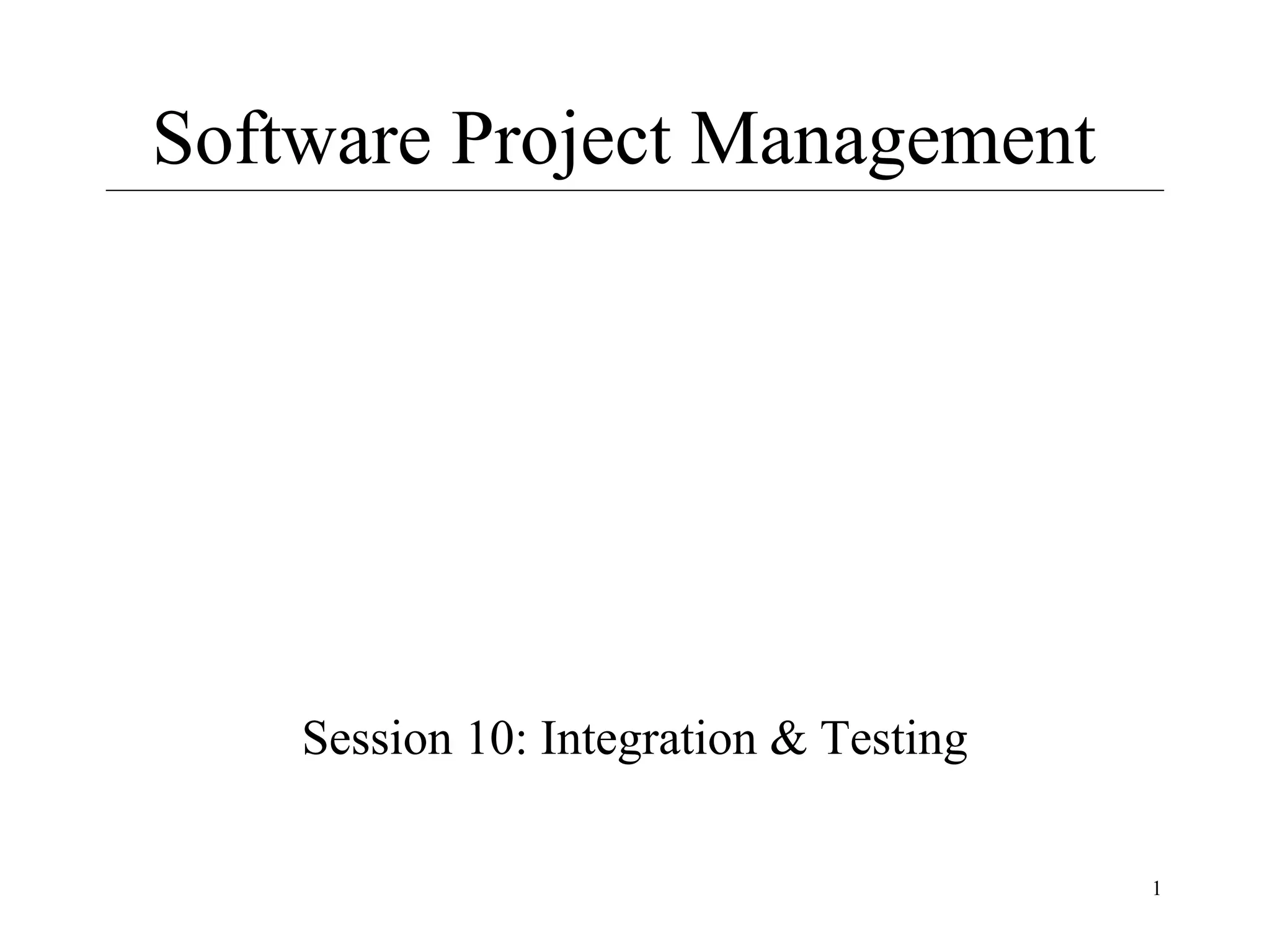

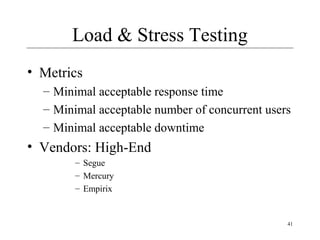

Performance Metrics

Source: Athens Consulting Group

Bad Good

Must support 500 users Must support 500

simultaneous users

10 second response time [Average|Maximum|90th

percentile] response time

must be X seconds

Must handle 1M hits per

day

Must handle peak load

of 28 page requests per

second](https://image.slidesharecdn.com/10-140315000450-phpapp01/85/Software-Project-Management-lecture-10-42-320.jpg)
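The "good" requirements above can be checked mechanically because each one names a statistic and a limit. The following is a minimal sketch of such a check, not something taken from the slides: the function names, the sample response times, and the 3-second limit are all hypothetical, and the nearest-rank percentile method is an assumption chosen for simplicity.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(response_times_s):
    """Return the three statistics named in the 'good' requirement."""
    return {
        "average": statistics.mean(response_times_s),
        "maximum": max(response_times_s),
        "p90": percentile(response_times_s, 90),
    }


def meets_requirement(response_times_s, metric, limit_s):
    """True if the chosen statistic is at or below the limit (the 'X seconds')."""
    return summarize(response_times_s)[metric] <= limit_s


# Hypothetical response times in seconds, including one slow outlier.
samples = [0.8, 1.1, 0.9, 1.4, 2.0, 0.7, 1.2, 9.5, 1.0, 1.3]
summary = summarize(samples)

# A daily total only fixes the average rate, not the peak rate.
average_hits_per_second = 1_000_000 / (24 * 60 * 60)

if __name__ == "__main__":
    print(summary)
    print("p90 within 3 s:", meets_requirement(samples, "p90", 3.0))
    print("maximum within 3 s:", meets_requirement(samples, "maximum", 3.0))
    print("average rate for 1M hits/day:", round(average_hits_per_second, 2))
```

With these sample values the same data passes a 3-second limit on the 90th percentile but fails it on the maximum, which is why a requirement such as "10 second response time" is ambiguous until the statistic is named. Likewise, 1M hits per day works out to an average of roughly 11.6 hits per second, which says nothing about the peak rate the system must sustain.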