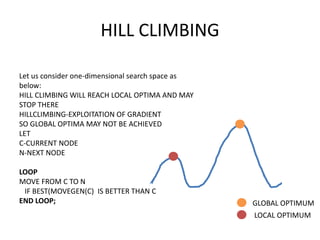

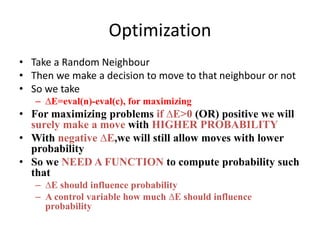

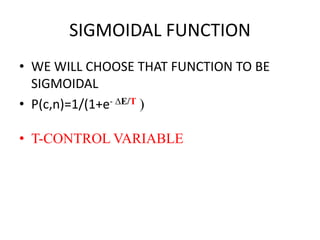

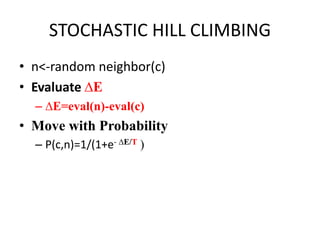

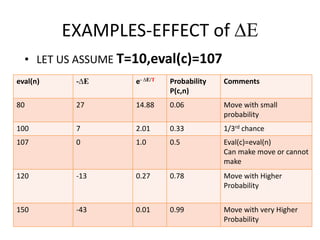

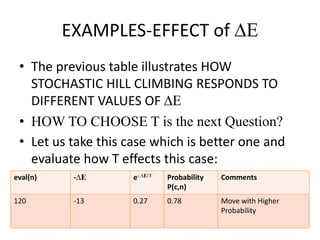

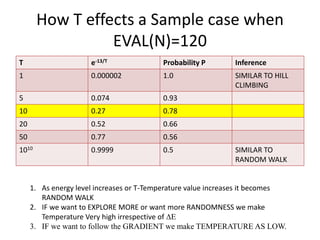

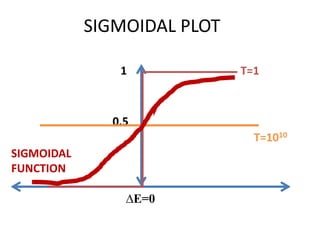

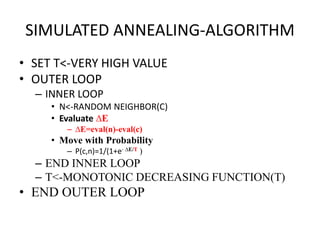

This document discusses simulated annealing, a stochastic optimization algorithm inspired by the physical annealing process. Unlike plain hill climbing, which accepts only improving moves and can therefore get stuck in local optima, simulated annealing randomly explores neighboring solutions and probabilistically accepts some moves that decrease fitness. A temperature parameter controls this acceptance probability: at high temperature worse moves are accepted often, which allows broad exploration, and as the temperature is lowered over time they are accepted less and less. Late in the run the search therefore behaves much like hill climbing, converging on better solutions after the earlier exploration has given it a chance to escape local optima.
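
The loop described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the method from the slides: the function name `simulated_annealing`, its parameters (`t_start`, `t_end`, `cooling`), the geometric cooling schedule, and the Metropolis-style acceptance rule `exp(delta / t)` are all choices made here for illustration. Fitness is treated as something to maximize, matching the summary's wording.

```python
import math
import random


def simulated_annealing(fitness, neighbor, start,
                        t_start=1.0, t_end=1e-3, cooling=0.995, rng=None):
    """Maximize `fitness` starting from `start`.

    Hypothetical helper for illustration:
    - `neighbor(x, rng)` returns a random solution near `x`.
    - The temperature is multiplied by `cooling` each step (geometric
      schedule, an assumption) until it drops below `t_end`.
    """
    rng = rng or random.Random()
    current, current_fit = start, fitness(start)
    best, best_fit = current, current_fit
    t = t_start
    while t > t_end:
        candidate = neighbor(current, rng)
        candidate_fit = fitness(candidate)
        delta = candidate_fit - current_fit  # negative means a worse move
        # Always accept improvements; accept a worse move with probability
        # exp(delta / t), which shrinks as the temperature t falls.
        if delta >= 0 or rng.random() < math.exp(delta / t):
            current, current_fit = candidate, candidate_fit
            if current_fit > best_fit:
                best, best_fit = current, current_fit
        t *= cooling
    return best, best_fit


if __name__ == "__main__":
    # Toy problem (assumed): a bumpy 1-D function with many local optima.
    def bumpy(x):
        return -(x - 3.0) ** 2 + 2.0 * math.cos(5.0 * x)

    def step(x, rng):
        return x + rng.uniform(-0.5, 0.5)

    print(simulated_annealing(bumpy, step, start=10.0, rng=random.Random(0)))
```

Tracking `best` separately from `current` is a common design choice: because the search may deliberately move to worse solutions, the final `current` is not guaranteed to be the best one visited.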