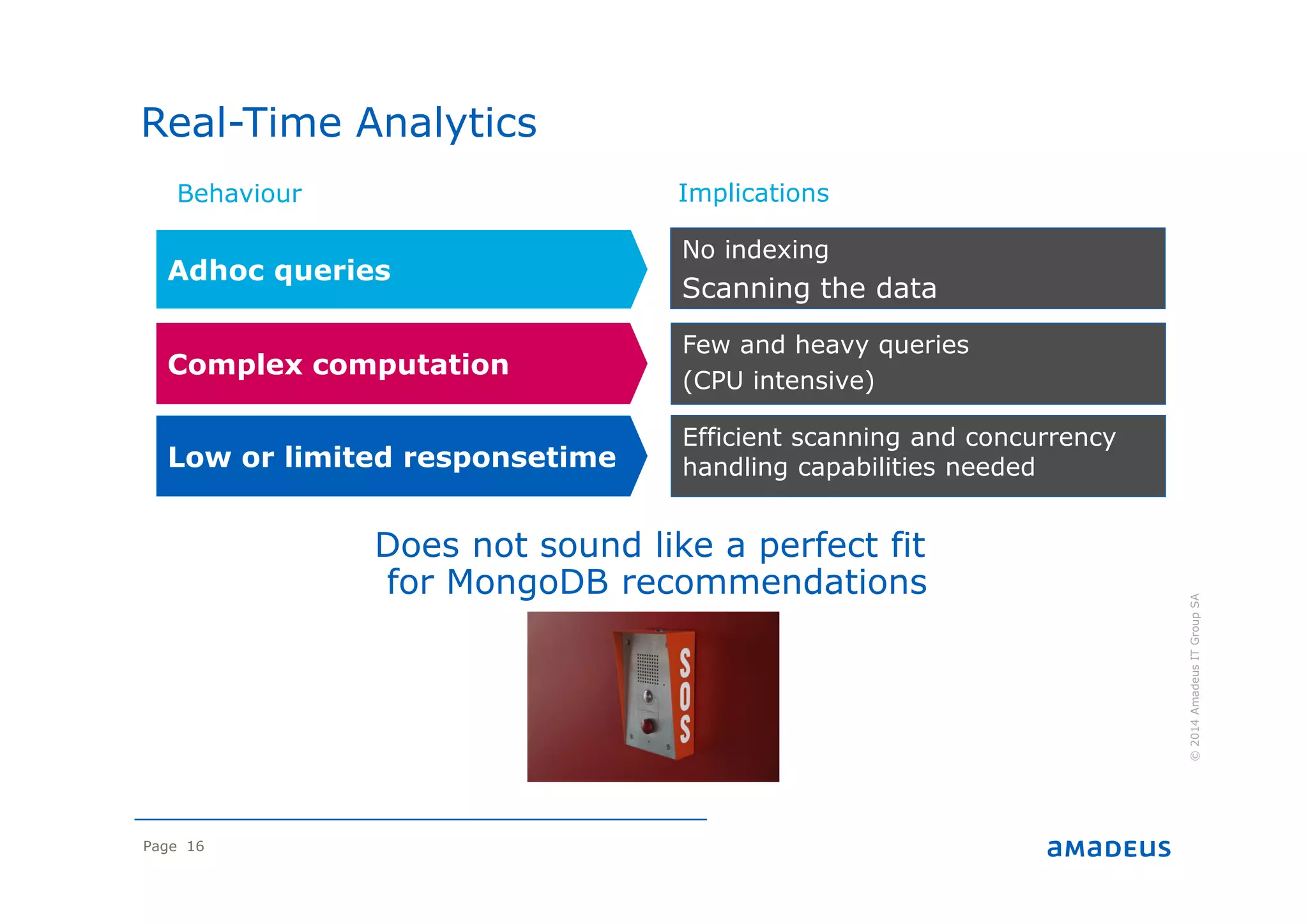

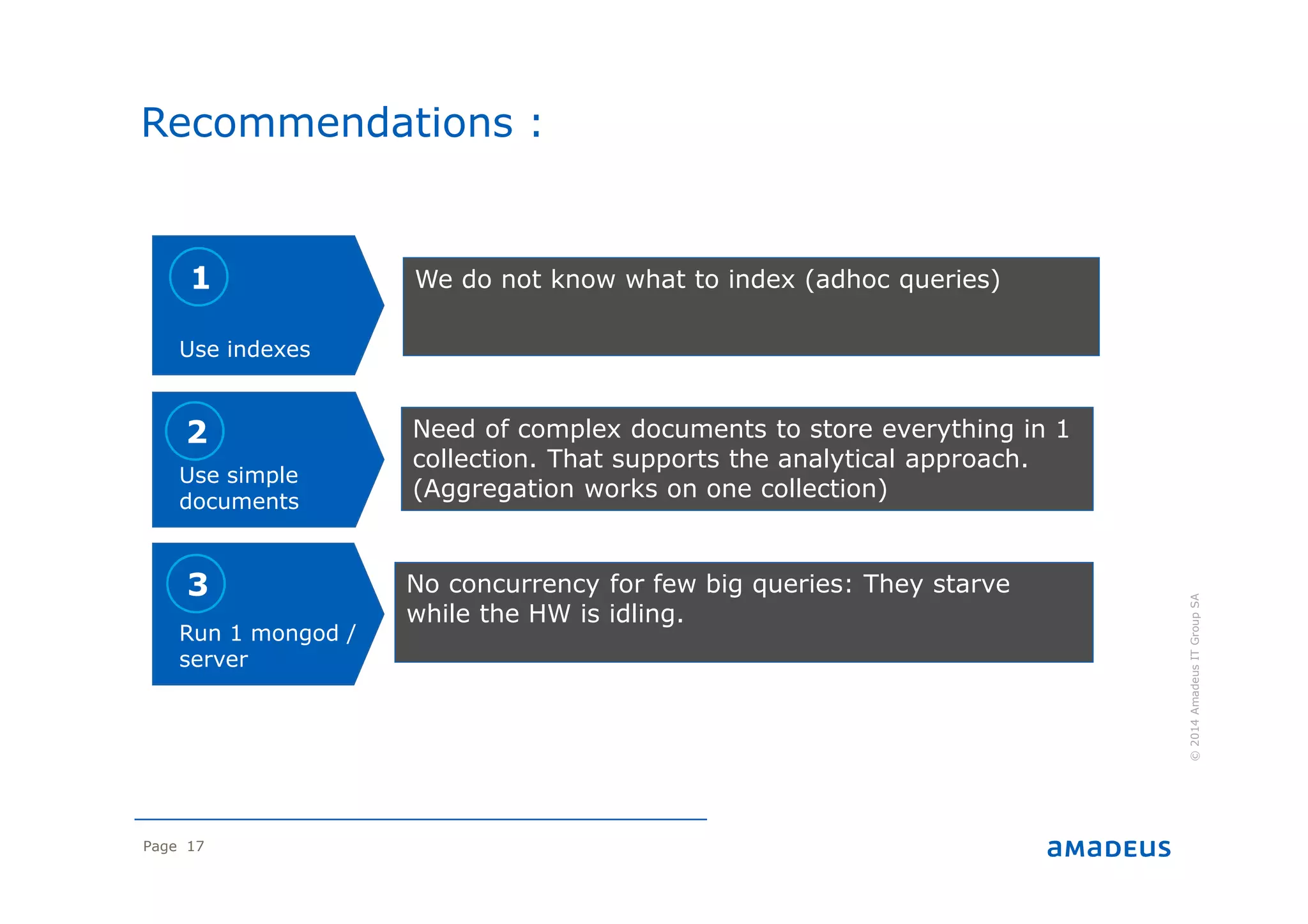

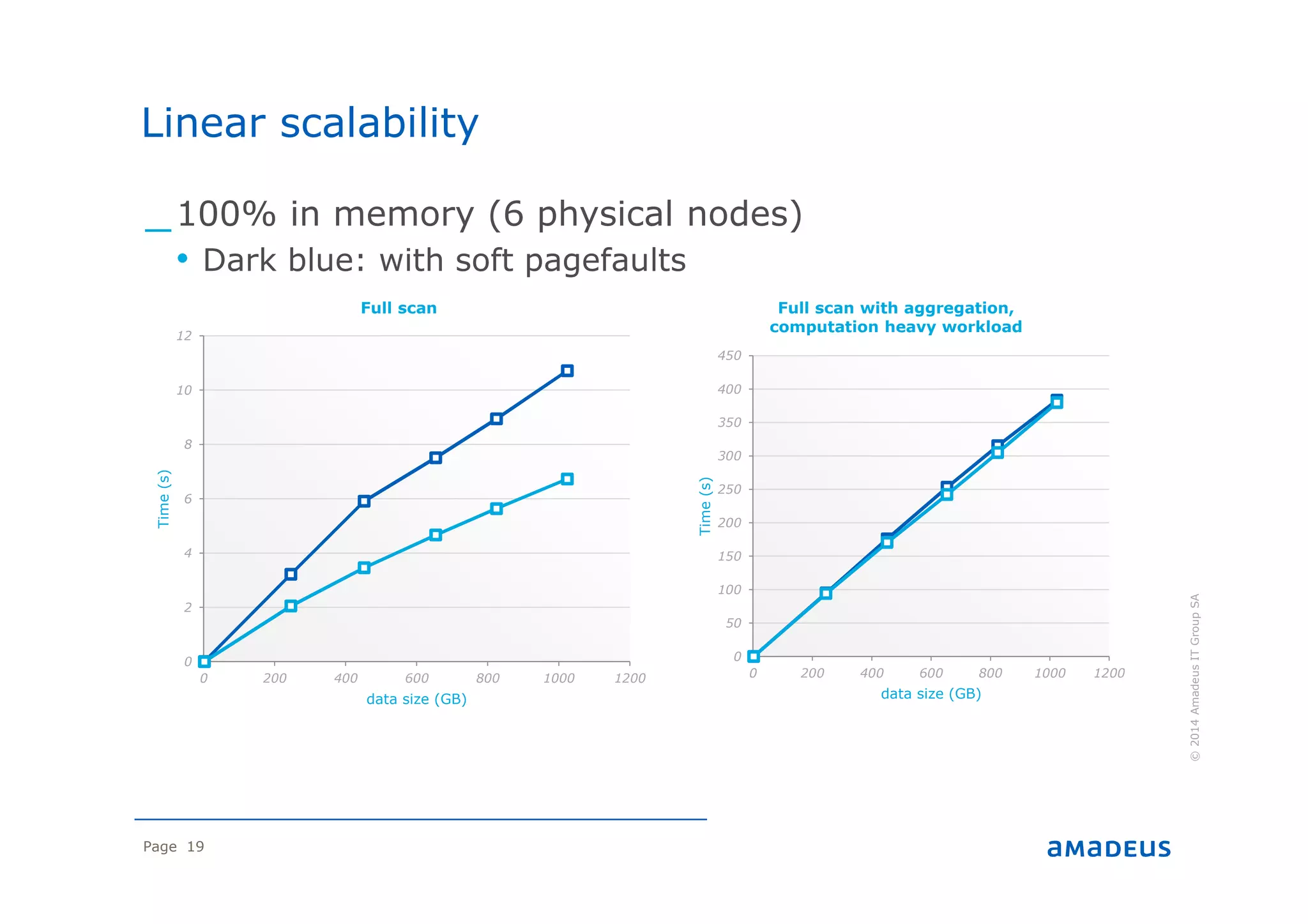

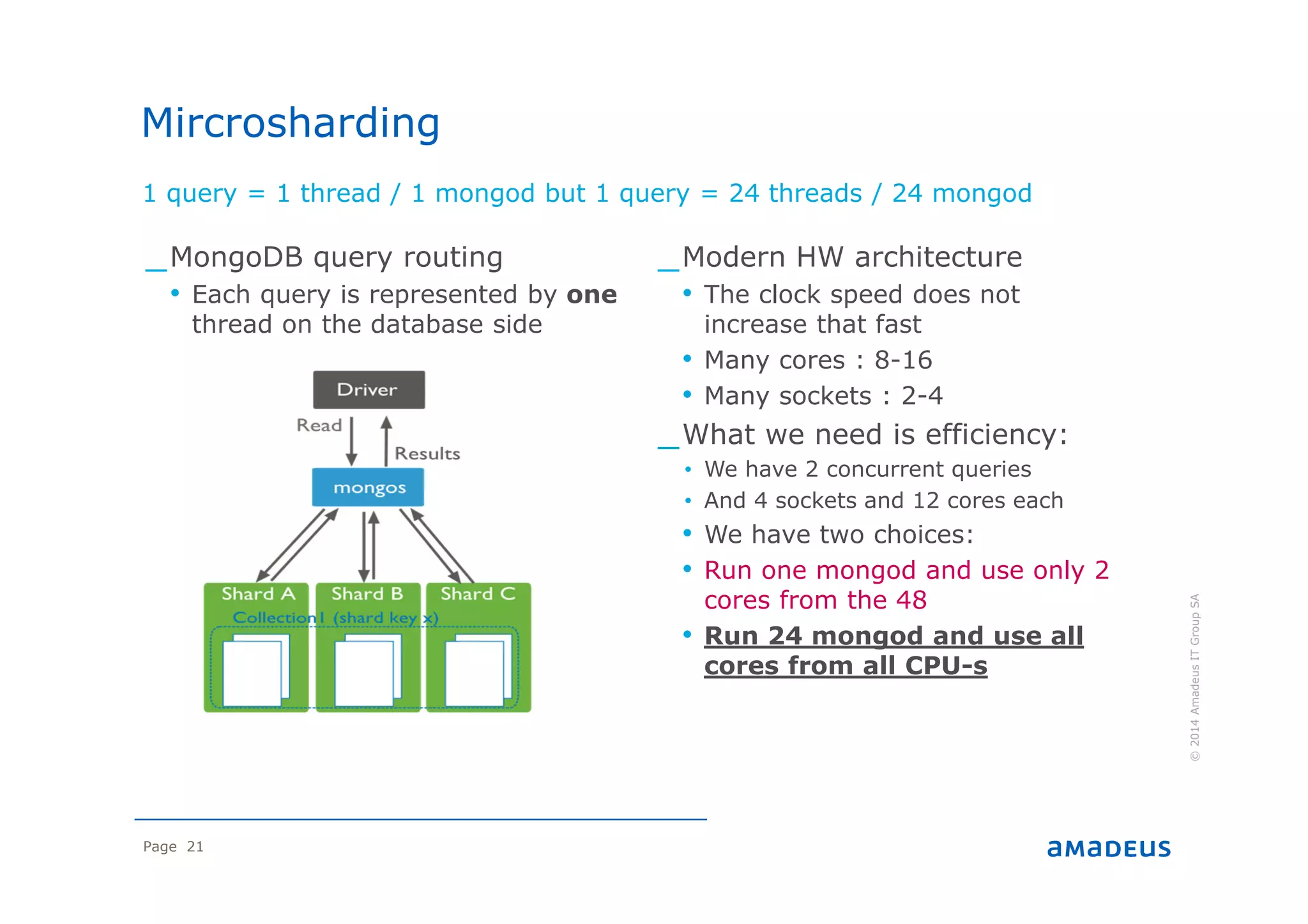

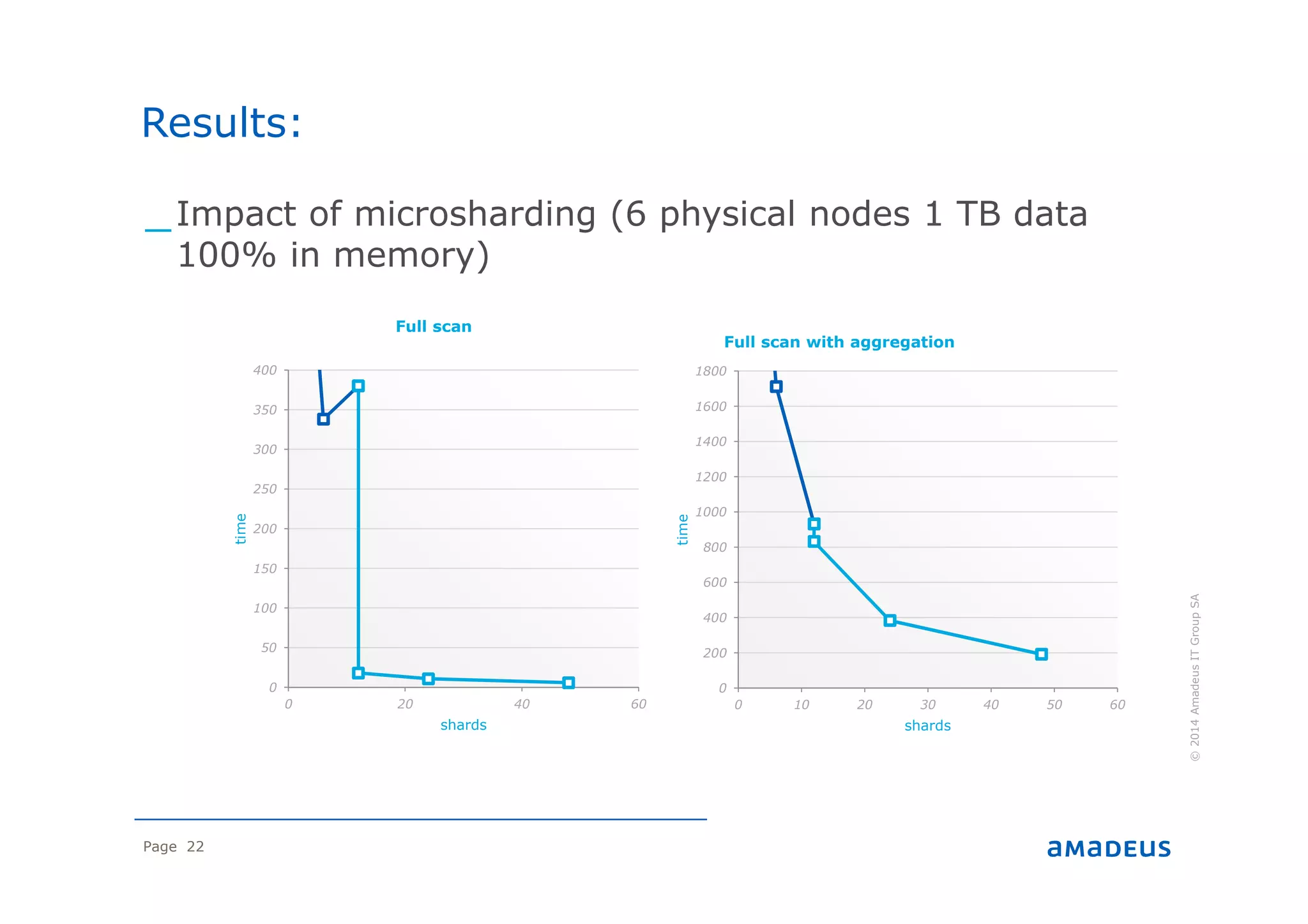

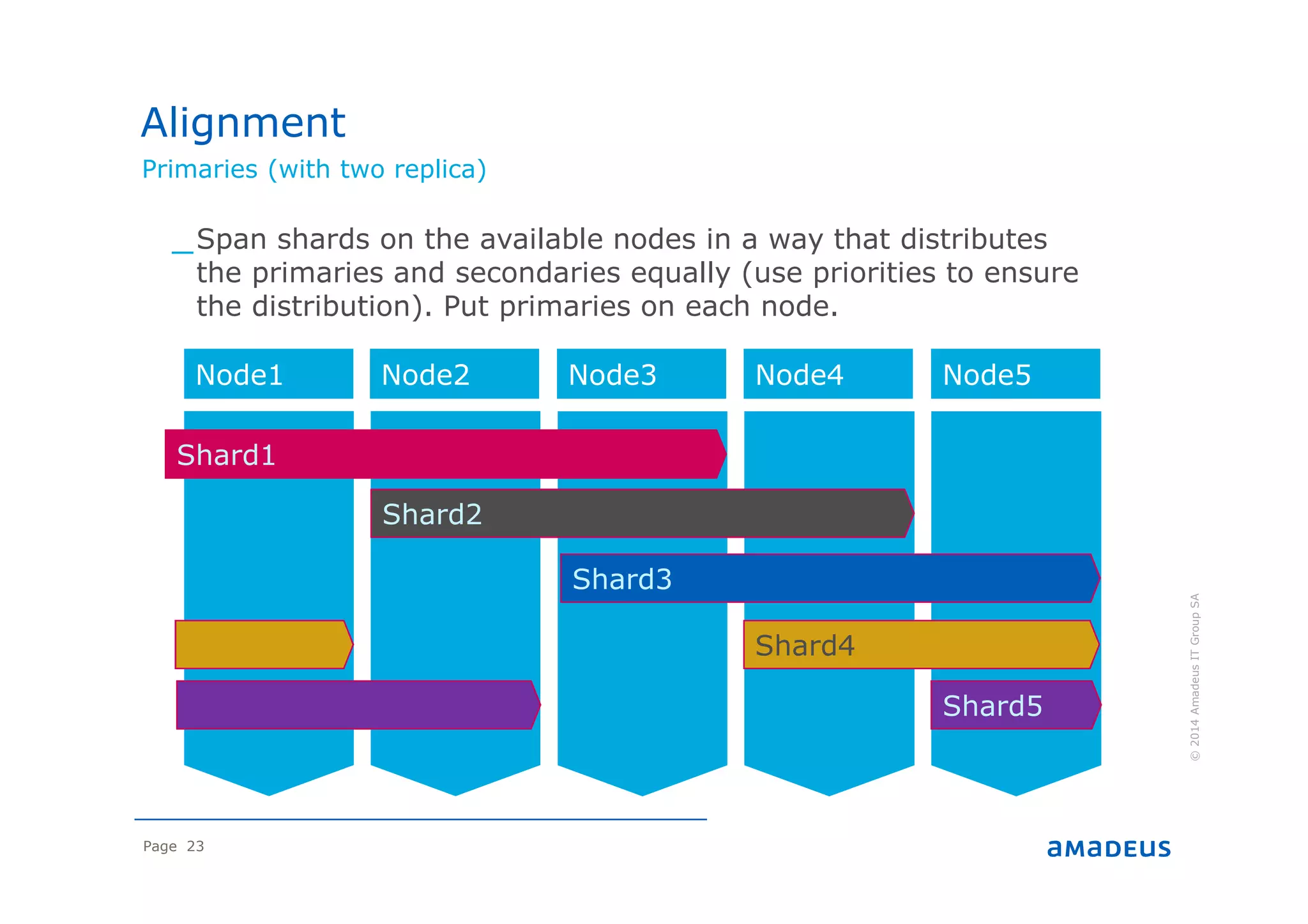

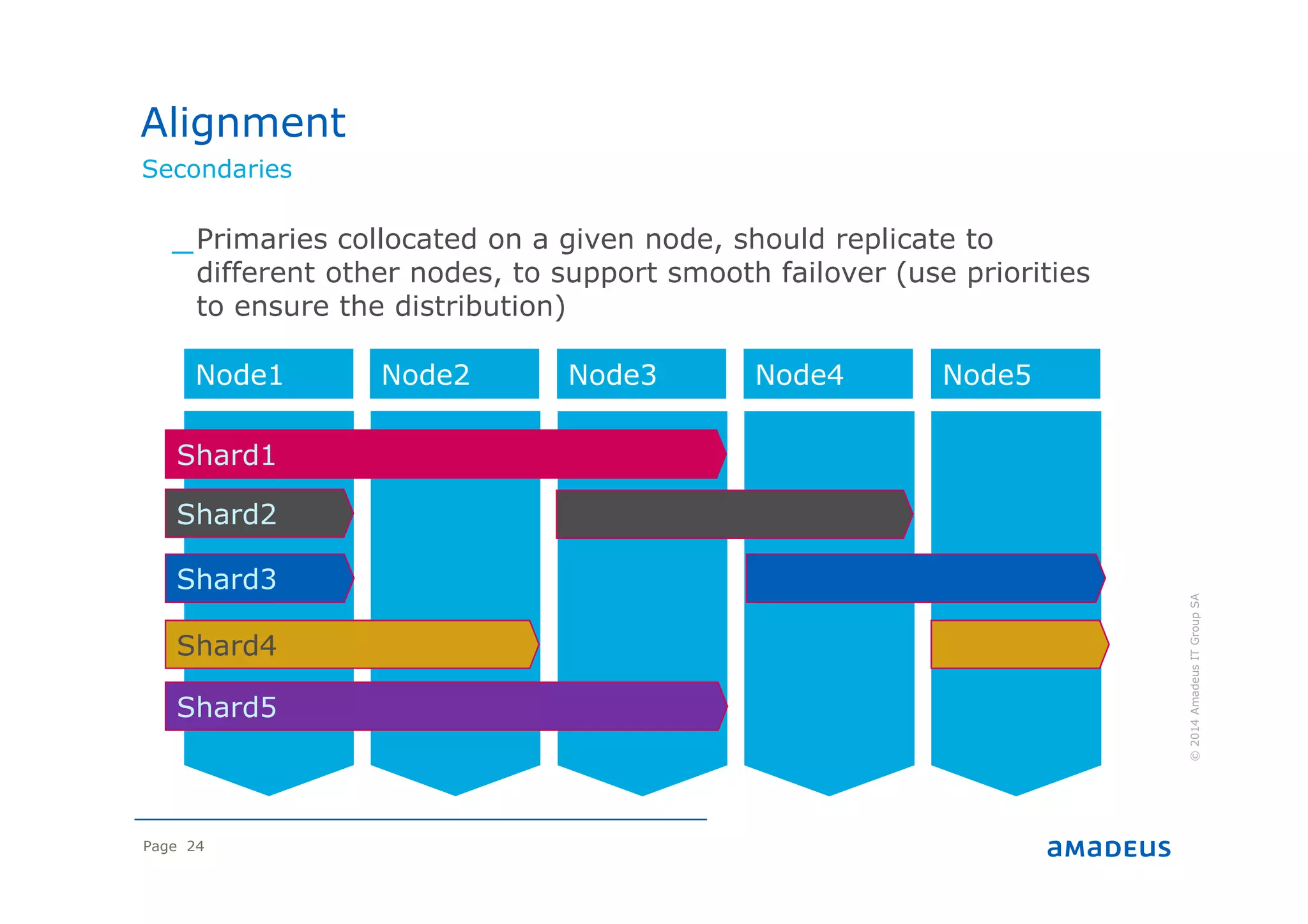

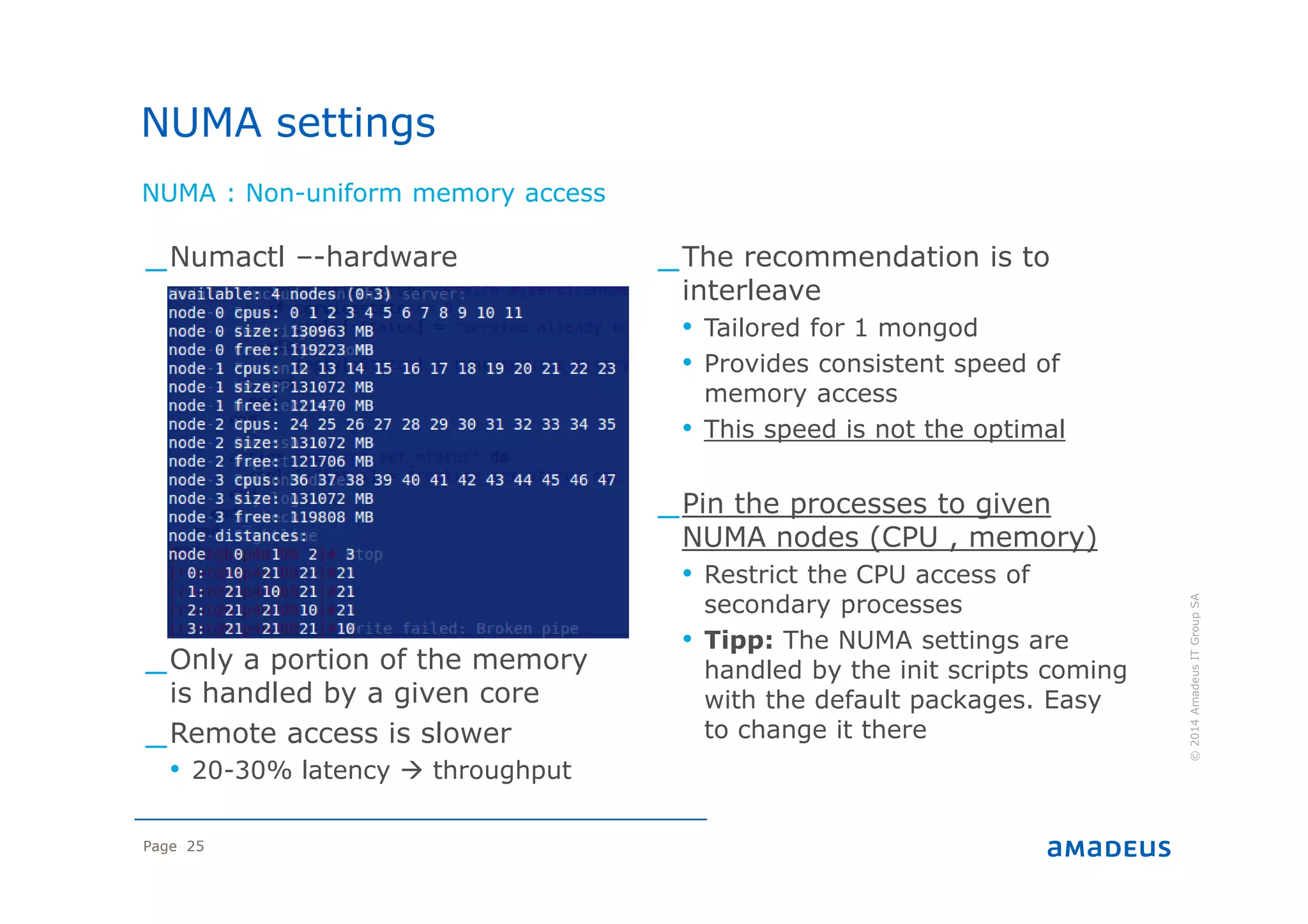

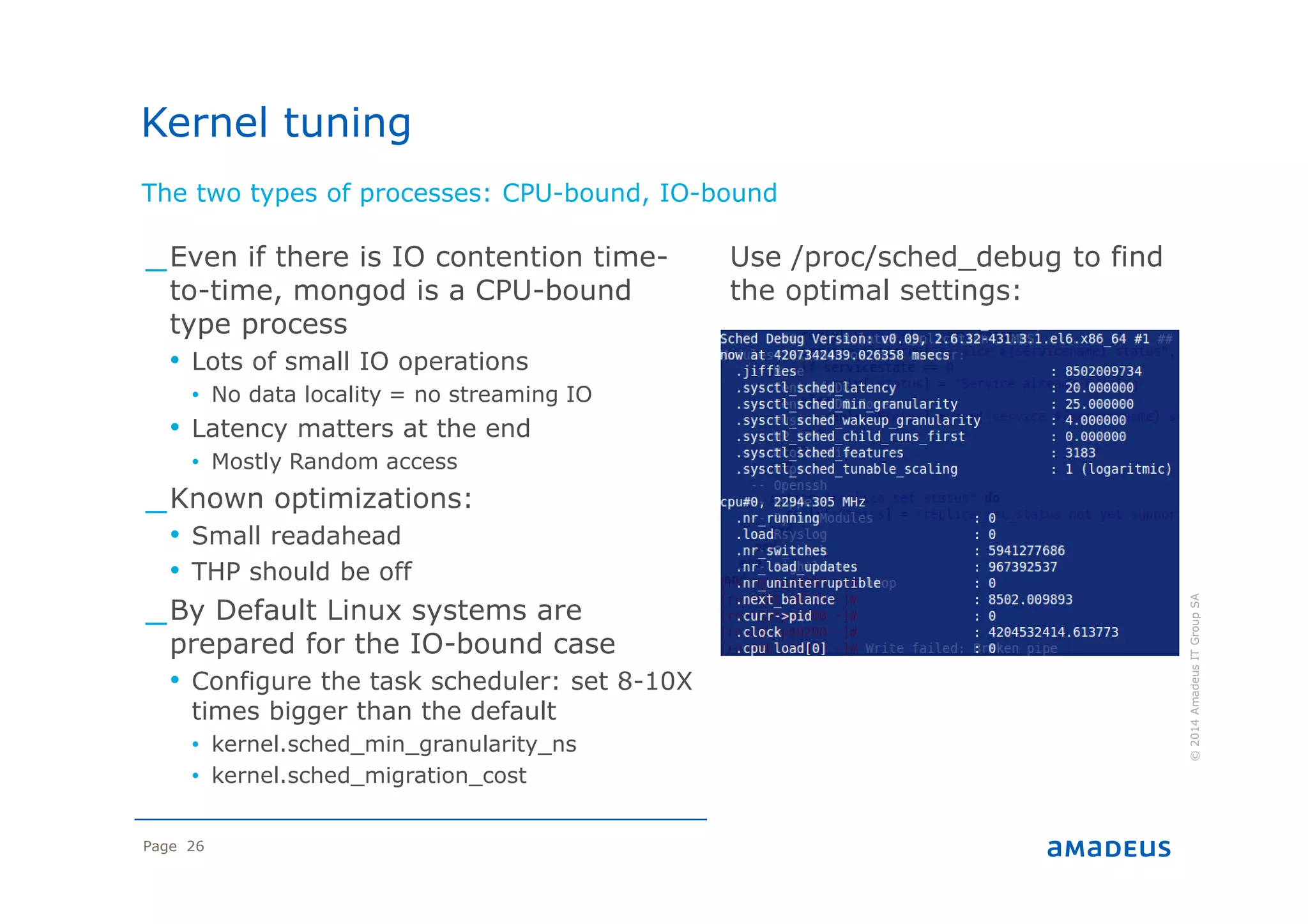

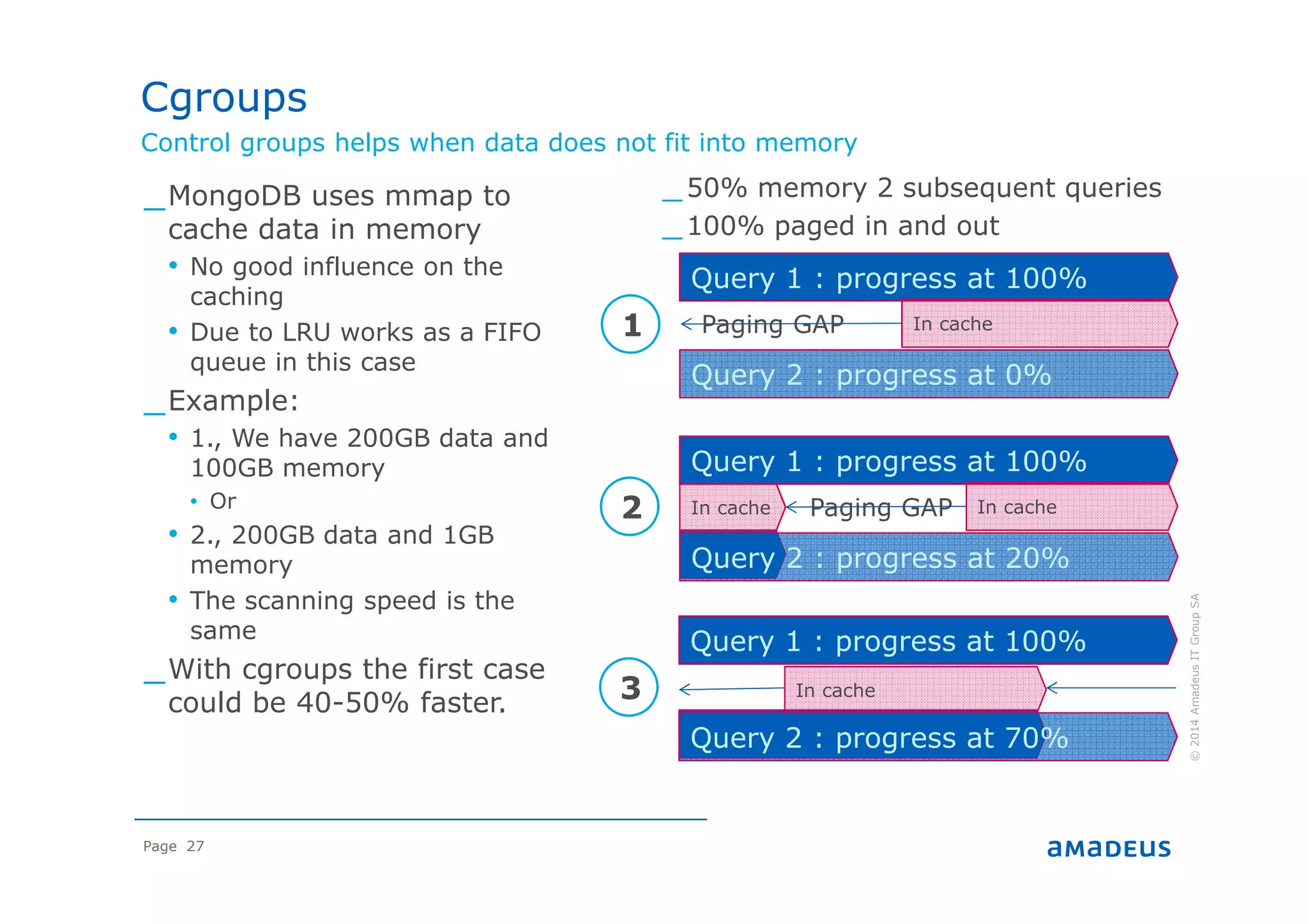

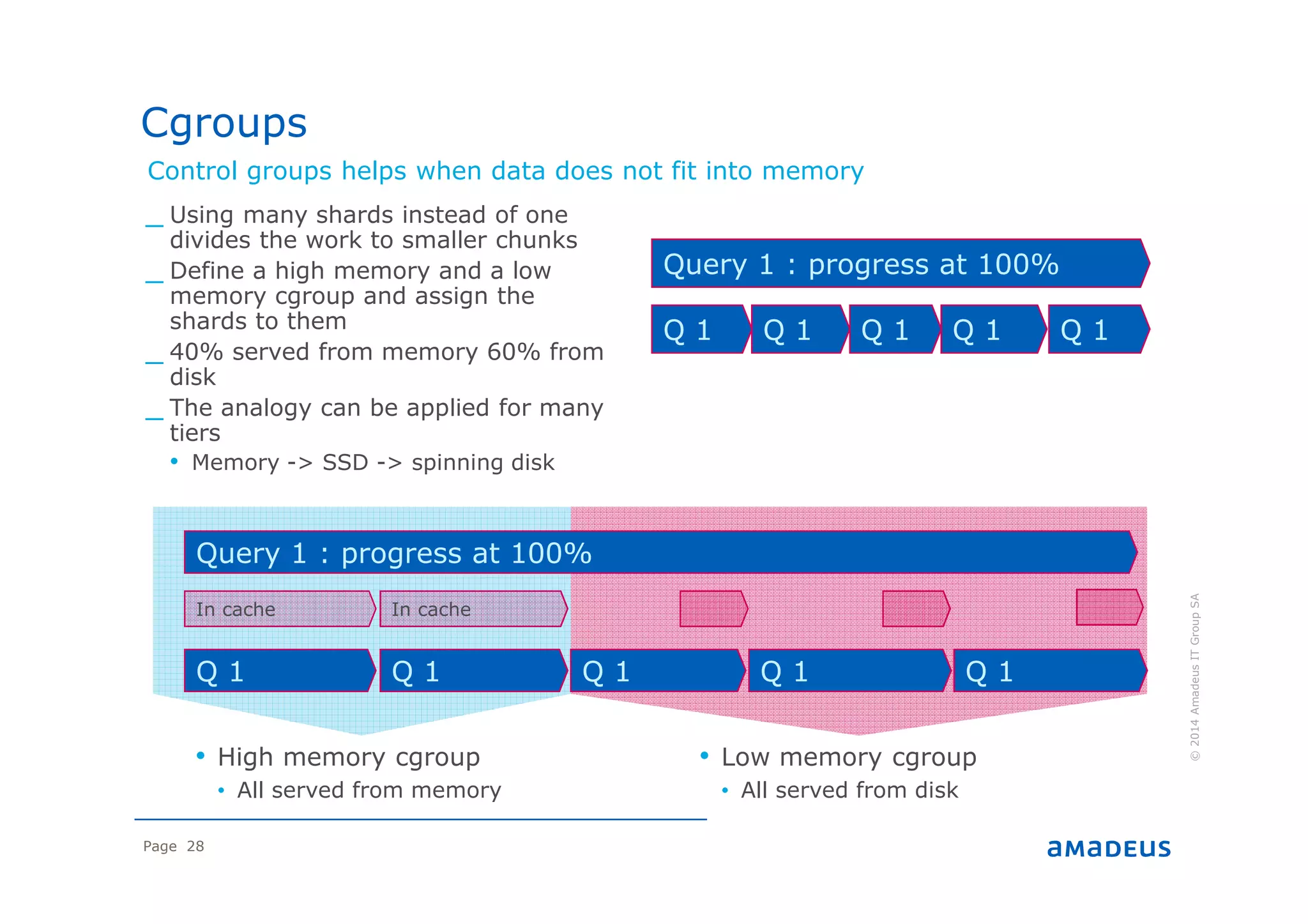

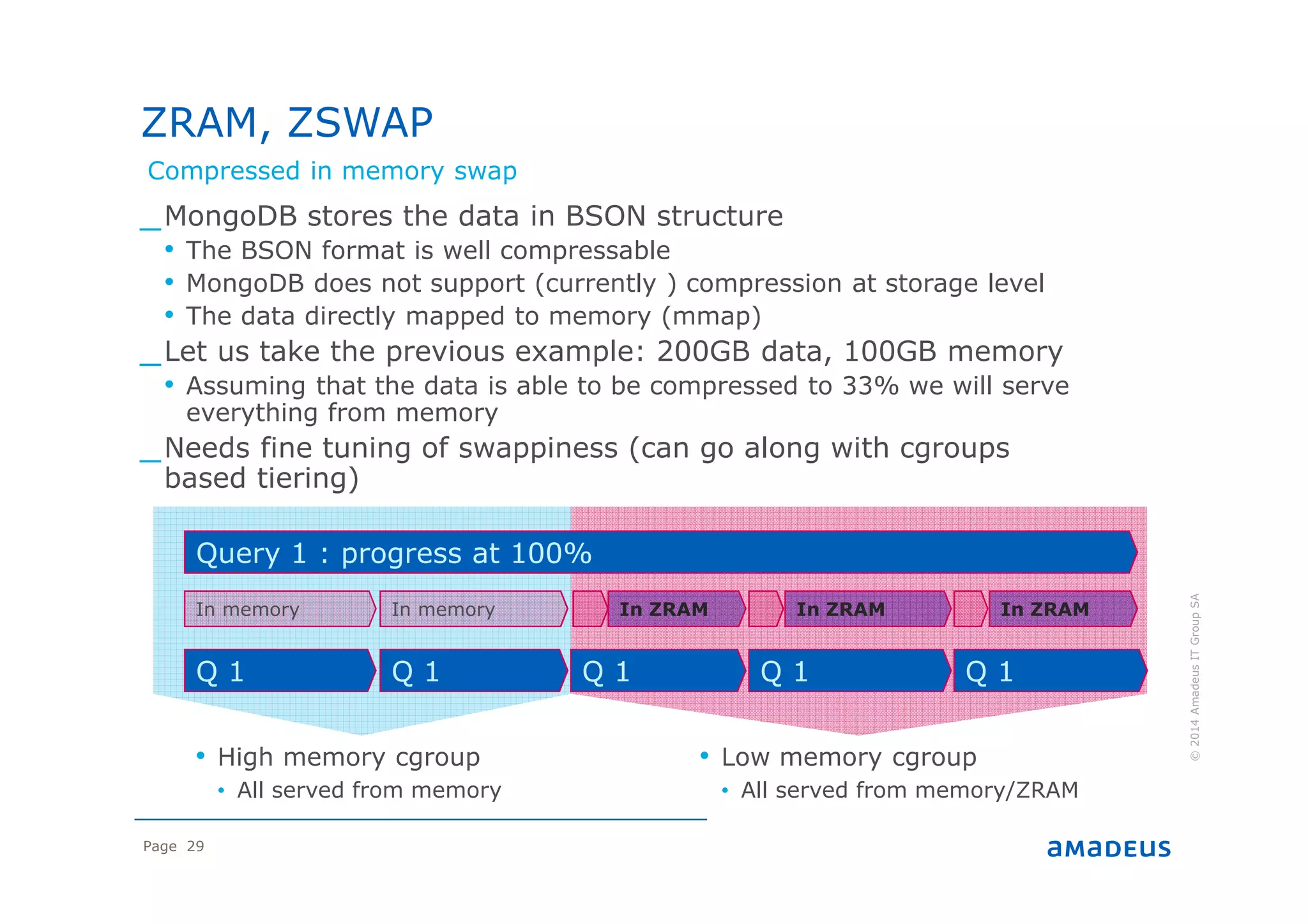

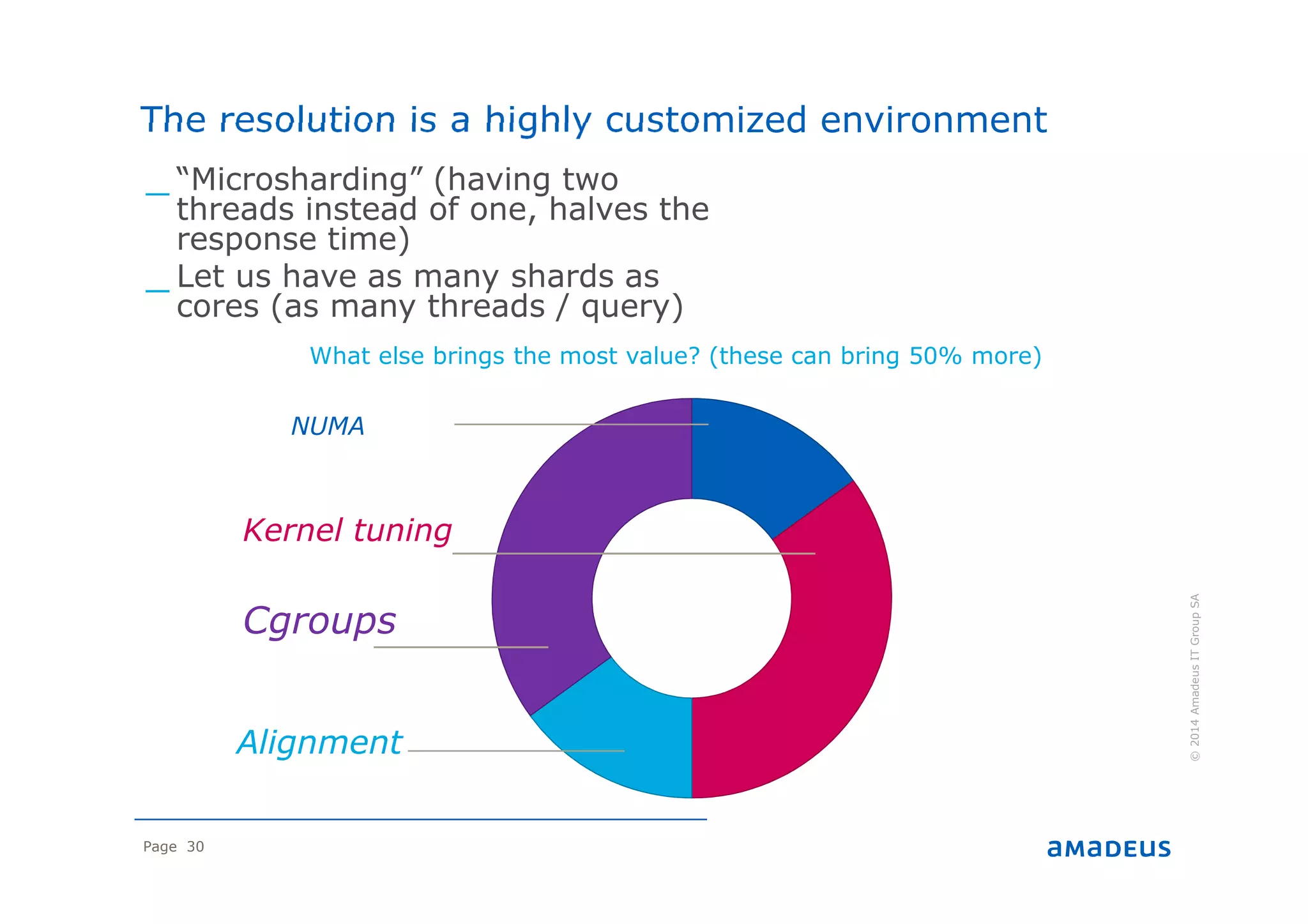

1. The document discusses how Amadeus customized MongoDB for real-time analytics workloads. It covers how they implemented microsharding, optimized OS and kernel settings, aligned shards and replicas, and used cgroups and compressed memory to improve performance.

2. These customizations let MongoDB use their hardware efficiently and improved concurrency and scaling. Although MongoDB was not a perfect fit out of the box, with these optimizations it was able to meet their enterprise requirements for real-time analytics.

3. The document concludes that, once customized, MongoDB is a good fit for their analytical workloads, and that future MongoDB features may improve its capabilities further.
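The summary does not describe how Amadeus actually laid out its microshards, so the following is only a hypothetical sketch under stated assumptions. It assumes that "microsharding" means running many small shards on each physical host, that each shard is a replica set with one primary, and that "aligning" shards and replicas means keeping every copy of a shard on a different host while spreading primaries evenly. The function name `place_microshards` and the host names are illustrative, not taken from the slides.

```python
from collections import Counter


def place_microshards(hosts, shards_per_host, replicas):
    """Hypothetical layout: {shard_name: [primary_host, secondary_host, ...]}.

    Assumption (not from the source): shards are placed by simple rotation,
    which is one possible scheme among many.
    """
    if replicas > len(hosts):
        # Two copies of the same shard on one host would defeat replication.
        raise ValueError("each replica of a shard needs its own host")
    n_shards = len(hosts) * shards_per_host
    layout = {}
    for i in range(n_shards):
        # Start shard i on host i mod H so primaries (replica 0) rotate evenly,
        # then offset each further replica by one host so copies never collide.
        layout[f"shard{i:02d}"] = [hosts[(i + r) % len(hosts)] for r in range(replicas)]
    return layout


if __name__ == "__main__":
    layout = place_microshards(["node-a", "node-b", "node-c"], shards_per_host=4, replicas=3)
    primaries = Counter(members[0] for members in layout.values())
    processes = Counter(h for members in layout.values() for h in members)
    print("primaries per host:", dict(primaries))
    print("mongod processes per host:", dict(processes))
```

With three hosts, four shards per host and three replicas, this rotation gives each host the same number of primaries and the same number of mongod processes, which is the kind of balance that per-process CPU and memory limits (for example via cgroups) would then be applied to.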