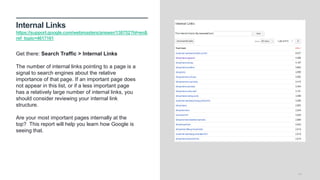

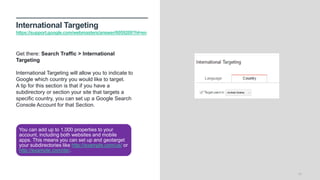

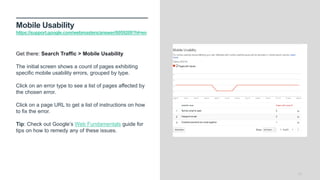

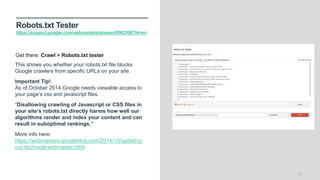

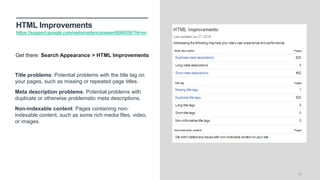

The document outlines best practices for using Google Search Console, with an emphasis on monitoring site performance and optimizing for user engagement. It covers search analytics, the crawl sections, and mobile usability, and gives tips on improving click-through rates and managing site errors. It also explains how to access the different features and how to prepare for future lessons on SEO tools.