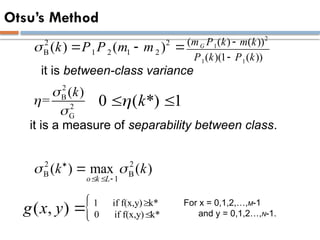

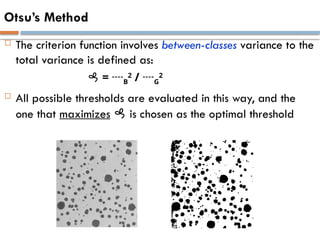

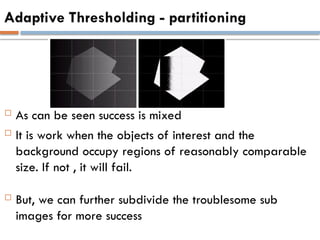

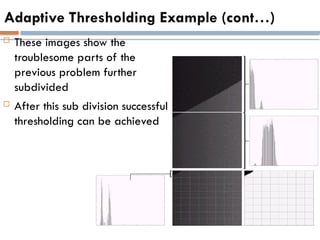

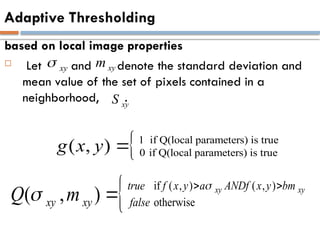

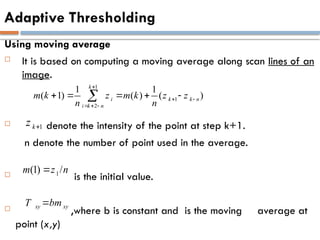

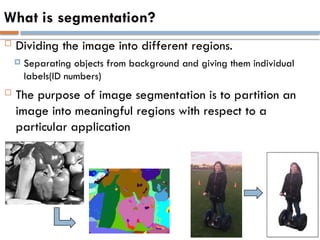

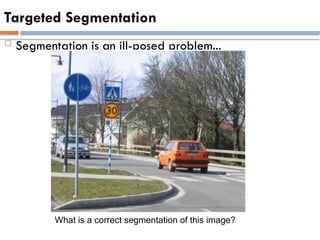

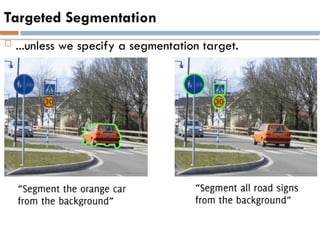

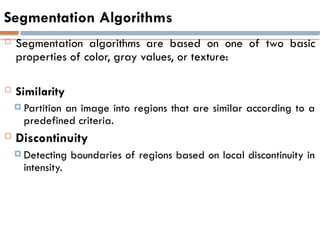

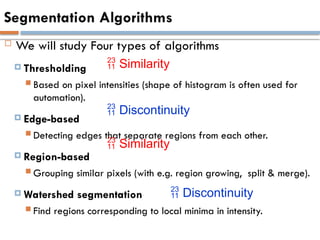

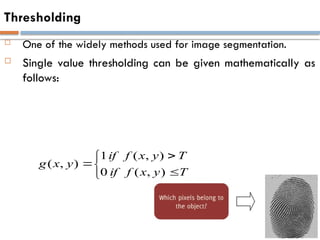

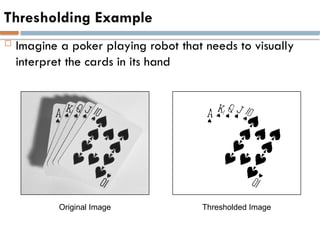

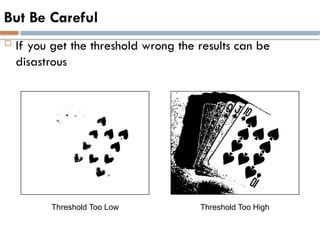

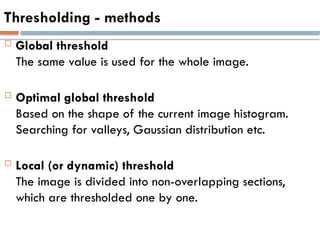

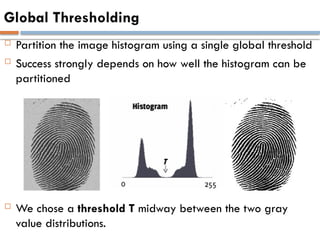

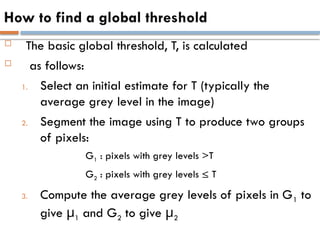

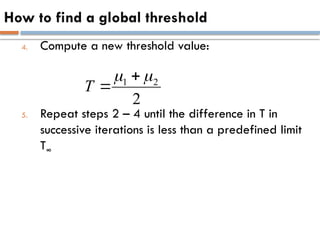

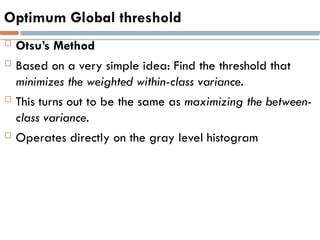

The document discusses digital image processing, focusing on image segmentation techniques such as thresholding, which divides images into meaningful regions for further analysis. It highlights the role of both low-level and high-level processing in understanding and interpreting images, and emphasizes the importance and challenges of accurate segmentation in image analysis tasks. Various segmentation algorithms are outlined, including global and adaptive thresholding methods, along with Otsu’s method for determining optimal thresholds.

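![Otsu’s Method](https://image.slidesharecdn.com/4-1-241113170451-3da20c4e/85/Segmentation-is-preper-concept-to-hands-pptx-24-320.jpg)

Otsu’s Method

The intensity levels are $\{0, 1, 2, \dots, L-1\}$, where $L$ is the number of gray levels.

$M \times N$ is the total number of pixels, and $n_i$ is the number of pixels with intensity $i$:

$$MN = n_0 + n_1 + n_2 + \dots + n_{L-1}$$

Select a threshold $T(k) = k$, $0 < k < L-1$, and use it to classify the pixels into two classes: $C_1$, with intensity in the range $[0, k]$, and $C_2$, with intensity in the range $[k+1, L-1]$.

The class probabilities are

$$P_1(k) = \sum_{i=0}^{k} p_i$$

$$P_2(k) = \sum_{i=k+1}^{L-1} p_i = 1 - P_1(k)$$

With class means $m_1$, $m_2$ and global mean $m_G$, they satisfy

$$P_1 m_1 + P_2 m_2 = m_G, \qquad P_1 + P_2 = 1$$

The global variance is

$$\sigma_G^2 = \sum_{i=0}^{L-1} (i - m_G)^2 \, p_i$$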
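The quantities on the Otsu's Method slide can be turned into a short NumPy sketch. It is illustrative only and rests on assumptions that the extracted slide text does not spell out: the normalized histogram is taken as $p_i = n_i / MN$, and the threshold is chosen by maximizing the between-class variance $\sigma_B^2(k) = \dfrac{(m_G P_1(k) - m(k))^2}{P_1(k)\,P_2(k)}$, where $m(k) = \sum_{i=0}^{k} i\,p_i$ is the cumulative mean. This is the usual textbook formulation of Otsu's criterion. The function name `otsu_threshold` and the `levels` parameter are hypothetical, and the input is assumed to be an integer image with values in $[0, L-1]$.

```python
import numpy as np

def otsu_threshold(image, levels=256):
    """Return the k in {0, ..., levels-1} that maximizes the between-class variance."""
    # n_i: number of pixels with intensity i (assumes integer values in [0, levels-1])
    n = np.bincount(image.ravel(), minlength=levels).astype(np.float64)
    p = n / n.sum()                      # assumed normalization: p_i = n_i / (M*N)
    i = np.arange(levels)

    P1 = np.cumsum(p)                    # P1(k) = sum_{i=0}^{k} p_i
    P2 = 1.0 - P1                        # P2(k) = 1 - P1(k)
    m = np.cumsum(i * p)                 # cumulative mean m(k)
    mG = m[-1]                           # global mean

    # Between-class variance, defined only where both classes are non-empty.
    denom = P1 * P2
    valid = denom > 1e-12
    sigma_b2 = np.zeros(levels)
    sigma_b2[valid] = (mG * P1[valid] - m[valid]) ** 2 / denom[valid]

    # If several k tie, argmax returns the smallest one.
    return int(np.argmax(sigma_b2))

if __name__ == "__main__":
    # Toy bimodal image: half the pixels at 50, half at 200.
    img = np.array([[50] * 4 + [200] * 4] * 4, dtype=np.uint8)
    k = otsu_threshold(img)
    mask = img > k                       # pixels assigned to class C2
    print(k, int(mask.sum()))
```

Pixels with intensity at most `k` fall in $C_1$ and the rest in $C_2$, matching the class ranges $[0, k]$ and $[k+1, L-1]$. Using cumulative sums evaluates every candidate threshold in a single pass over the histogram instead of recomputing the class statistics for each $k$.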