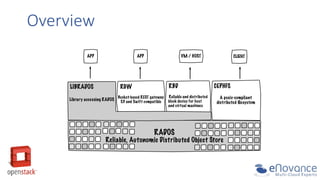

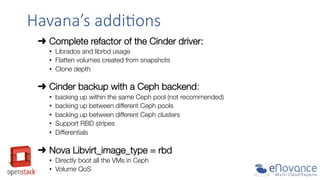

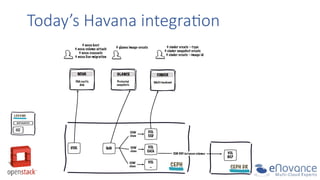

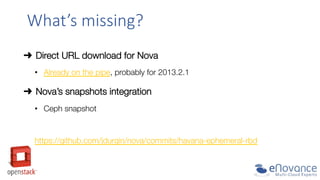

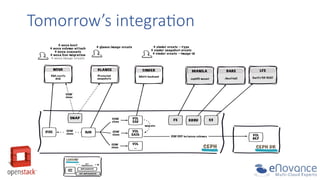

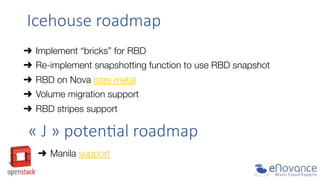

Ceph is a unified distributed storage system that integrates with OpenStack and offers self-management, painless scaling, and efficient data placement. The slides trace its development, particularly during the Havana release cycle, and highlight enhancements such as the refactored Cinder driver and new backup capabilities. The roadmap includes tighter snapshot integration, volume migration, and support for additional OpenStack components.