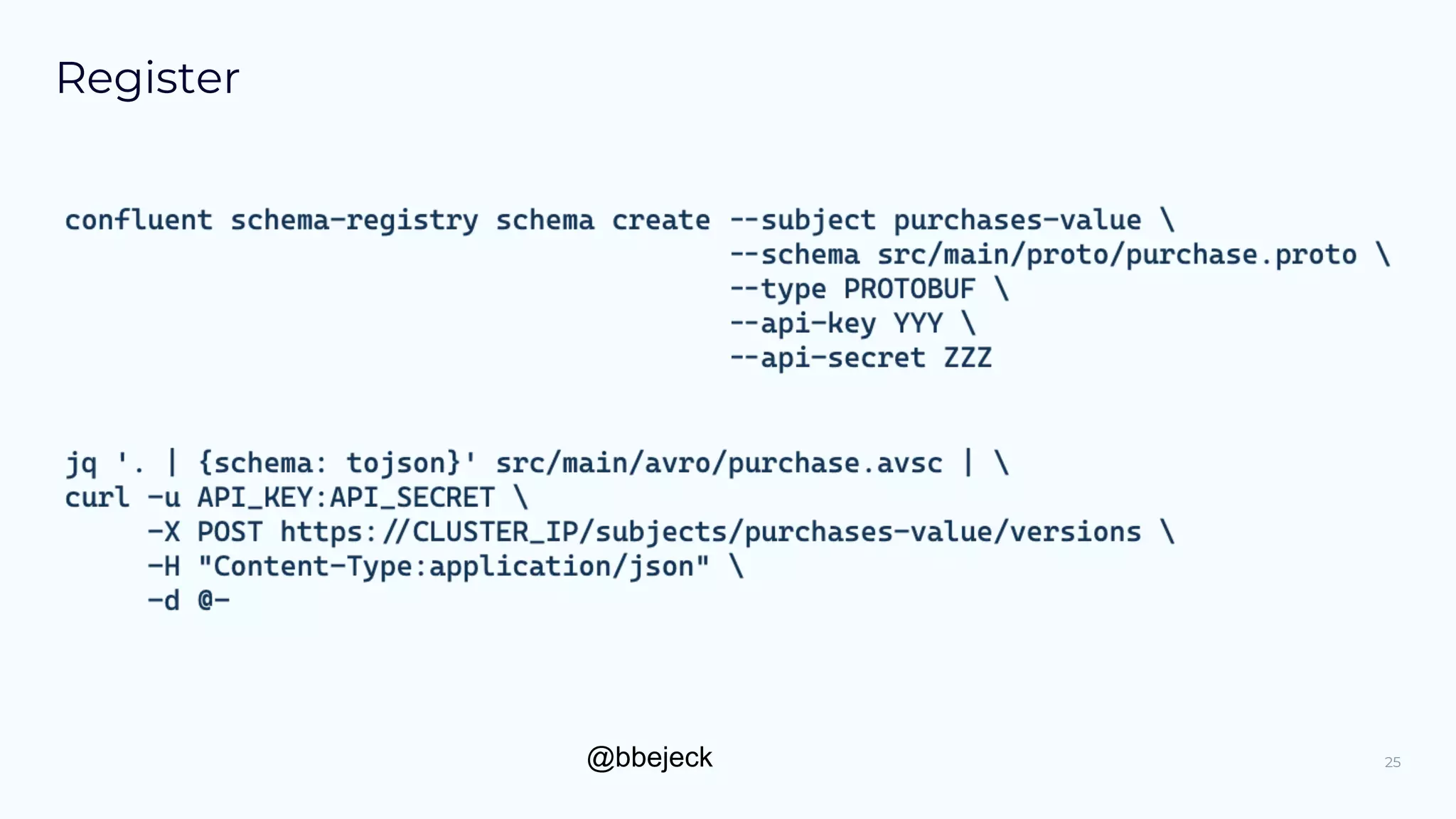

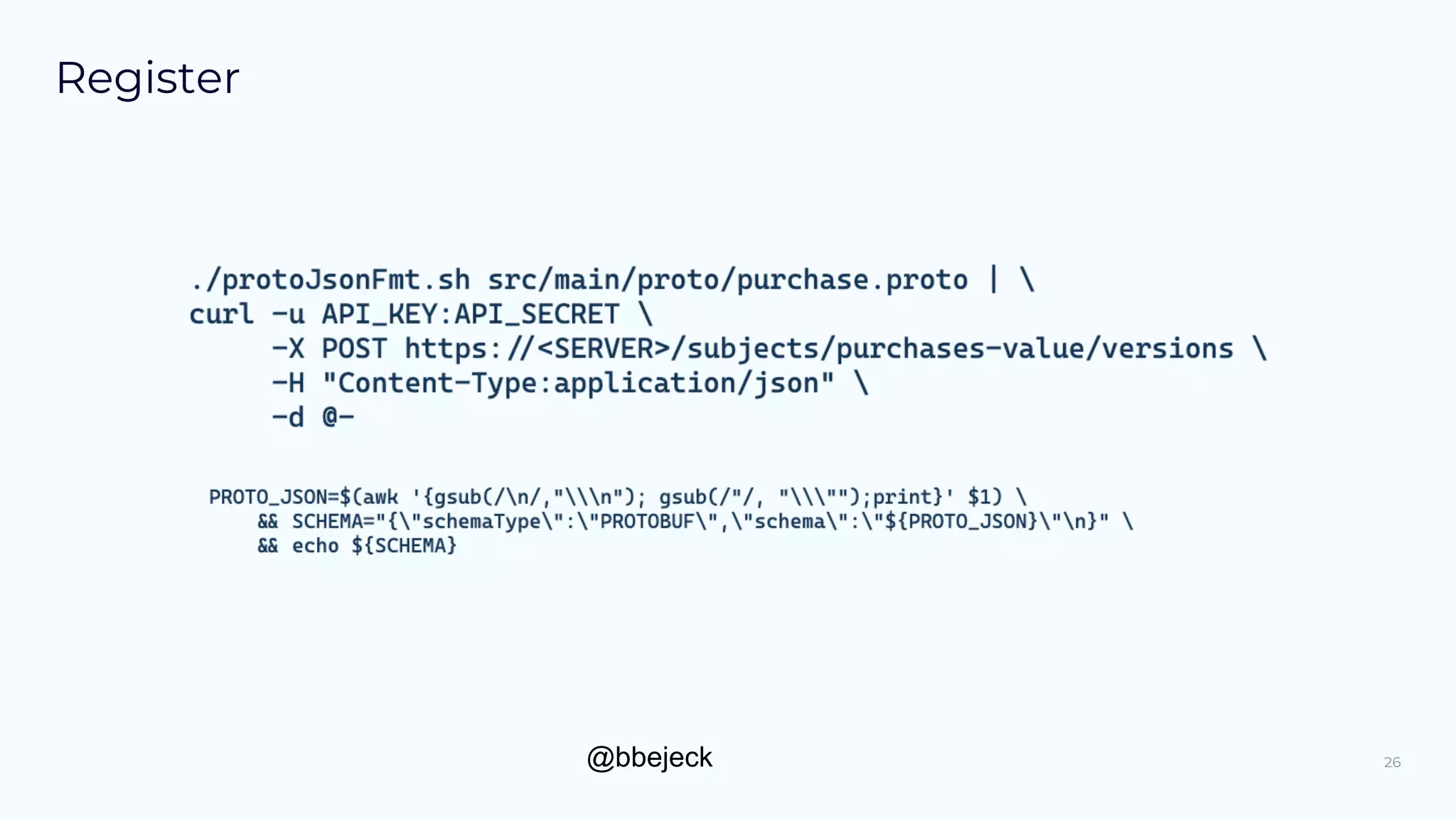

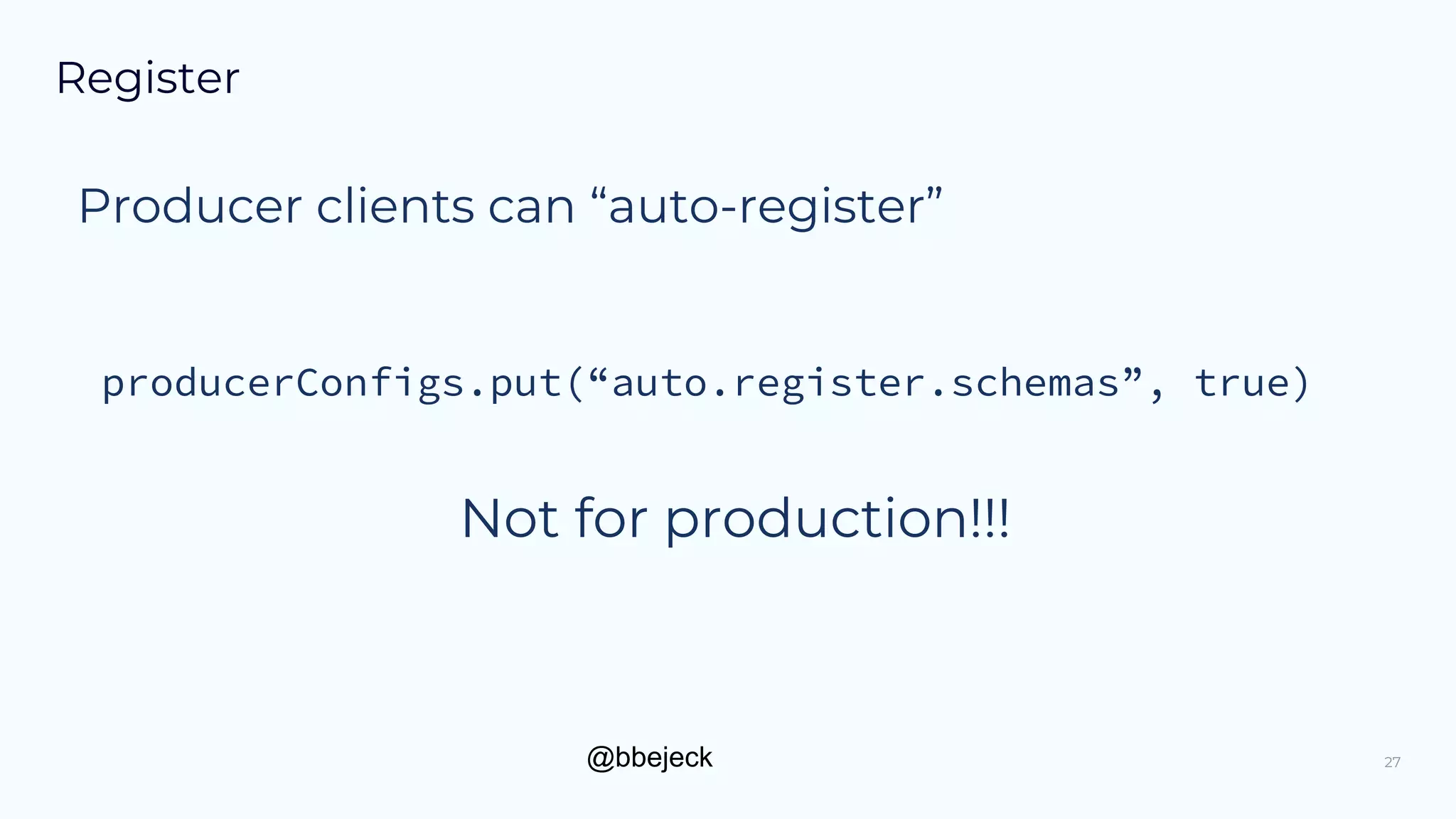

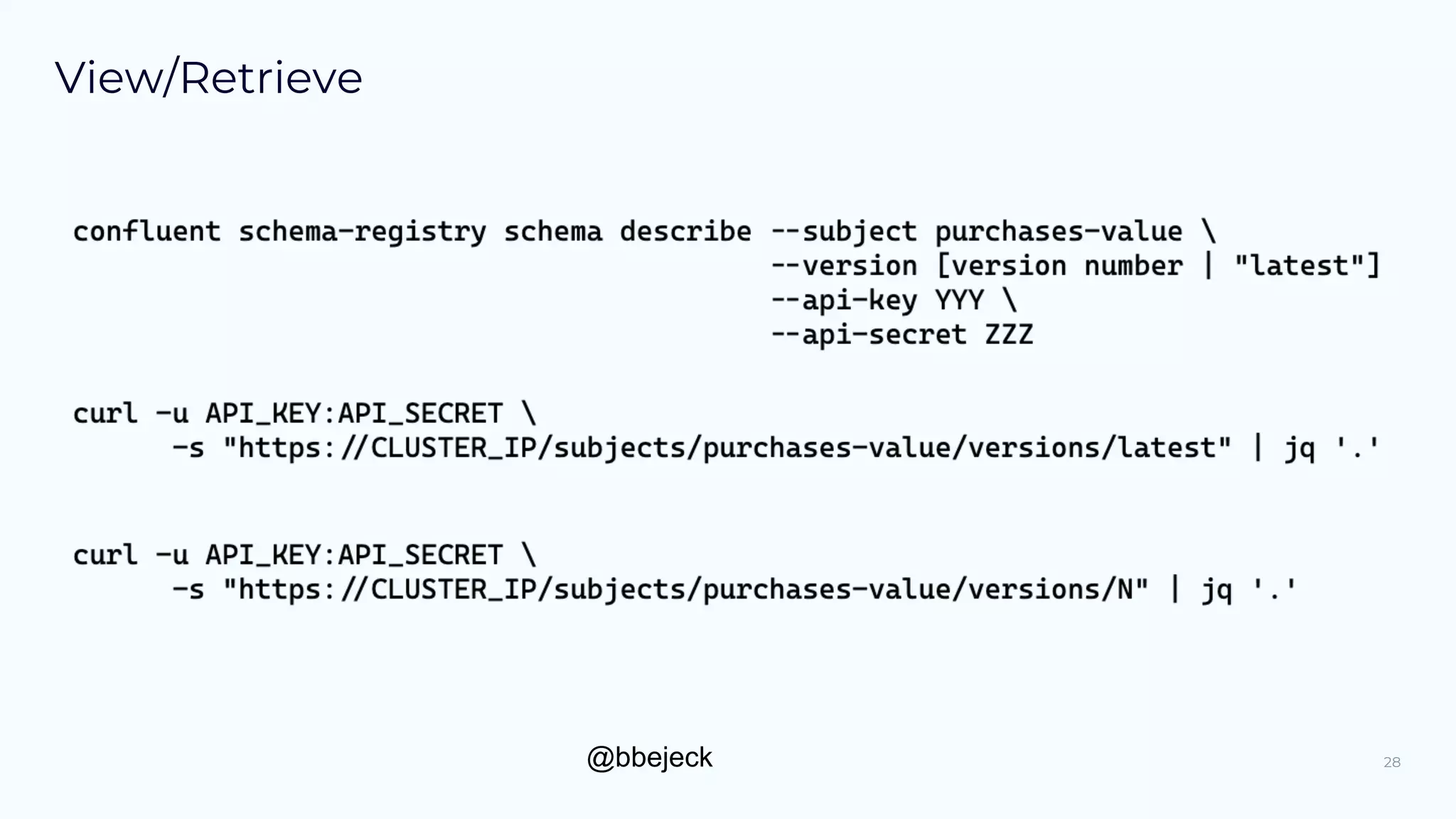

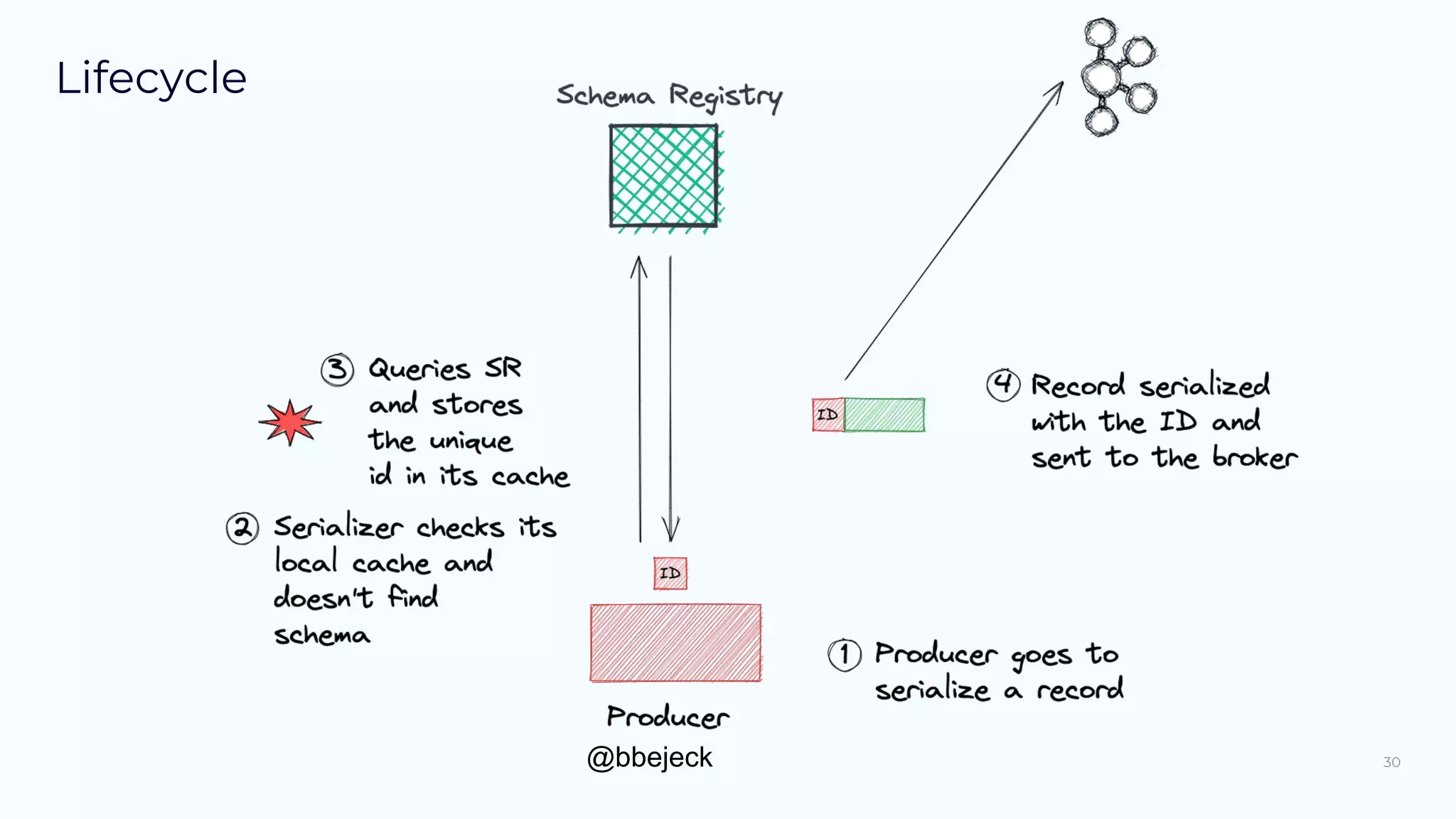

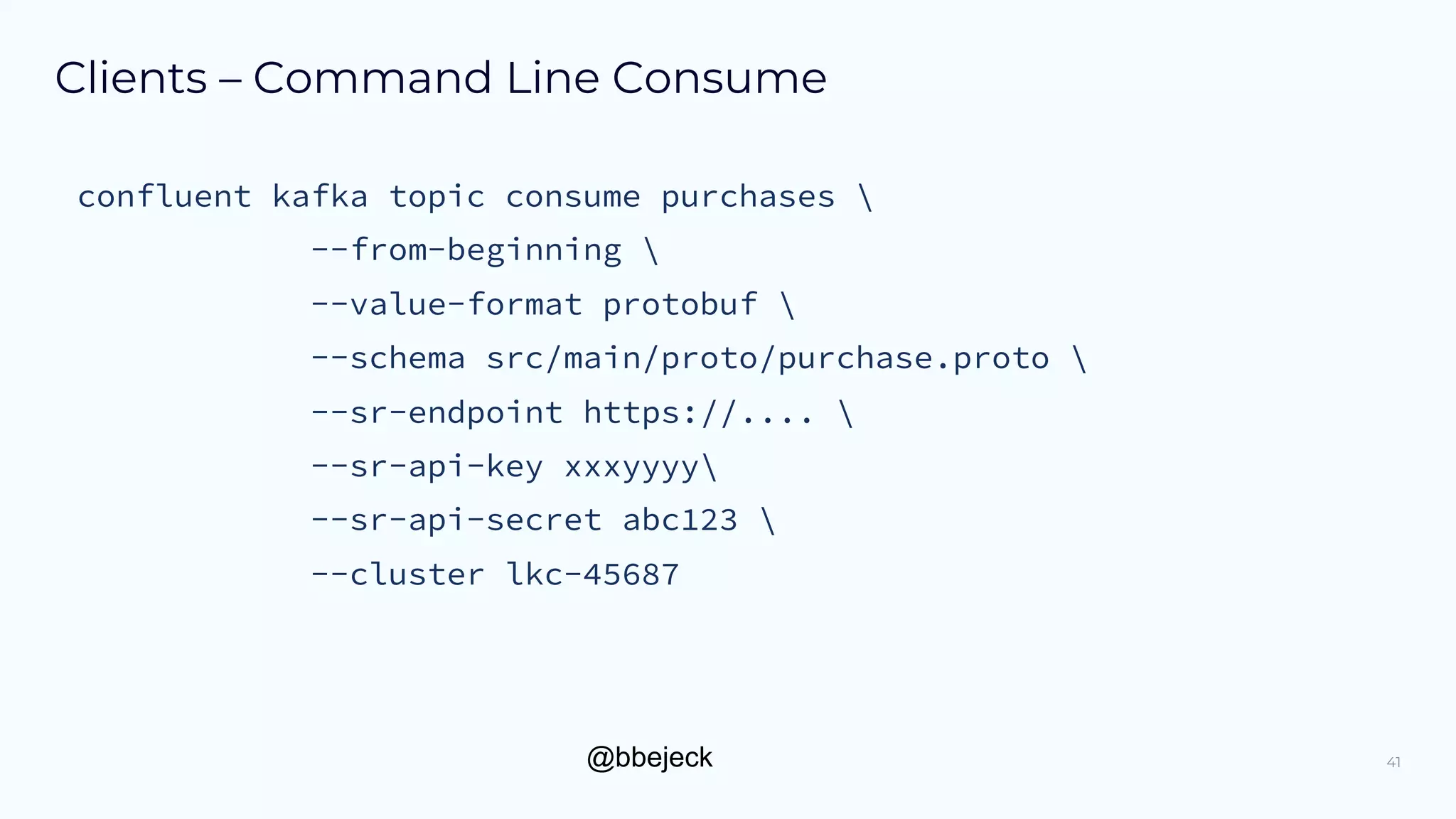

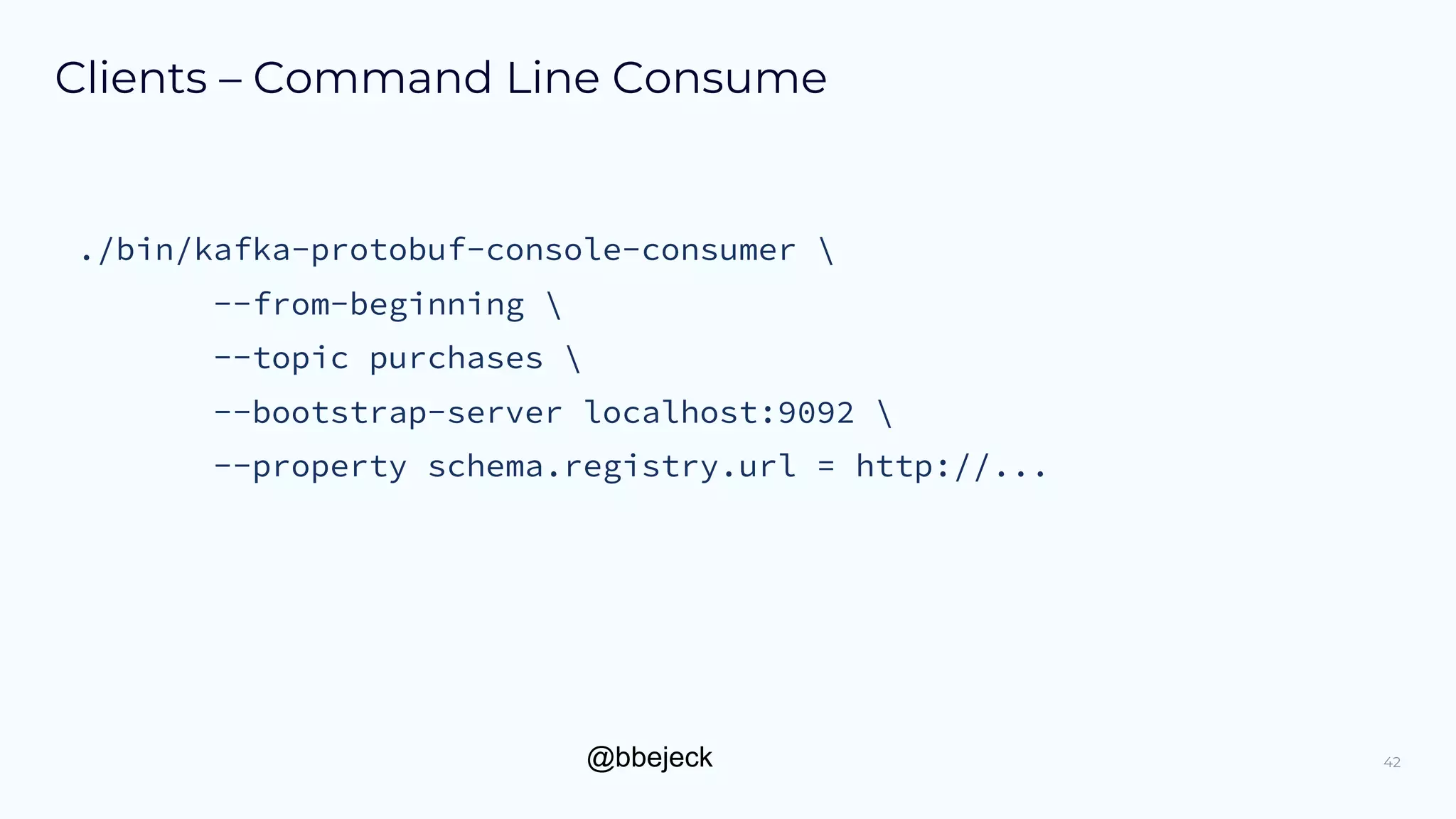

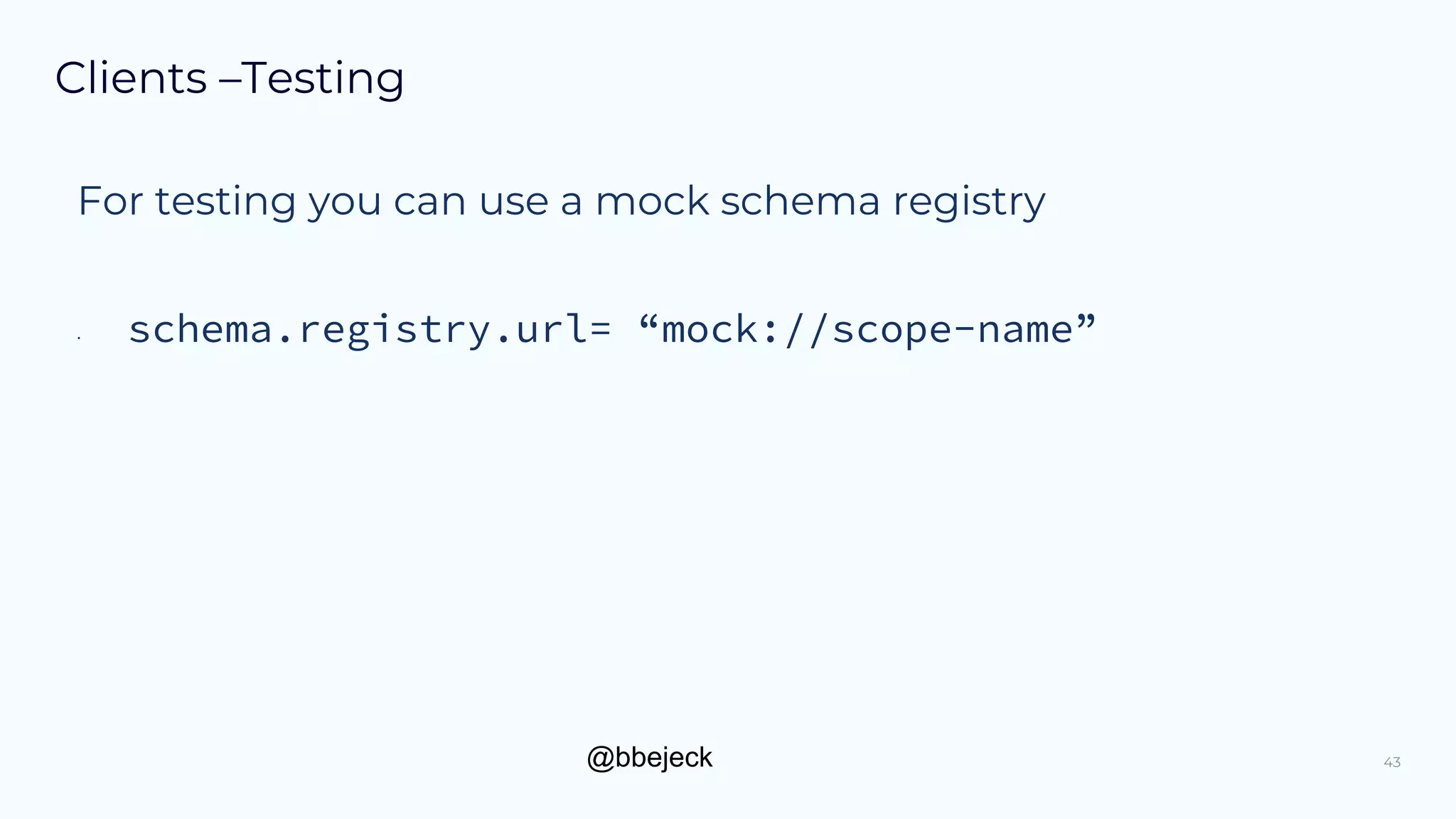

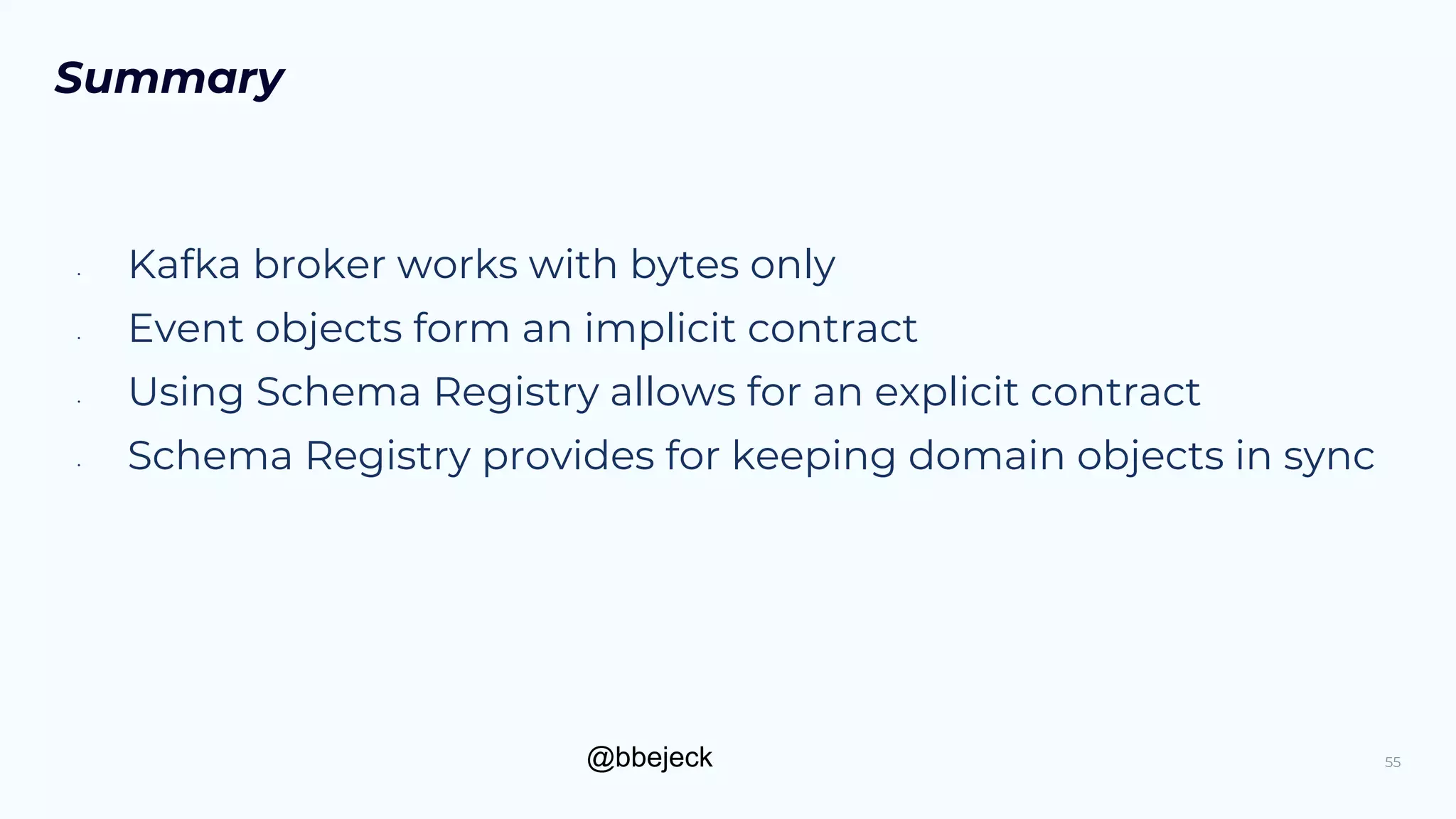

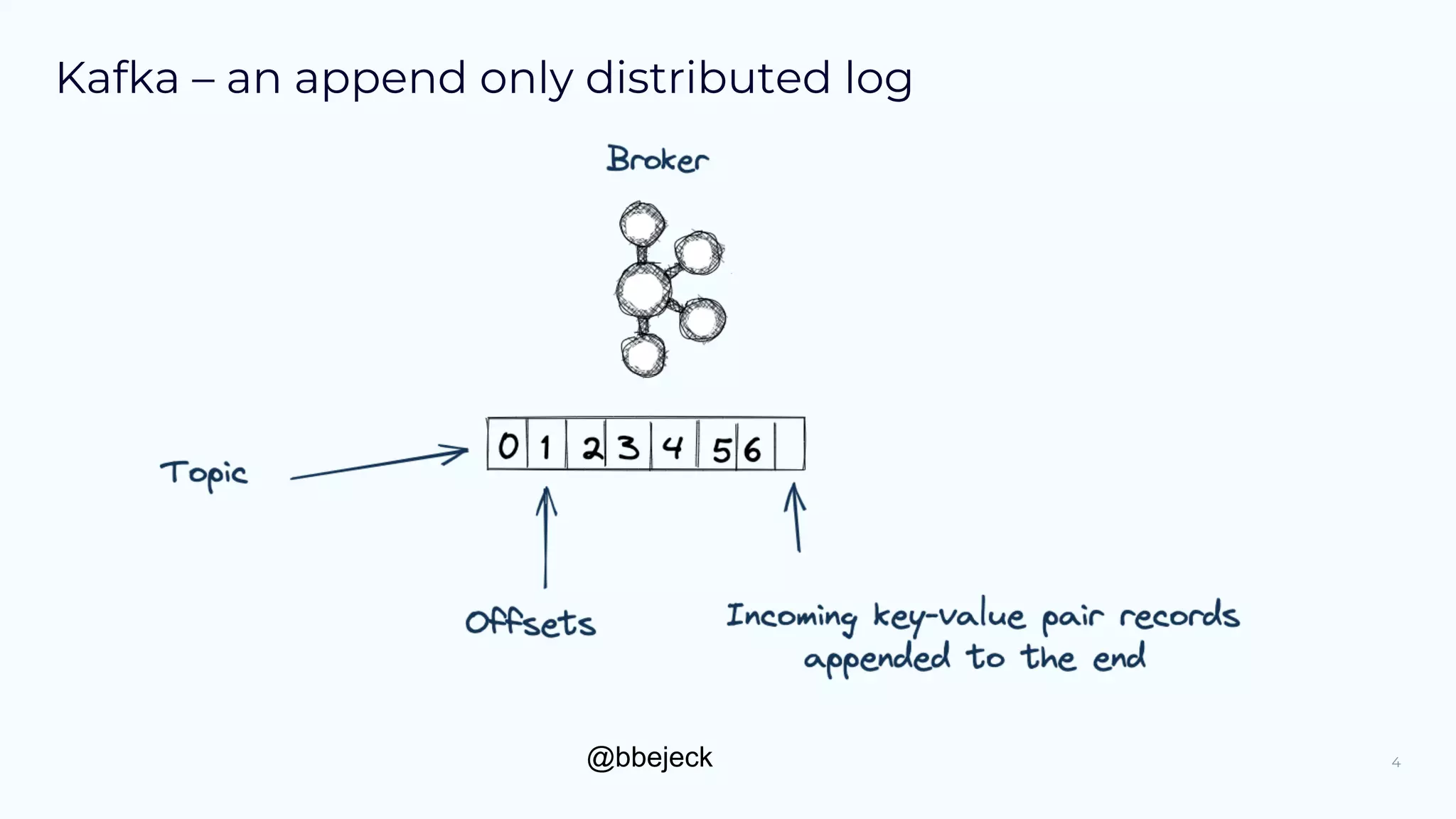

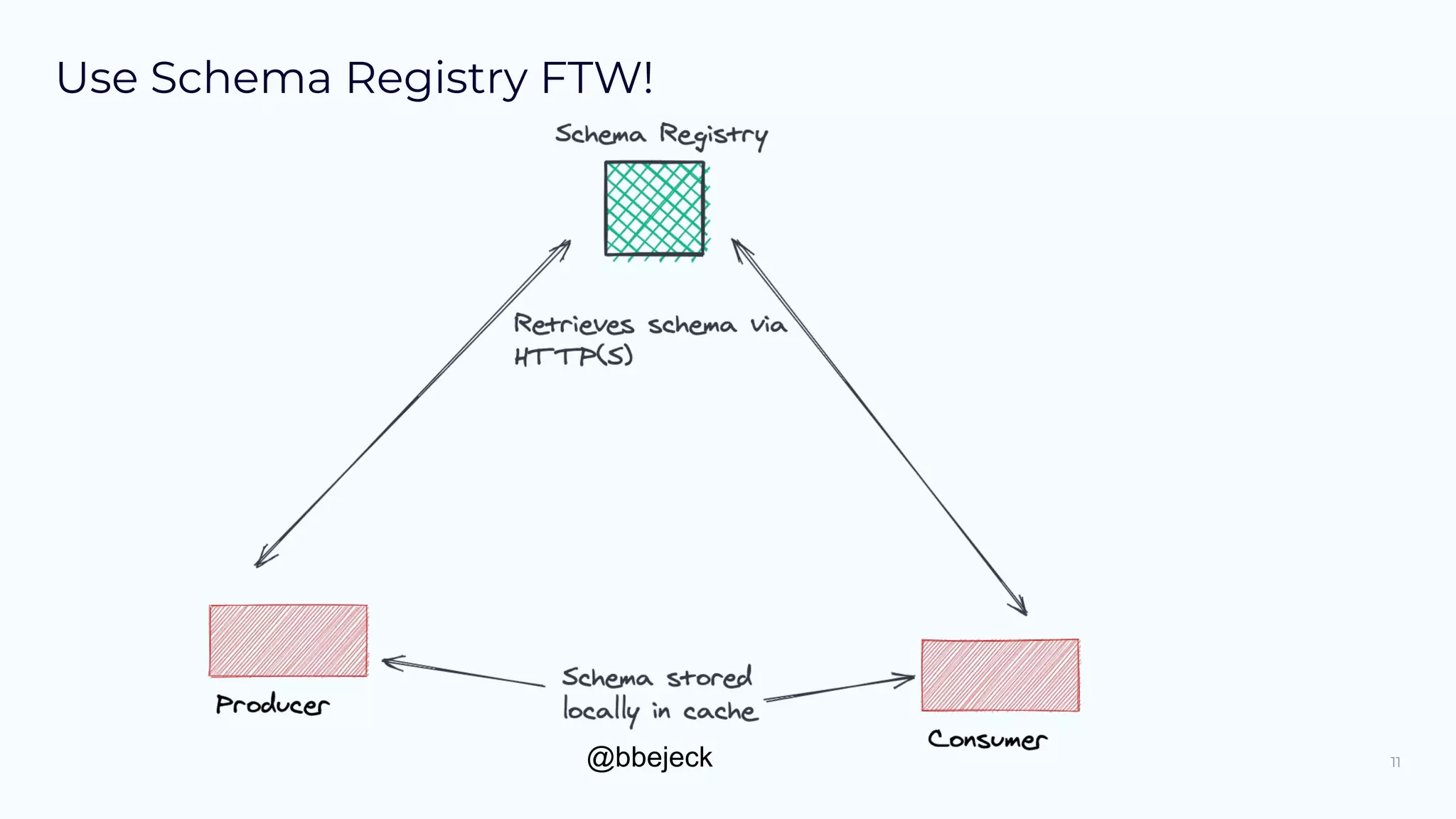

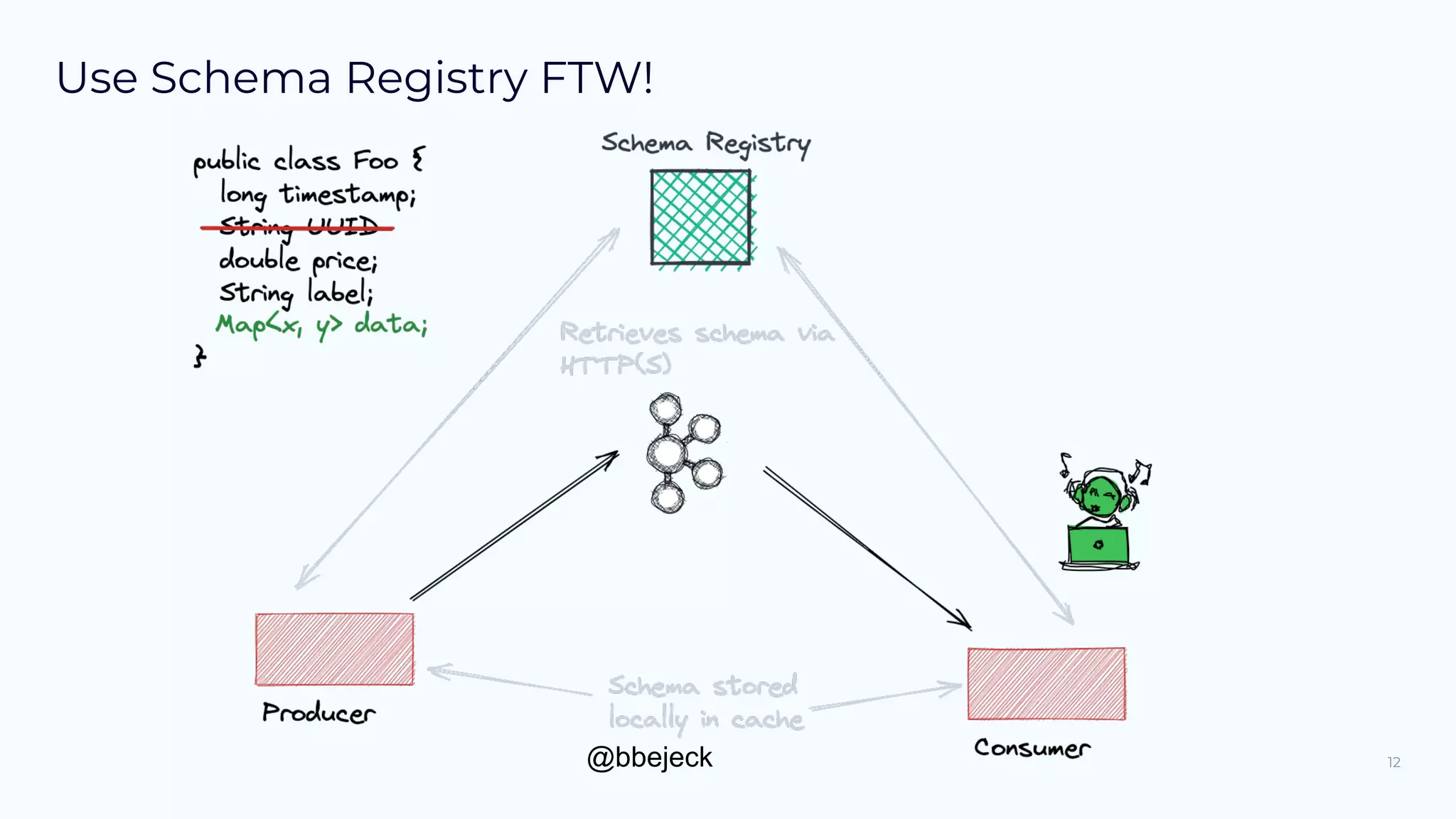

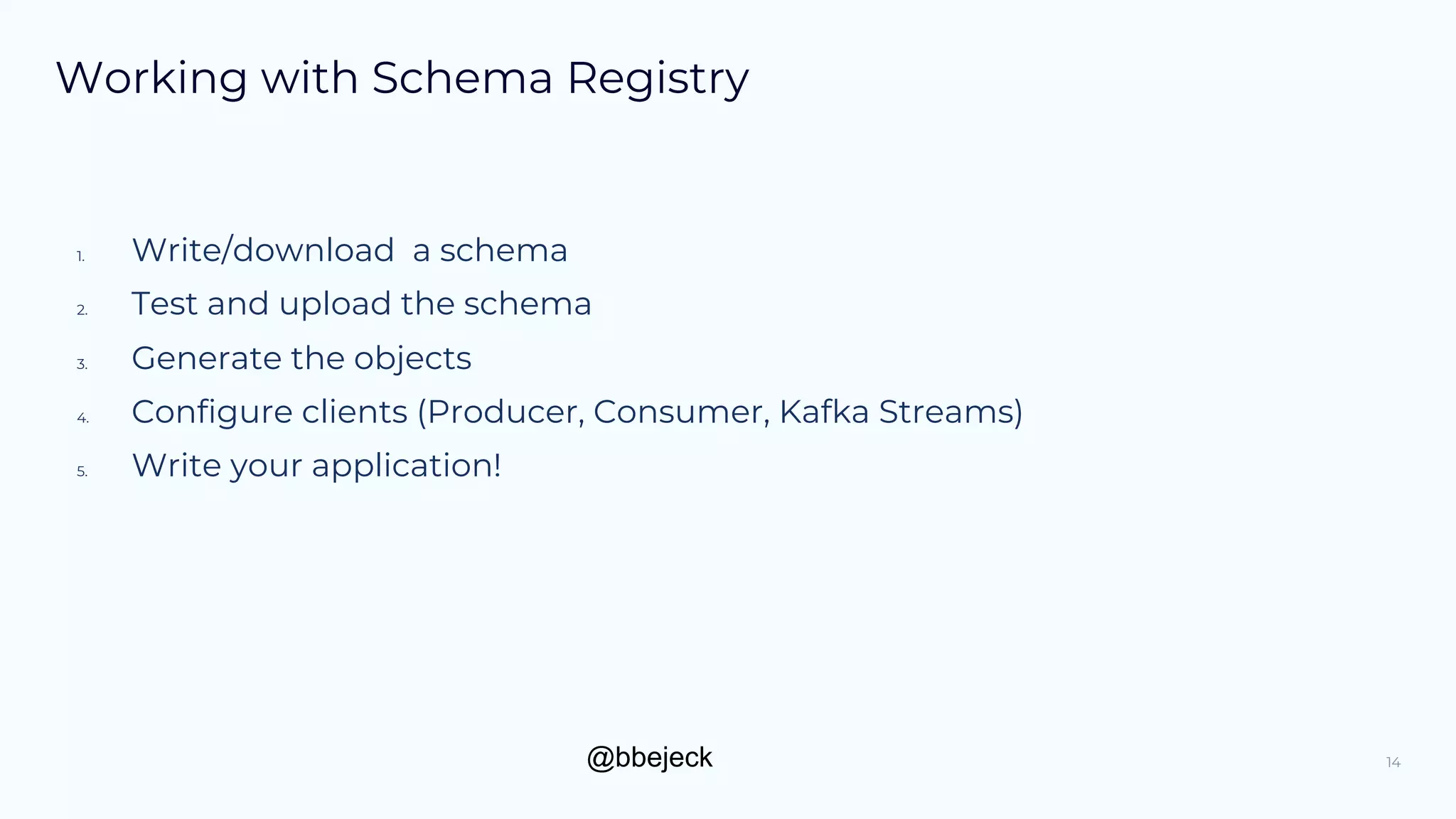

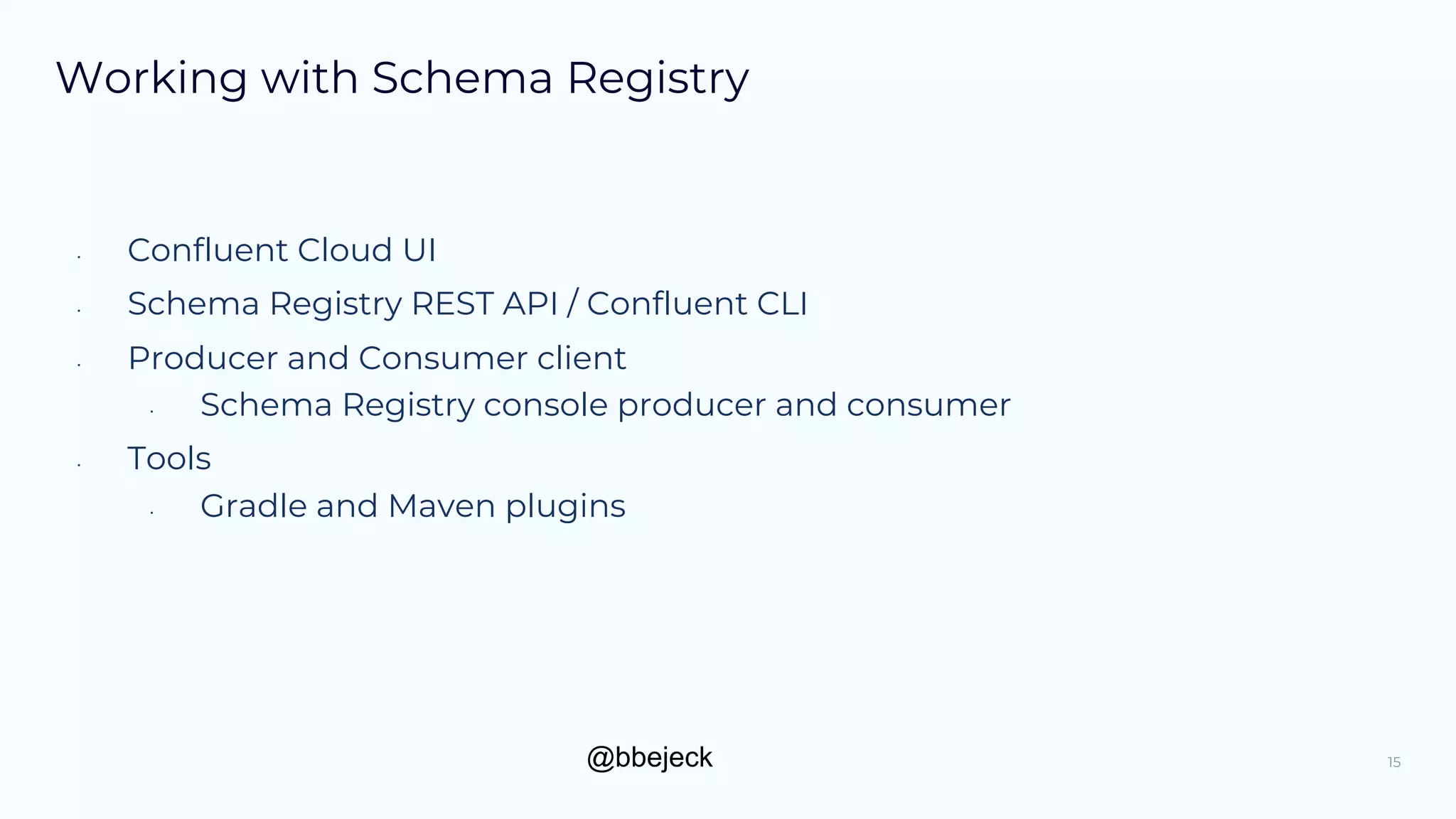

The document gives an overview of Confluent Schema Registry for Apache Kafka and explains why it matters for managing schema evolution and compatibility of event objects. It walks through building, registering, and using schemas in the supported formats (Avro, Protocol Buffers, and JSON Schema), along with the configuration options for producers and consumers. It also covers schema compatibility checks and the schema lifecycle, and lists resources for further learning.

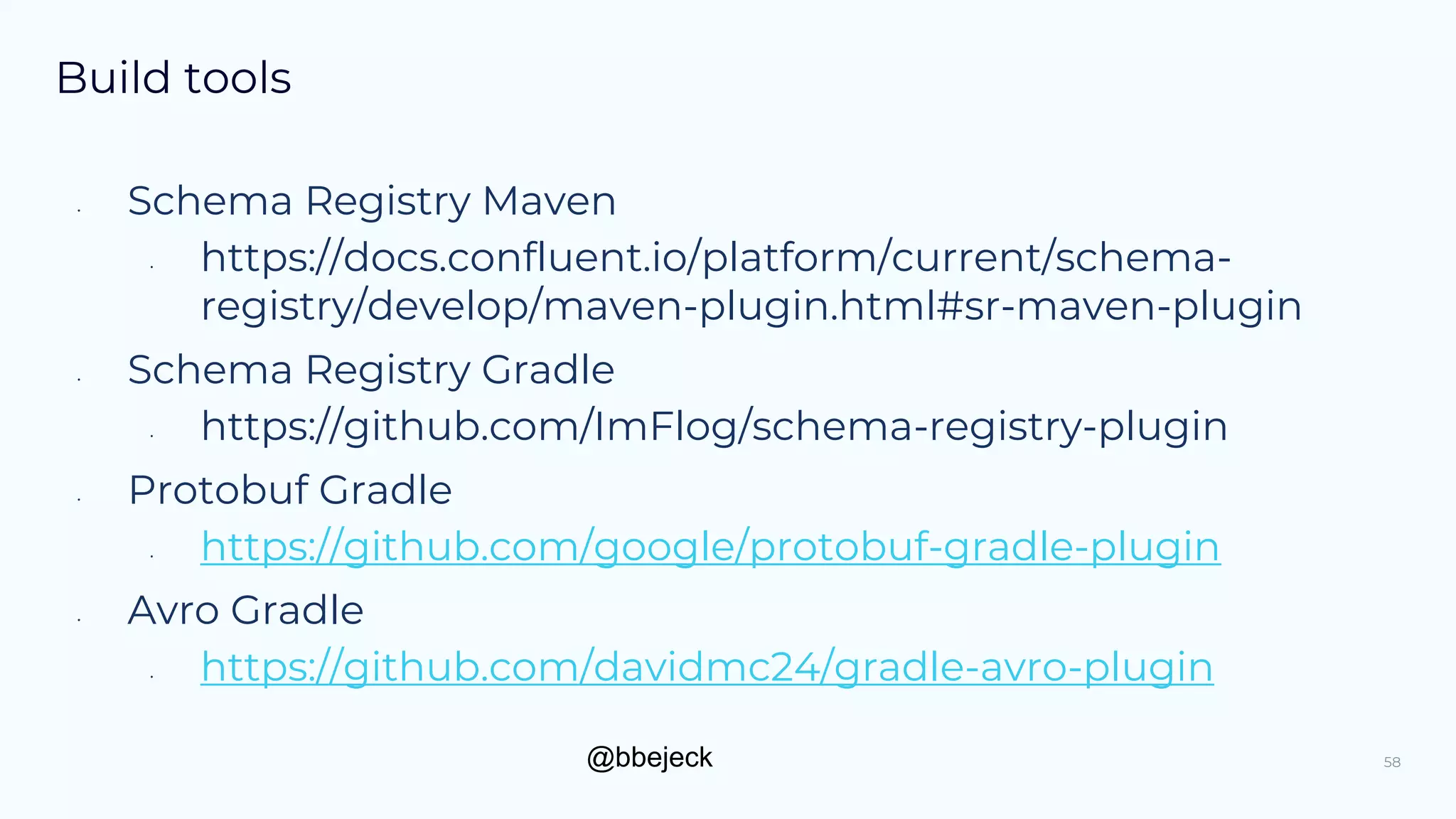

![@bbejeck

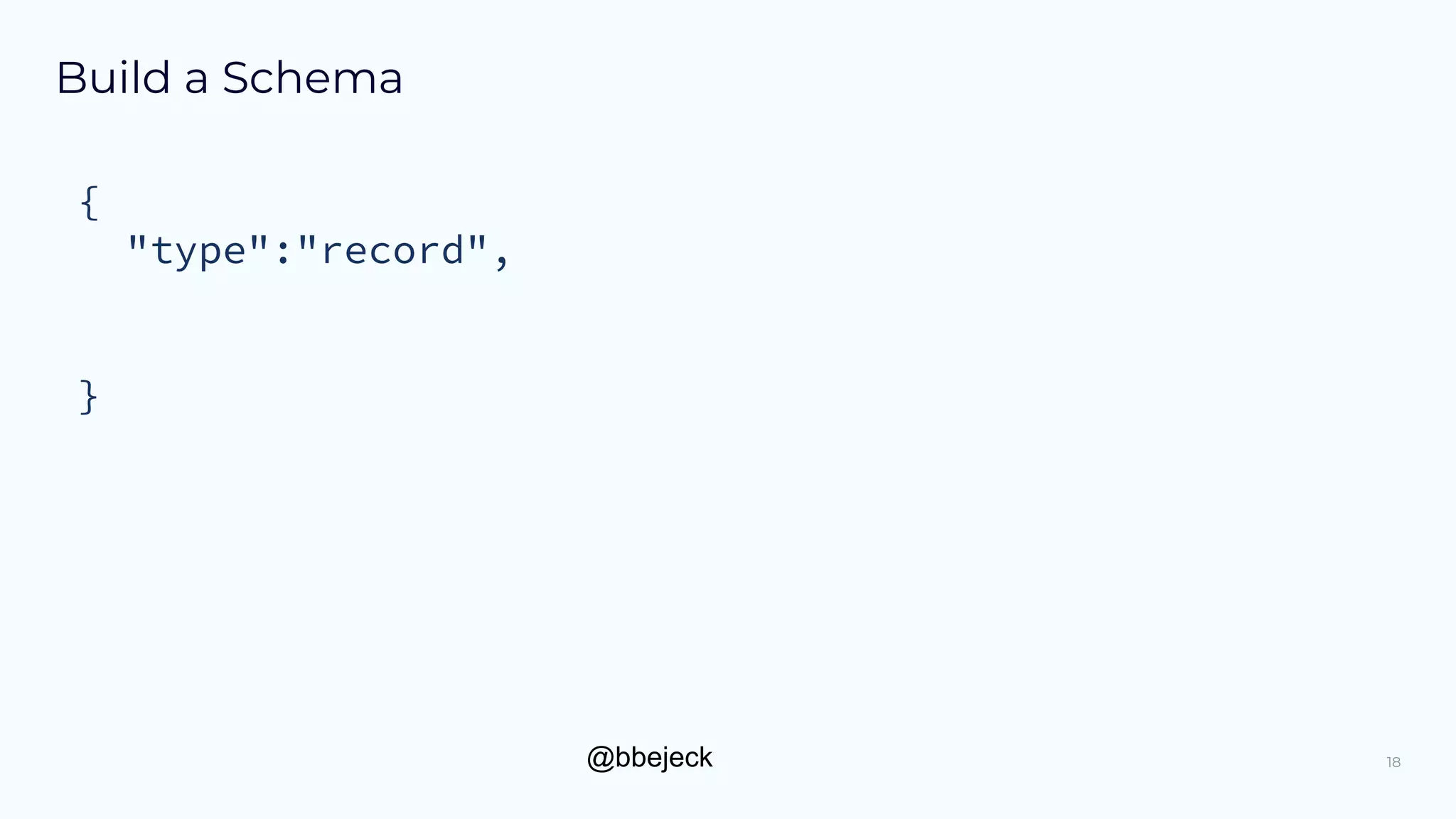

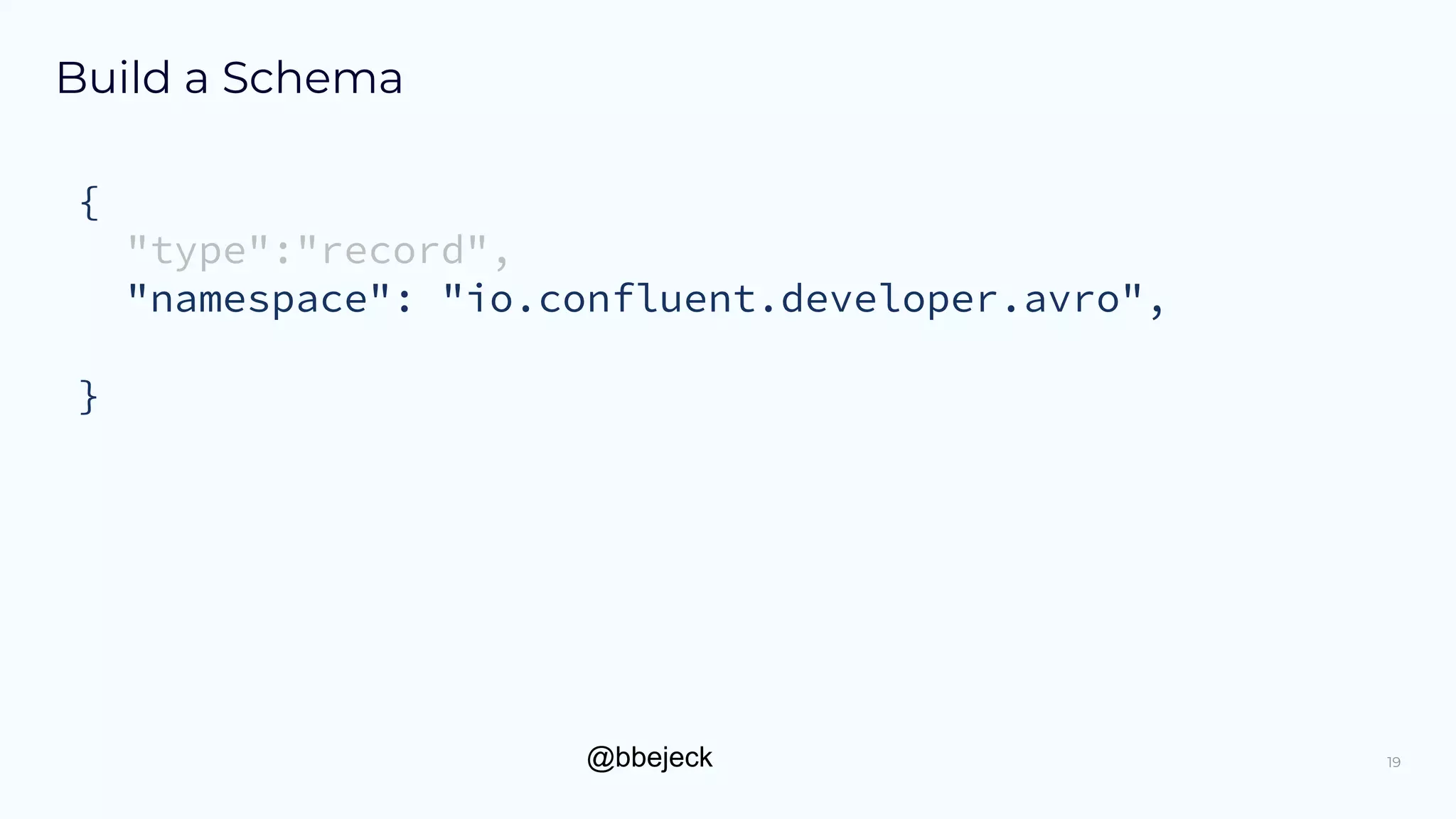

Build a Schema

{

"type":"record",

"namespace": "io.confluent.developer.avro",

"name":"Purchase",

"fields": [

{"name": "item", "type":"string"},

{"name": "amount", "type": "double”, ”default”:0.0},

{"name": "customer_id", "type": "string"}

]

}

21](https://image.slidesharecdn.com/bs40billbejeckconfluent-220503181755/75/Schema-Registry-101-with-Bill-Bejeck-Kafka-Summit-London-2022-21-2048.jpg)
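To make the role of the `Purchase` schema concrete, here is a minimal, stdlib-only Python sketch of Avro binary encoding for that record, plus the reader-side schema resolution that gives `"default": 0.0` its purpose. It assumes only `string` and `double` fields and ignores namespaces and aliases; `OLD_SCHEMA` is a hypothetical earlier version of `Purchase` without `amount`, and `encode`/`decode` are illustrative helpers, not the Avro library API. In practice the Avro libraries and the Schema Registry serializers do this work for you.

```python
import io
import json
import struct

# The Purchase schema from the slide, used here as the current (reader) schema.
PURCHASE_SCHEMA = json.loads("""
{
  "type": "record",
  "namespace": "io.confluent.developer.avro",
  "name": "Purchase",
  "fields": [
    {"name": "item", "type": "string"},
    {"name": "amount", "type": "double", "default": 0.0},
    {"name": "customer_id", "type": "string"}
  ]
}
""")

# Hypothetical earlier version of Purchase that has no "amount" field.
OLD_SCHEMA = dict(PURCHASE_SCHEMA,
                  fields=[f for f in PURCHASE_SCHEMA["fields"] if f["name"] != "amount"])


def write_long(buf, n):
    """Avro long: zig-zag encode, then write as a base-128 varint."""
    n = (n << 1) ^ (n >> 63)
    while n & ~0x7F:
        buf.write(bytes([(n & 0x7F) | 0x80]))
        n >>= 7
    buf.write(bytes([n]))


def read_long(buf):
    """Inverse of write_long: read a varint, then undo the zig-zag mapping."""
    shift = result = 0
    while True:
        b = buf.read(1)[0]
        result |= (b & 0x7F) << shift
        if not b & 0x80:
            return (result >> 1) ^ -(result & 1)
        shift += 7


def encode(schema, record):
    """Write field values in schema order; no field names or tags are written."""
    buf = io.BytesIO()
    for field in schema["fields"]:
        value = record[field["name"]]
        if field["type"] == "string":
            data = value.encode("utf-8")
            write_long(buf, len(data))  # length prefix, then UTF-8 bytes
            buf.write(data)
        elif field["type"] == "double":
            buf.write(struct.pack("<d", value))  # 8 bytes, little-endian
        else:
            raise NotImplementedError(field["type"])
    return buf.getvalue()


def decode(writer_schema, reader_schema, data):
    """Read with the writer's schema, then resolve fields against the reader's."""
    buf = io.BytesIO(data)
    written = {}
    for field in writer_schema["fields"]:
        if field["type"] == "string":
            written[field["name"]] = buf.read(read_long(buf)).decode("utf-8")
        elif field["type"] == "double":
            written[field["name"]] = struct.unpack("<d", buf.read(8))[0]
        else:
            raise NotImplementedError(field["type"])
    resolved = {}
    for field in reader_schema["fields"]:
        if field["name"] in written:
            resolved[field["name"]] = written[field["name"]]
        elif "default" in field:  # field missing from the writer: use the default
            resolved[field["name"]] = field["default"]
        else:
            raise ValueError(f"no value or default for {field['name']!r}")
    return resolved


if __name__ == "__main__":
    old_bytes = encode(OLD_SCHEMA, {"item": "pen", "customer_id": "c-2"})
    print(decode(OLD_SCHEMA, PURCHASE_SCHEMA, old_bytes))
    # {'item': 'pen', 'amount': 0.0, 'customer_id': 'c-2'}
```

Because Avro binary data carries no field names or tags, a consumer needs the writer's schema to decode it; the default on `amount` is what lets a reader on the newer schema consume records written before that field existed.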

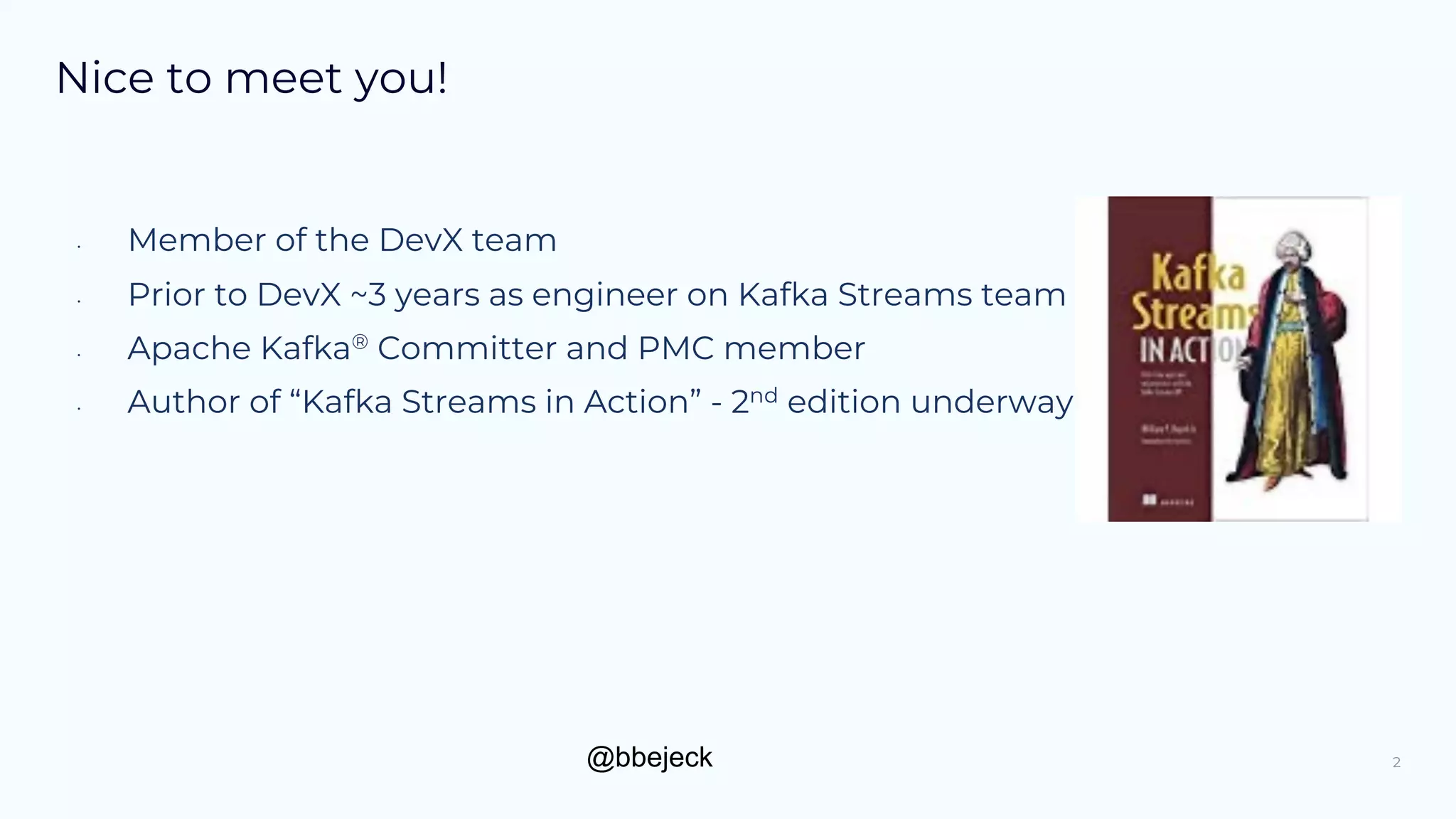

![@bbejeck

Build a Schema

Avro

{"name": ”list", "type":{ "type": "array", "items" : "string",

"default": [] }}

{"name": ”numbers", "type": {"type": "map", "values": ”long”,

“default” : {}}}

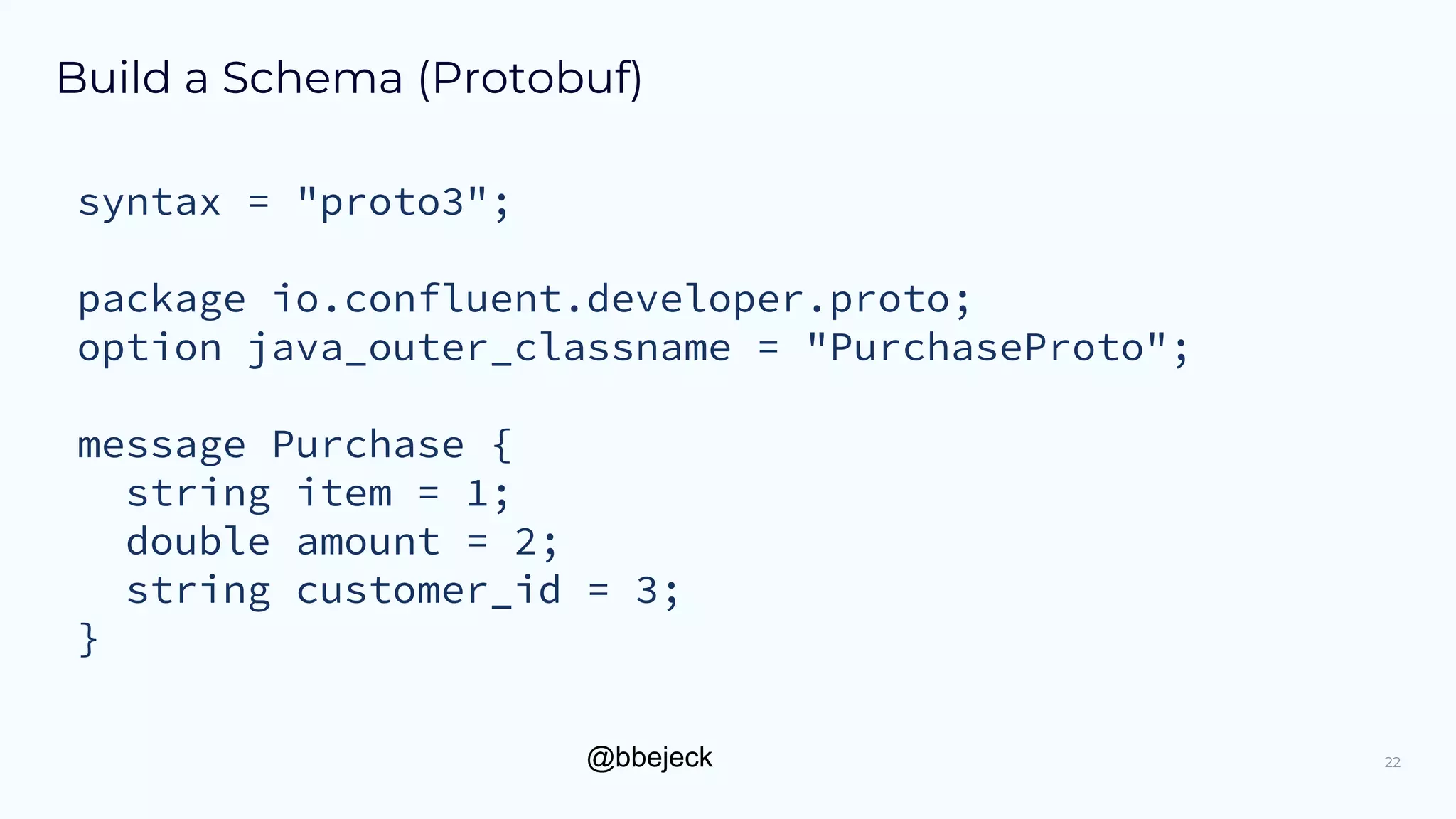

Protobuf

repeated string strings = 1;

map<string, string> projects = 2;

map<string, Message> myOtherMap = 3;

23](https://image.slidesharecdn.com/bs40billbejeckconfluent-220503181755/75/Schema-Registry-101-with-Bill-Bejeck-Kafka-Summit-London-2022-23-2048.jpg)
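The Avro and Protobuf fragments on this slide both declare collections. As a rough, stdlib-only Python sketch (hand-written encoders with hypothetical names, not the Avro or protobuf libraries), this shows how each format lays them out on the wire: Avro writes arrays and maps as counted blocks terminated by a zero count, so the `[]` and `{}` defaults encode as a single `0x00` byte, while Protobuf writes each `repeated` element, and each map entry as a small key/value message, as its own length-delimited field. Maps with message values, such as `myOtherMap`, are not covered.

```python
def avro_long(n):
    """Avro long: zig-zag encode, then base-128 varint."""
    n = (n << 1) ^ (n >> 63)
    out = bytearray()
    while n & ~0x7F:
        out.append((n & 0x7F) | 0x80)
        n >>= 7
    out.append(n)
    return bytes(out)


def avro_string(s):
    data = s.encode("utf-8")
    return avro_long(len(data)) + data


def avro_string_array(items):
    """Avro array: blocks of (count, items...), ended by a block with count 0."""
    body = b""
    if items:  # this sketch writes all items in a single block
        body = avro_long(len(items)) + b"".join(avro_string(s) for s in items)
    return body + avro_long(0)


def avro_long_map(entries):
    """Avro map: like an array, but each item is a string key followed by a value."""
    body = b""
    if entries:
        body = avro_long(len(entries)) + b"".join(
            avro_string(k) + avro_long(v) for k, v in entries.items())
    return body + avro_long(0)


def pb_varint(n):
    """Protobuf unsigned base-128 varint."""
    out = bytearray()
    while n > 0x7F:
        out.append((n & 0x7F) | 0x80)
        n >>= 7
    out.append(n)
    return bytes(out)


def pb_len_field(field_number, payload):
    """Length-delimited field (wire type 2): tag, byte length, payload."""
    return pb_varint((field_number << 3) | 2) + pb_varint(len(payload)) + payload


def pb_repeated_string(field_number, items):
    """repeated string: one tagged occurrence per element (strings are never packed)."""
    return b"".join(pb_len_field(field_number, s.encode("utf-8")) for s in items)


def pb_string_map(field_number, entries):
    """map<string, string>: one entry message per pair, key = field 1, value = field 2."""
    return b"".join(
        pb_len_field(field_number,
                     pb_len_field(1, k.encode("utf-8")) + pb_len_field(2, v.encode("utf-8")))
        for k, v in entries.items())


if __name__ == "__main__":
    print(avro_string_array([]).hex())              # 00
    print(avro_string_array(["a", "b"]).hex())      # 040261026200
    print(pb_repeated_string(1, ["a", "b"]).hex())  # 0a01610a0162
    print(pb_string_map(2, {"k": "v"}).hex())       # 12060a016b120176
```

In Protobuf an empty `repeated` or `map` field writes no bytes and reads back as empty, so these fields have no counterpart to Avro's explicit `default`.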