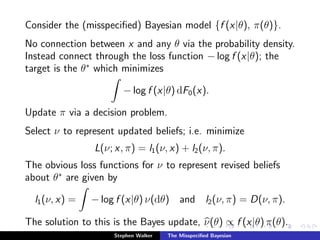

1) The document discusses using a misspecified Bayesian model to extract specific information from big data, such as the value of an integral, rather than fully modeling the data.

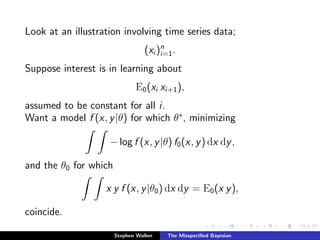

2) It proposes choosing a misspecified model f(x|θ) and updating beliefs about the value of θ0 that satisfies the integral, rather than θ itself, using the Bayes update.

3) The argument is that this approach is asymptotically valid for learning about the target parameter θ* that minimizes expected loss, even if the model does not connect x to θ, as long as θ0 and θ* coincide under the chosen model.