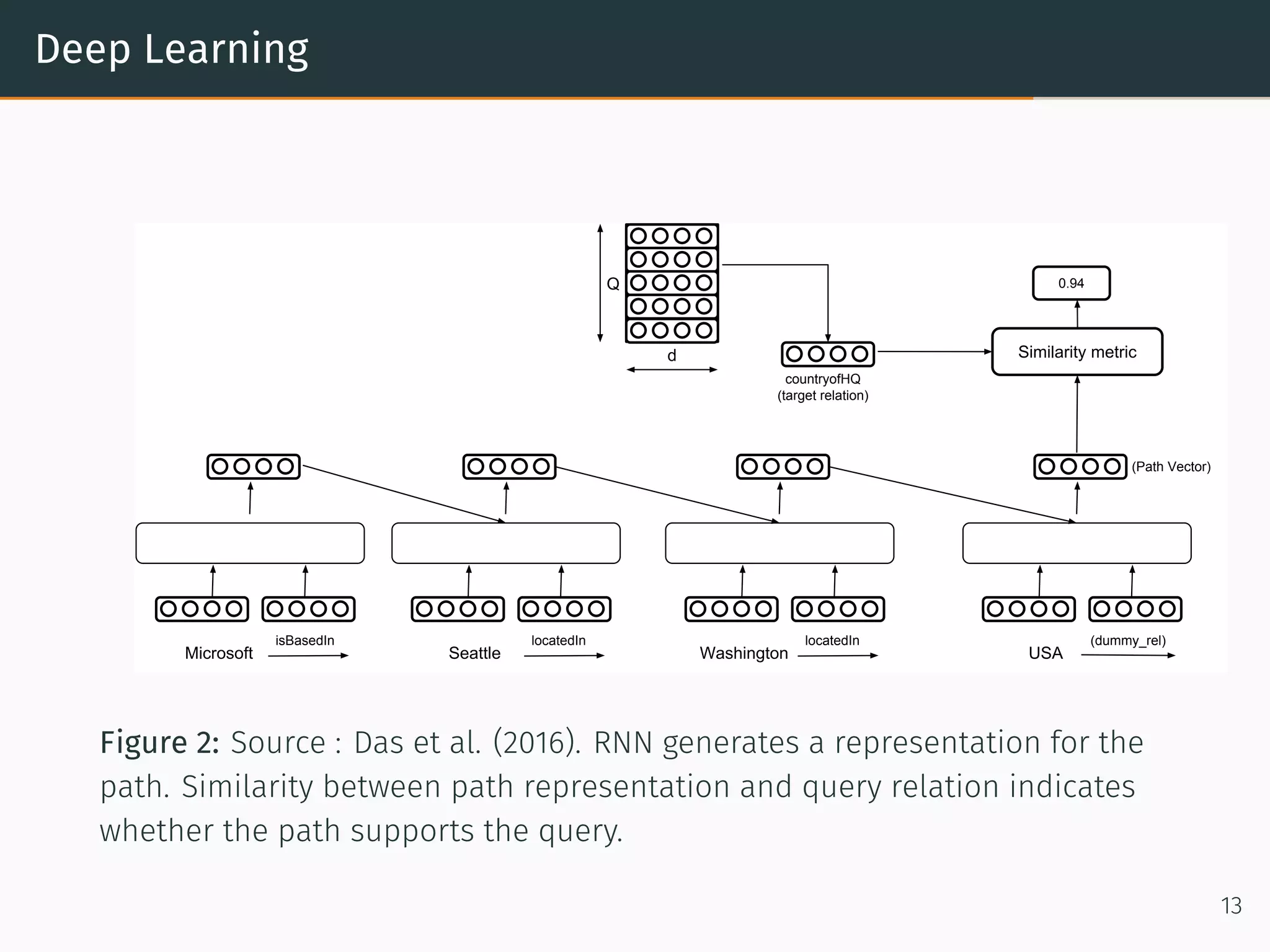

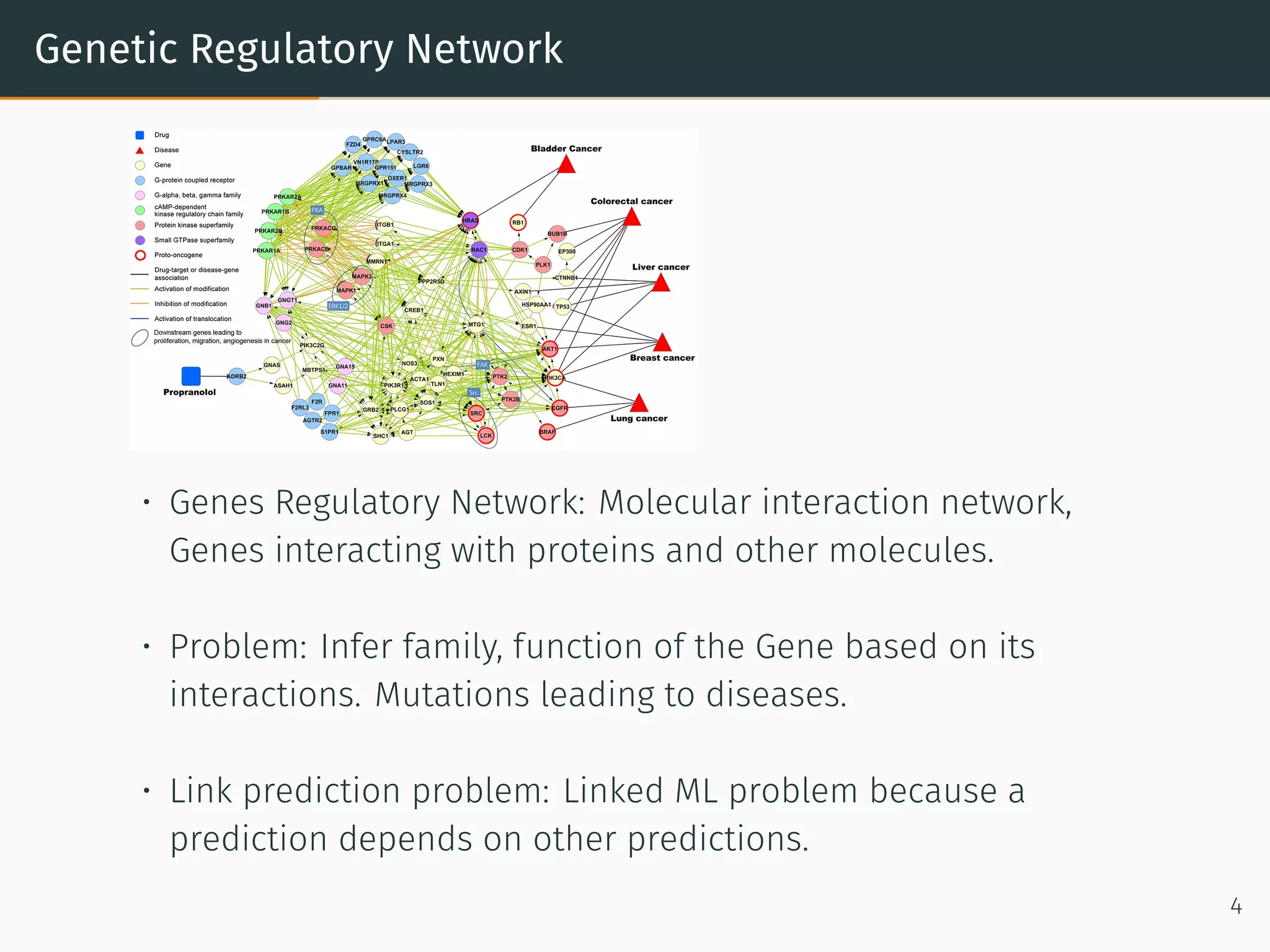

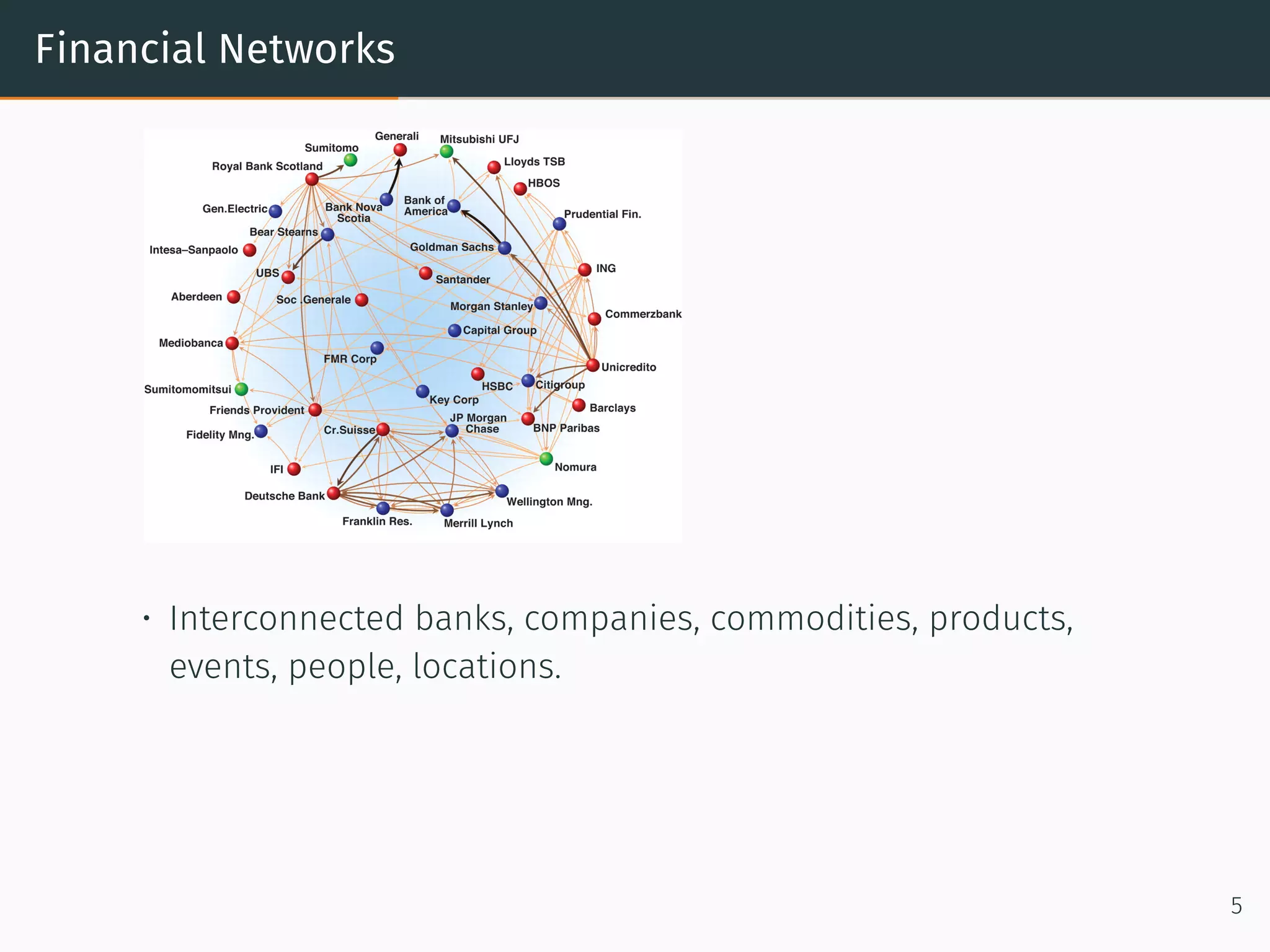

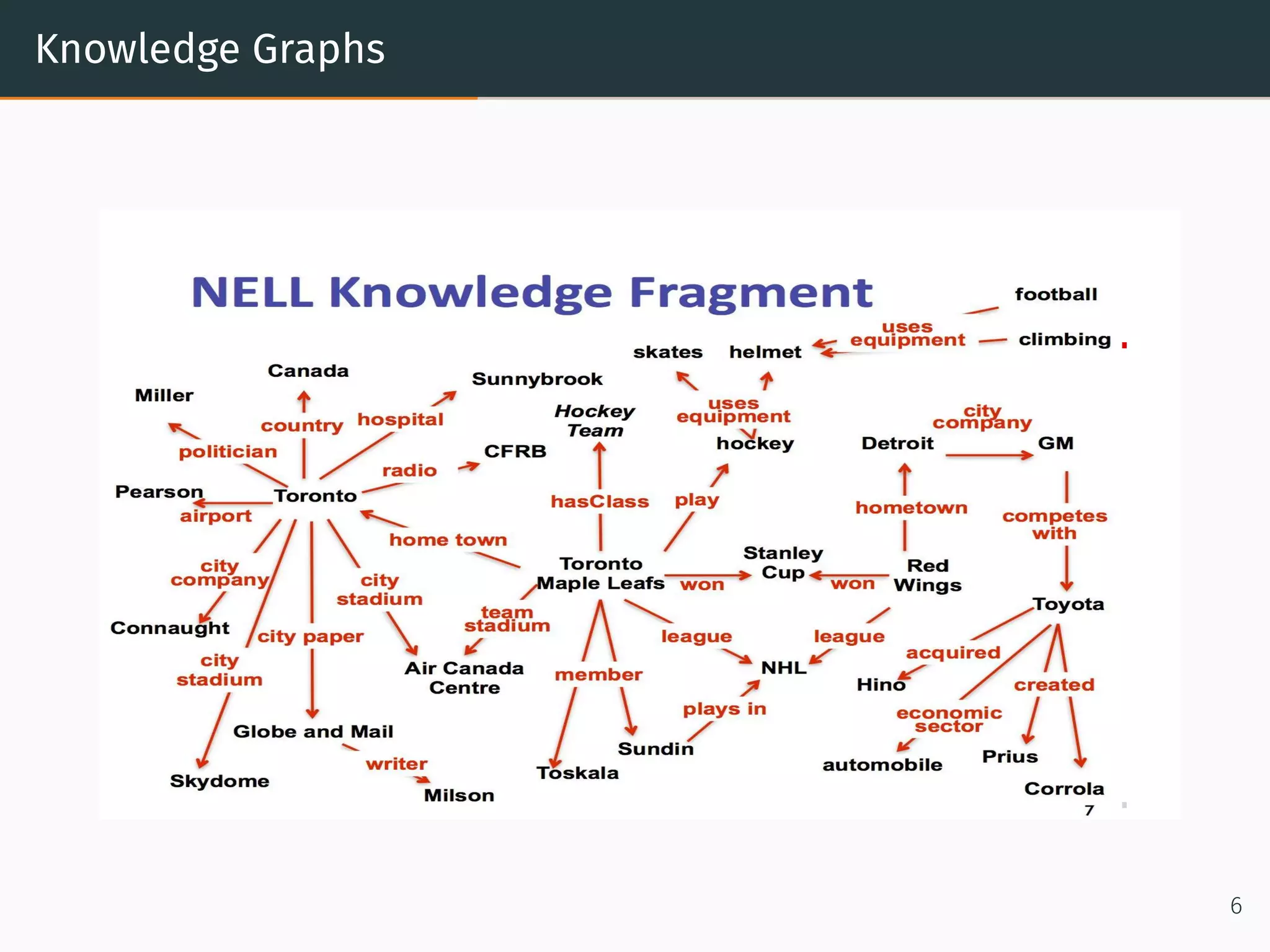

This document provides an overview of relational machine learning models and applications. It discusses how networks and graphs like social networks, biological networks, financial networks, and knowledge graphs can be modeled using relational machine learning. Specific models discussed include recommendation engines that use matrix factorization, the RESCAL model for multi-relational data, bilinear diagonal models for scalability, and TransE which models relationships as translations in the embedding space. The document also covers generating negative samples and different loss functions used for training these models.

![RESCAL Model

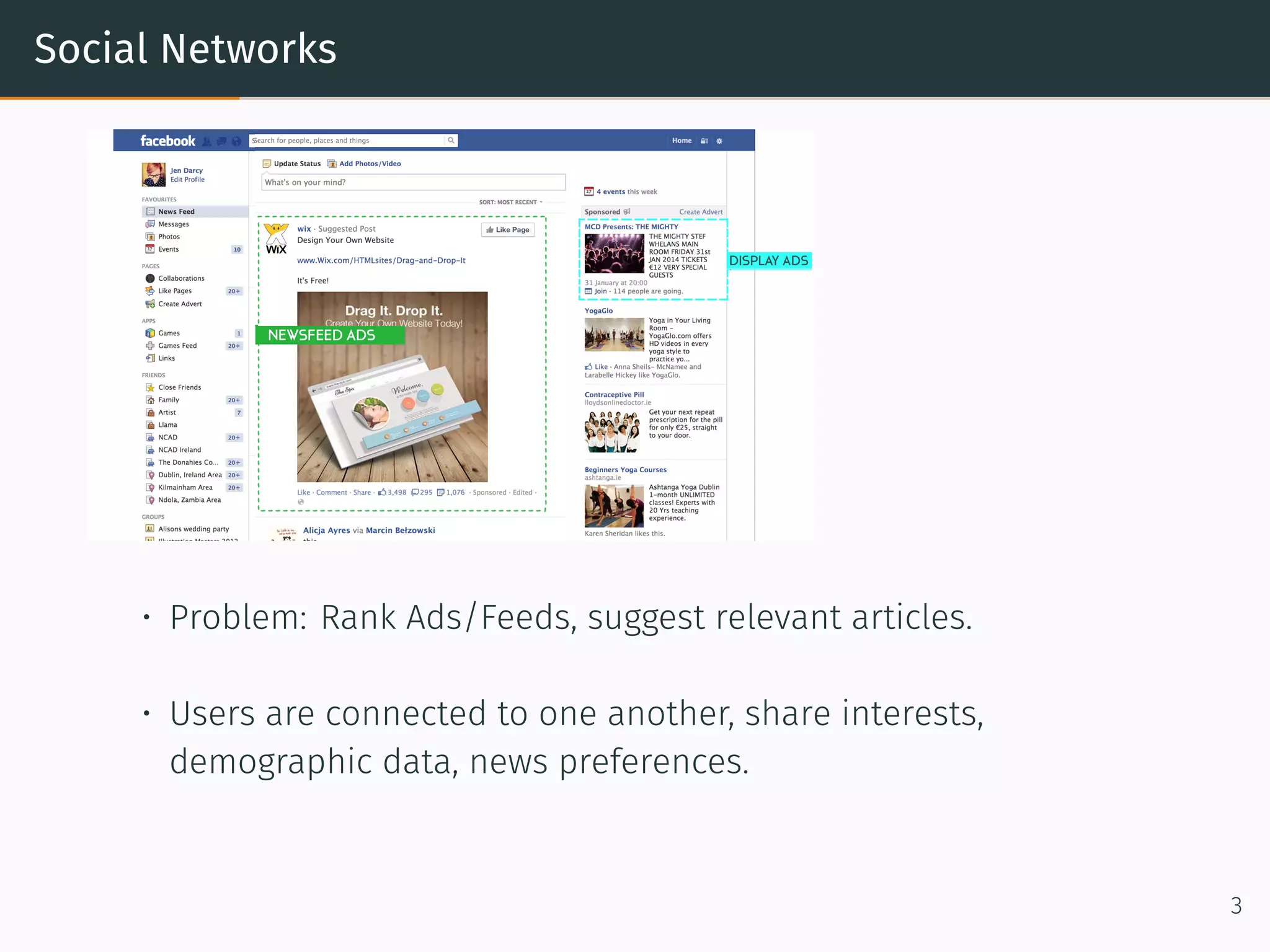

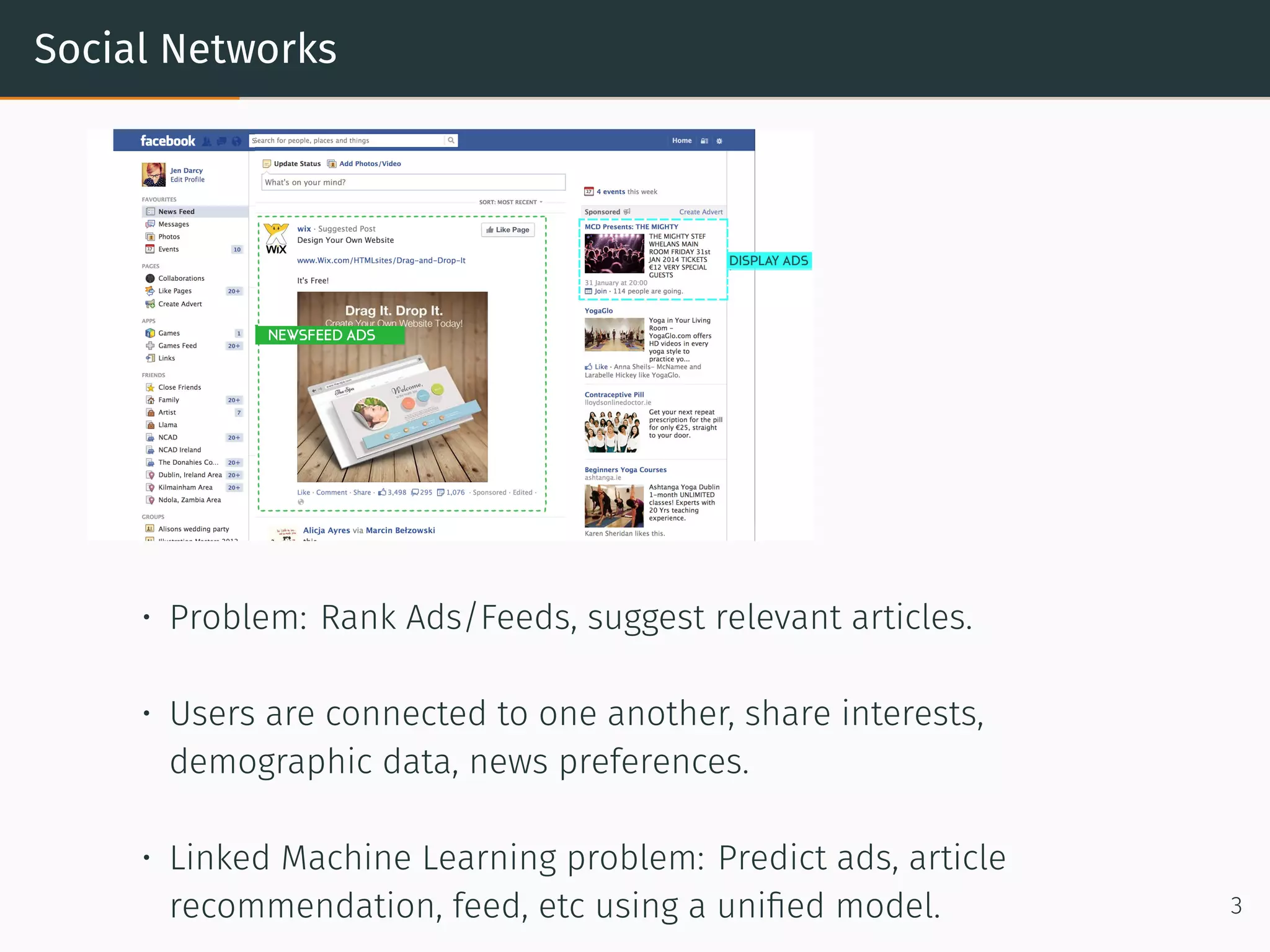

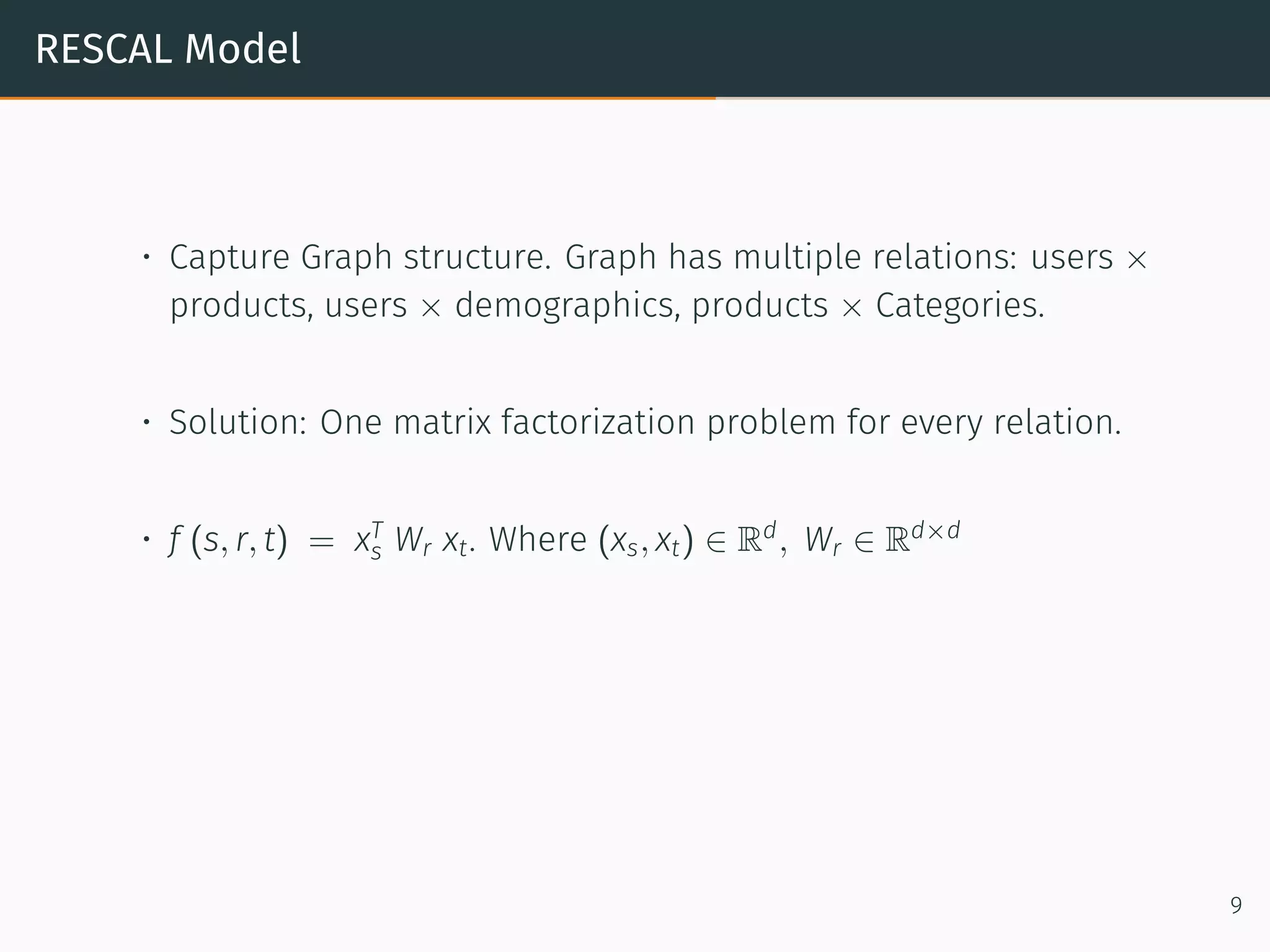

• Capture Graph structure. Graph has multiple relations: users ×

products, users × demographics, products × Categories.

• Solution: One matrix factorization problem for every relation.

• f (s, r, t) = xT

s Wr xt. Where (xs, xt) ∈ Rd

, Wr ∈ Rd×d

• Max-Margin: max

[

0, 1 −

(

f(s, r, t) − f(s, r, t′

)

)

]

. Can also use

softmax, or l2 loss like collaborative filtering.

9](https://image.slidesharecdn.com/relational-machine-learningupdated-170316094346/75/Relational-machine-learning-32-2048.jpg)
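The RESCAL score and the max-margin loss above can be sketched in a few lines of plain Python. This is a minimal illustration, not the original authors' implementation: it uses nested lists instead of a linear-algebra library, and the entity and relation names (`alice`, `purchased`, and so on) and the random initialization are hypothetical toy data. The key structural point is that the entity vectors `x` are shared by every relation, while each relation gets its own $d \times d$ matrix.

```python
import random

def rescal_score(x_s, W_r, x_t):
    """RESCAL bilinear score f(s, r, t) = x_s^T W_r x_t."""
    d = len(x_s)
    return sum(x_s[i] * W_r[i][j] * x_t[j] for i in range(d) for j in range(d))

def max_margin_loss(pos_score, neg_score, margin=1.0):
    """Hinge loss max[0, margin - (f(s, r, t) - f(s, r, t'))]."""
    return max(0.0, margin - (pos_score - neg_score))

# Hypothetical toy graph: entity embeddings are shared by every relation,
# while each relation gets its own d x d matrix W_r.
rng = random.Random(0)
d = 4
entity_names = ["alice", "bob", "laptop", "phone", "electronics"]
relation_names = ["purchased", "in_category"]
x = {e: [rng.gauss(0.0, 0.1) for _ in range(d)] for e in entity_names}
W = {r: [[rng.gauss(0.0, 0.1) for _ in range(d)] for _ in range(d)]
     for r in relation_names}

# Positive triple (alice, purchased, laptop) vs. corrupted (alice, purchased, phone).
pos = rescal_score(x["alice"], W["purchased"], x["laptop"])
neg = rescal_score(x["alice"], W["purchased"], x["phone"])
print(f"loss = {max_margin_loss(pos, neg):.4f}")
```

Because a full $W_r$ is not constrained to be symmetric, $f(s, r, t)$ and $f(t, r, s)$ can differ, so RESCAL can represent directed relations such as "purchased". The cost is $d^2$ parameters per relation.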


Bilinear Diag. and TransE Model

• RESCAL [2]: Requires $O(N_e d + N_r d^2)$ parameters, where $N_e$ is the number of entities, $N_r$ the number of relations, and $d$ the embedding dimension. Scalability issues for large $N_r$.

• Bilinear Diag [4]: Enforce $W_r$ to be a diagonal matrix. Assumes symmetric relations. Why? Memory complexity: $O(N_e d + N_r d)$.

• TransE [1]: $f(s, r, t) = -\lVert (x_s + x_r) - x_t \rVert_2$.

• TransE: Can it model all types of relations? Why?

• Takeaway: Make sure parameters are shared, either through a shared representation or a shared layer.

![Bilinear Diag. and TransE Model](https://image.slidesharecdn.com/relational-machine-learningupdated-170316094346/75/Relational-machine-learning-39-2048.jpg)
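The two "Why?" questions on this slide can be explored numerically. The sketch below is illustrative only, with hypothetical toy vectors: the Bilinear Diag score $x_s^\top \mathrm{diag}(w_r)\, x_t = \sum_i x_{s,i}\, w_{r,i}\, x_{t,i}$ is unchanged when $s$ and $t$ are swapped, so every relation is scored as symmetric. For TransE, a symmetric relation would need both $x_s + x_r \approx x_t$ and $x_t + x_r \approx x_s$; adding the two gives $2x_r \approx 0$, which is one commonly cited limitation. The `param_counts` helper is a hypothetical name that tallies the parameter counts from the slide; the TransE count assumes one $d$-dimensional translation vector per relation.

```python
import math

def bilinear_diag_score(x_s, w_r, x_t):
    """Bilinear Diag score: x_s^T diag(w_r) x_t = sum_i x_s[i] * w_r[i] * x_t[i]."""
    return sum(a * w * b for a, w, b in zip(x_s, w_r, x_t))

def transe_score(x_s, x_r, x_t):
    """TransE score: -||(x_s + x_r) - x_t||_2."""
    return -math.sqrt(sum((a + r - b) ** 2 for a, r, b in zip(x_s, x_r, x_t)))

def param_counts(n_e, n_r, d):
    """Hypothetical helper: N_e * d entity parameters plus per-relation parameters."""
    return {
        "RESCAL": n_e * d + n_r * d * d,    # one d x d matrix per relation
        "BilinearDiag": n_e * d + n_r * d,  # one diagonal (length-d vector) per relation
        "TransE": n_e * d + n_r * d,        # one translation vector per relation
    }

# Swapping s and t never changes the Bilinear Diag score, so (s, r, t) and
# (t, r, s) always receive identical scores.
x_s, w_r, x_t = [1, 2, 3], [2, 0, 1], [4, 5, 6]
print(bilinear_diag_score(x_s, w_r, x_t), bilinear_diag_score(x_t, w_r, x_s))

# A TransE translation that fits (s, r, t) exactly penalizes the reverse (t, r, s).
a, b, r = [0.0, 0.0], [1.0, 0.0], [1.0, 0.0]
print(transe_score(a, r, b), transe_score(b, r, a))

print(param_counts(n_e=1_000_000, n_r=1_000, d=100))
```

With a million entities, a thousand relations, and $d = 100$, the relation matrices add $10^7$ parameters under RESCAL but only $10^5$ under Bilinear Diag or TransE; in all three models the entity embeddings are the shared representation.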


Negative Sampling

• How to generate negative samples? Negatives may not be provided.

• Closed World Assumption: if a triple is not a positive, then it must be a negative.

• Max-margin: $\max\left[0,\ 1 - \left(f(s, r, t) - f(s, r, t')\right)\right]$. Softer negatives: $(s, r, t')$ only needs to be more negative than $(s, r, t)$.

• Softmax (logistic) loss: $\log\left(1 + \exp\left(-y_i f(s_i, r_i, t_i)\right)\right)$ with $y_i \in \{+1, -1\}$. Negatives are "really" negative.

• The number of negative samples used during training affects performance. See [3].

![Negative Sampling](https://image.slidesharecdn.com/relational-machine-learningupdated-170316094346/75/Relational-machine-learning-44-2048.jpg)
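One way to generate negatives under the closed world assumption is to corrupt the tail of each positive triple, as sketched below. This is a minimal illustration under stated assumptions, not the method of [3]: it corrupts only the tail (corrupting the head is an equally common variant), it filters out corruptions that are themselves known positives, and the helper names `corrupt_tail`, `sample_negatives`, and `logistic_loss` as well as the toy data are hypothetical. The parameter `k` is the number of negatives per positive mentioned in the last bullet.

```python
import math
import random

def corrupt_tail(triple, entities, known_positives, rng):
    """Replace t with a random t' such that (s, r, t') is not a known positive.

    Under the closed world assumption, any such triple is treated as a negative.
    Returns None if every entity is already a known tail for (s, r).
    """
    s, r, _ = triple
    candidates = [e for e in entities if (s, r, e) not in known_positives]
    if not candidates:
        return None
    return (s, r, rng.choice(candidates))

def sample_negatives(positives, entities, k, seed=0):
    """Draw k corrupted triples per positive; k is the tunable number of negatives."""
    rng = random.Random(seed)
    known = set(positives)
    negatives = []
    for triple in positives:
        for _ in range(k):
            neg = corrupt_tail(triple, entities, known, rng)
            if neg is not None:
                negatives.append(neg)
    return negatives

def logistic_loss(score, label):
    """log(1 + exp(-y * f)) with label y in {+1, -1}, computed without overflow."""
    z = -label * score
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))

# Hypothetical toy data.
entities = ["laptop", "phone", "tablet", "monitor"]
positives = [("alice", "purchased", "laptop"), ("bob", "purchased", "phone")]
negatives = sample_negatives(positives, entities, k=2)
print(negatives)
print(logistic_loss(2.0, +1), logistic_loss(2.0, -1))
```

The contrast between the two losses follows from their form: the max-margin loss is zero as soon as the corrupted triple scores at least one margin below its positive, whatever its absolute score, while the logistic loss with $y = -1$ keeps pushing each sampled negative's own score downward.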