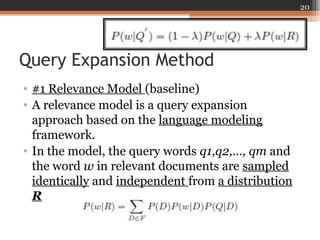

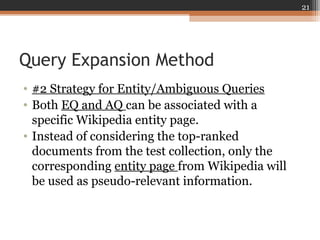

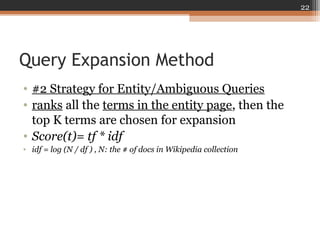

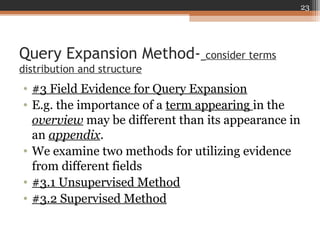

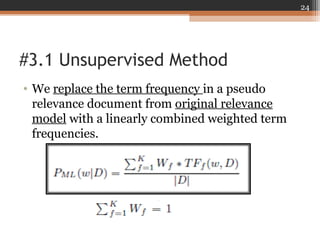

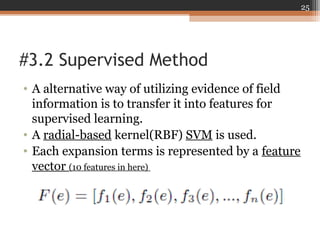

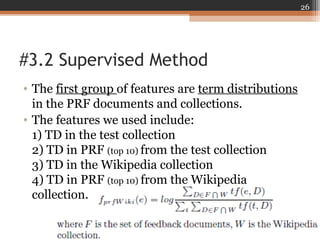

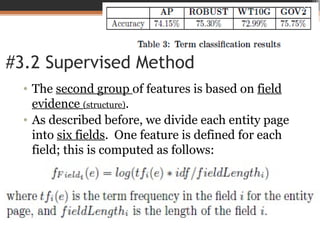

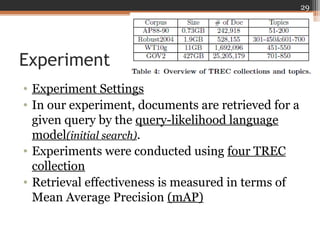

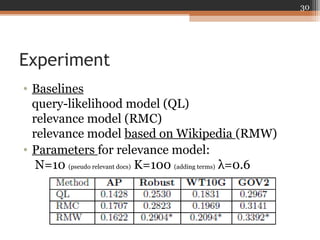

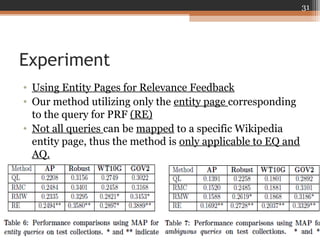

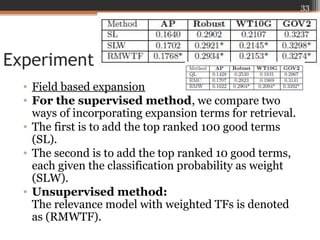

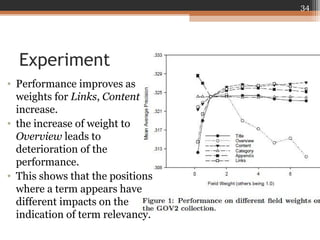

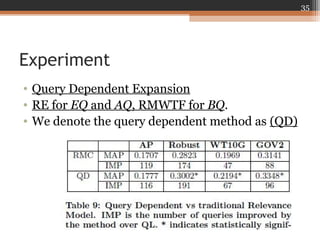

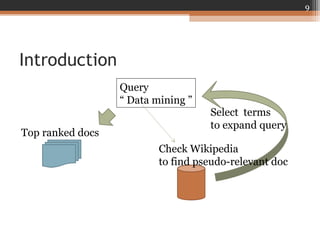

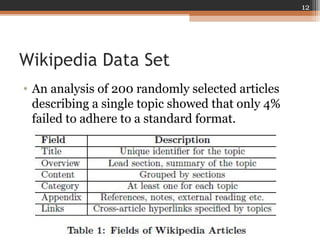

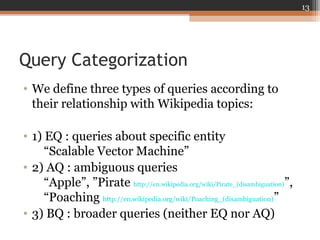

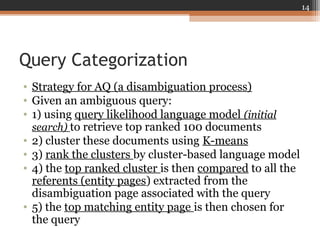

This document summarizes a presentation on using Wikipedia for query expansion in pseudo-relevance feedback (PRF) for information retrieval. The presentation covers three query expansion methods: 1) a baseline relevance model, 2) selection of expansion terms from the Wikipedia entity page for entity and ambiguous queries, and 3) two field-based methods, one unsupervised and one supervised, that consider term distributions and structures. Experiments on TREC collections show that the query-dependent approach, which selects the expansion method according to query type, outperforms the baseline relevance model. Future work could include exploring term selection for broader queries and evaluating on additional datasets.
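To make the baseline concrete, here is a minimal sketch of relevance-model term scoring over a set of feedback documents. It is an illustration rather than the presenters' implementation: the function names `smoothed_prob` and `relevance_model`, the Dirichlet smoothing, and the parameter `mu` are assumptions not stated in the summary. Each candidate term is weighted by its probability in a feedback document multiplied by that document's query likelihood.

```python
import math
from collections import Counter


def smoothed_prob(term, doc_tf, doc_len, coll_tf, coll_len, mu):
    """Dirichlet-smoothed P(term | document); the smoothing choice is an assumption."""
    background = coll_tf.get(term, 0) / coll_len
    return (doc_tf.get(term, 0) + mu * background) / (doc_len + mu)


def relevance_model(query_terms, feedback_docs, coll_tf, coll_len, mu=10.0, top_k=None):
    """Score expansion terms as sum over feedback docs of P(w|D) * P(Q|D).

    feedback_docs is a list of token lists (the top-ranked documents).
    Returns (term, probability) pairs, normalized over all candidate terms.
    """
    scores = Counter()
    for doc in feedback_docs:
        doc_tf = Counter(doc)
        doc_len = len(doc)
        # Query likelihood of this document: product of P(q|D) over query terms.
        q_lik = math.prod(
            smoothed_prob(q, doc_tf, doc_len, coll_tf, coll_len, mu)
            for q in query_terms
        )
        # Each term in the document contributes P(w|D) weighted by P(Q|D).
        for w in doc_tf:
            scores[w] += smoothed_prob(w, doc_tf, doc_len, coll_tf, coll_len, mu) * q_lik
    total = sum(scores.values())
    if total == 0:
        return []
    return [(w, s / total) for w, s in scores.most_common(top_k)]


# Toy usage: two feedback documents for the query "query".
docs = [["wikipedia", "entity", "page", "query"], ["query", "expansion", "wikipedia"]]
coll_tf = Counter(t for d in docs for t in d)
coll_len = sum(coll_tf.values())
expansion_terms = relevance_model(["query"], docs, coll_tf, coll_len, top_k=2)
```

In a query-dependent setup, the highest-scoring terms from a function like this would be added to the original query only for the query types where the relevance model is the chosen method.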

![Query Categorization: 3) rank the clusters by cluster-based language model, as proposed by Lee et al. [19] (slide 15)](https://image.slidesharecdn.com/query-dependent-pseudorelevance-feedback-based-on-wikipedia-160212152441/85/Query-Dependent-Pseudo-Relevance-Feedback-based-on-Wikipedia-15-320.jpg)
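The cluster-ranking step on this slide can be sketched as follows. The slide does not give the details of the method from Lee et al. [19], so this is a generic query-likelihood ranking under a Dirichlet-smoothed cluster language model, not a reproduction of their approach. The function name `rank_clusters`, the parameter `mu`, and the toy data are all hypothetical.

```python
import math
from collections import Counter


def rank_clusters(query_terms, clusters, mu=100.0):
    """Rank clusters by the log-likelihood of the query under each cluster's language model.

    clusters maps a cluster id to a list of documents, each a list of tokens.
    Smoothing against the pooled collection is an assumption of this sketch.
    """
    all_tokens = [t for docs in clusters.values() for doc in docs for t in doc]
    coll_tf = Counter(all_tokens)
    coll_len = len(all_tokens) or 1  # avoid division by zero on empty input

    scored = []
    for cluster_id, docs in clusters.items():
        tf = Counter(t for doc in docs for t in doc)
        cluster_len = sum(tf.values())
        log_lik = 0.0
        for q in query_terms:
            p = (tf[q] + mu * coll_tf[q] / coll_len) / (cluster_len + mu)
            # Floor the probability so unseen query terms do not break log().
            log_lik += math.log(max(p, 1e-12))
        scored.append((cluster_id, log_lik))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy usage: the cluster that mentions the query term should rank first.
toy_clusters = {
    "a": [["apple", "fruit", "apple"]],
    "b": [["java", "code", "language"]],
}
ranking = rank_clusters(["apple"], toy_clusters)
```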