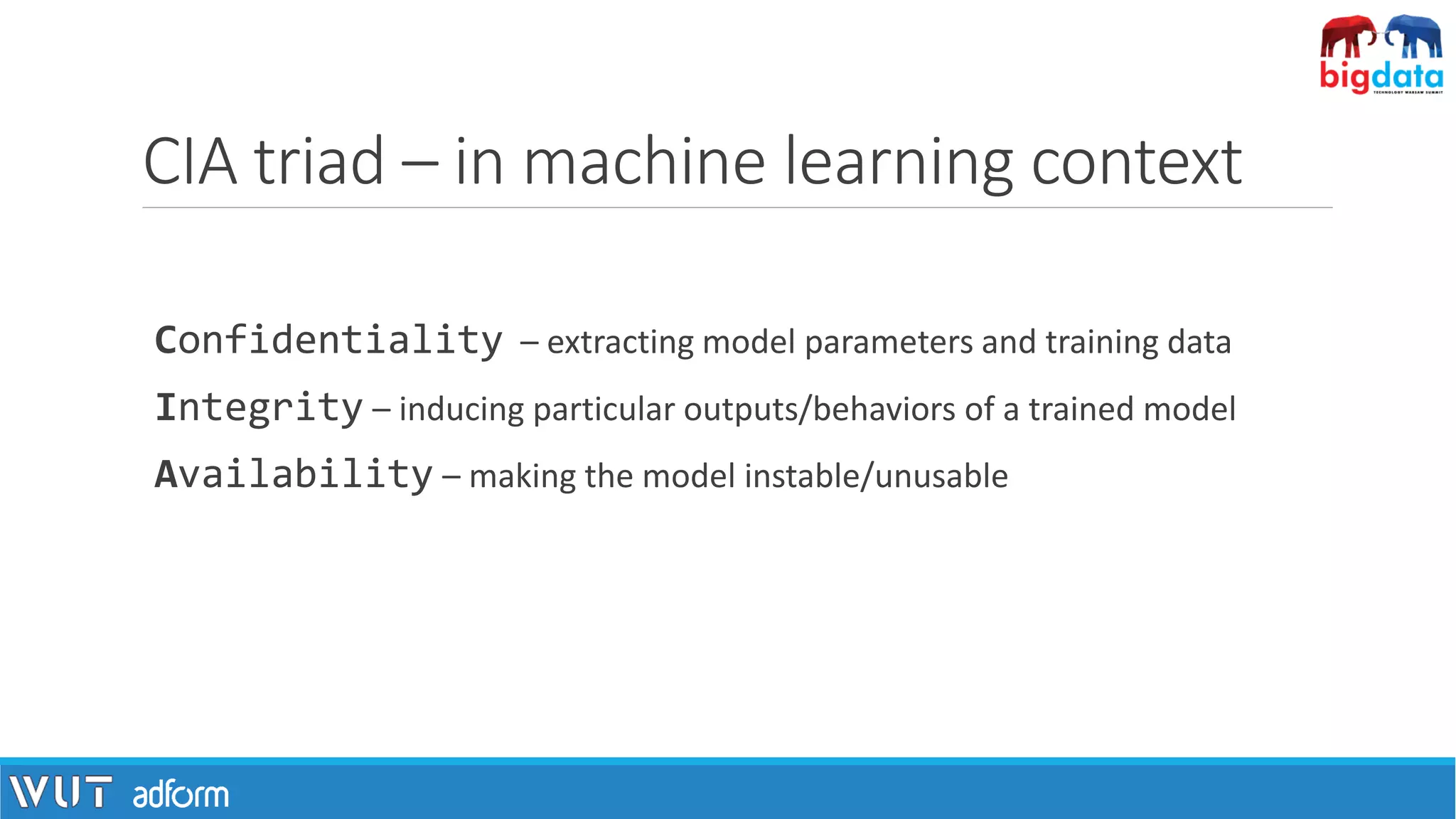

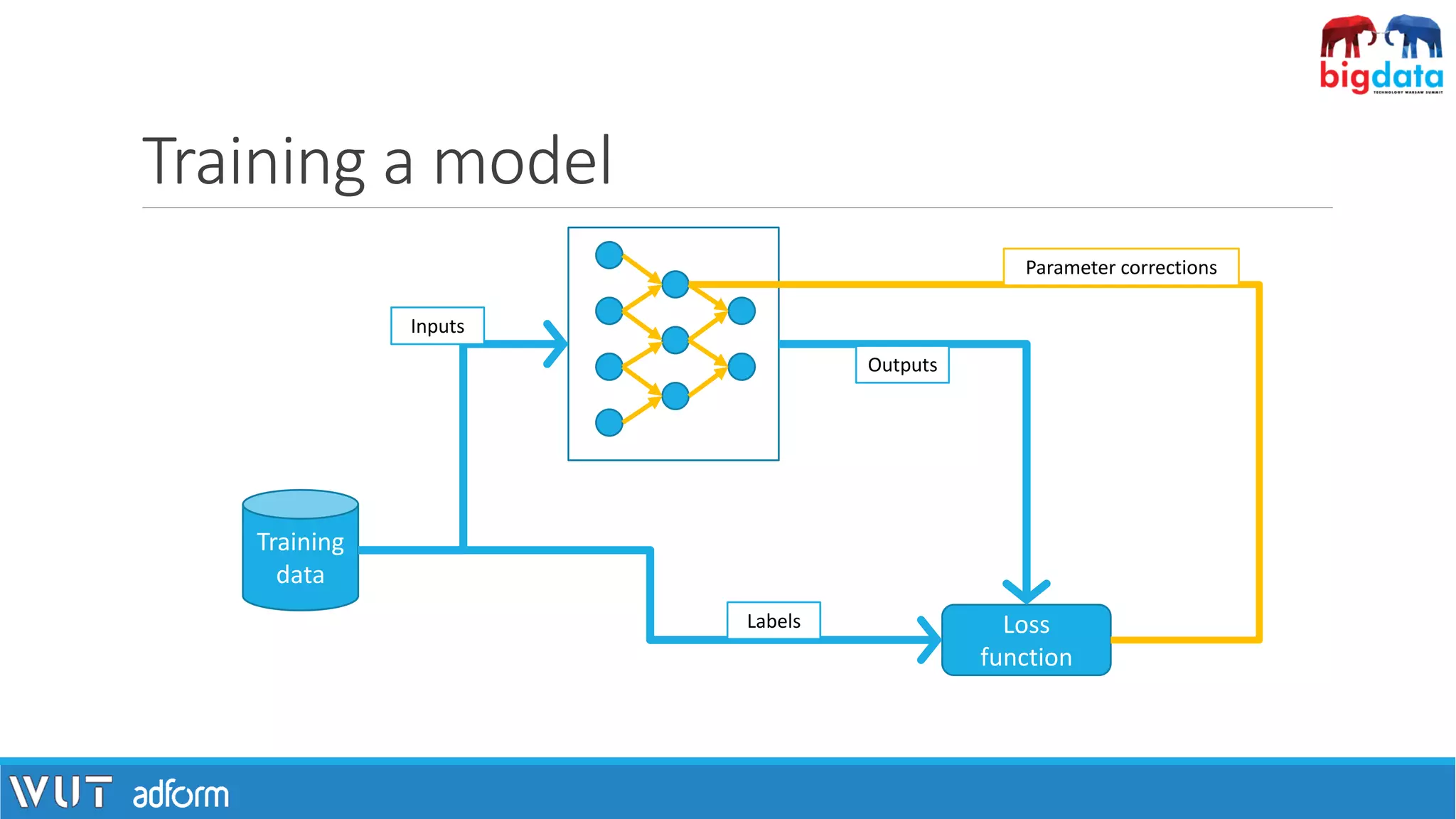

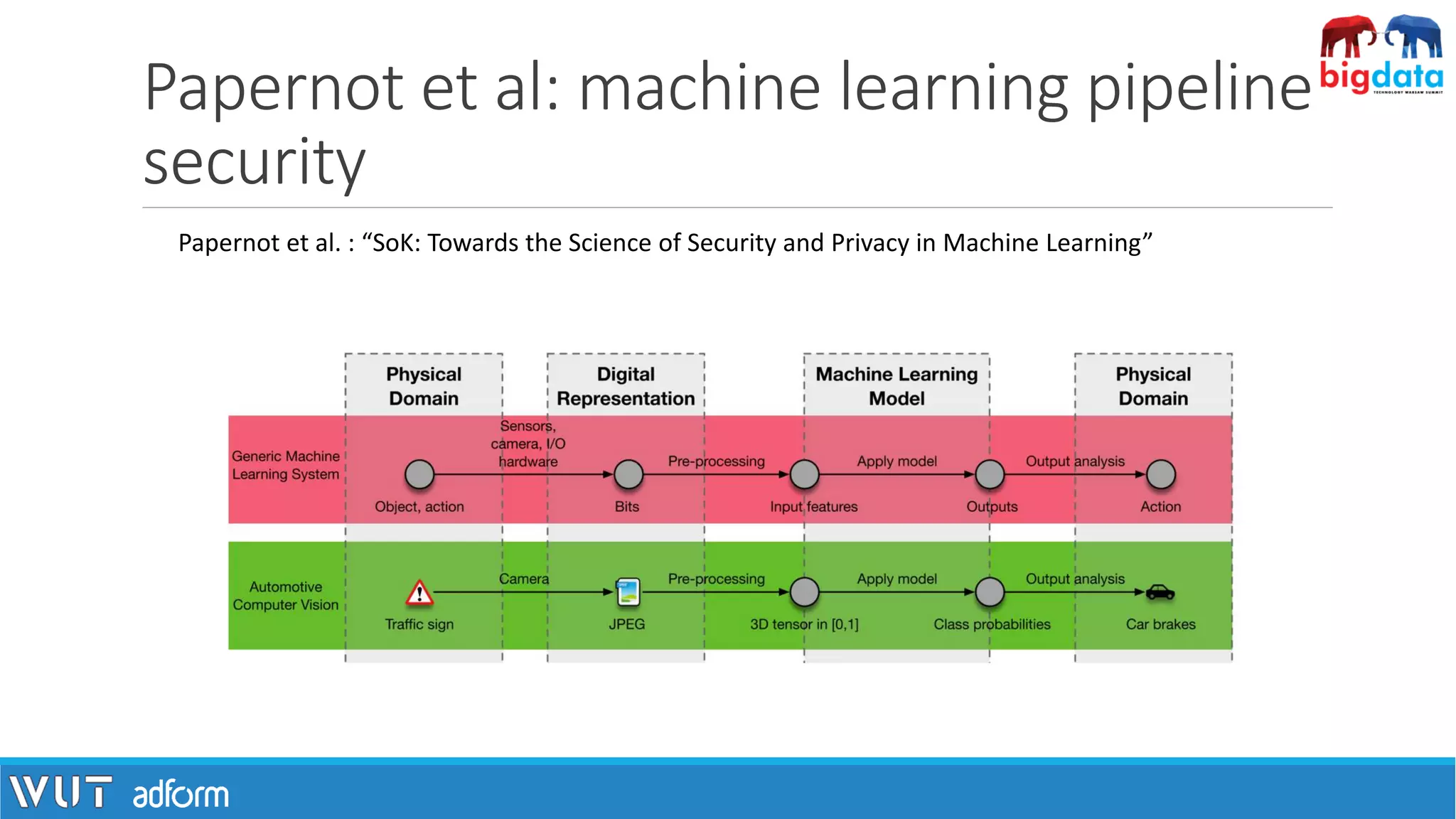

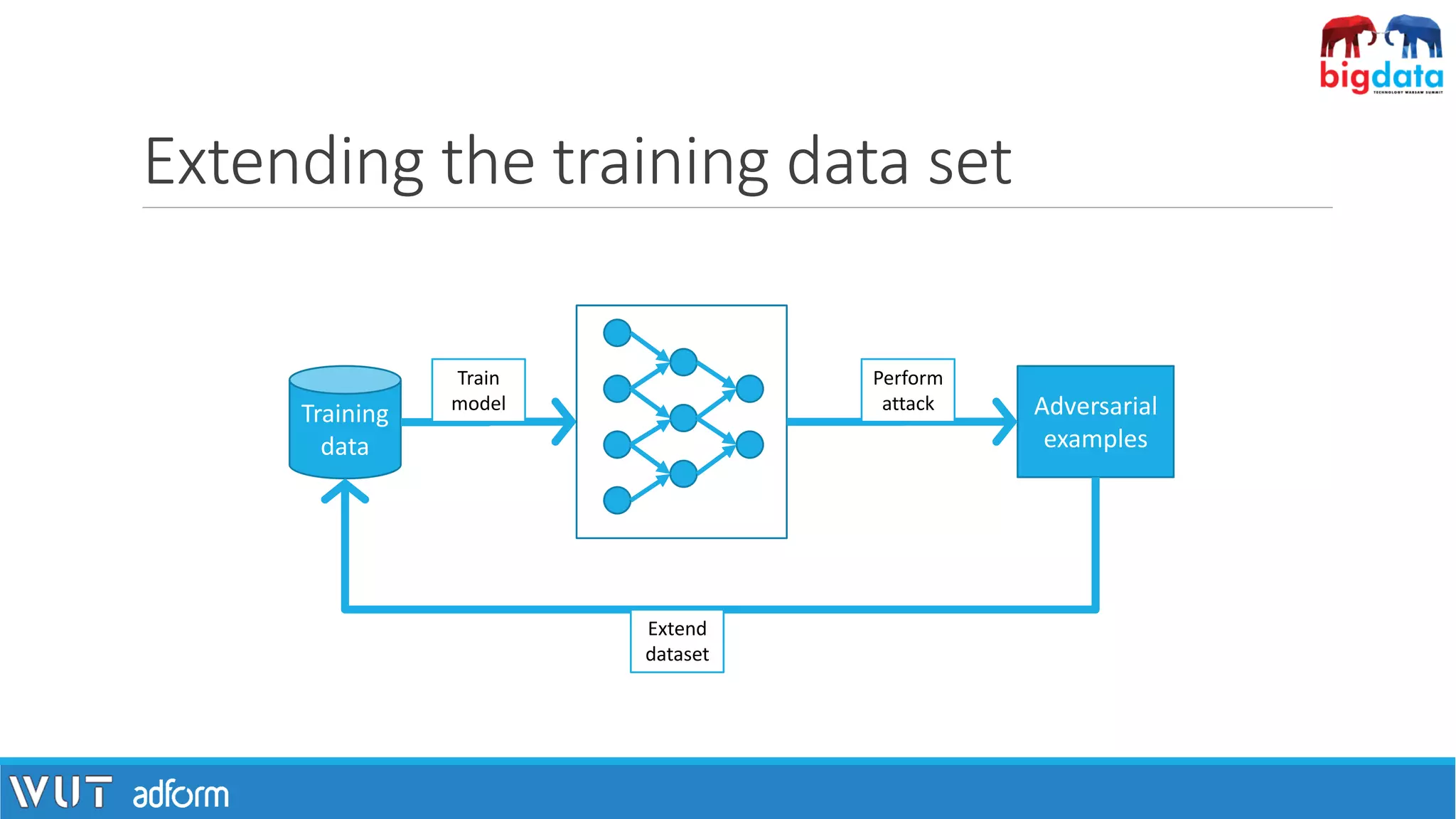

The document discusses the intersection of machine learning and security, highlighting tools like Lyrebird and FakeApp that create realistic digital content. It examines the CIA triad in the context of machine learning, focusing on confidentiality, integrity, and availability, and suggests differential privacy as a potential remedy for data anonymity issues. Additionally, it covers the challenges posed by adversarial examples in machine learning and presents initial defense methods against such attacks.

![AI and machine learning help to create new tools

Some of them make us rethink what is “real”

[1] Image: https://pixabay.com/pl/sztuczna-inteligencja-ai-robota-2228610/](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-2-2048.jpg)

![lyrebird.ai

“Lyrebird allows you to create a digital voice that sounds like you with only one minute of audio.” [1]

[1] Quote & image: https://lyrebird.ai/](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-3-2048.jpg)

![Learning lip sync from audio

[1] Suwajanakorn, Supasorn, Steven M. Seitz, and Ira Kemelmacher-Shlizerman. "Synthesizing obama: learning lip sync from audio." ACM Transactions on Graphics (TOG) 36.4 (2017): 95.

[2] Image: https://youtu.be/9Yq67CjDqvw](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-4-2048.jpg)

![FakeApp

“A desktop app for creating photorealistic faceswap videos made with deep learning” [1]

[1] http://www.fakeapp.org/

[2] Image: Nicolas Cage fake movie compilation: https://youtu.be/BU9YAHigNx8](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-5-2048.jpg)

![ML through the security lens

[1] Image: https://pixabay.com/pl/streszczenie-geometryczny-%C5%9Bwiata-1278059/](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-6-2048.jpg)

![Sharing datasets is tricky

[1] Image: https://www.theguardian.com/world/2018/jan/28/fitness-tracking-app-gives-away-location-of-secret-us-army-bases

A. Narayanan and V. Shmatikov. “Robust de-anonymization of large sparse datasets (how to break anonymity of the Netflix prize dataset)”. IEEE Symposium on Security and Privacy. 2008.](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-9-2048.jpg)

![A possible remedy: differential privacy

• A promise made to a data subject:

“You will not be affected, adversely or otherwise, by allowing your data to be used in any study or analysis, no matter what other studies, data sets, or information sources, are available.” [1]

• Adding randomness helps in protecting individual privacy.

[1] Dwork, C., & Roth, A. (2013). The Algorithmic Foundations of Differential Privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4), 211–407.](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-10-2048.jpg)
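One common way to "add randomness" is the Laplace mechanism described by Dwork and Roth. The sketch below is only an illustration, not something taken from the slides: the function name `laplace_count`, the made-up `ages` data, and the choice of `epsilon=0.5` are all assumptions.

```python
import numpy as np

def laplace_count(values, predicate, epsilon, rng):
    """Release a noisy count of the items matching `predicate`.

    A counting query has sensitivity 1: adding or removing one person
    changes the true count by at most 1, so Laplace noise with scale
    1/epsilon gives epsilon-differential privacy for this single query.
    """
    true_count = sum(1 for v in values if predicate(v))
    noise = rng.laplace(loc=0.0, scale=1.0 / epsilon)
    return true_count + noise

rng = np.random.default_rng(0)
ages = [23, 35, 41, 29, 52, 67, 38, 45]  # made-up data for illustration
noisy = laplace_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(noisy)  # the true count is 4; the released value is randomly offset
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of a less accurate answer.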

![Rapid progress in image recognition

MNIST: 99.79% accuracy [3]

CIFAR-10: 96.53% accuracy [4]

[1] Left image MNIST: https://upload.wikimedia.org/wikipedia/commons/2/27/MnistExamples.png

[2] Right image CIFAR: https://www.cs.toronto.edu/~kriz/cifar.html

[3] Wan, Li, et al. "Regularization of neural networks using dropconnect." International Conference on Machine Learning. 2013.

[4] Graham, Benjamin. "Fractional max-pooling." arXiv preprint arXiv:1412.6071 (2014)](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-14-2048.jpg)

![“Human level” results

“5 days after Microsoft announced it had beat the human benchmark of 5.1% errors with a 4.94% error grabbing neural network, Google announced it had one-upped Microsoft by 0.04%” [1]

[1] https://www.eetimes.com/document.asp?doc_id=1325712](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-15-2048.jpg)

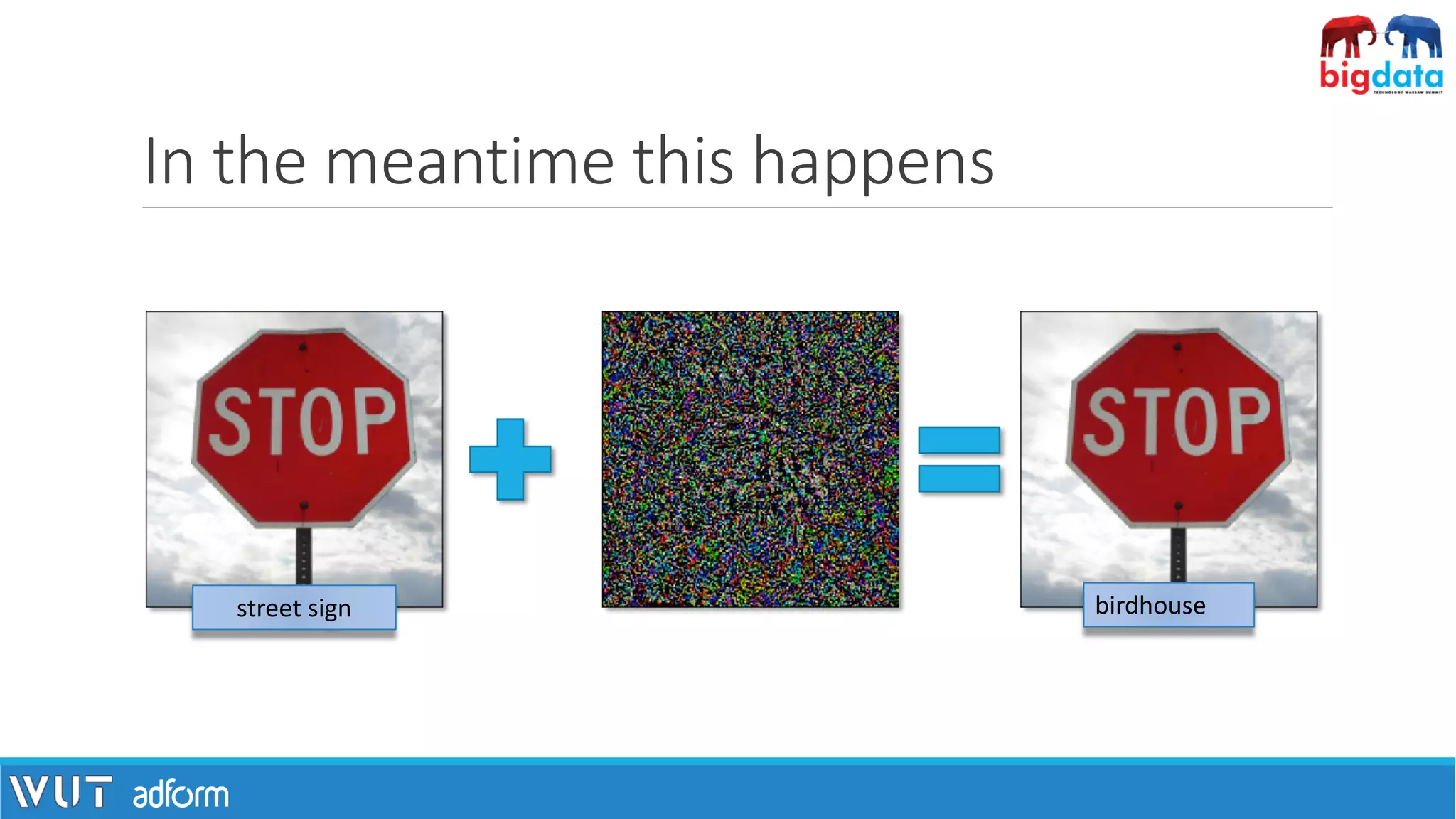

![Adversarial examples

“[…] inputs formed by applying small but intentionally worst-case perturbations […] (which) results in the model outputting an incorrect answer with high confidence” [1]

Goodfellow et al.

[1] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and Harnessing Adversarial Examples”, 2014.](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-17-2048.jpg)

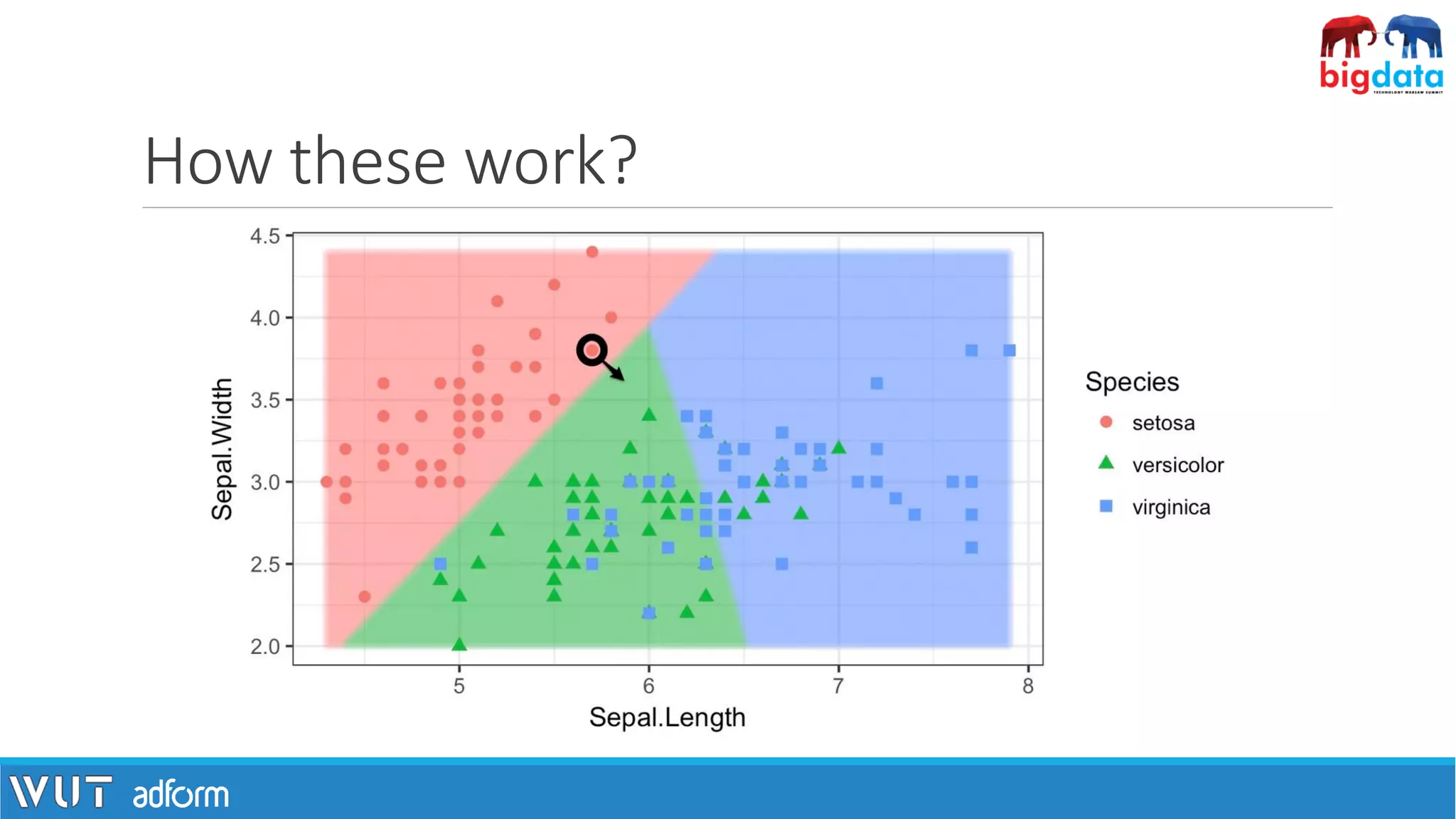

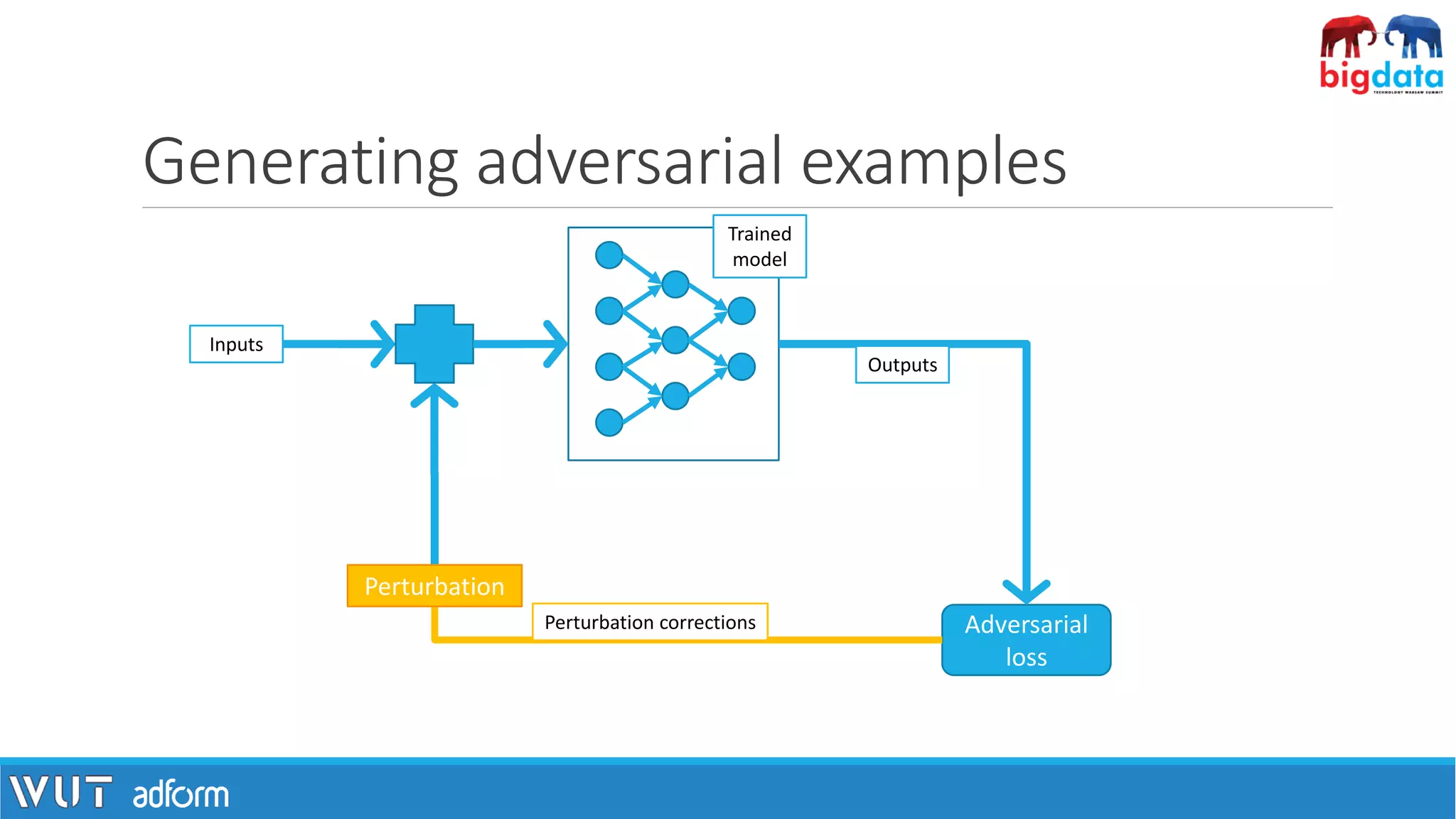

![How do these work?

▪ Given a classifier f(x), we need to find a (minimal) perturbation r for which f(x+r) ≠ f(x).

▪ Finding r can be framed as an optimization task.

[1] Black box https://cdn.pixabay.com/photo/2014/04/03/10/22/black-box-310220_960_720.png

[2] White box https://cdn.pixabay.com/photo/2013/07/12/13/55/box-147574_960_720.png](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-18-2048.jpg)
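For a toy linear classifier the search for a minimal r has a closed-form answer, which makes the idea easy to see. The sketch below assumes a white-box setting (the weights are known) and uses hypothetical names and values (`classify`, `minimal_perturbation`, `w`, `b`, `x`); real attacks on deep networks solve the same problem iteratively.

```python
import numpy as np

def classify(w, b, x):
    """Toy binary linear classifier: returns 1 if w.x + b > 0, else 0."""
    return int(np.dot(w, x) + b > 0)

def minimal_perturbation(w, b, x, overshoot=1e-3):
    """Smallest L2 perturbation r that moves x across the decision boundary.

    For a linear model the closest boundary point lies along w, so the
    optimization has a closed-form solution. The small overshoot pushes
    x + r just past the boundary so that the predicted label flips.
    """
    margin = np.dot(w, x) + b
    r = -(margin / np.dot(w, w)) * w
    return (1.0 + overshoot) * r

w = np.array([2.0, -1.0])  # hypothetical weights
b = 0.5                    # hypothetical bias
x = np.array([1.0, 1.0])   # input to attack

r = minimal_perturbation(w, b, x)
print(classify(w, b, x), classify(w, b, x + r), np.linalg.norm(r))
```

In the black-box setting the attacker cannot read `w` and `b` and has to estimate the direction of r from queries to the model instead.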

![One step further: adversarial patch

[1] Brown, T. B., Mané, D., Roy, A., Abadi, M., & Gilmer, J. (n.d.). “Adversarial Patch”

toaster](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-22-2048.jpg)

![Two steps further: adversarial object

Trained model → Adversarial attack → Adversarial 3D model → 3D printing

[1] Athalye, A., Engstrom, L., Ilyas, A., & Kwok, K. (2017). Synthesizing Robust Adversarial Examples.

[2] Images: http://www.labsix.org/physical-objects-that-fool-neural-nets/](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-23-2048.jpg)

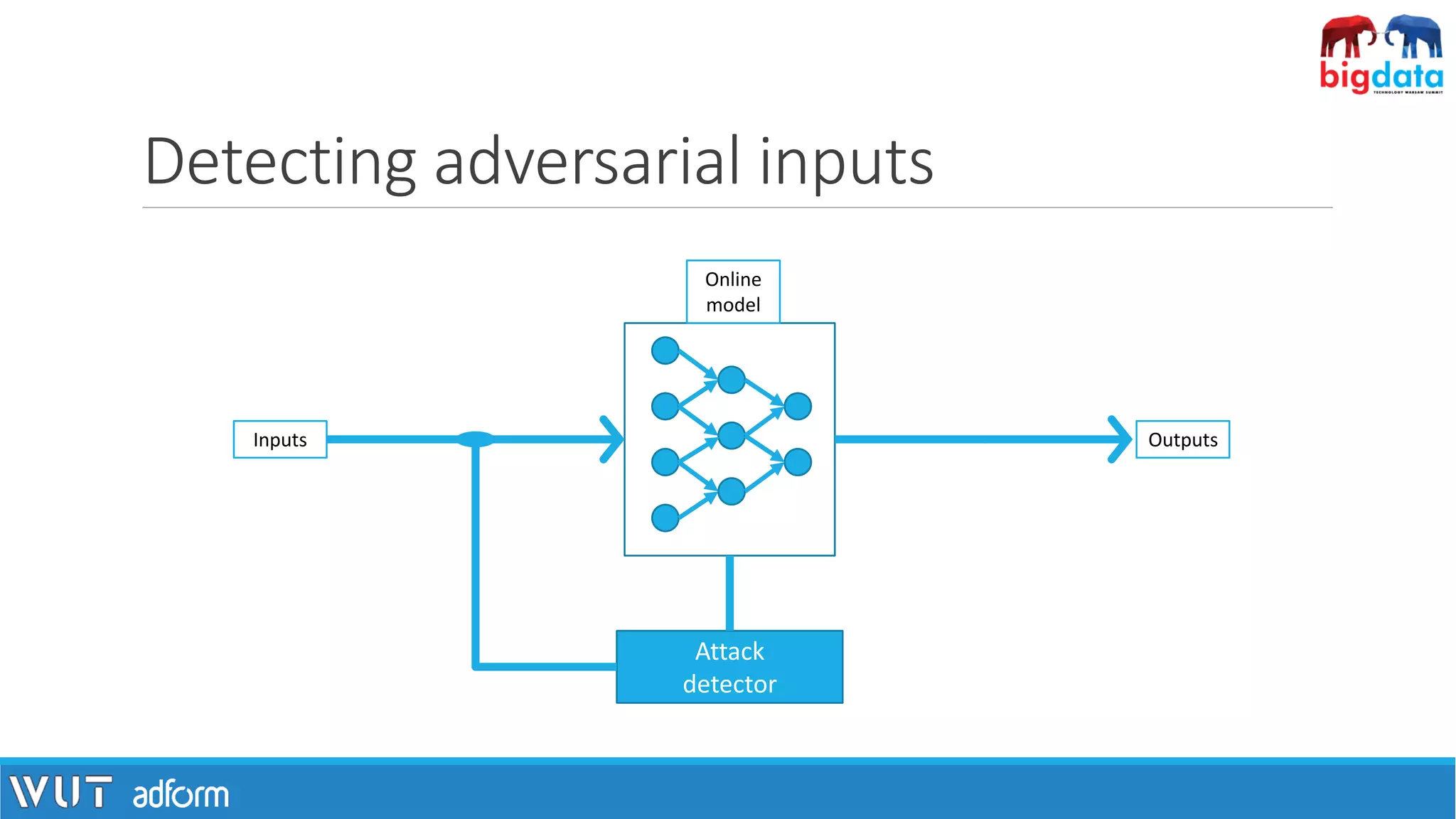

![Defense methods – first attempts

• Gradient masking.

• Defensive distillation.

[1] Image: http://cdn.emgn.com/wp-content/uploads/2016/01/society-will-fail-emgn-16.jpg](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-25-2048.jpg)
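Defensive distillation trains a second model on "soft" labels produced by a softmax evaluated at a high temperature. The sketch below shows only that softening step, as an illustration; the function name, the logits, and the temperature of 20 are assumptions, not values from the slides.

```python
import numpy as np

def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits divided by a temperature.

    Higher temperatures flatten the distribution, producing the soft
    labels on which a distilled model would be trained.
    """
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max()  # subtract the maximum for numerical stability
    e = np.exp(z)
    return e / e.sum()

logits = [8.0, 2.0, 0.5]  # hypothetical outputs of a first model
hard = softmax_with_temperature(logits, temperature=1.0)
soft = softmax_with_temperature(logits, temperature=20.0)
print(hard)  # almost all probability mass on the first class
print(soft)  # same ranking of classes, but much flatter
```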

![Conclusions

[1] http://maxpixel.freegreatpicture.com/](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-29-2048.jpg)

![“In the history of science and technology, the engineering artifacts have almost always preceded the theoretical understanding […] if you are not happy with our understanding of the methods you use everyday, fix it” [2]

Yann LeCun

[1] http://maxpixel.freegreatpicture.com/

[2] Comment on Ali Rahimi's "Test of Time" award talk at NIPS](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-30-2048.jpg)

![Thank you for your attention!

On a side note: we’re hiring! ☺

[1] http://maxpixel.freegreatpicture.com/](https://image.slidesharecdn.com/pawelzawistowski-180323120343/75/Machine-learning-security-Pawel-Zawistowski-Warsaw-University-of-Technology-Adform-31-2048.jpg)