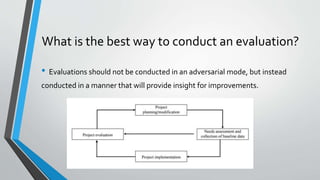

This document summarizes chapters 1 and 2 of "The 2010 User-Friendly Handbook for Project Evaluation" by Joy Frechtling. It discusses why evaluations are worth conducting: they help a project improve, and they can yield insights no one anticipated. It describes two types of evaluation. Formative evaluations provide ongoing feedback while a project is under way, and summative evaluations assess the overall success of a completed project. Formative evaluations in turn include implementation evaluations, which check that the project is proceeding as planned, and progress evaluations, which check that it is meeting its goals. The document also contrasts program evaluations with project evaluations.