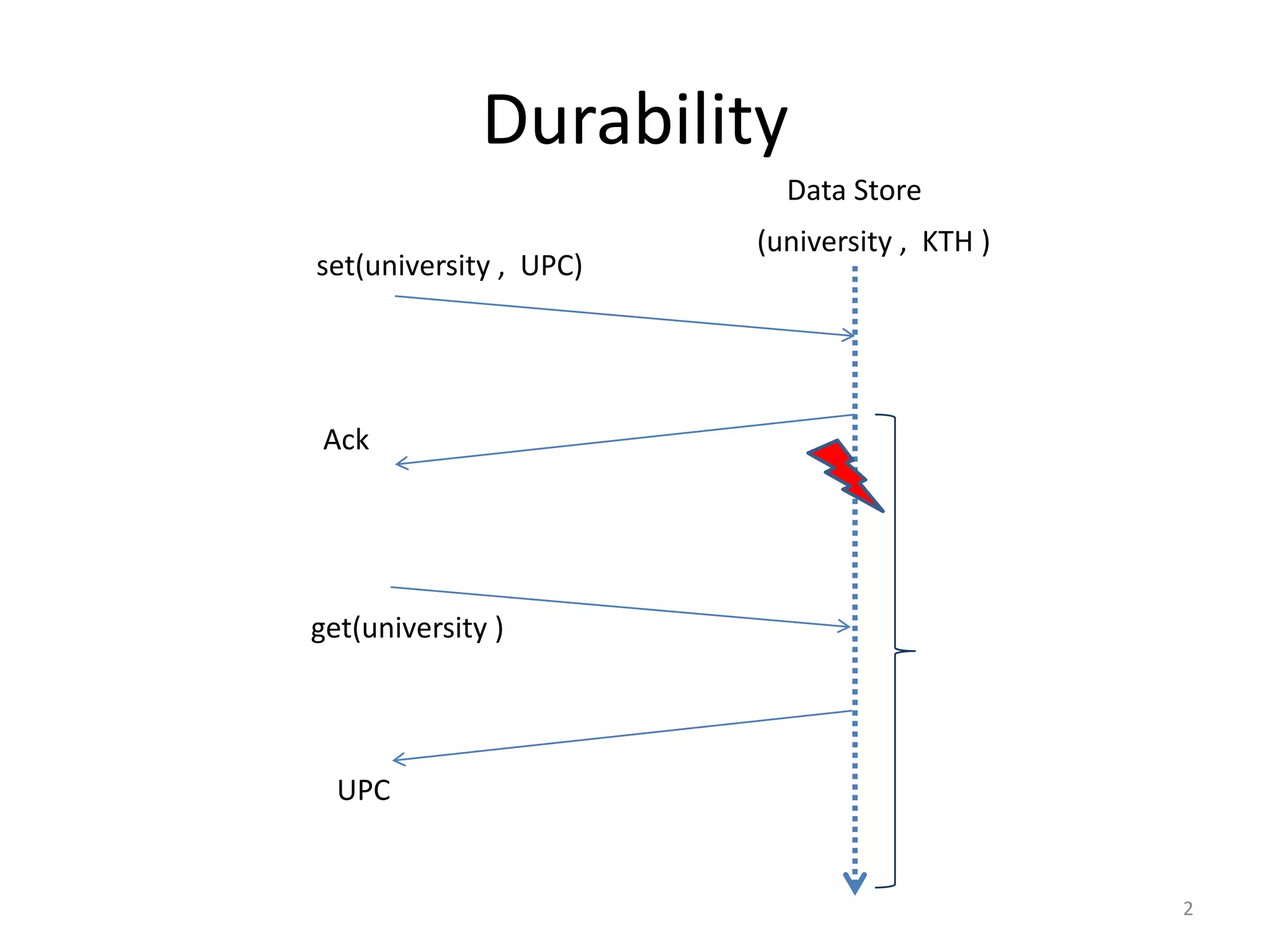

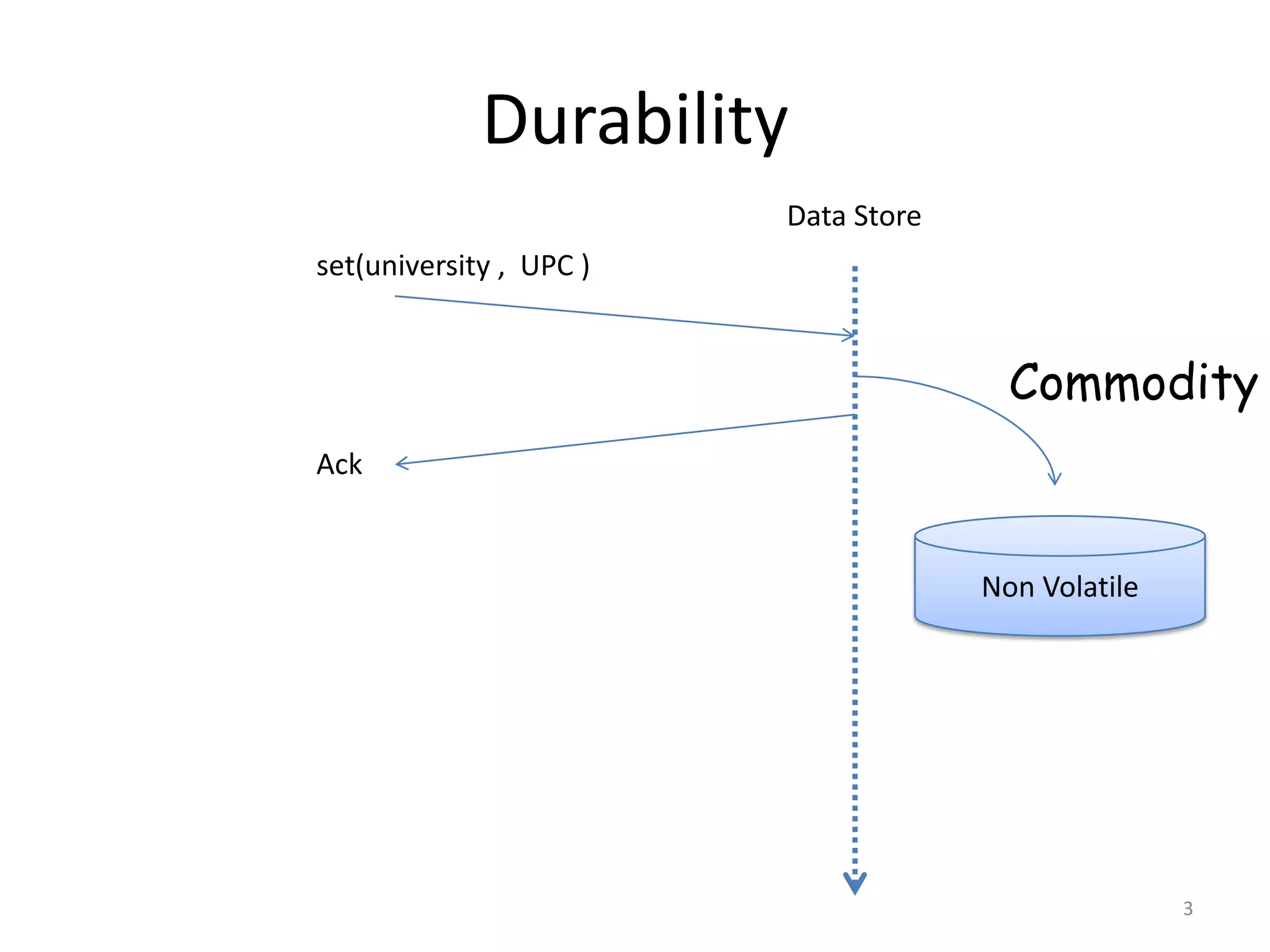

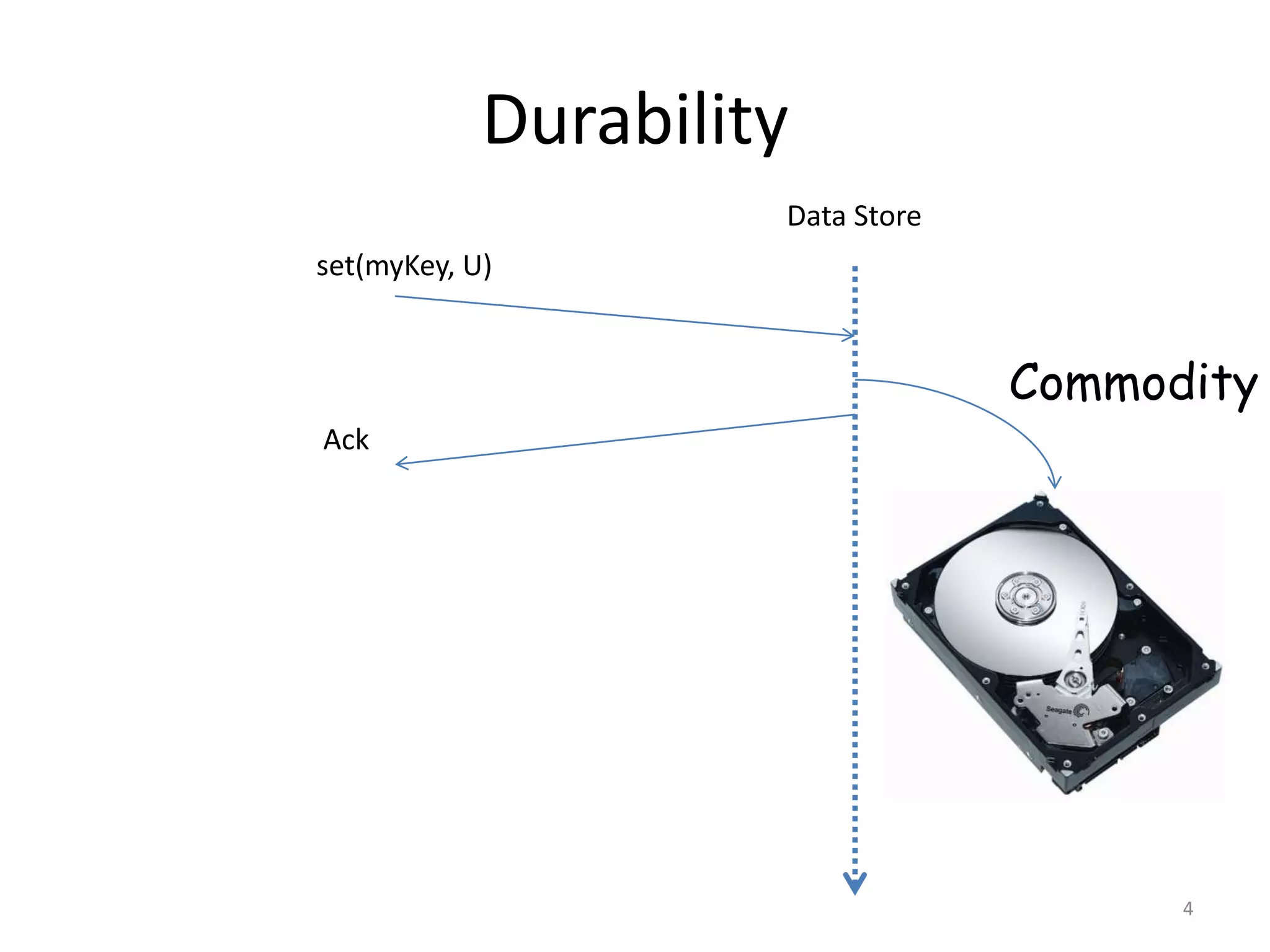

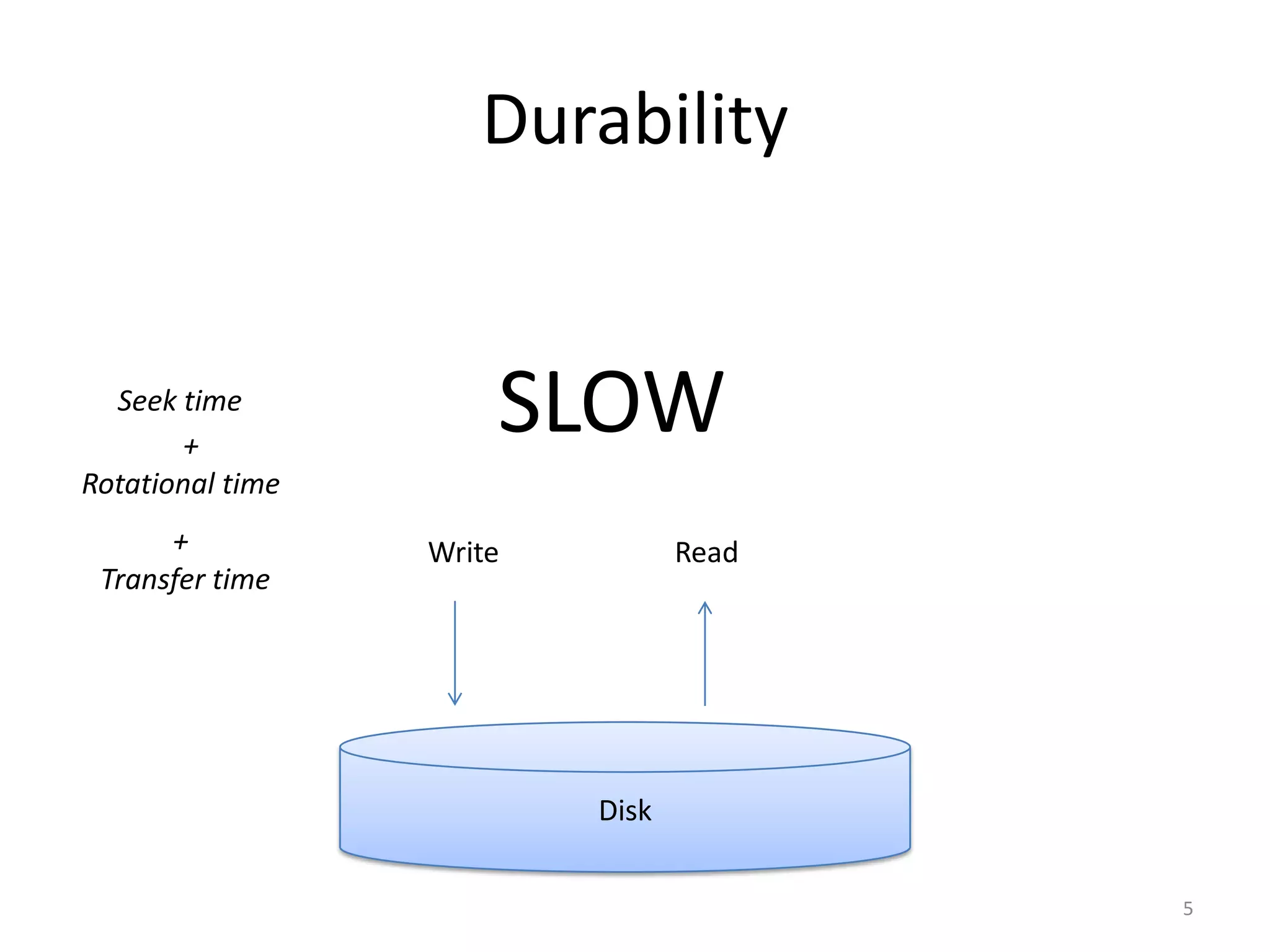

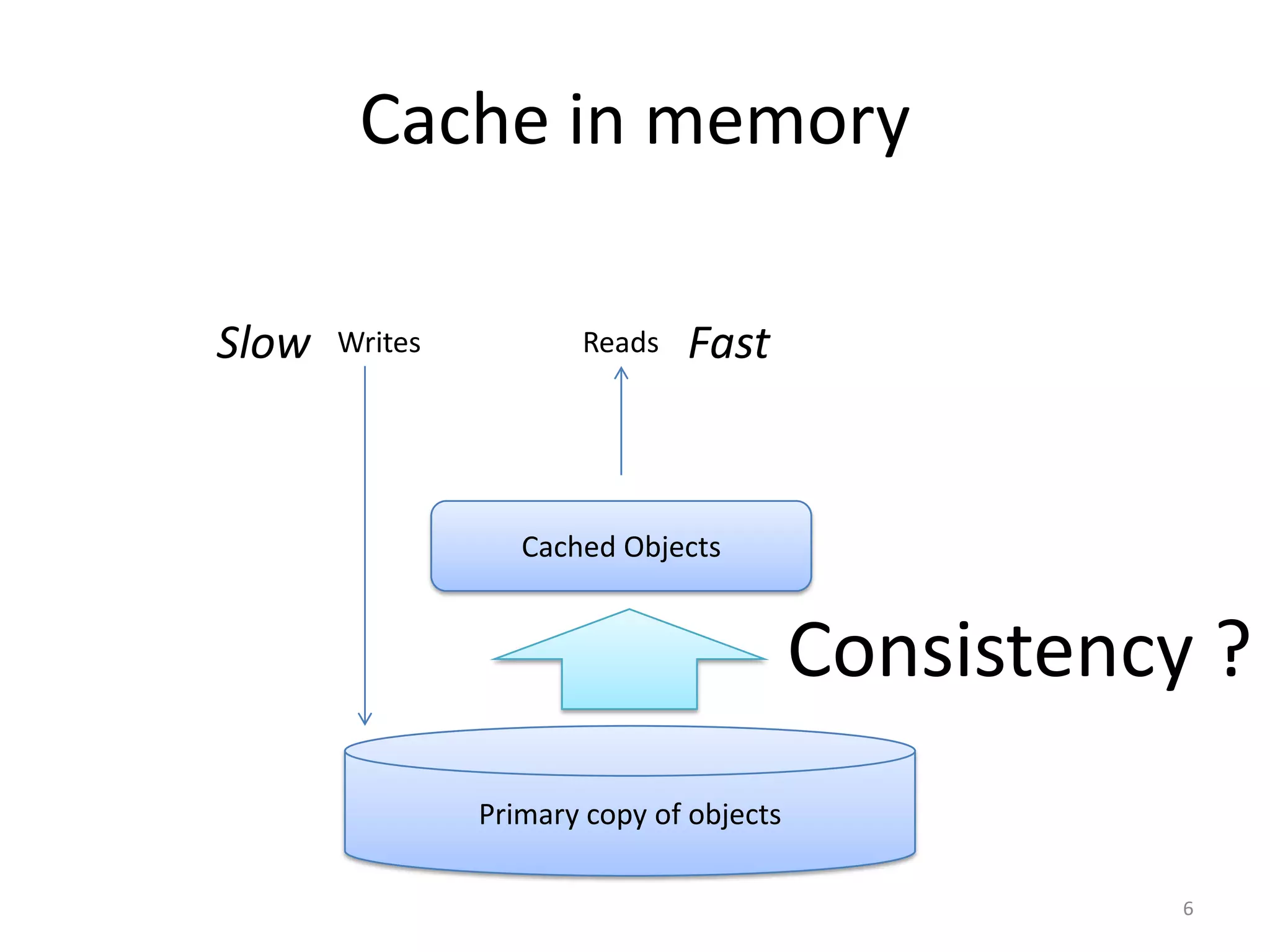

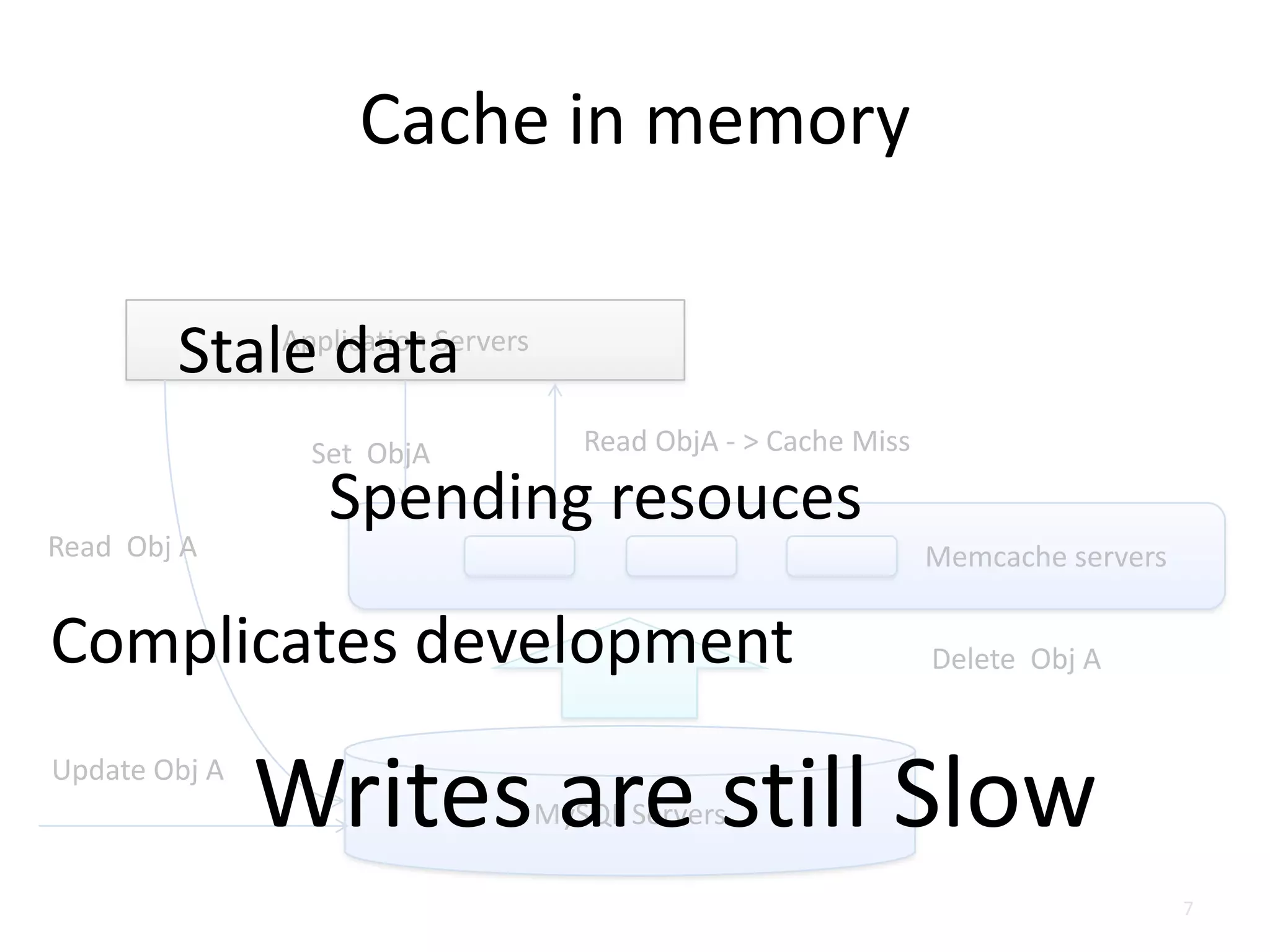

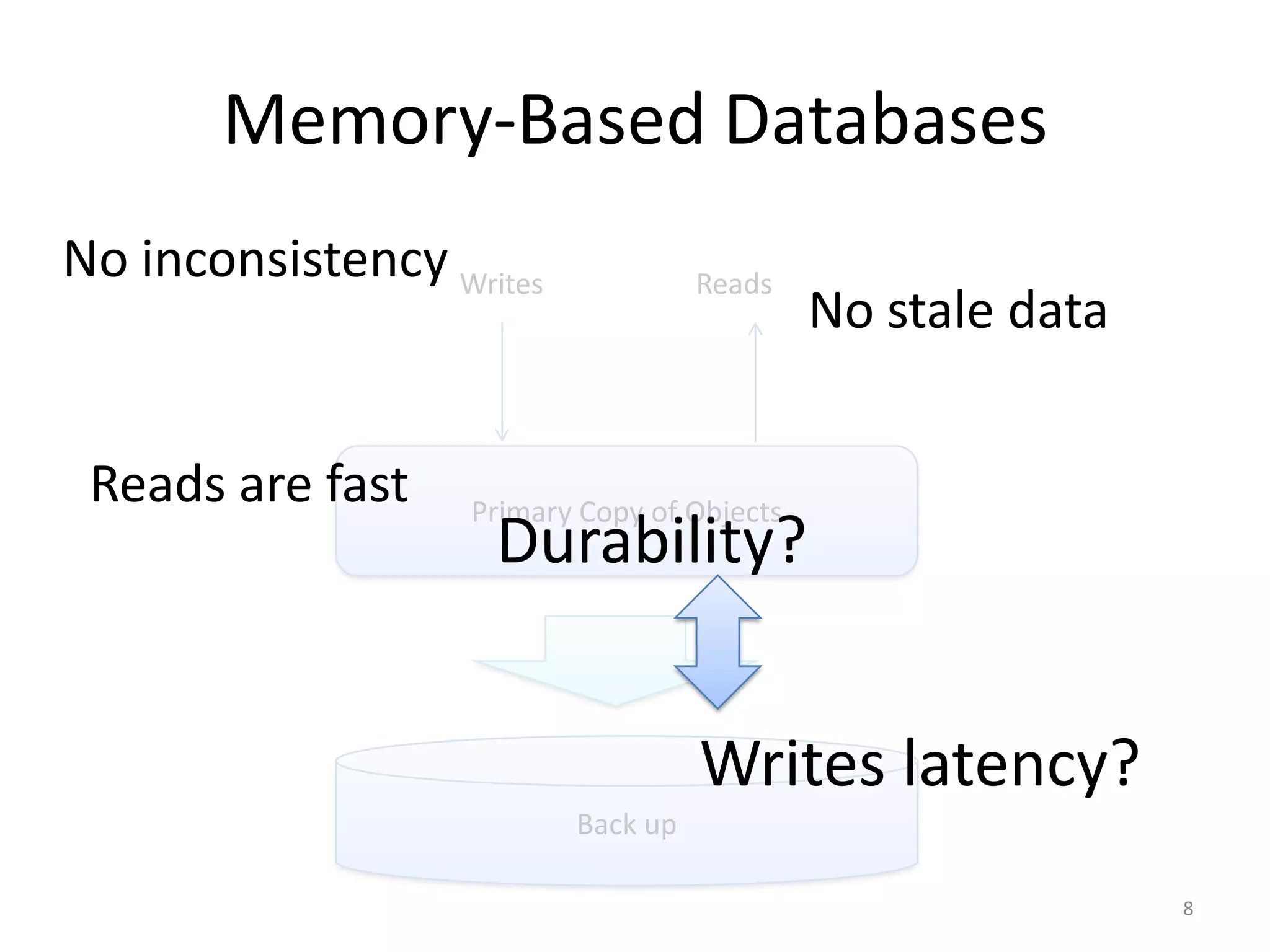

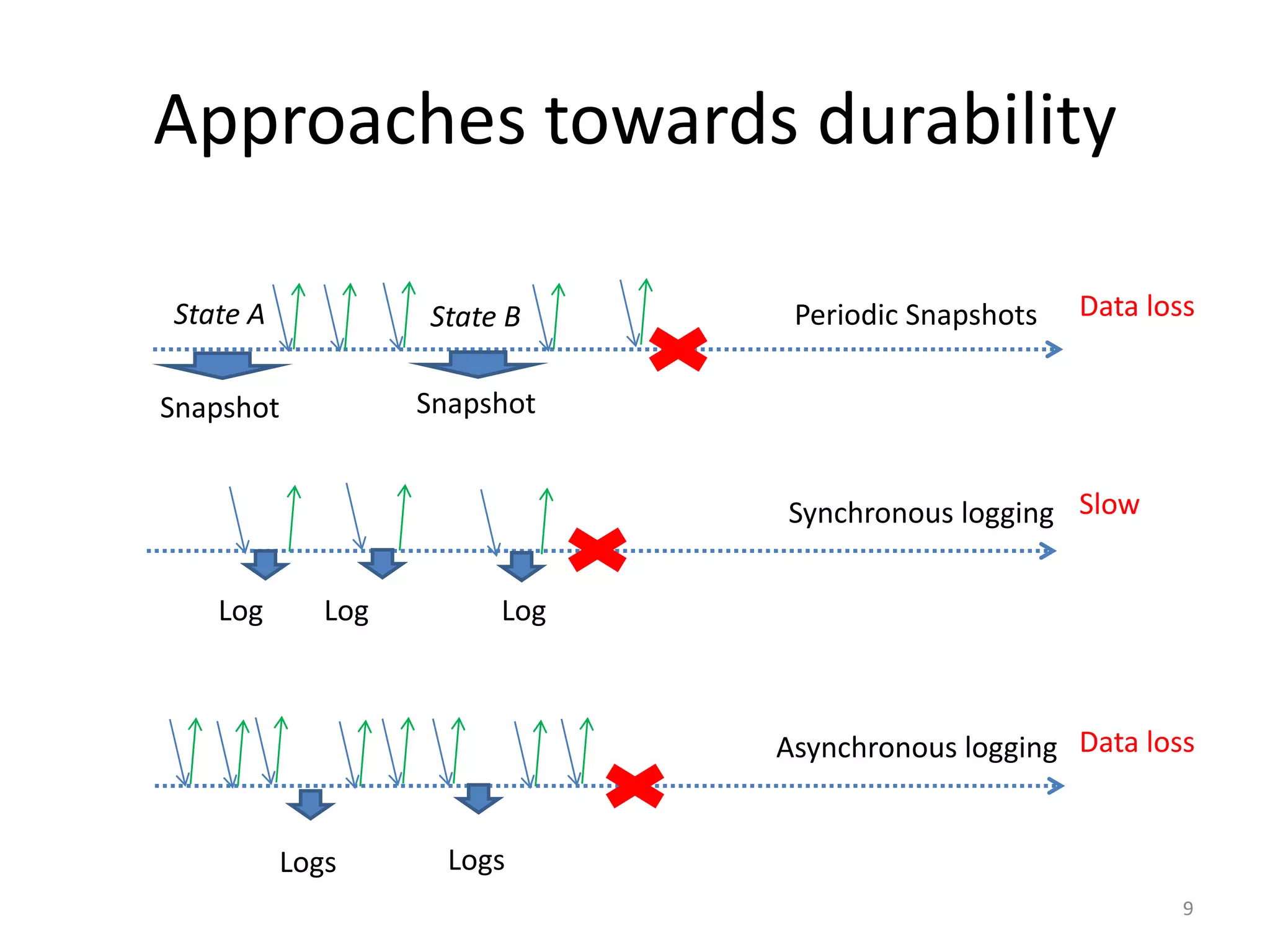

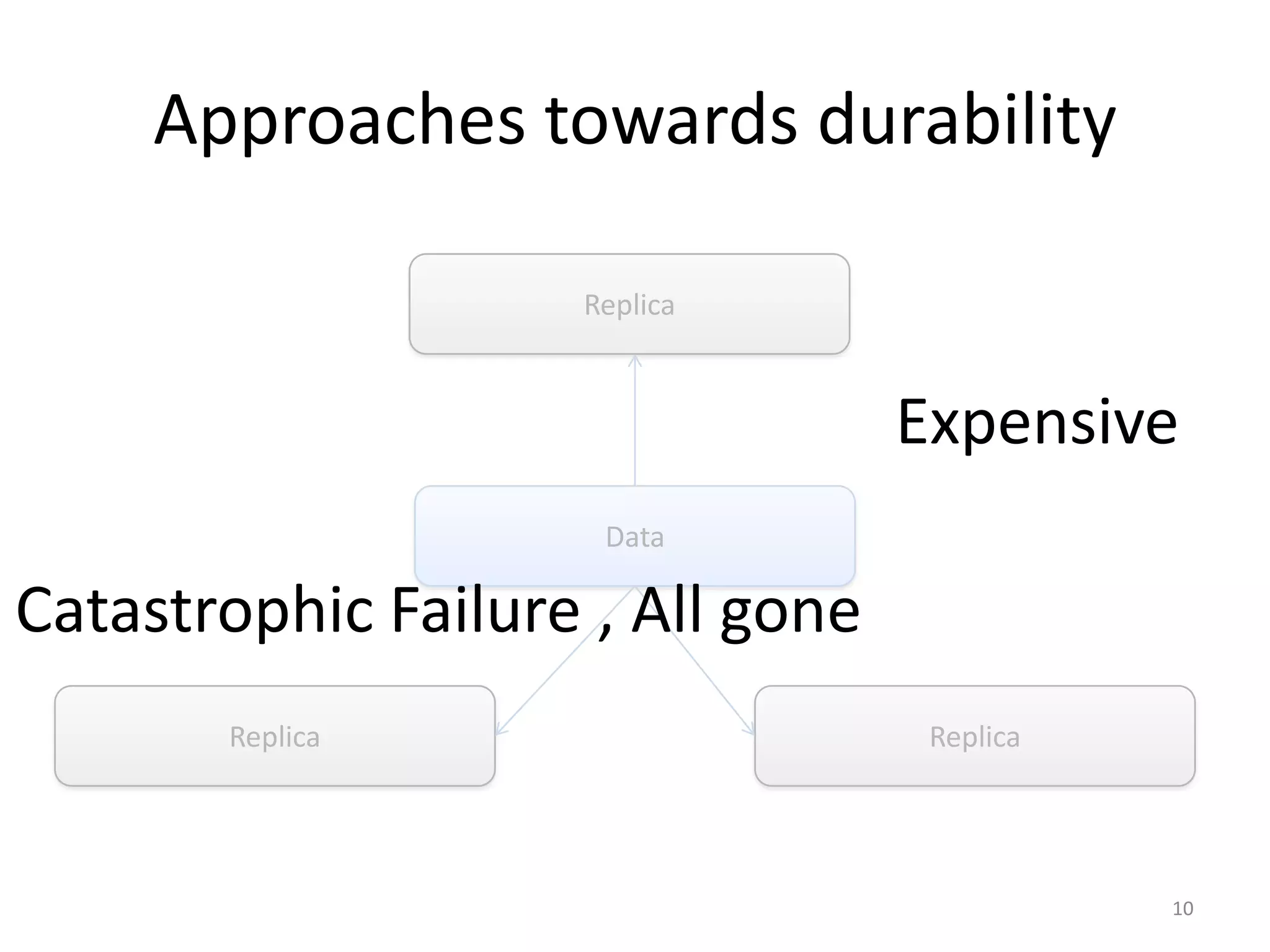

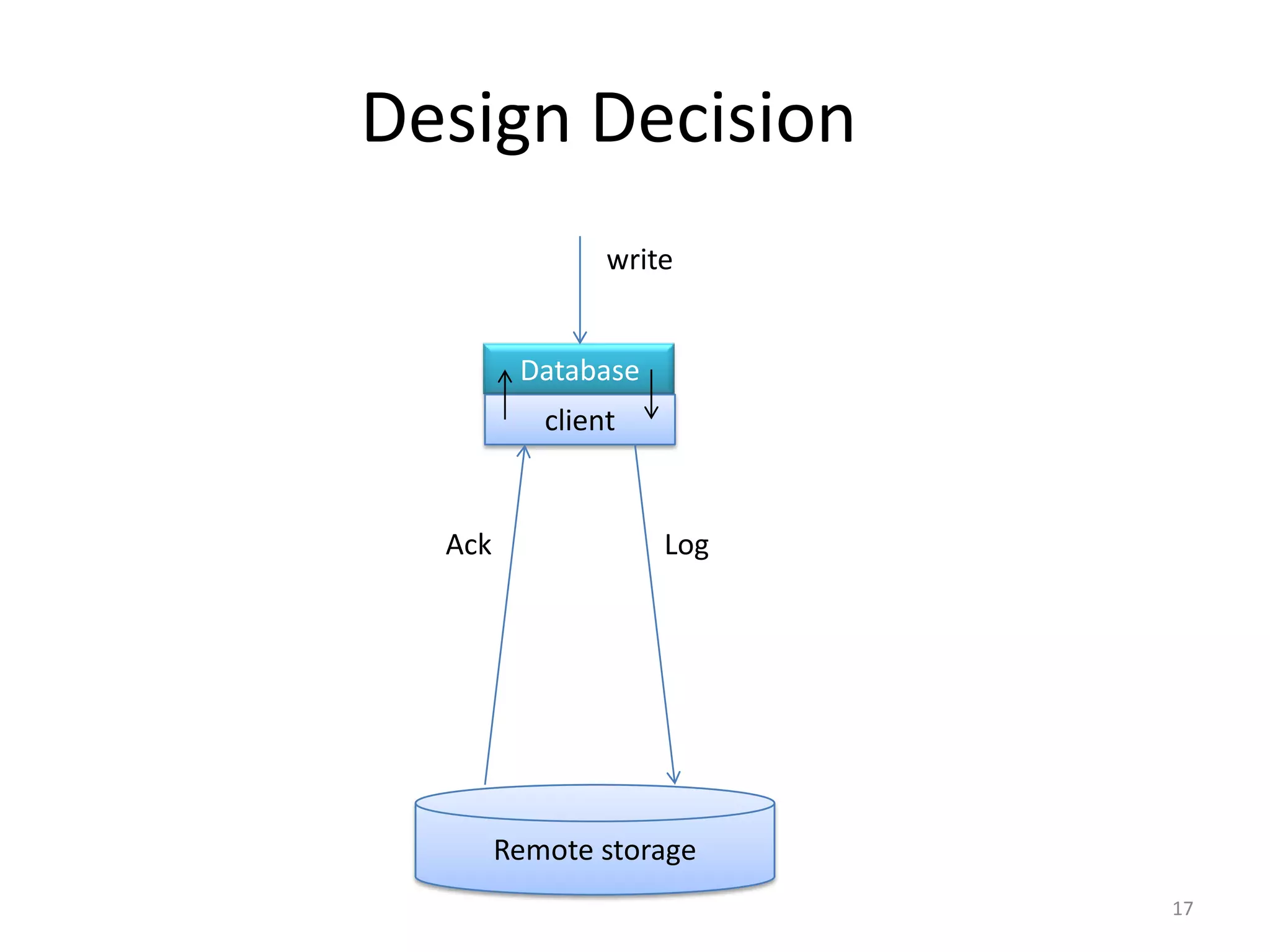

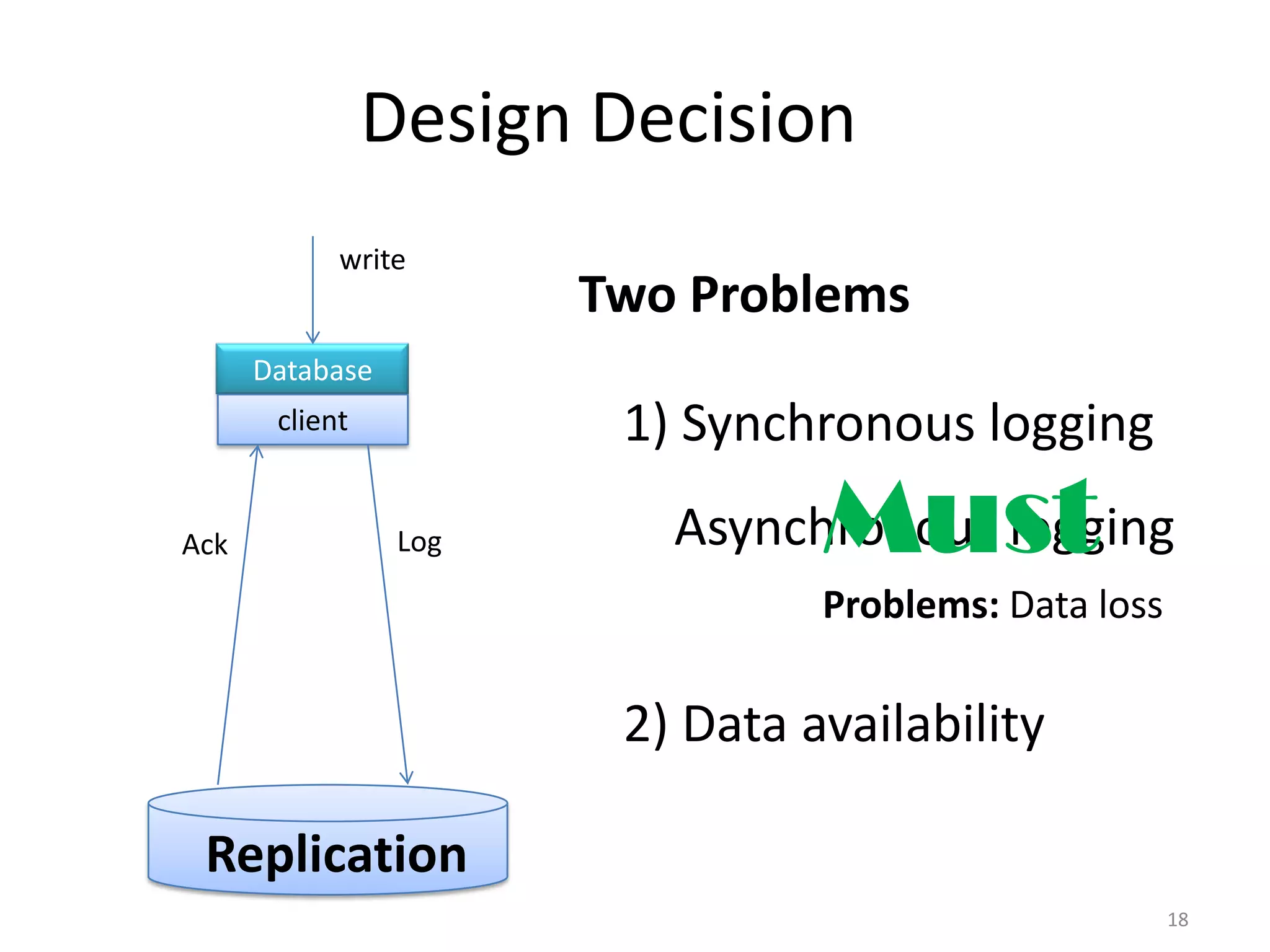

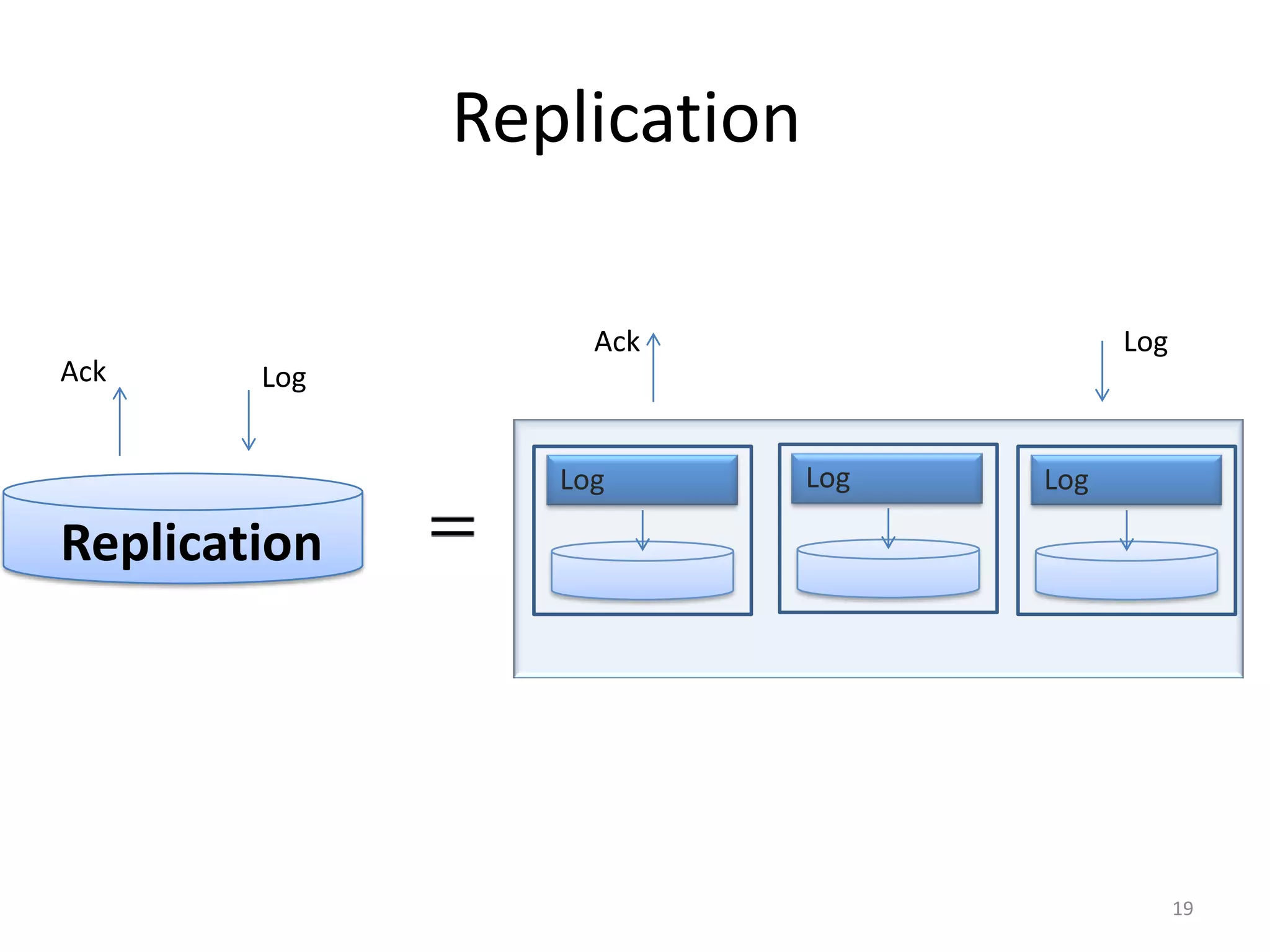

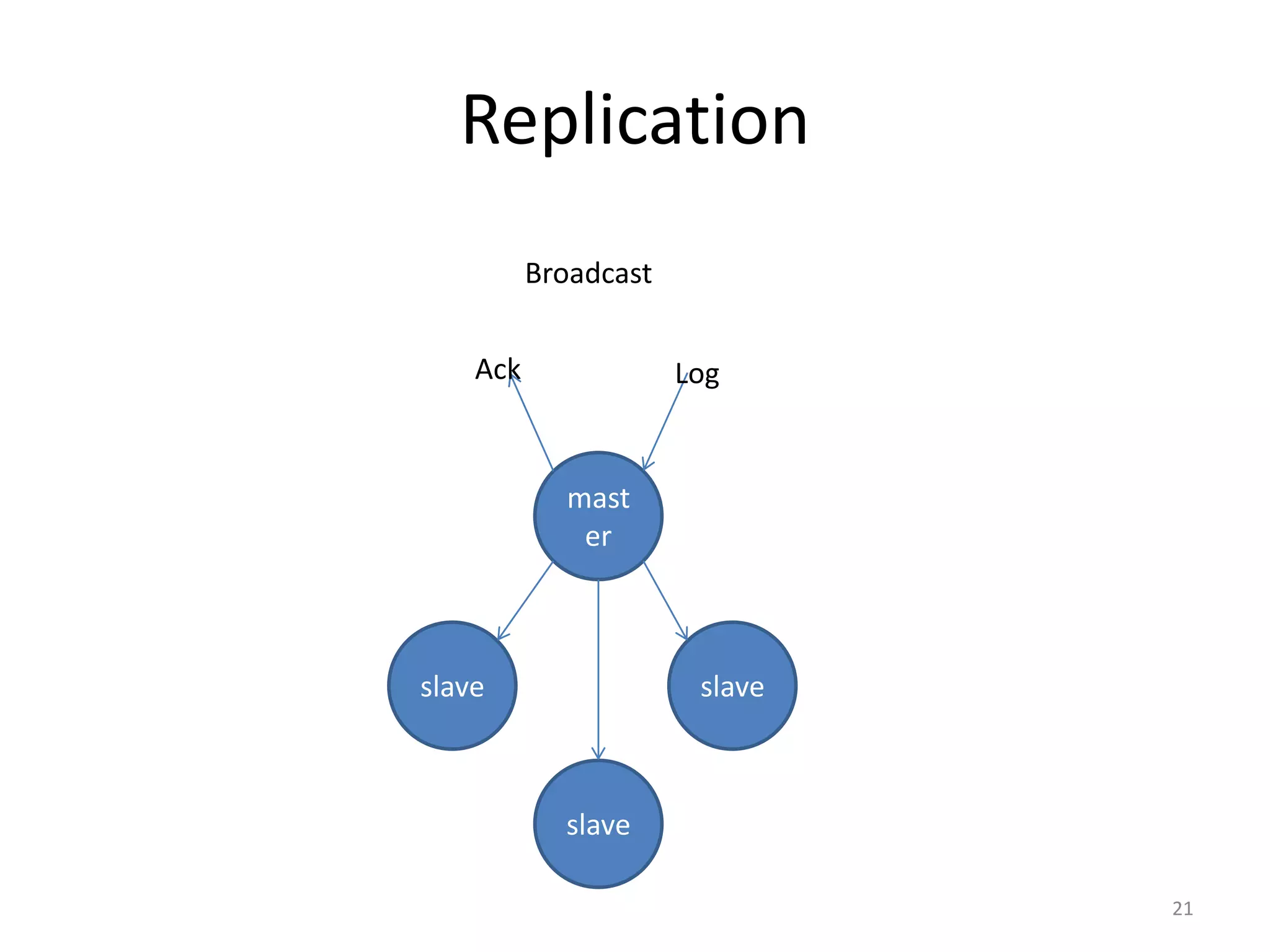

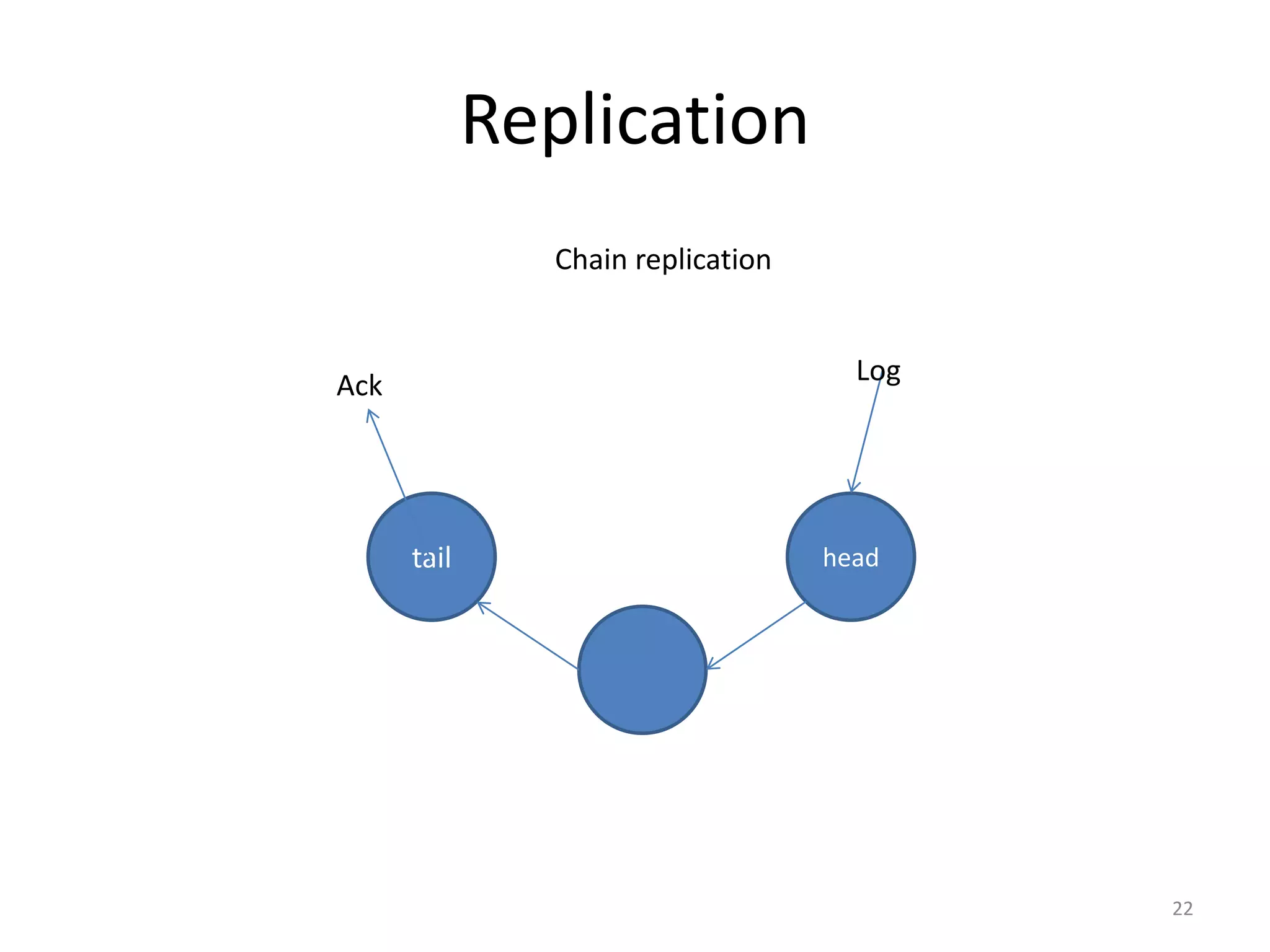

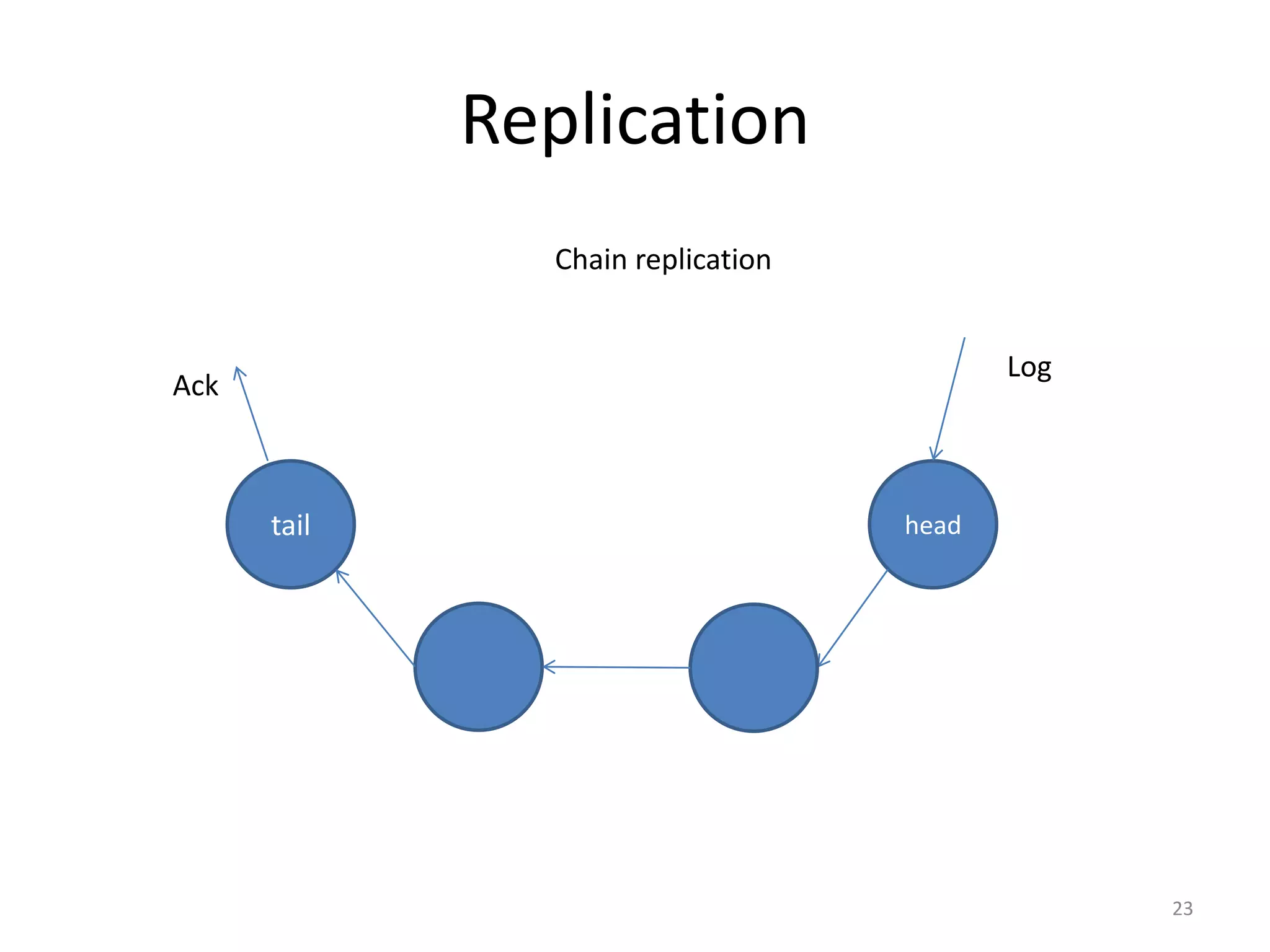

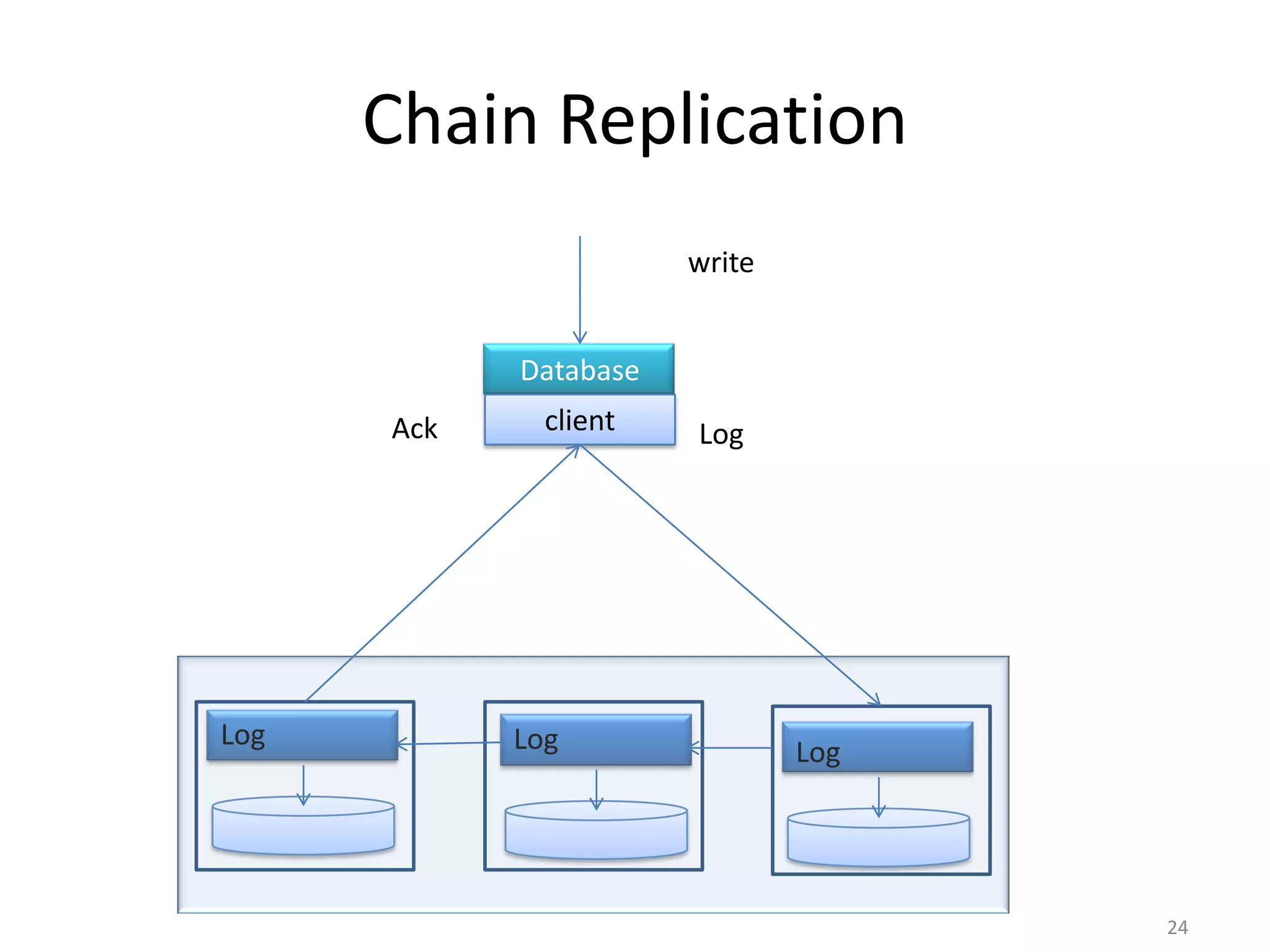

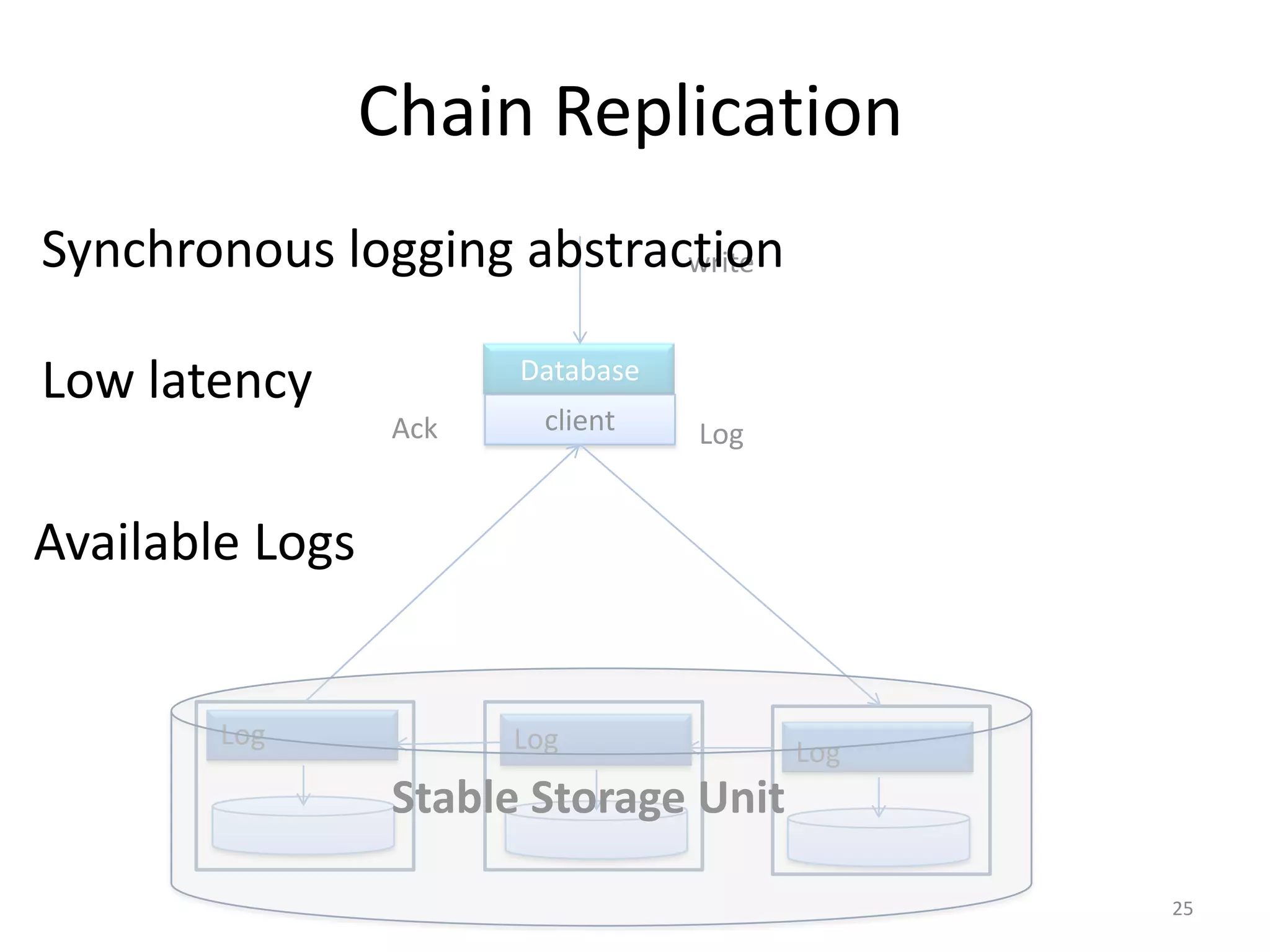

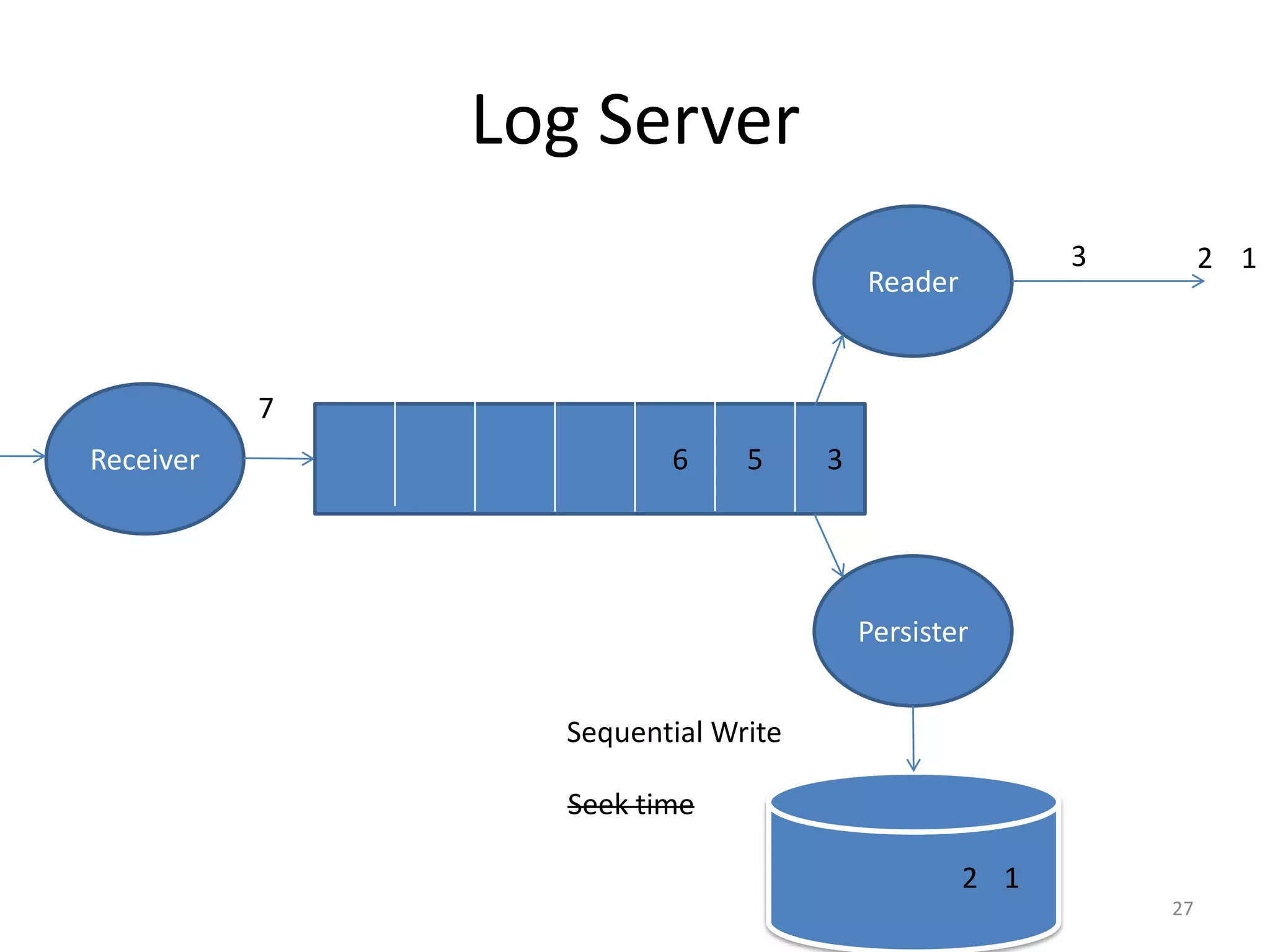

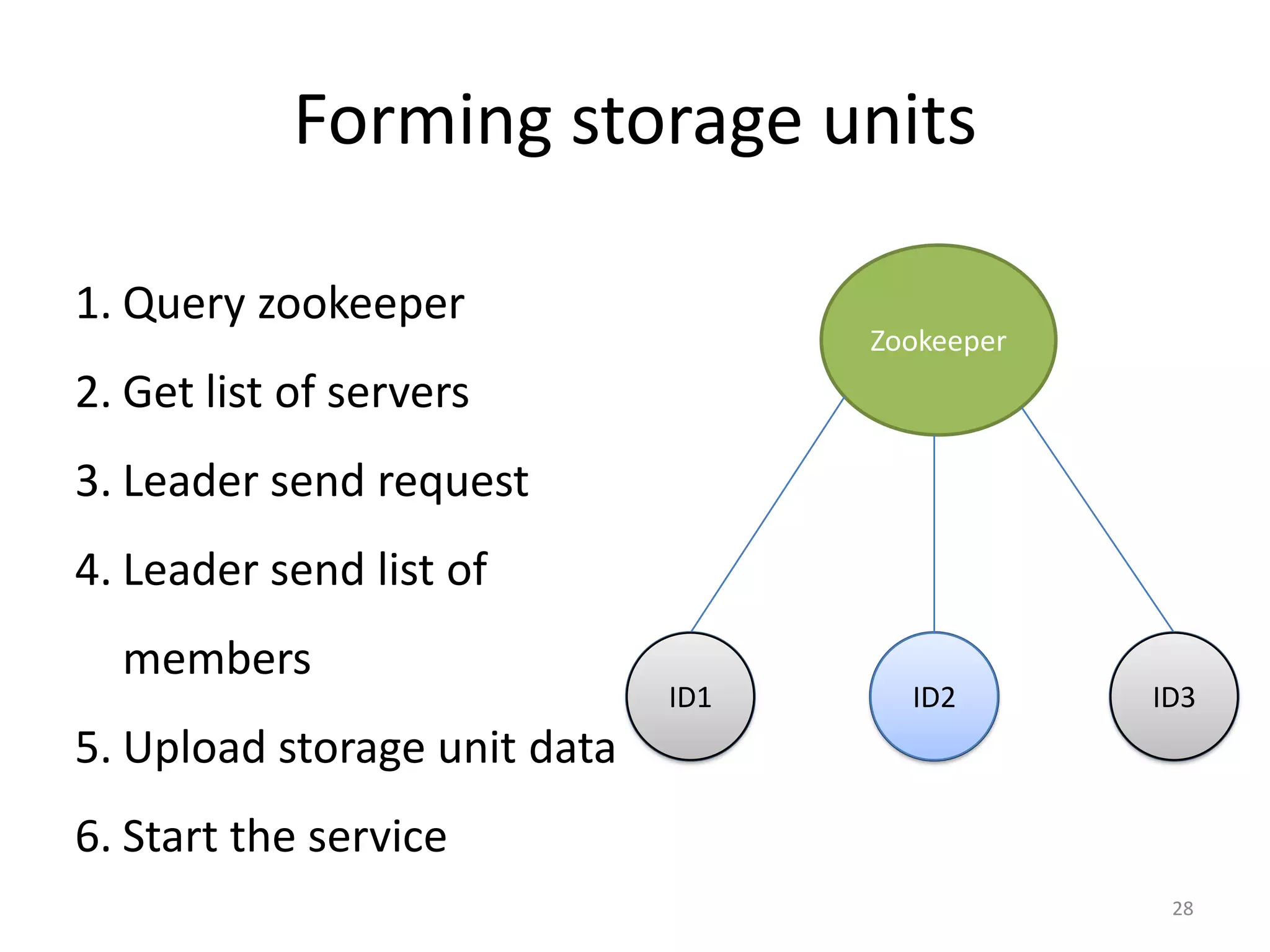

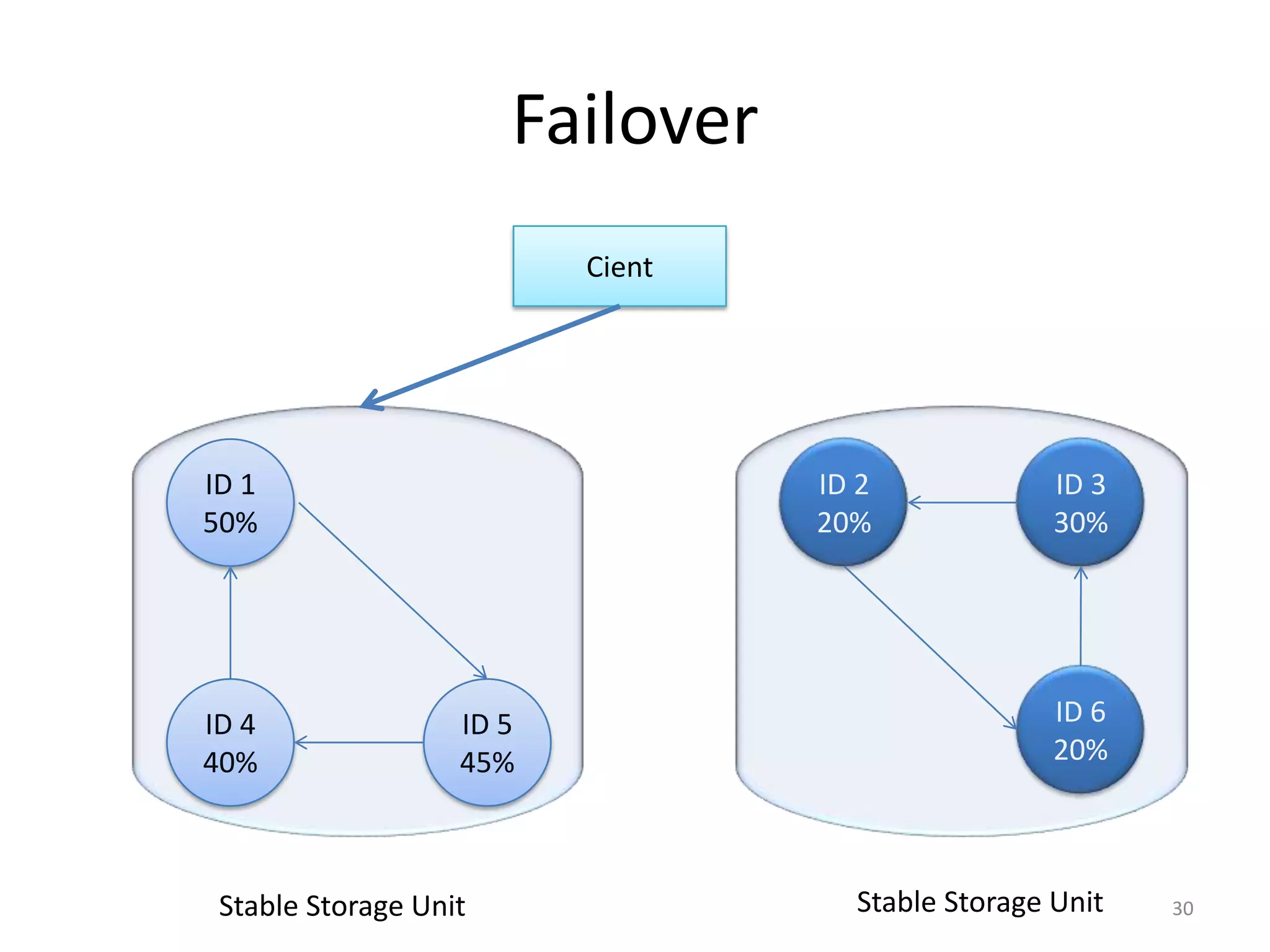

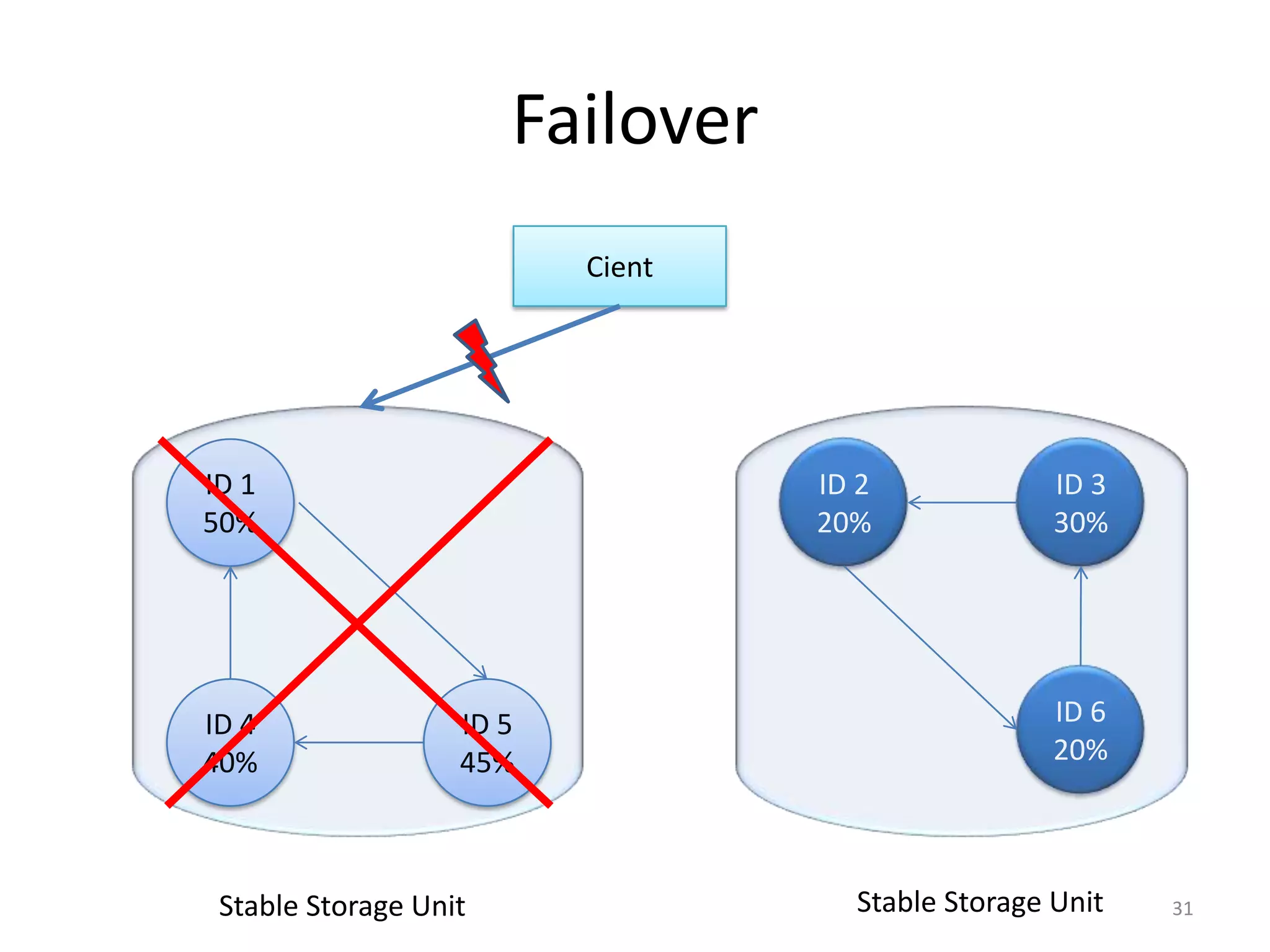

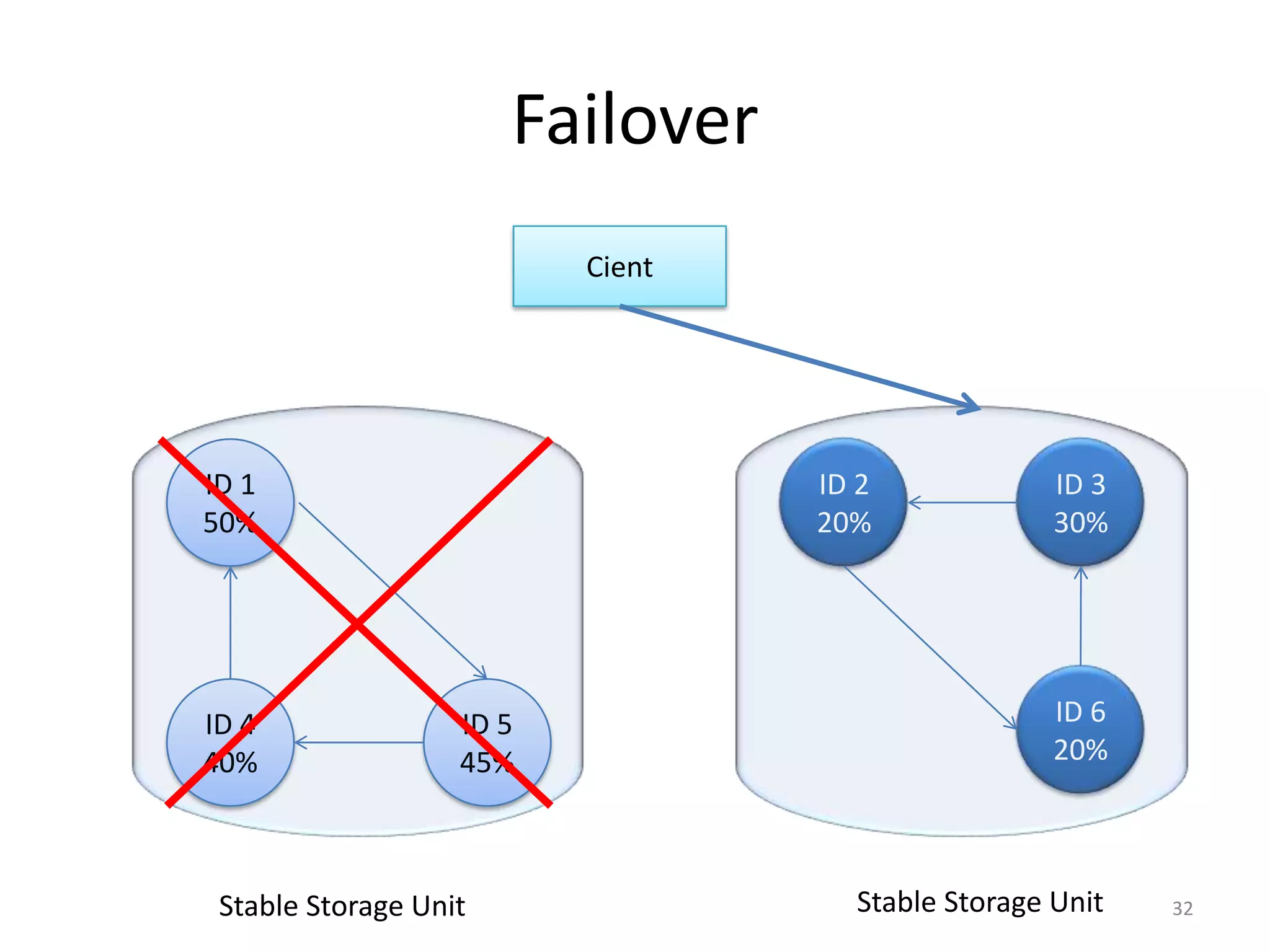

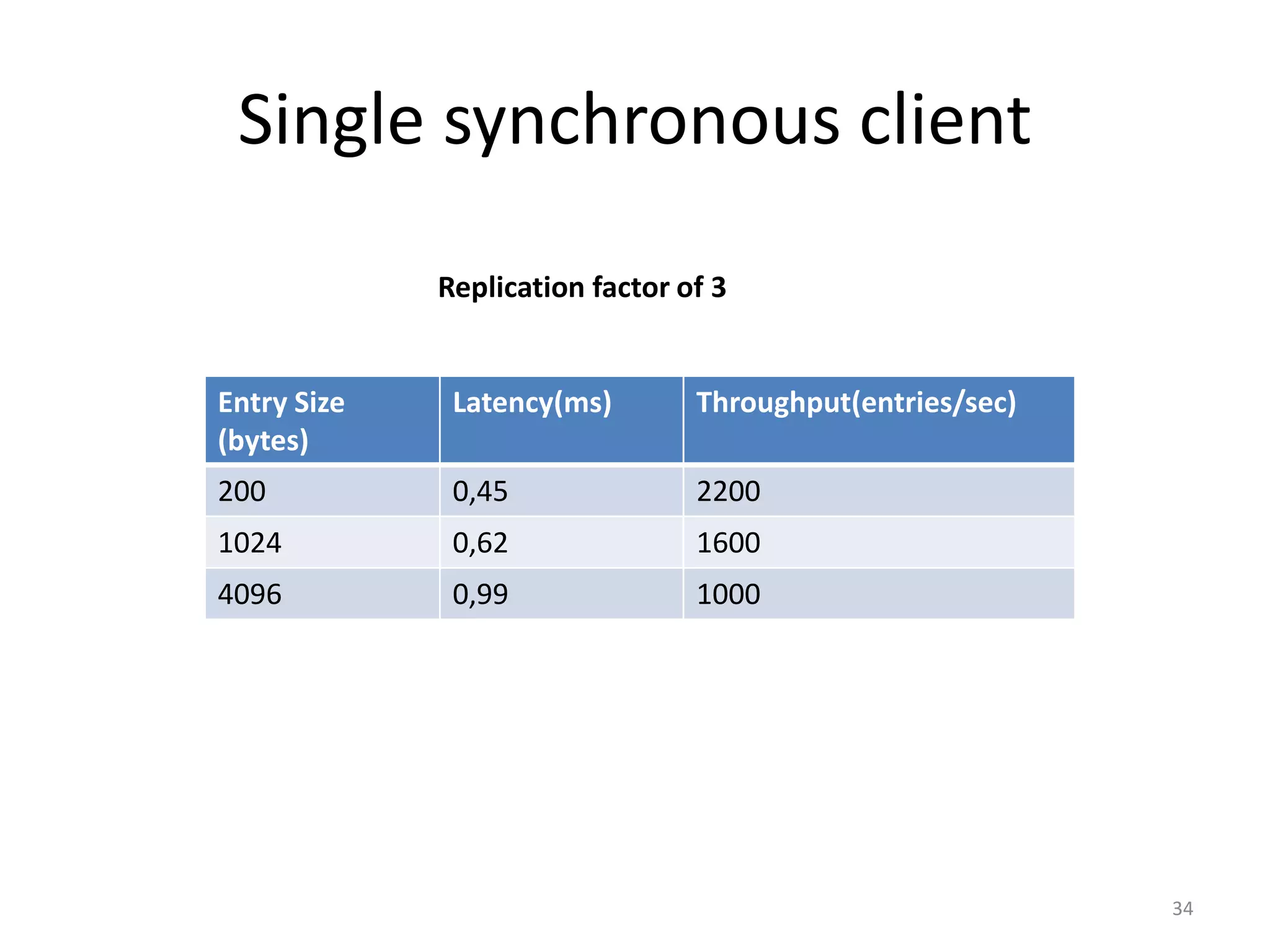

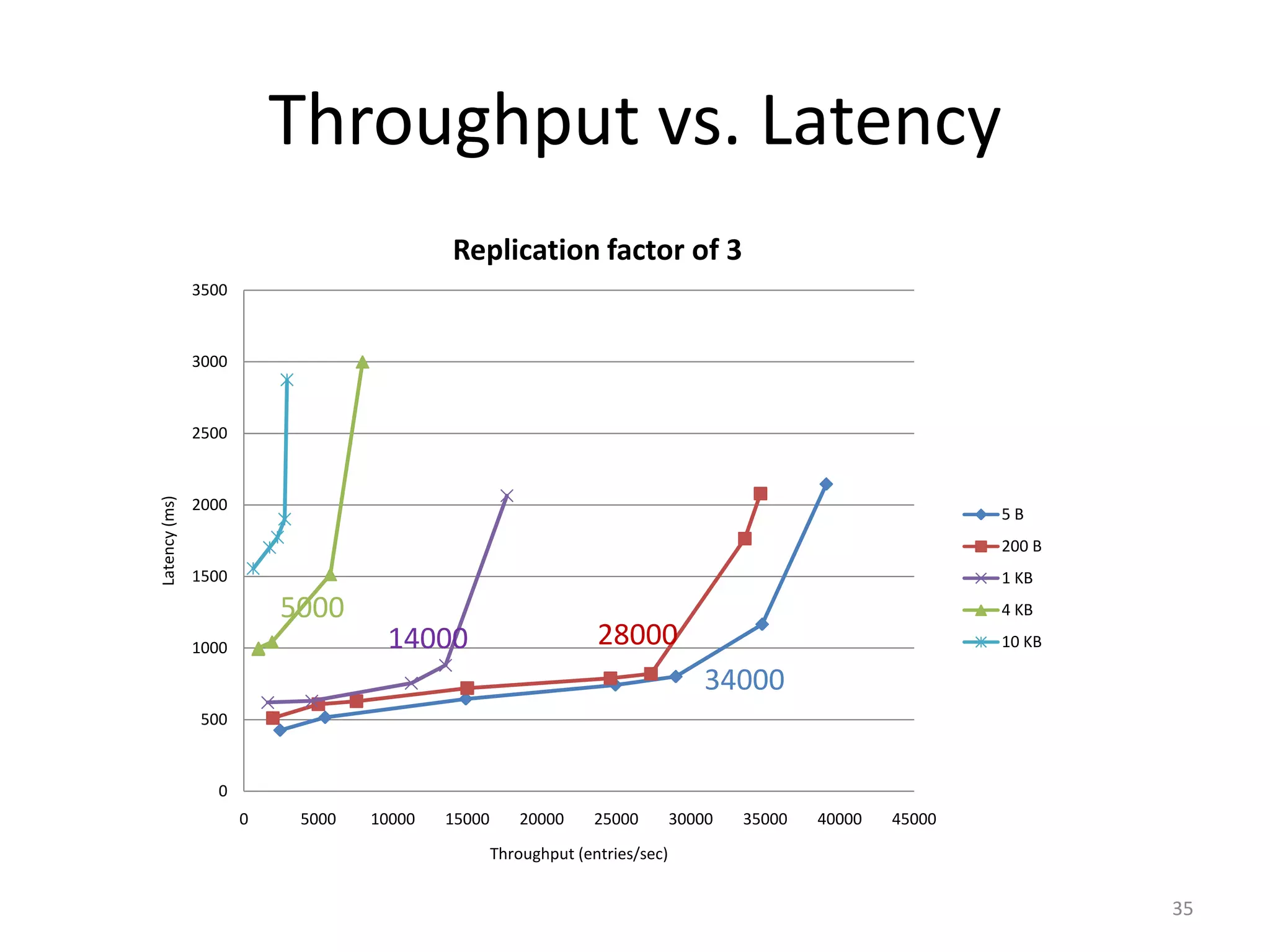

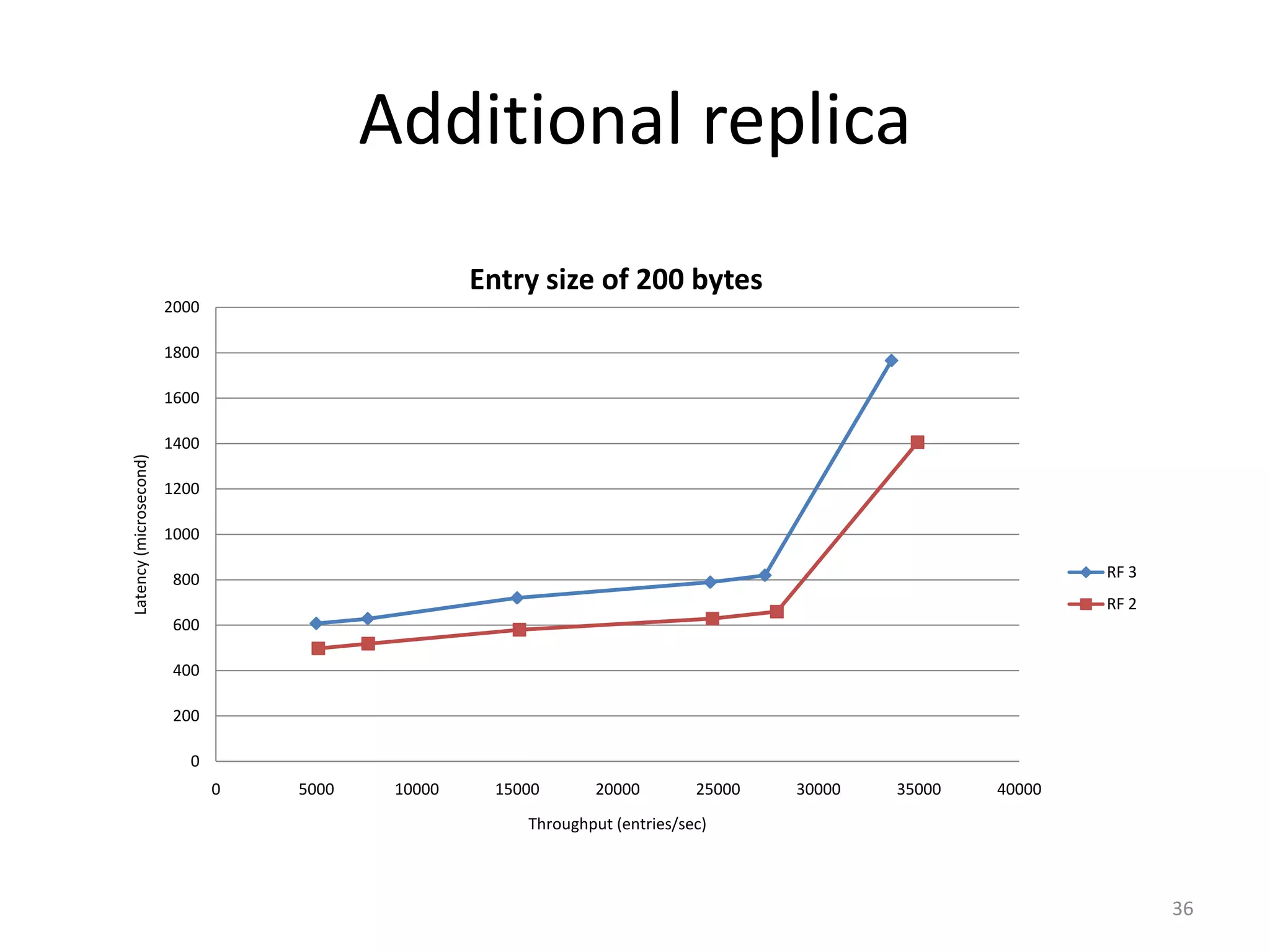

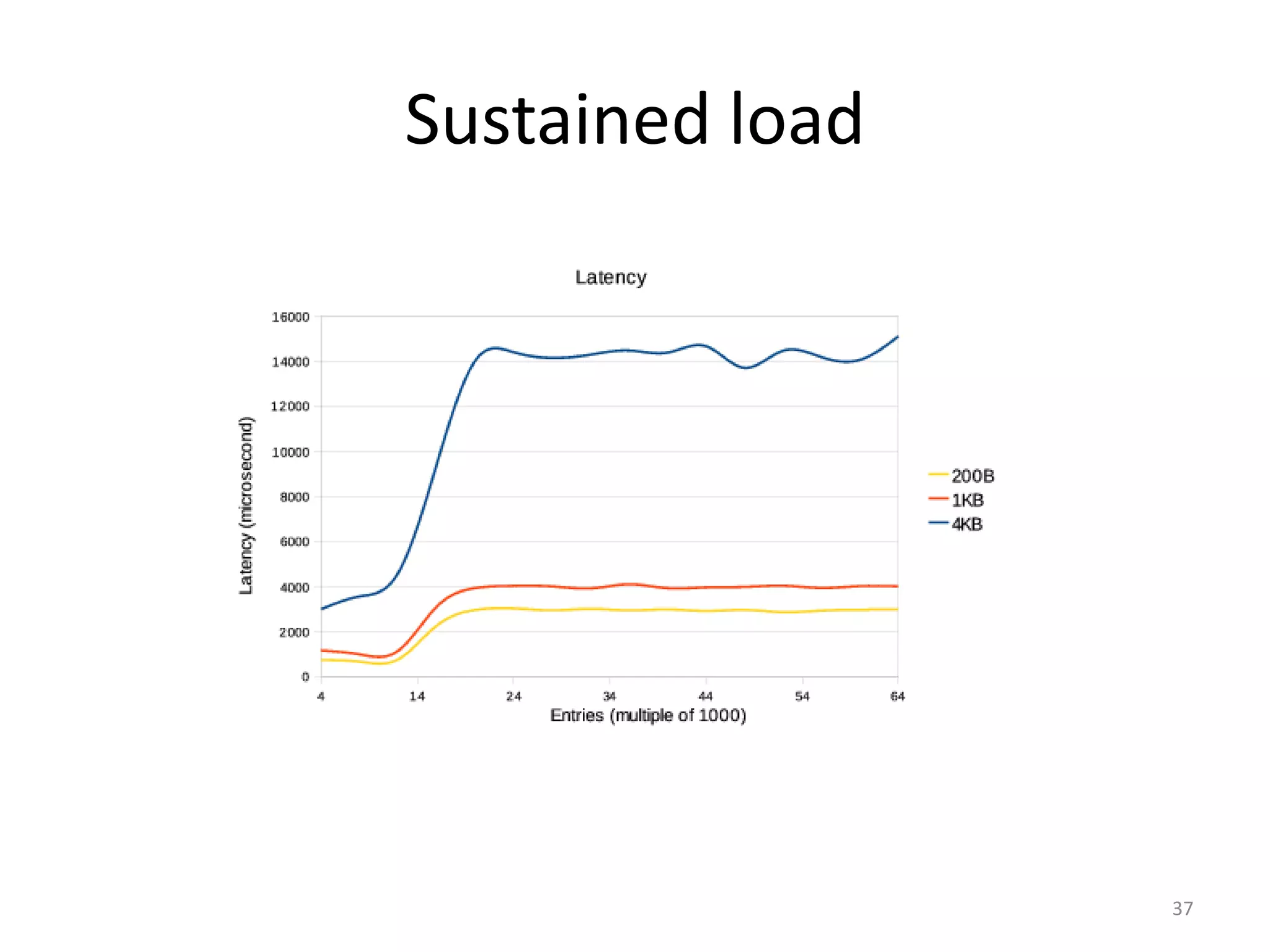

The document proposes a design for a durable, low-latency key-value data store. It discusses approaches to achieving durability, such as periodic snapshots and log replication. The proposed solution replicates logs asynchronously to remote storage units for durability and availability. For synchronous writes, the design uses chain replication of logs, and it allows stable storage units to be formed across multiple machines for scalability. The evaluation shows that the design can achieve high throughput and low latency even with additional replication.
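
To make the idea of chain replication of logs for synchronous writes more concrete, here is a minimal in-memory sketch in Python. It is not the design from the slides: the names `LogReplica`, `ChainStore`, `put`, and `get` are hypothetical, there is no networking, persistence, or failure handling, and the choice to serve reads from the tail is an assumption borrowed from the general chain replication technique rather than something the summary states.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class LogReplica:
    """One node in a hypothetical replication chain with an append-only log."""
    name: str
    successor: Optional["LogReplica"] = None
    log: List[Tuple[str, str]] = field(default_factory=list)

    def append(self, key: str, value: str) -> int:
        # Record the entry locally, then forward it down the chain.
        self.log.append((key, value))
        if self.successor is not None:
            return self.successor.append(key, value)
        # This node is the tail: every earlier replica already holds the
        # entry, so the write can be acknowledged with its log index.
        return len(self.log) - 1


class ChainStore:
    """Key-value store whose writes pass through every replica in order."""

    def __init__(self, names: List[str]) -> None:
        self.replicas = [LogReplica(n) for n in names]
        # Link each replica to the one after it to form the chain.
        for node, nxt in zip(self.replicas, self.replicas[1:]):
            node.successor = nxt
        self.head = self.replicas[0]
        self.tail = self.replicas[-1]

    def put(self, key: str, value: str) -> int:
        # Synchronous write: returns only after the tail has the entry.
        return self.head.append(key, value)

    def get(self, key: str) -> Optional[str]:
        # Assumption: reads replay the tail's log; the last write wins.
        result = None
        for k, v in self.tail.log:
            if k == key:
                result = v
        return result
```

In this sketch a call such as `ChainStore(["a", "b", "c"]).put("x", "1")` returns only once all three logs contain the entry, which is what makes the write synchronous. A real system would also need to handle replica failure and chain reconfiguration, which this example deliberately leaves out.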