This document provides an overview and introduction to using the command line interface and submitting jobs to the NIAID High Performance Computing (HPC) Cluster. The objectives are to learn basic Unix commands, practice file manipulation from the command line, and submit a job to the HPC cluster. The document covers topics such as the anatomy of the terminal, navigating directories, common commands, tips for using the command line more efficiently, accessing and mounting drives on the HPC cluster, and an overview of the cluster queue system.

![1/13/15


Tips to make life easier!

Tab completion: press Tab to have the shell complete your filename.

type: ls unix[Tab]

result: ls unix_hpc

If nothing happens on the first Tab, press Tab again to list the possible matches.

Up Arrow: recall the previous command(s)

Ctrl+a go to beginning of line

Ctrl+e go to end of line

Ctrl+c kill the currently running process in the terminal

Aliases (put in ~/.bashrc file … see handout)

alias ls='ls -AFG'

alias ll='ls -lrhT'

history show the list of previously issued commands

!ls repeat the most recent command that began with “ls”

!! repeat the previous command

man [command] read the manual for the command

man ls read the manual for the ls command
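The -G and -T flags in these aliases belong to the macOS (BSD) version of ls. GNU ls on Linux, including the HPC, reads -G as "hide the group column" and expects a number after -T. The sketch below is a ~/.bashrc fragment for anyone who wants similar aliases on both systems. It checks whether ls accepts --version (GNU ls does, macOS ls does not) and picks flags to match. The GNU long options are rough equivalents chosen for this example; they are not taken from the handout.

```shell
# ~/.bashrc fragment: pick ls flags that exist on this system's ls.
if ls --version >/dev/null 2>&1; then
    # GNU ls (Linux, e.g. the HPC): -G would hide the group column and
    # -T expects a tab-size argument, so use long options instead.
    alias ls='ls -AF --color=auto'
    alias ll='ls -lrh --time-style=full-iso'
else
    # BSD ls (macOS): -G turns on color, -T shows full timestamps.
    alias ls='ls -AFG'
    alias ll='ls -lrhT'
fi
```

After editing ~/.bashrc, run `source ~/.bashrc` or open a new terminal so the aliases take effect.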


Accessing the NIAID HPC

§ Log in to the HPC “submit node,” the computer from which you submit jobs.

ssh secure shell, remote login

ssh ngscXXX@hpc.niaid.nih.gov fill in XXX with your number

§ Copy files to/from HPC

scp secure copy to/from a remote location

scp -r ~/data/dir username@hpc.niaid.nih.gov:~/data/

§ ssh and scp will prompt you to enter your password

](https://image.slidesharecdn.com/hpcclustercomputingandunixbasics201501142slidesperpage-170503193157/85/UNIX-Basics-and-Cluster-Computing-6-320.jpg)
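Typing the full login for every copy gets tedious. The sketch below defines two shell functions you could add to ~/.bashrc on your own computer to copy files in either direction. The names to_hpc and from_hpc are hypothetical, and ngscXXX is the same placeholder used in the ssh example. With scp, an empty path after the colon means your home directory on the remote machine, and remote relative paths are resolved from that home directory.

```shell
# Hypothetical helpers for ~/.bashrc on your own computer.
# Replace XXX with your number, as in the ssh example.
HPC_LOGIN="ngscXXX@hpc.niaid.nih.gov"

# to_hpc LOCAL_PATH [REMOTE_PATH]: copy to the HPC.
# With no REMOTE_PATH, "host:" means your home directory there.
to_hpc() {
    scp -r "$1" "${HPC_LOGIN}:$2"
}

# from_hpc REMOTE_PATH [LOCAL_DIR]: copy from the HPC (default: current dir).
from_hpc() {
    scp -r "${HPC_LOGIN}:$1" "${2:-.}"
}
```

For example, `to_hpc ~/data/dir data/` has the same effect as the scp command above, and `from_hpc data/dir ~/data/` copies the directory back. Both functions still prompt for your password.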

![1/13/15

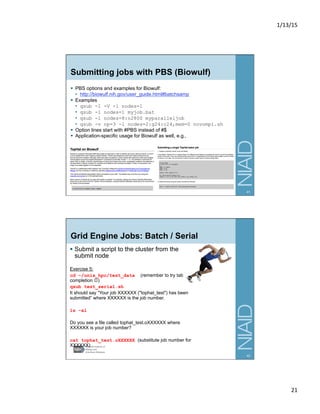

20

Quick Look at a Shell Script

Exercise 4:

cd ~/unix_hpc/test_data

cat test_serial.sh

§ A few things to notice:

• #!/bin/bash

– the “shebang” or “hashbang” line, which specifies the program that runs the script (here, bash)

• qsub options (next slide)

• export (sets environment variables and makes them available to programs the script runs)

• PATH=/path/to/folder:/path/to/another/folder:$PATH

– lets you type just the name of an executable instead of its full path, e.g., “tophat” instead of “/usr/local/bio_apps/tophat/bin/tophat”

• Comments about when you ran the job

• The command the job runs

*The same applies to PBS scripts for Biowulf.
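The sketch below shows the PATH mechanism on its own. It creates a throwaway directory holding a tiny executable named mytool, a hypothetical stand-in for a program such as tophat. Once that directory is prepended to PATH, the bare name is enough to run the program.

```shell
#!/bin/bash
# Create a throwaway directory with a tiny executable called "mytool"
# (hypothetical; stands in for a real program such as tophat).
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT   # remove the directory when the shell exits
printf '#!/bin/bash\necho "mytool ran"\n' > "$demo_dir/mytool"
chmod +x "$demo_dir/mytool"

# Before changing PATH, the program has to be called by its full path.
"$demo_dir/mytool"

# Prepend the directory to PATH, the same pattern used in the job script.
export PATH="$demo_dir:$PATH"

# Now the shell finds it by name; command -v shows which file it found.
command -v mytool
mytool
```

Putting the new directory *before* `$PATH` means that, if two directories contain a program with the same name, the one in the new directory wins.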


SGE qsub options

qsub [options] script.sh command to submit a job to the cluster

-S /bin/bash shell to use (default is csh)

-N job_name name for your job

-q queue.q queue(s) to submit to, e.g., memLong.q,memRegular.q

-M user@niaid.nih.gov email address to send alerts to

-m abe when to send email (a = aborted, b = beginning, e = end)

-l resources resources to request, e.g., h_vmem=20G,h_cpu=1:00:00,mem_free=10G

-cwd run from the current working directory; output files are written here

-j y join stderr and stdout into one output file

-pe threaded 10 parallel environment and number of processors/threads: “round” means processors could be on separate machines, “threaded” means all processors are on the same machine

§ You can put these options on the command line or in your shell script

§ Lines with these options should begin with #$

](https://image.slidesharecdn.com/hpcclustercomputingandunixbasics201501142slidesperpage-170503193157/85/UNIX-Basics-and-Cluster-Computing-20-320.jpg)
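The sketch below is a serial job script that uses several of the options above. It is not the contents of test_serial.sh. The job name, queue, email address and resource values are placeholders, and the actual analysis command is left as a comment. Bash treats #$ lines as ordinary comments, so the script also runs locally, which is handy for catching typos before you submit.

```shell
#!/bin/bash
# Sketch of a serial SGE job script; all values below are placeholders.
#$ -S /bin/bash
#$ -N serial_sketch
#$ -q memRegular.q
#$ -M user@niaid.nih.gov
#$ -m abe
#$ -l h_vmem=4G,h_cpu=1:00:00
#$ -cwd
#$ -j y

# Put the program's directory first on PATH so it can be called by name.
export PATH=/usr/local/bio_apps/tophat/bin:$PATH

# Note when and where the job ran; this ends up in the job's output file.
echo "Job started: $(date) on ${HOSTNAME}"

# The command for the job goes here (e.g. a tophat run; arguments omitted).

echo "Job finished: $(date)"
```

Submit it with qsub, as in the exercises. You can also give any of these options on the command line instead, e.g. `qsub -N my_job -cwd -j y script.sh`, where my_job is a hypothetical name.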

![

Grid Engine Jobs: Parallel

§ -pe selects a parallel environment (threaded, single, etc.)

§ Basic use in script:

#$ -pe threaded 8

§ Can also use advanced options, e.g.,

• "-pe 12threaded 48" means use 12 cores per node, for a total of 48 cores. This will allocate the job to run on 4 nodes with 12 cores each. Your program must be able to run across multiple nodes to use this.

• "-pe threaded 5-10" means run the job with 10 cores if available, but running with as few as 5 is fine too.

§ Do the math for memory!

• h_vmem is not the total; it’s per slot (i.e., per thread). E.g., if a job needs 10G total and runs on 5 processors, request h_vmem=2G, not h_vmem=10G.

• Let’s edit our script to make it run parallel…
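The same arithmetic can be written as a small shell function, which helps when the numbers do not divide evenly. per_slot_vmem is a hypothetical helper. Rounding up to whole gigabytes is a choice made here so that the slots together get at least the total you need.

```shell
# per_slot_vmem TOTAL_GB SLOTS (hypothetical helper)
# Prints the h_vmem value to request per slot so that all SLOTS together
# get at least TOTAL_GB gigabytes, rounding up to a whole gigabyte.
per_slot_vmem() {
    local total_gb=$1 slots=$2
    echo "$(( (total_gb + slots - 1) / slots ))G"
}

per_slot_vmem 10 5   # prints 2G (the example above)
per_slot_vmem 10 4   # prints 3G (2.5G rounded up)
```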


Edit Shell Script in the Terminal with nano

Navigation in nano:

§ use arrow keys for up, down, left, right

§ Ctrl+a for beginning of line; Ctrl+e for end of line

§ Other commands are listed at the bottom of the screen, e.g., Ctrl+o (save), Ctrl+x (exit)

Exercise 6:

cd ~/unix_hpc/test_data

Copy the serial script to a new parallel script and open it in nano

cp test_serial.sh test_parallel.sh

nano test_parallel.sh

Add a line with the SGE parallel-environment option to the script

#$ -pe threaded 4

Modify the tophat command to use 4 threads

tophat -p 4 …

Save and close

Ctrl+o, [ENTER]

Ctrl+x

Now submit the jobs

qsub test_serial.sh

qsub test_parallel.sh

](https://image.slidesharecdn.com/hpcclustercomputingandunixbasics201501142slidesperpage-170503193157/85/UNIX-Basics-and-Cluster-Computing-22-320.jpg)
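A hard-coded thread count can drift out of step with the slots the job actually receives. One way to keep them matched is to read the count from the environment: Grid Engine sets NSLOTS to the number of slots granted, which matters when you request a range such as -pe threaded 5-10. The sketch below is a parallel script along those lines. The job name and memory value are placeholders, and the program call appears only in a comment.

```shell
#!/bin/bash
# Sketch of a parallel SGE job script; name and memory are placeholders.
#$ -S /bin/bash
#$ -N parallel_sketch
#$ -cwd
#$ -j y
#$ -pe threaded 4
# h_vmem is per slot: 4 slots x 2G = 8G in total.
#$ -l h_vmem=2G

# Grid Engine sets NSLOTS to the number of slots actually granted (useful
# with ranges such as "-pe threaded 5-10"). Default to 1 outside the cluster.
THREADS=${NSLOTS:-1}

echo "Running with ${THREADS} thread(s)"

# Pass the same count to the program, e.g. tophat -p "$THREADS" …
```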

![

Monitoring Jobs

Exercise 7:

qsub test_tenminutes.sh

qstat check on submitted jobs

echo $LOGNAME check your username

qstat -u $LOGNAME check status of your jobs

qstat -u $LOGNAME -ext check resource usage, including memory

qstat -u $LOGNAME -ext -g t get extended details, including MASTER and SLAVE nodes for parallel jobs

qstat -j job-ID get detailed information about your job status

qacct -j 999072 see info about a job after it has run

qalter [new qsub options] [job id] change a job's parameters while it is still in “qw” (queued, waiting) status

qdel -u username delete all of your submitted jobs

qdel jobnumber delete a single job
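In a scripted workflow, it can help to capture the job ID at submission and wait for the job to leave the queue. The sketch below defines two hypothetical helper functions. It uses qsub -terse, which prints only the job ID. It also assumes that qstat -j exits with a non-zero status once the scheduler no longer knows the job, so check that behavior on your cluster. Accounting records may take a short time to appear in qacct after the job ends.

```shell
# Hypothetical helpers built on standard SGE commands.

# submit_job SCRIPT: submit a job and print only its ID.
submit_job() {
    qsub -terse "$1"
}

# wait_for_job JOB_ID [SECONDS]: poll every SECONDS (default 60) until
# qstat no longer lists the job, i.e. it has finished or been deleted.
wait_for_job() {
    local job_id=$1 interval=${2:-60}
    while qstat -j "$job_id" >/dev/null 2>&1; do
        sleep "$interval"
    done
}

# Example session on the submit node:
#   job_id=$(submit_job test_tenminutes.sh)
#   wait_for_job "$job_id" 30
#   qacct -j "$job_id"
```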

§ Websites

• Cluster status:

http://hpcweb.niaid.nih.gov/#about?type=About%20Links&requestType=Cluster%20Status

• Current State: http://hpcwiki.niaid.nih.gov/index.php/Current_State

• Ganglia toolkit: http://cluster.niaid.nih.gov/ganglia/


Contact Us

andrew.oler@nih.gov

ScienceApps@niaid.nih.gov

http://bioinformatics.niaid.nih.gov

](https://image.slidesharecdn.com/hpcclustercomputingandunixbasics201501142slidesperpage-170503193157/85/UNIX-Basics-and-Cluster-Computing-23-320.jpg)