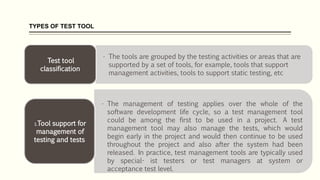

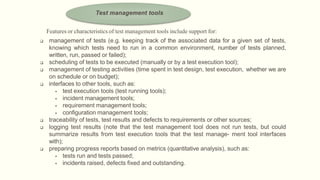

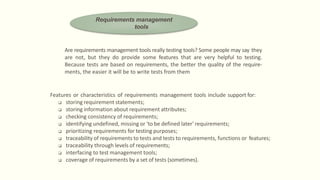

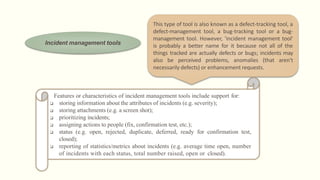

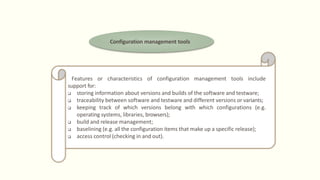

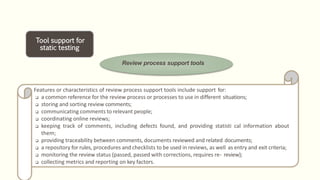

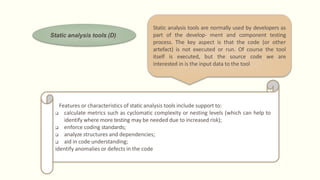

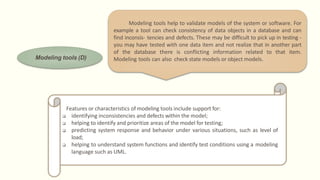

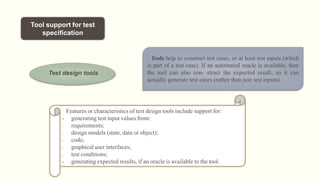

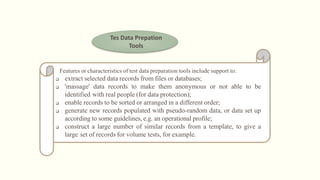

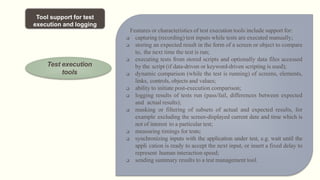

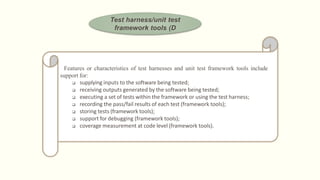

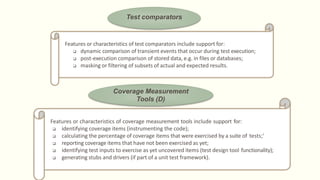

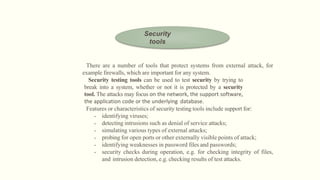

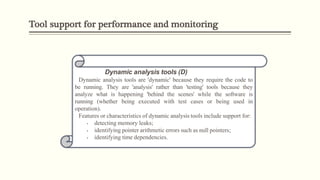

This document surveys the types of tools that can support the testing process: test management, requirements management, incident management, configuration management, static testing, test specification, test execution and logging, and performance and security testing. For each tool type, it gives examples of the key features and functions that support testing activities. It also emphasizes that any type of tool can potentially support testing in some way.