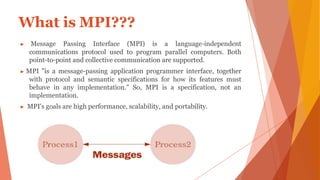

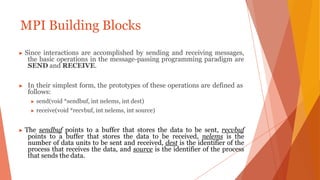

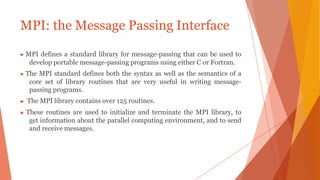

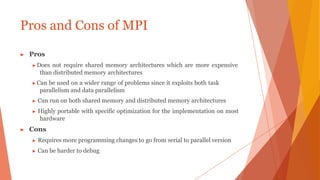

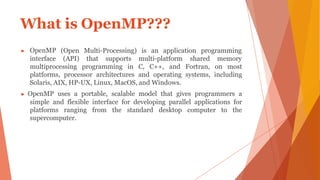

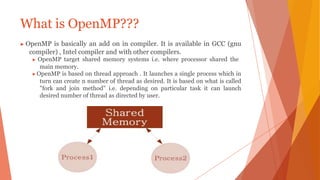

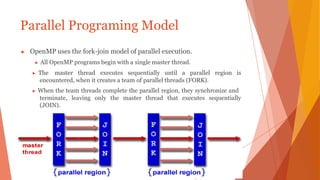

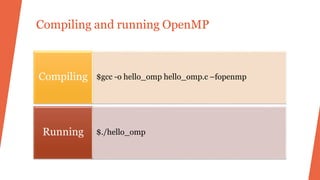

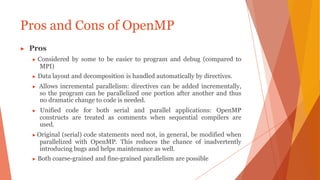

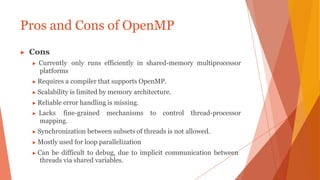

This document provides an overview of MPI (Message Passing Interface) and OpenMP (Open Multi-Processing) for parallel programming. MPI is a standard for message passing between processes on distributed-memory systems, with send and receive operations as its basic building blocks. OpenMP is an API for shared-memory multiprocessing that uses compiler directives, such as parallel, to run code on multiple threads. It follows a fork-join model in which the master thread creates a team of worker threads at the start of each parallel region and continues alone once they finish. Each approach has strengths and weaknesses that depend on the parallel architecture and the problem being solved.
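
The fork-join model described above can be illustrated with a minimal sketch in C. It is not taken from the original document: the function name `parallel_sum` and its parameters are hypothetical, chosen only for illustration. The sketch assumes a compiler with OpenMP support (for example, GCC with `-fopenmp`); without that flag the directive is ignored and the loop simply runs serially on one thread.

```c
#include <stddef.h>

/* Sum n doubles inside an OpenMP parallel region.
 * Hypothetical helper for illustration only. */
double parallel_sum(const double *values, size_t n)
{
    double total = 0.0;

    /* Fork: the master thread creates a team of threads here and the
     * loop iterations are divided among them. The reduction clause
     * gives each thread a private partial sum and combines the
     * partial sums when the threads finish. */
    #pragma omp parallel for reduction(+:total)
    for (long i = 0; i < (long)n; i++) {
        total += values[i];
    }

    /* Join: past this point only the master thread continues. */
    return total;
}
```

Calling `parallel_sum(data, count)` from ordinary serial code is enough to exercise the pattern: the caller never manages threads directly, which is the main contrast with MPI, where each process would hold its own part of the data and exchange partial results through explicit send and receive calls.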