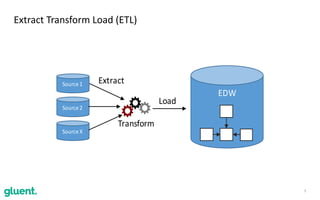

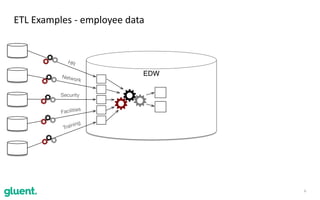

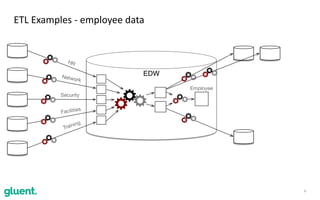

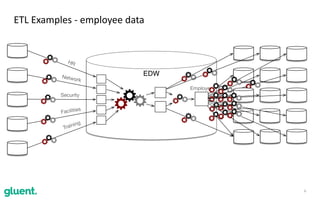

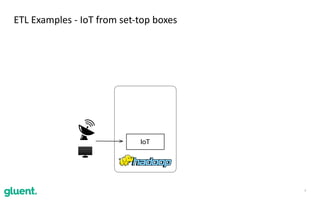

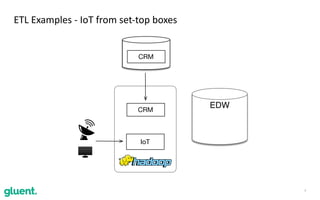

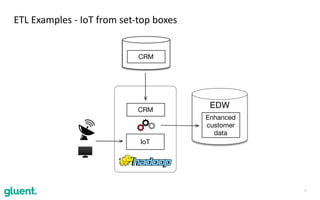

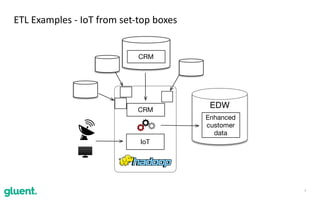

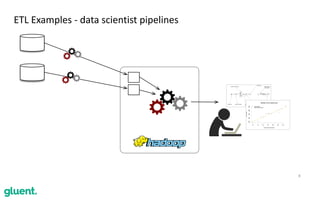

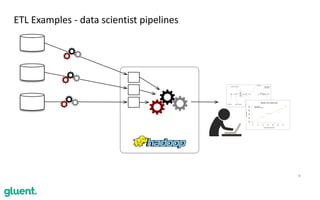

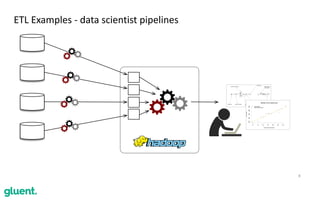

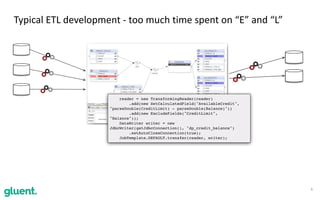

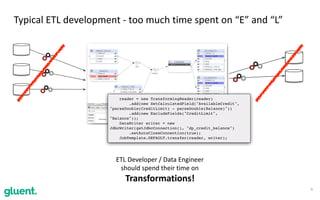

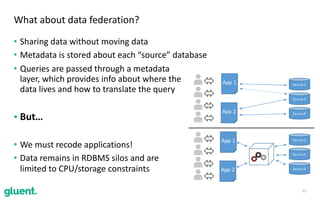

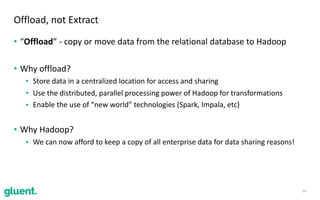

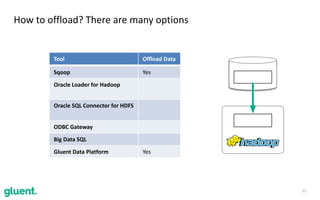

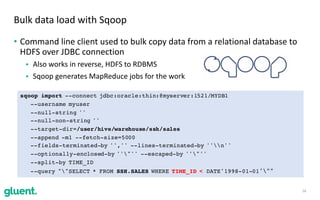

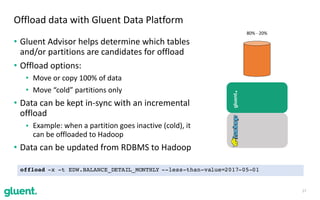

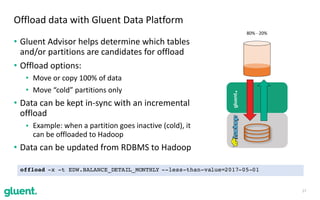

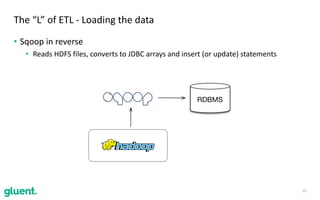

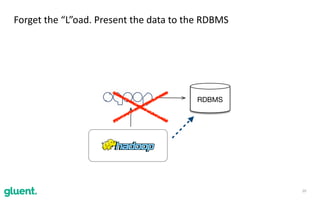

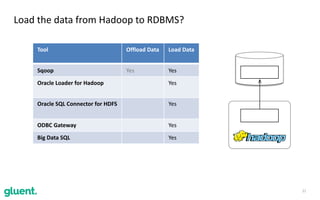

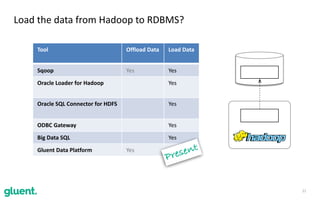

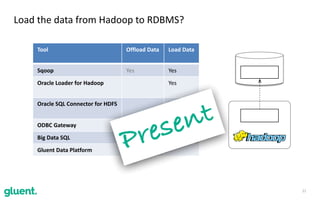

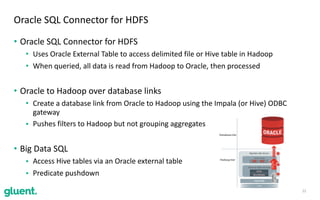

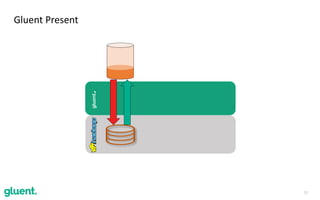

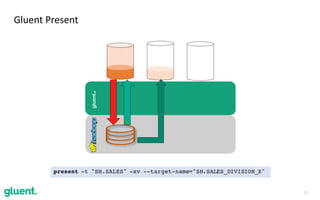

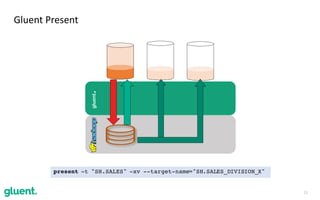

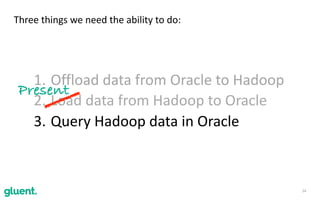

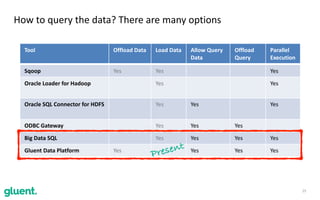

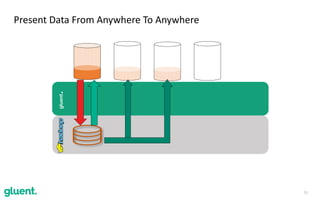

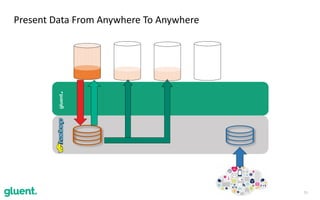

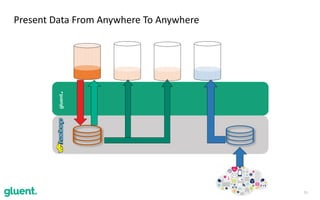

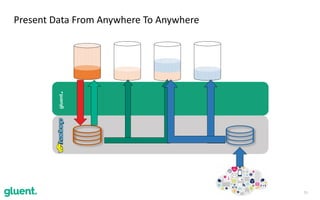

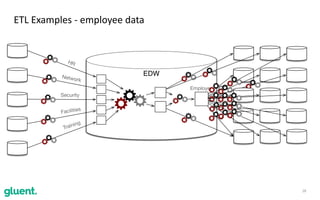

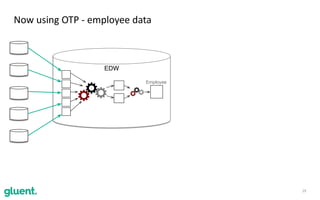

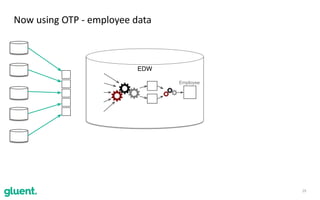

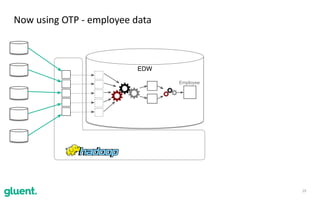

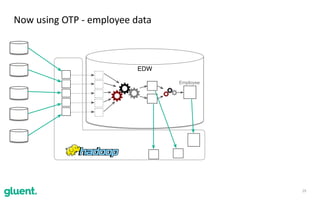

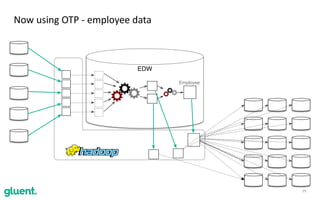

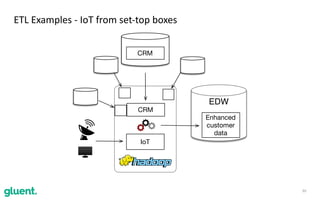

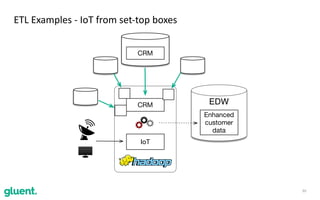

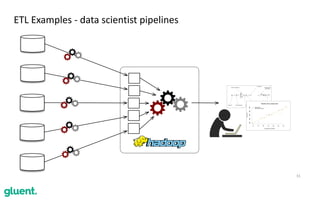

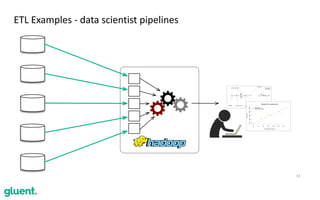

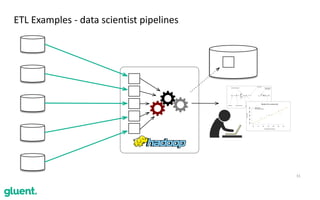

The document discusses Gluent's approach to data integration, which shifts from traditional ETL (Extract, Transform, Load) to OTP (Offload, Transform, Present) and emphasizes offloading, transforming, and presenting data effectively. It highlights tools and methods for offloading data from Oracle to Hadoop and vice versa, and advocates improved data access and processing capabilities within enterprises. Gluent's platform aims to improve efficiency and reduce the time spent moving data, so that organizations can share data more effectively.