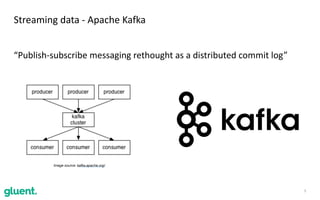

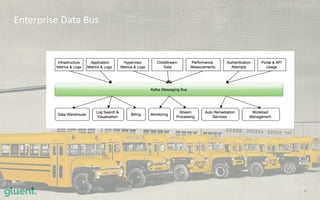

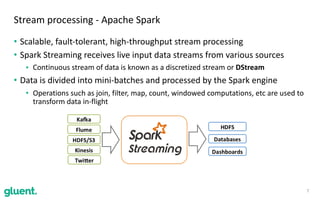

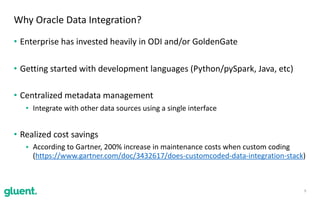

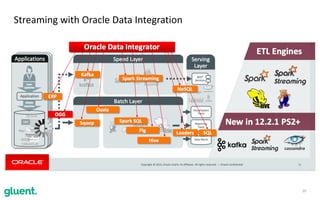

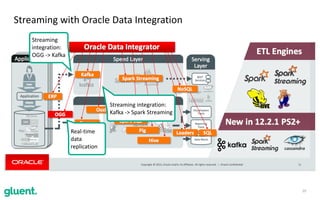

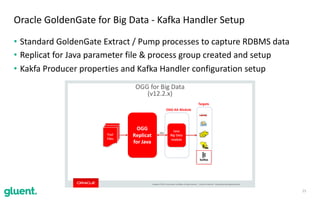

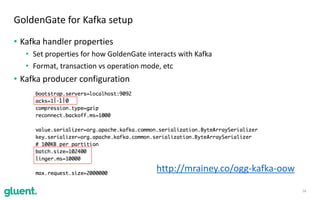

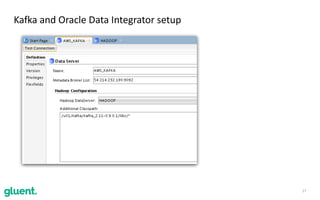

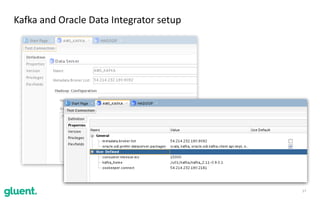

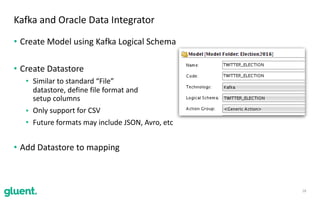

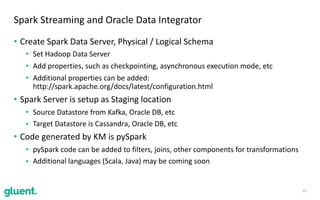

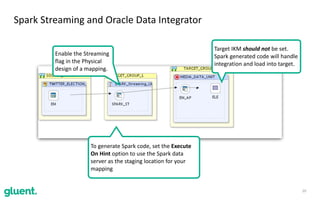

The document discusses streaming transformations using Oracle Data Integration. It emphasizes the importance of real-time data processing and its applications, such as retail analysis and IoT data analysis. It outlines the benefits of Oracle Data Integration, specifically its scalability and its interaction with Apache Kafka and Spark Streaming for efficient data handling. It also highlights the ease of setup and management that GoldenGate provides for integrating data from various sources.