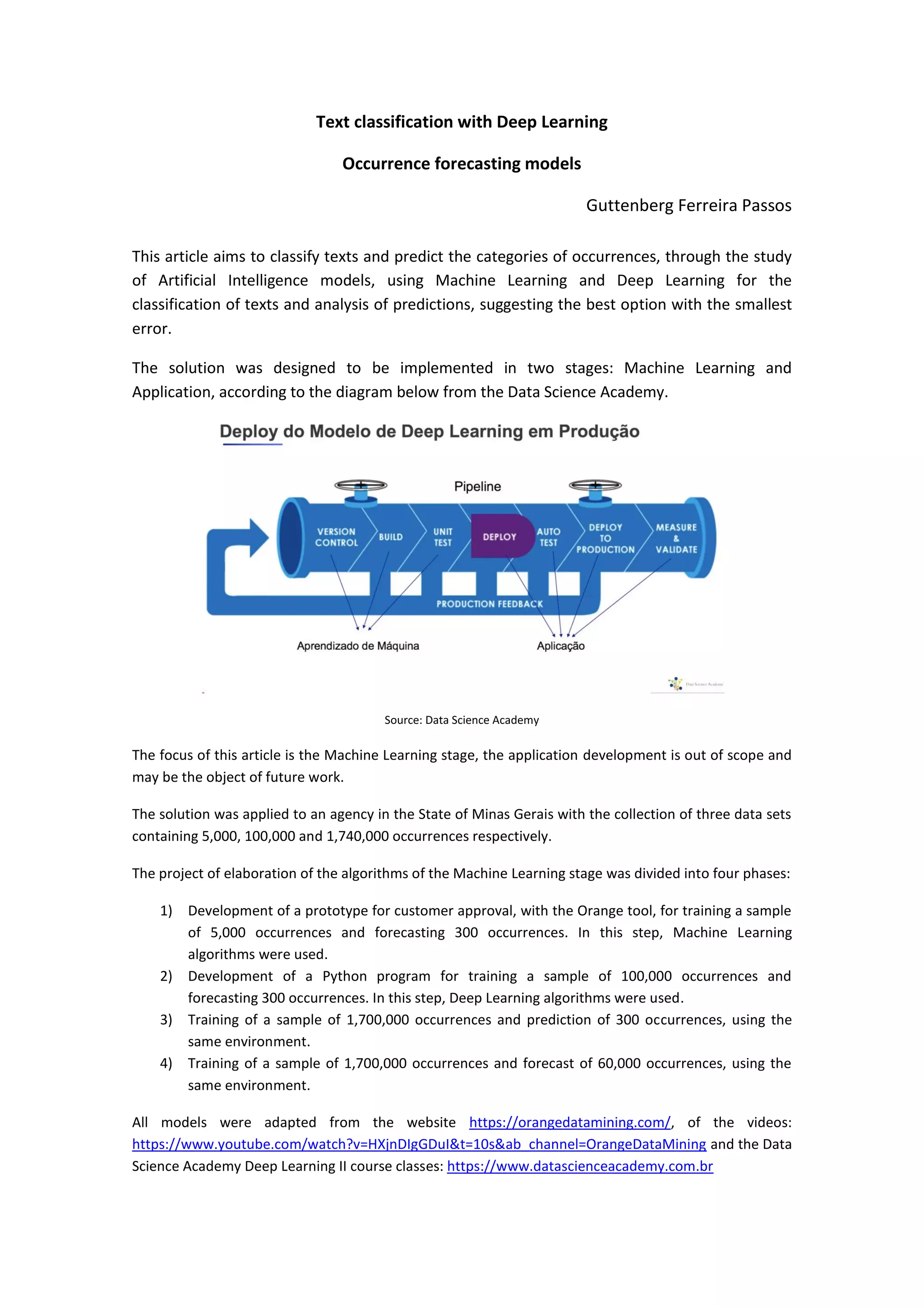

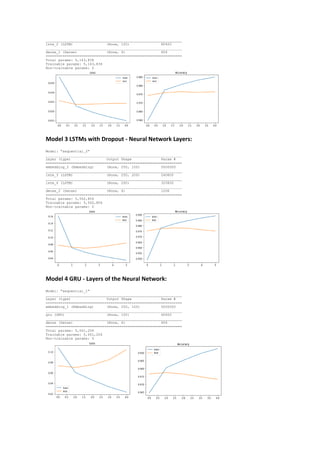

This article presents a two-stage solution for text classification and occurrence prediction that uses both machine learning and deep learning algorithms. The work covers prototype development, model training on several datasets, and performance evaluation. In that evaluation, combining LSTM and CNN models reached an accuracy of 97%. Based on this result, the article recommends adopting the combined deep learning model for future production applications.