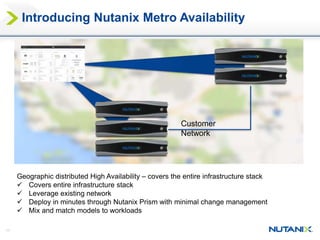

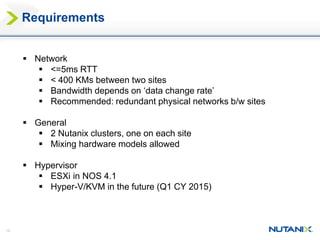

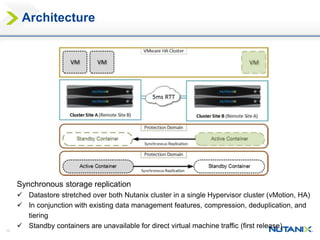

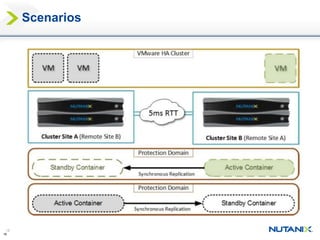

This document discusses Nutanix's Metro Availability feature, which provides geographically distributed high availability across the entire infrastructure stack. It uses synchronous storage replication between two Nutanix clusters at different sites to provide redundancy. The architecture stretches datastores across both clusters so that virtual machines can fail over and migrate between sites. It requires less than 5 ms of network latency between the sites and a distance of 400 km or less.
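
The two site requirements above can be expressed as a simple pre-flight check. The following is a minimal sketch, not part of any Nutanix tooling: the names `SiteLink` and `meets_metro_requirements` are hypothetical, and the latency and distance values are assumed to be measured by the operator beforehand.

```python
from dataclasses import dataclass

# Thresholds taken from the summary above.
MAX_LATENCY_MS = 5.0     # latency must be less than 5 ms
MAX_DISTANCE_KM = 400.0  # distance must be 400 km or less


@dataclass(frozen=True)
class SiteLink:
    """Hypothetical description of the link between two sites."""
    latency_ms: float   # measured network latency between the sites
    distance_km: float  # physical distance between the sites


def meets_metro_requirements(link: SiteLink) -> bool:
    """Return True if the link satisfies both stated limits."""
    return (
        link.latency_ms < MAX_LATENCY_MS
        and link.distance_km <= MAX_DISTANCE_KM
    )
```

For example, `meets_metro_requirements(SiteLink(latency_ms=3.2, distance_km=120))` passes both checks, while a link measured at 7 ms fails regardless of distance.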