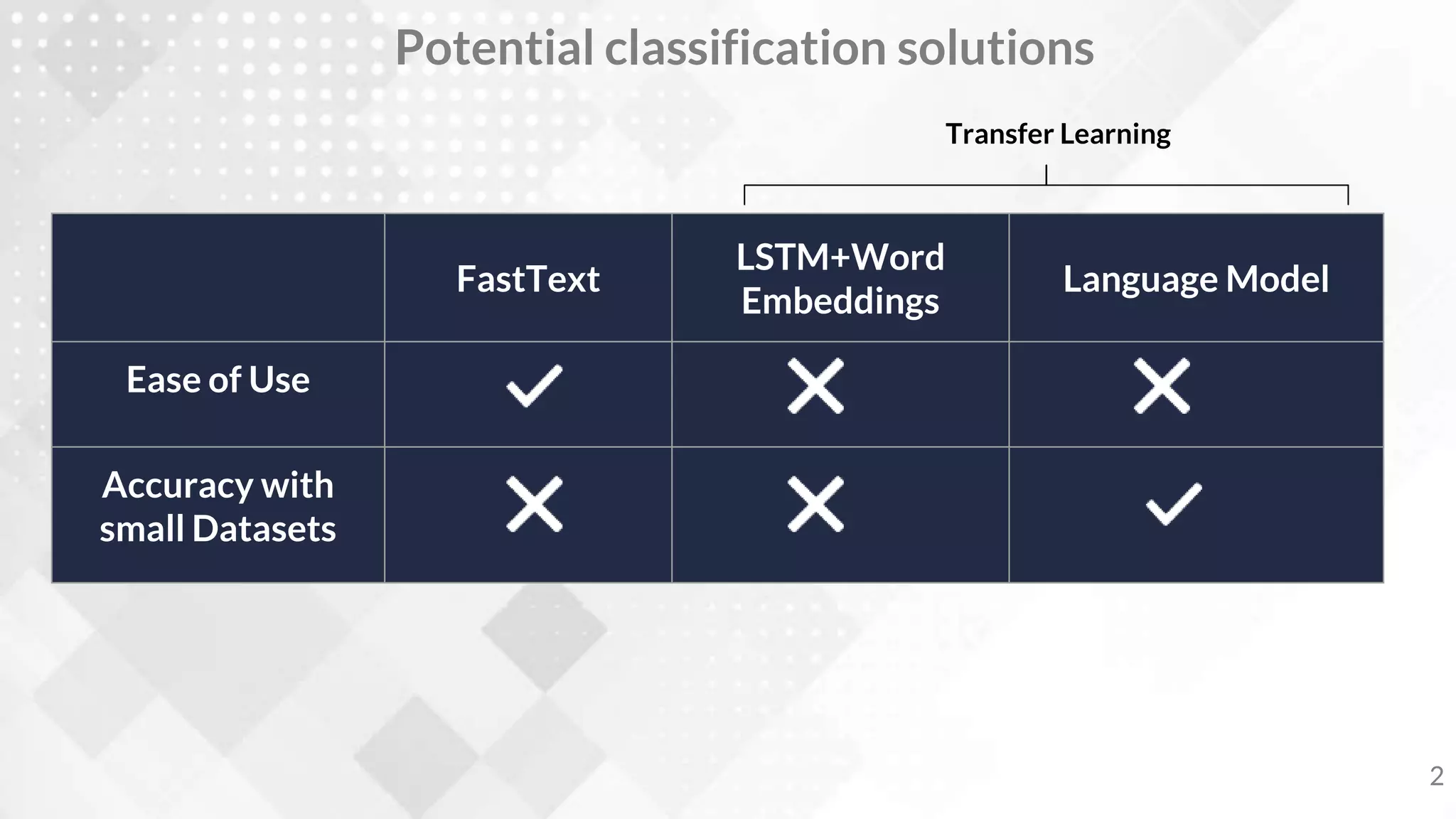

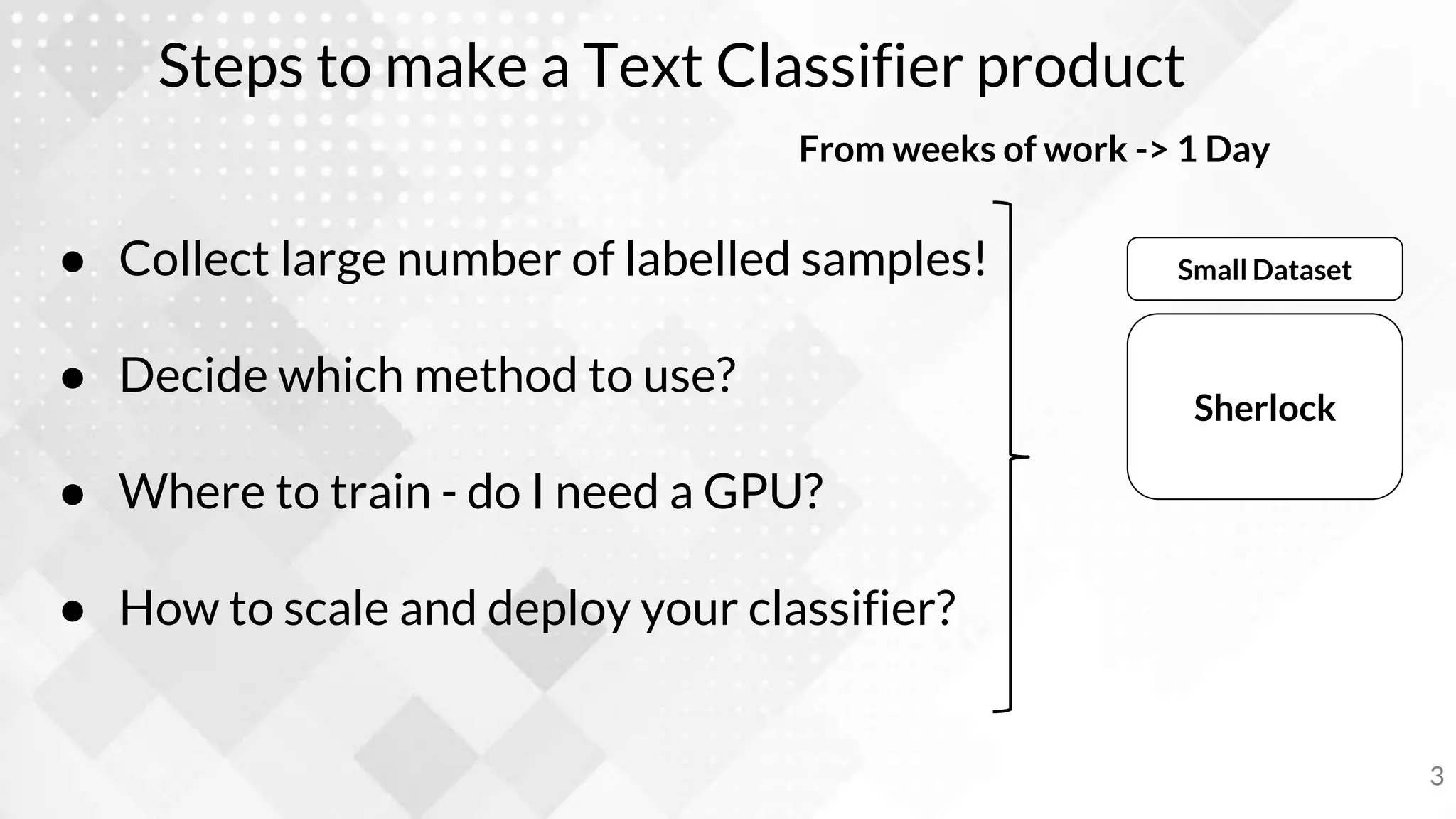

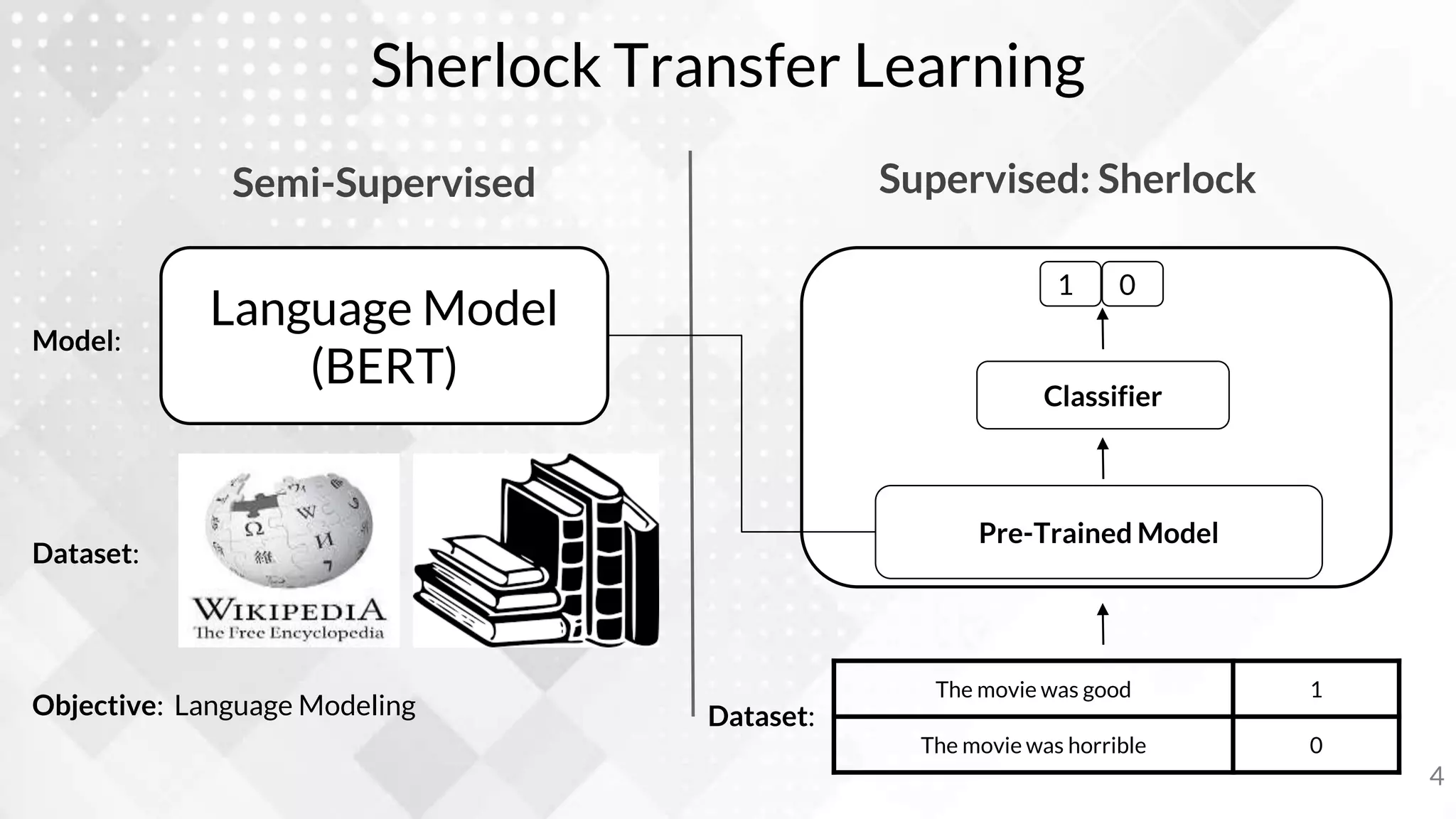

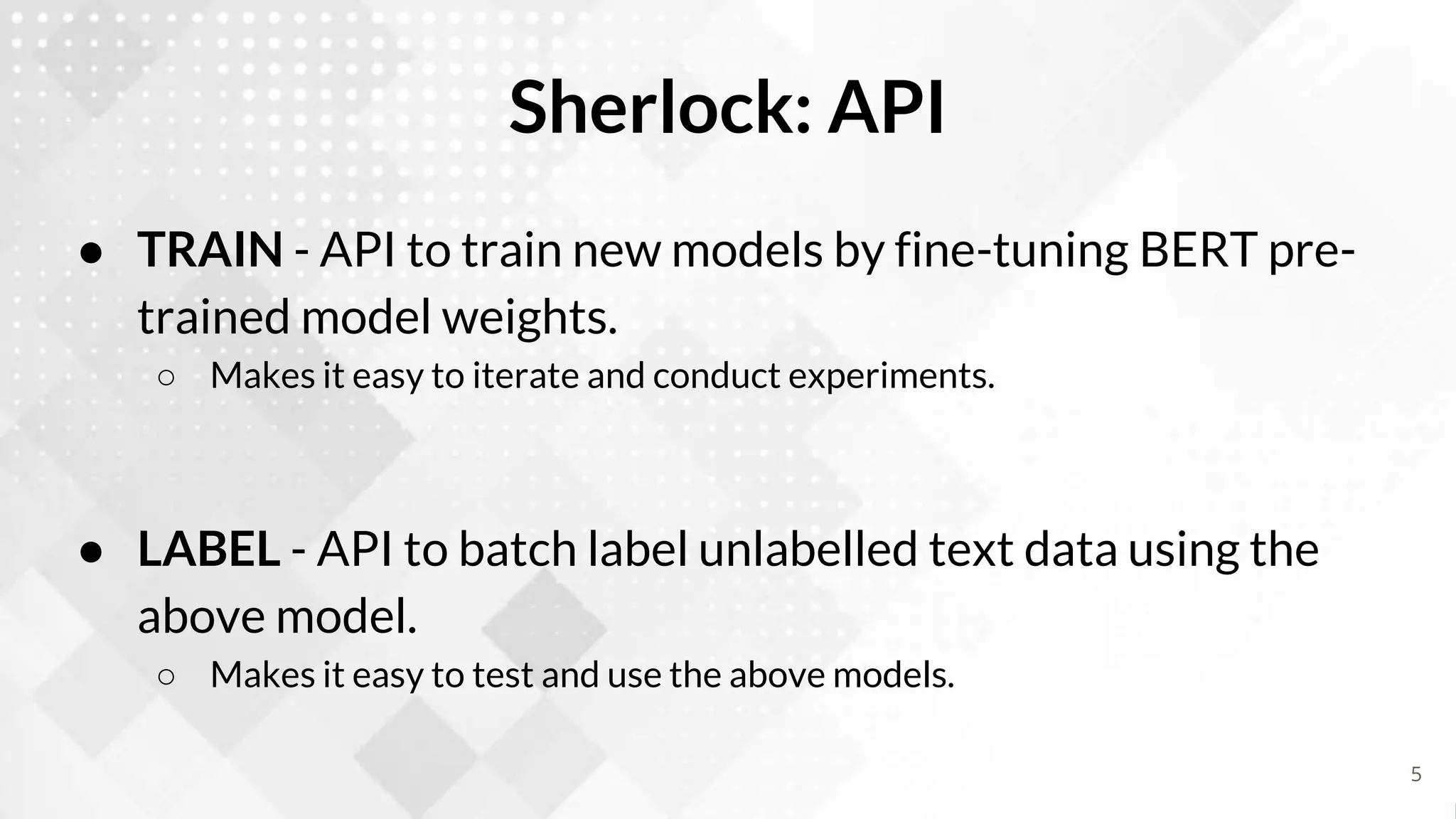

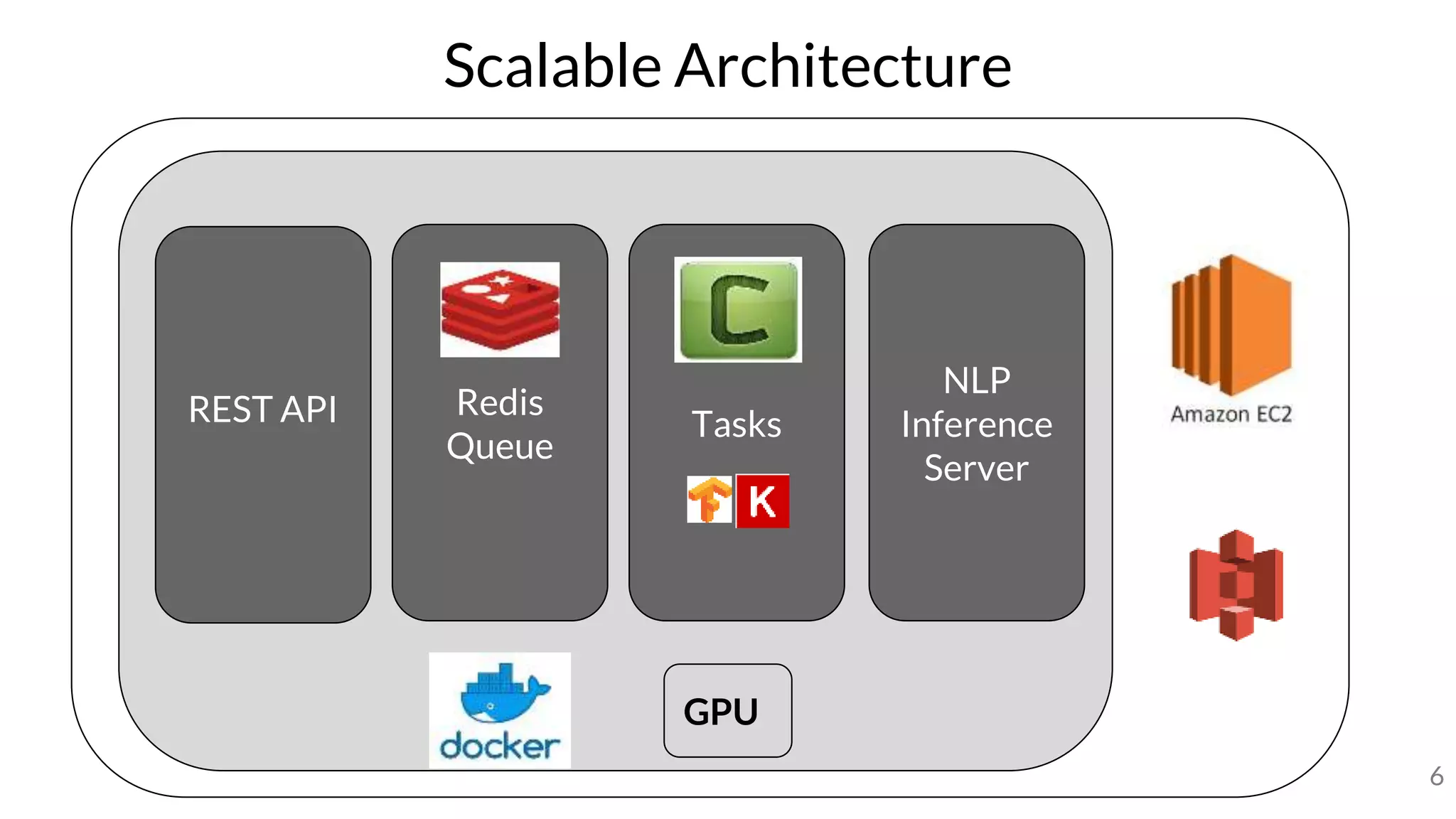

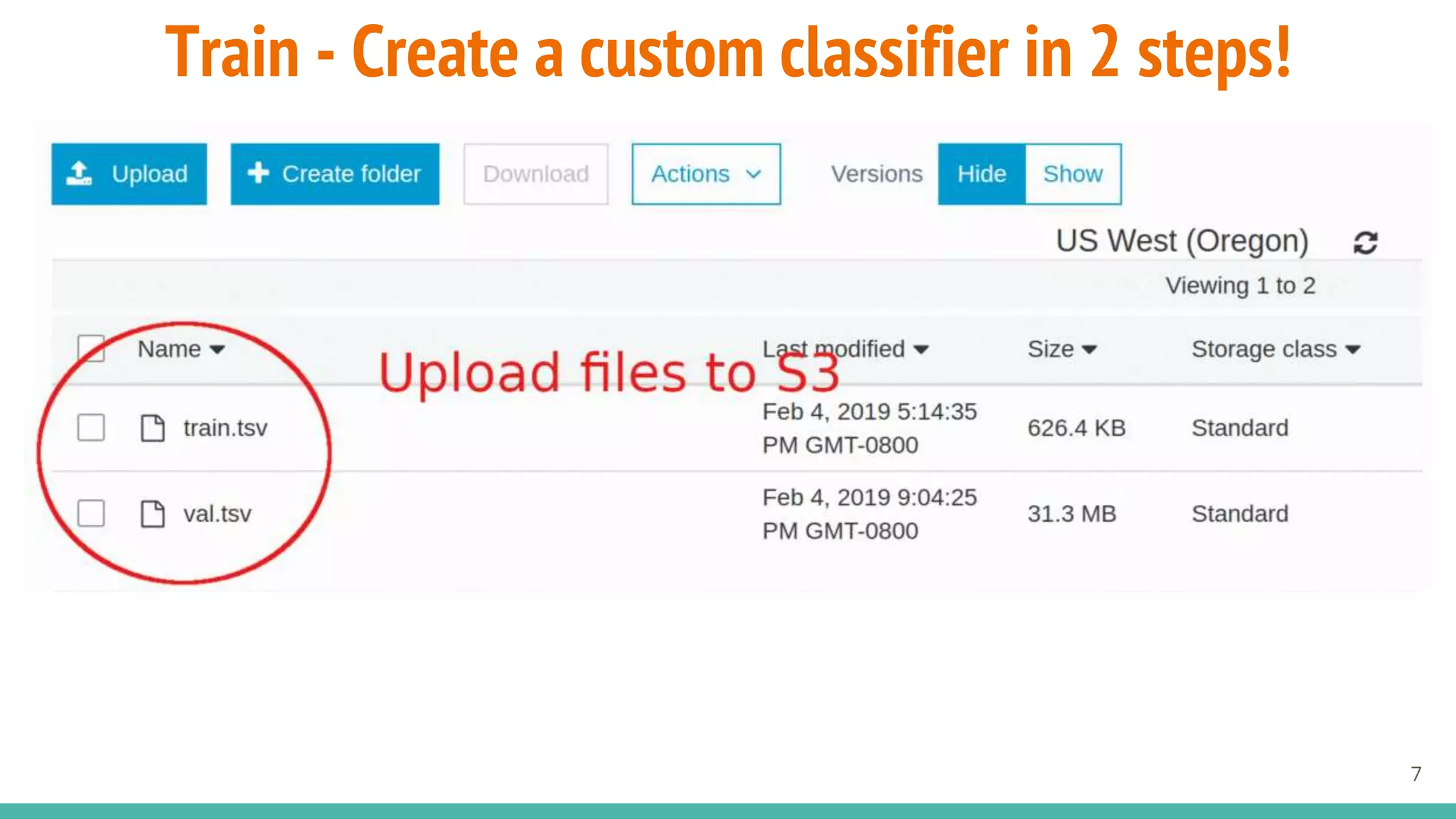

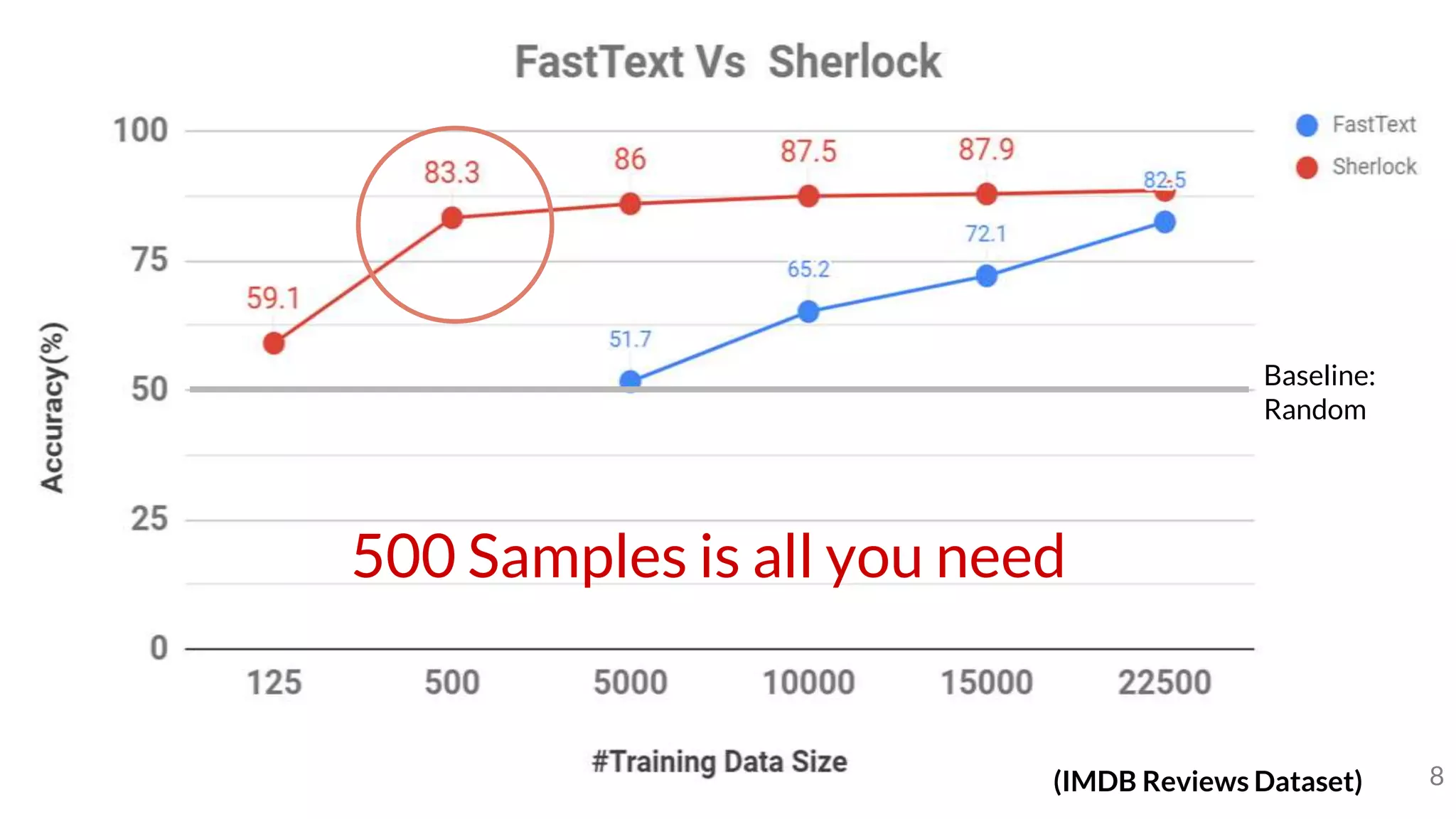

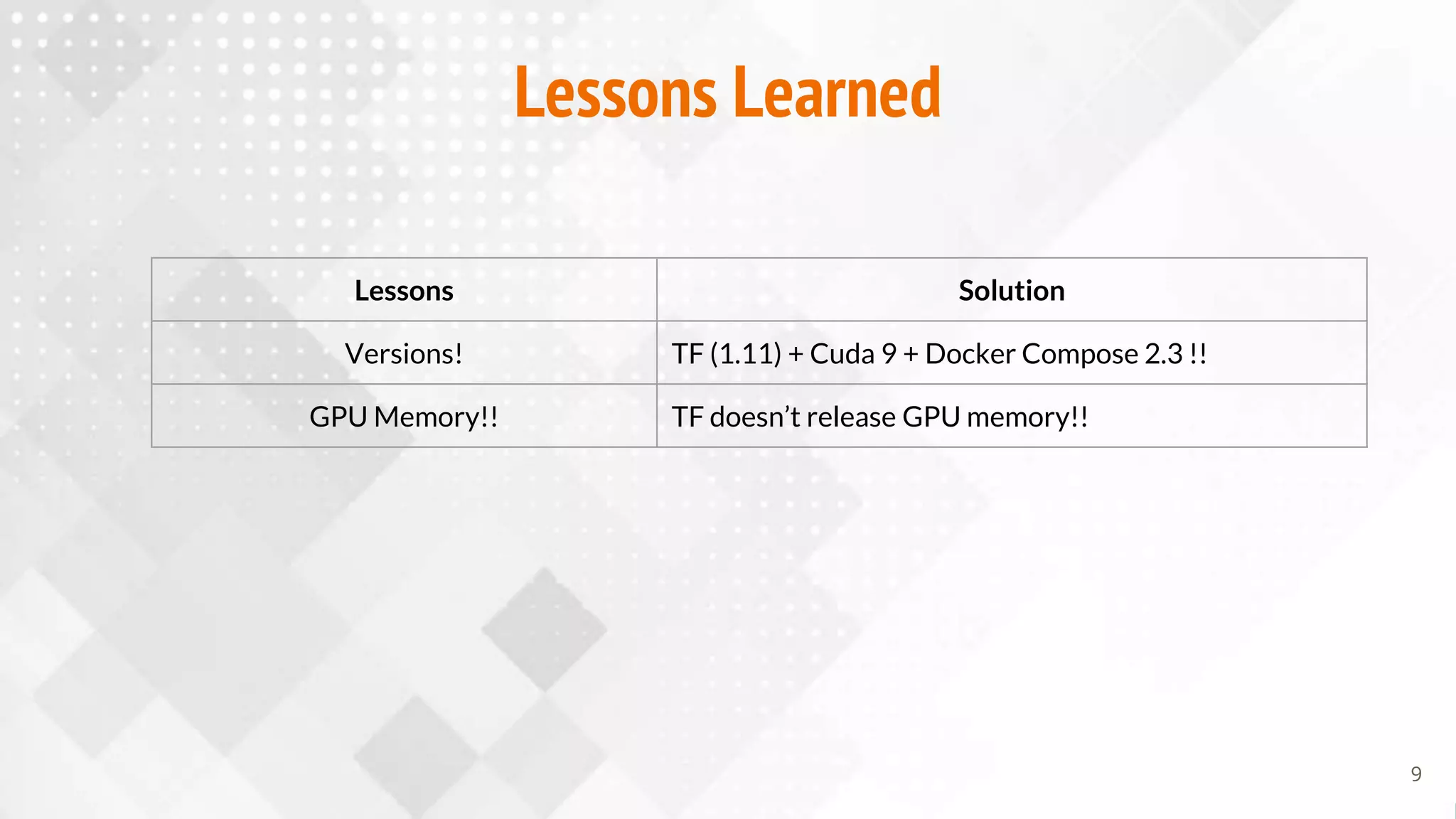

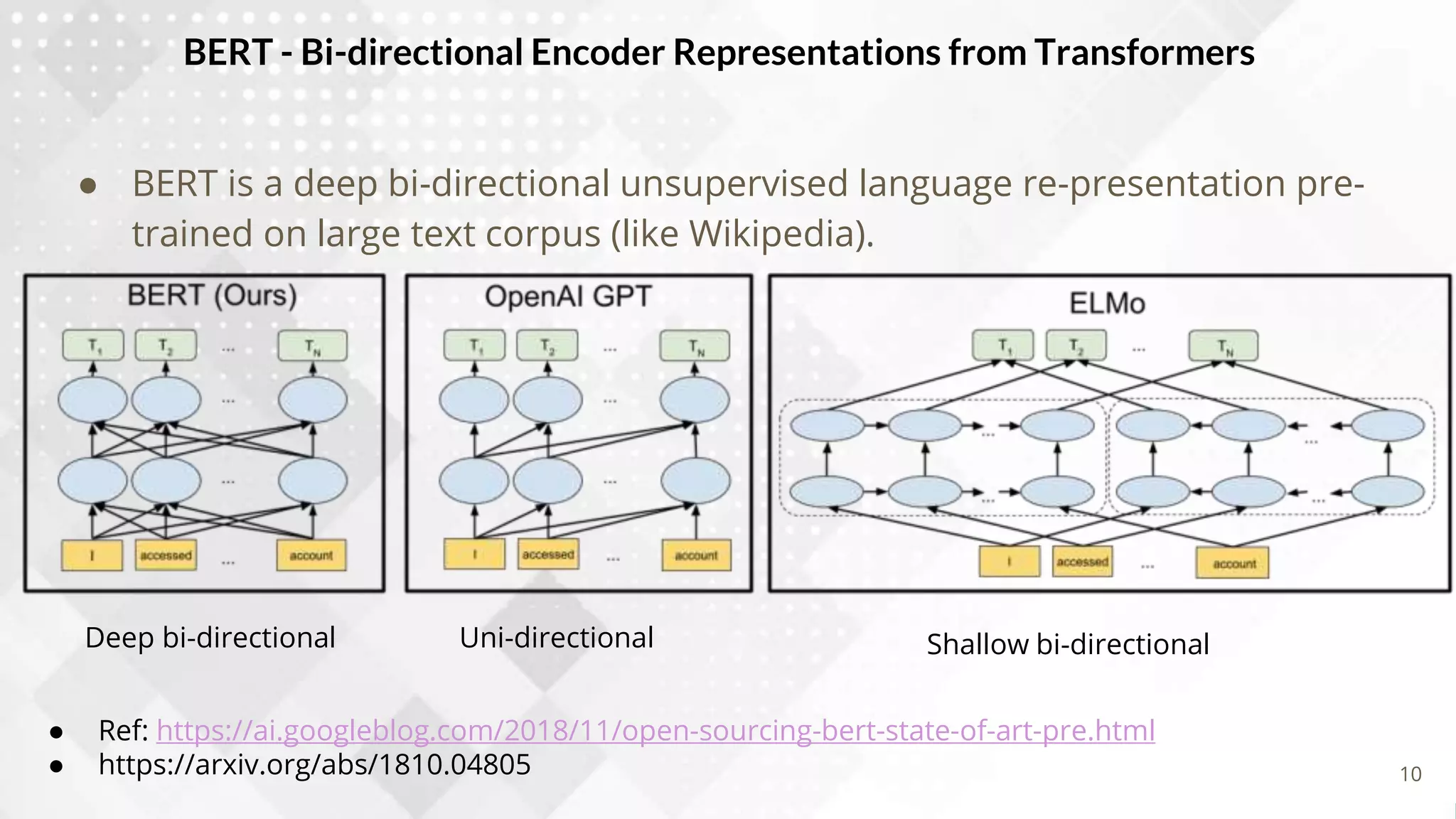

Sherlock is an NLP transfer learning platform that lets users create text classifiers from small datasets. It provides APIs to train models on top of pre-trained BERT language models and to label datasets with the trained models. The platform also addresses common problems of deploying models to production with Docker, TensorFlow, and GPUs. It demonstrates that BERT-based models can reach high accuracy even when trained on small datasets of 500 samples or fewer.
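The two-step workflow described above (train a classifier on a small labeled set, then use it to label new text) can be sketched as follows. This is only an illustration of the workflow shape, not Sherlock's actual API: the class `TinyTextClassifier` and its `train` and `label` methods are hypothetical names, and a simple bag-of-words centroid model stands in for the fine-tuned BERT model so that the example runs with the standard library alone.

```python
import math
import re
from collections import Counter, defaultdict


class TinyTextClassifier:
    """Hypothetical stand-in for a train/label API.

    Assumption: uses bag-of-words centroids instead of BERT, purely so the
    sketch is self-contained. It is not how Sherlock is implemented.
    """

    def __init__(self):
        self.centroids = {}

    @staticmethod
    def _vectorize(text):
        # Lowercase and keep only alphabetic tokens.
        return Counter(re.findall(r"[a-z']+", text.lower()))

    @staticmethod
    def _cosine(a, b):
        dot = sum(count * b[token] for token, count in a.items() if token in b)
        norm_a = math.sqrt(sum(v * v for v in a.values()))
        norm_b = math.sqrt(sum(v * v for v in b.values()))
        return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

    def train(self, samples):
        """Build one summed word-count vector per label from (text, label) pairs."""
        totals = defaultdict(Counter)
        for text, label in samples:
            totals[label].update(self._vectorize(text))
        self.centroids = dict(totals)
        return self

    def label(self, texts):
        """Assign each text the label whose centroid is most similar."""
        results = []
        for text in texts:
            vec = self._vectorize(text)
            best = max(
                self.centroids,
                key=lambda lbl: self._cosine(vec, self.centroids[lbl]),
            )
            results.append(best)
        return results


# Step 1: train on a small labeled dataset.
train_set = [
    ("great product, works well", "positive"),
    ("love it, excellent quality", "positive"),
    ("terrible, broke after a day", "negative"),
    ("awful quality, do not buy", "negative"),
]
model = TinyTextClassifier().train(train_set)

# Step 2: label unseen text with the trained model.
predictions = model.label(["excellent product", "terrible quality"])
print(predictions)
```

In a platform like the one described, the `train` step would presumably fine-tune a pre-trained BERT model rather than count words, which is what allows a few hundred samples to be enough.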