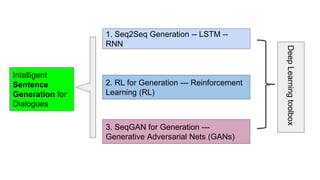

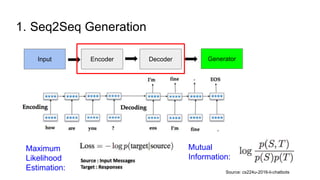

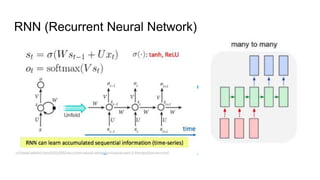

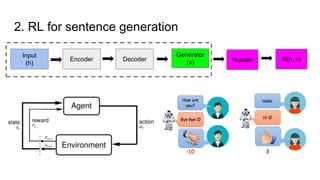

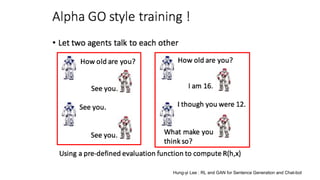

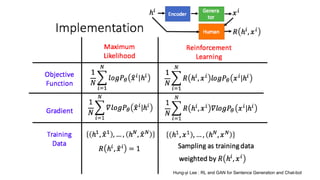

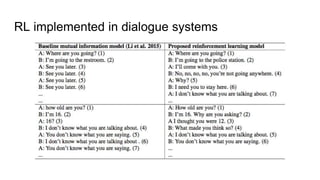

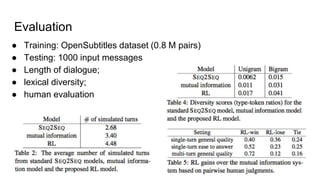

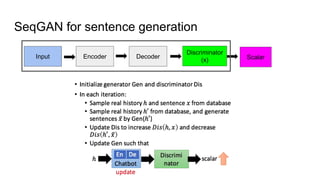

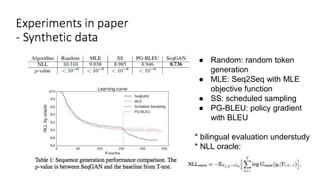

The document surveys deep learning approaches to dialogue systems, including seq2seq generation, reinforcement learning, and SeqGAN. It highlights the limitations of traditional models in generating engaging responses and describes how reinforcement learning improves conversation quality by making responses more informative and coherent. It also shows how SeqGAN uses feedback from a discriminator to guide a generative model, improving performance on both synthetic and real-world data.
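
The idea of guiding a generator with discriminator feedback can be illustrated with a toy policy-gradient (REINFORCE) update. The sketch below is not taken from the slides. It assumes a "generator" that emits a single token from a three-token vocabulary, and `toy_discriminator` is a hypothetical stand-in for a trained discriminator that returns a fixed score per token. It leaves out the sequence model and the per-step reward estimation that a full SeqGAN setup would need.

```python
import math
import random


def softmax(logits):
    """Convert raw logits into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_discriminator(token):
    """Hypothetical reward: pretend token 2 looks 'real', the others do not."""
    return 0.9 if token == 2 else 0.1


def reinforce_step(logits, sampled_token, reward, lr=0.1):
    """One REINFORCE update for a single-token generator.

    The gradient of log softmax(logits)[a] with respect to the logits is
    onehot(a) - probs; scaling it by the reward gives the update direction.
    """
    probs = softmax(logits)
    return [
        logit + lr * reward * ((1.0 if i == sampled_token else 0.0) - p)
        for i, (logit, p) in enumerate(zip(logits, probs))
    ]


# Toy training loop: sample a token, score it, and nudge the generator.
random.seed(0)
logits = [0.0, 0.0, 0.0]
for _ in range(500):
    probs = softmax(logits)
    token = random.choices(range(len(logits)), weights=probs)[0]
    logits = reinforce_step(logits, token, toy_discriminator(token))

print(softmax(logits))
```

Because the reward comes from a scoring function rather than from a differentiable loss on the sampled token, the same update applies when the generator's output is discrete, which is the situation text generation presents.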